Customer-obsessed science

Research areas

-

February 20, 2025: Using large language models to generate training data and updating models through both fine-tuning and reinforcement learning improves the success rate of code generation by 39%.

-

December 24, 2024

Featured news

-

2025: We present the history-aware transformer (HAT), a transformer-based model that uses shoppers’ purchase history to personalise outfit predictions. The aim of this work is to recommend outfits that are internally coherent while matching an individual shopper’s style and taste. To achieve this, we stack two transformer models, one that produces outfit representations and another one that processes the history

-

2025: Contrastive Learning (CL) proves to be effective for learning generalizable user representations in Sequential Recommendation (SR), but it suffers from high computational costs due to its reliance on negative samples. To overcome this limitation, we propose the first Non-Contrastive Learning (NCL) framework for SR, which eliminates the computational overhead of identifying and generating negative samples. However

-

2025: Large Language Models (LLMs), exemplified by Claude and Llama, have exhibited impressive proficiency in tackling a myriad of Natural Language Processing (NLP) tasks. Yet, in pursuit of the ambitious goal of attaining Artificial General Intelligence (AGI), there remains ample room for enhancing LLM capabilities. Chief among these is the pressing need to bolster long-context comprehension. Numerous real-world

-

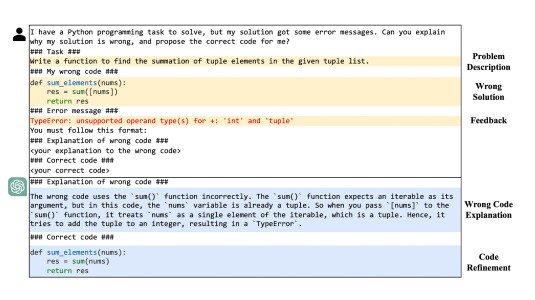

2025: Language models are aligned to the collective voice of many, resulting in generic outputs that do not align with specific users’ styles. In this work, we present Trial-Error-Explain In-Context Learning (TICL), a tuning-free method that personalizes language models for text generation tasks with fewer than 10 examples per user. TICL iteratively expands an in-context learning prompt via a trial-error-explain

-

2025: Due to the scarcity of agent-oriented pre-training data, LLM-based autonomous agents typically rely on complex prompting or extensive fine-tuning, which often fails to introduce new capabilities while preserving strong generalizability. We introduce Hephaestus-Forge, the first large-scale pre-training corpus designed to enhance the fundamental capabilities of LLM agents in API function calling, intrinsic

Academia

Whether you're a faculty member or student, there are a number of ways you can engage with Amazon.
