Joan Feigenbaum is an Amazon Scholar and the Grace Murray Hopper professor of computer science at Yale. In this article, Feigenbaum talks about secure multiparty computation (MPC) and privacy-preserving machine learning (PPML) – two cryptographic techniques that are being used to address cloud-computing privacy concerns and accelerate enterprise cloud adoption.

Joan Feigenbaum, Amazon Scholar According to a 2019 report released by Cybersecurity Insiders, security risks—including the loss or leakage of information—are leading factors that discourage enterprises and government organizations from adopting cloud-computing technologies. As organizations accelerate the flow of sensitive consumer information to the cloud in order to take advantage of its massive compute power, the research area of cryptographic computing is growing in importance.

At its essence, cryptographic computing focuses on the design and implementation of protocols for using information without revealing it. For example, a county government looking to prioritize the rollout of services based on different areas’ demographics could calculate the average age of residents in different zip codes without running the risk of revealing (indeed without even learning) the ages of individual residents.

Cryptographic computing is not a new field. In fact, Gentry’s breakthrough scheme for fully homomorphic encryption (FHE) was published as far back as 2008.

In one of its extensively studied forms, FHE gives each user a public key and a corresponding private key. A user can encrypt any input data set using the public key, give the encrypted input to another party (say a cloud-computing service) that performs computations on it, and then decrypt the results of those computations with her secret key. By ensuring that all data are operated on only in an encrypted state, FHE ensures that data uploaded to the cloud remain confidential. Unfortunately, FHE is not yet fast enough for use on very large-scale data sets.

That said, there are more narrowly tailored cryptographic-computing techniques that scale better and have started to see commercial use.

- Secure multi-party computation (MPC)

Secure multi-party computation (MPC) enables n parties P1,...,Pn, with private inputs x1,...,xn, to compute y = f(x1,...,xn) in such a way that all parties learn y but no Pi learns anything about xj, for j≠i, except what is logically implied by y and xi.

Consider the following toy example. Suppose 20 pupils, whom we will call P1 through P20, are in the same class and have received their graded exams from their teacher. They want to compute the average of their grades without revealing their individual grades, which we will denote by g1 through g20. They can use the following simple MPC protocol. P1 chooses a random number r, computes x1 = g1 + r, and sends x1 to P2. Then P2 computes x2 = x1 + g2 and sends x2 to P3. They continue in this fashion until P20 computes x20 = x19 + g20 and sends x20 to P1. In the last step, P1 computes x20 – r, which is of course the sum g1 + g2 + … + g20 of the individual grades. He divides this sum by 20 to obtain the average and broadcasts the result to all of the pupils.

If all of the pupils follow this protocol faithfully, then they all learn the average, but none learns anything about the others’ grades except what is logically implied by the average and his own grade. Here, “following the protocol faithfully” requires not colluding with another pupil to discover someone else’s grade. If, say, P3 and P5 executed all of the steps of the protocol correctly but also got together on the side to pool their information, they could compute P4’s grade g4. That is because g4 = x4 – x3, and, during the execution of the protocol, P3 learns x3 and P5 learns x4. Fortunately, there are techniques (the details of which are beyond the scope of this article) for ensuring that this type of collusion does not reveal private inputs; they include secret-sharing schemes, described below.

One powerful class of MPC protocols proceeds in multiple rounds. In the first round, each Pi breaks xi into shares, using a secret-sharing scheme, and sends one share to each Pj. The information-theoretic properties of secret sharing guarantee that no other party (or even limited-sized coalition of other parties) can compute xi from the share(s). The parties then execute a multi-round protocol to compute shares of y, in which the shares of intermediate results computed in each round also do not reveal xi. In the last round, the parties broadcast their shares of y so that all of them can reconstruct the result.

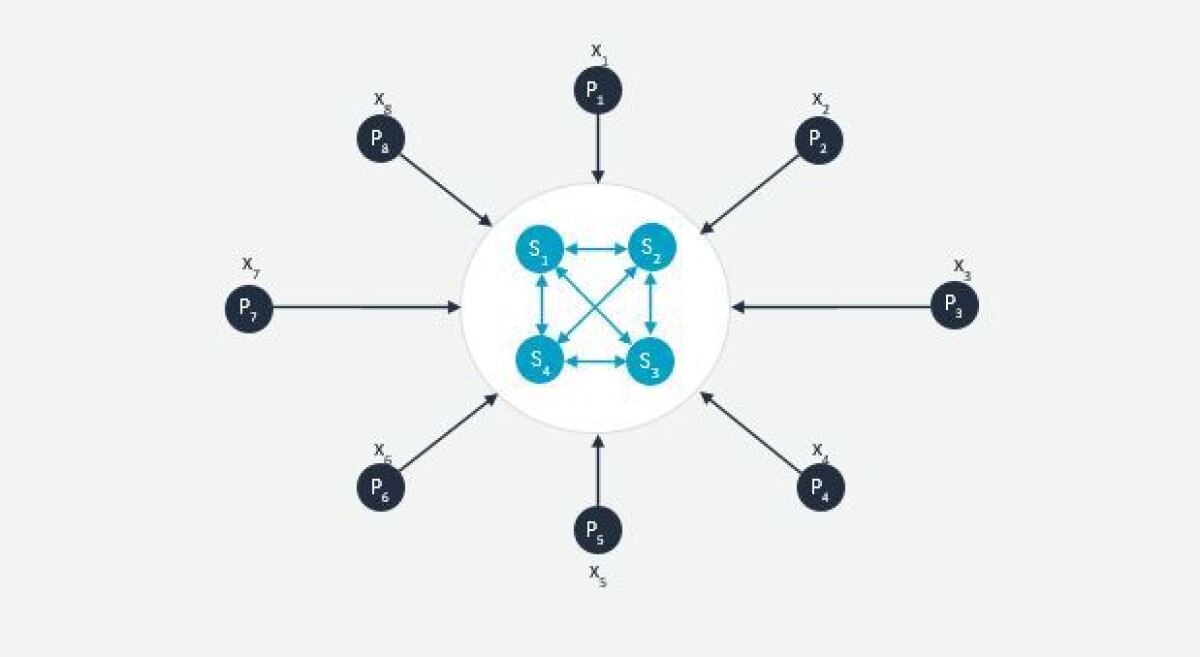

In the secure-outsourcing protocol architecture, depicted below, the parties P1,...,Pn play the role of input providers and a disjoint, much smaller set of parties S1,...,Sk play the role of secure-computation servers; typically, 2 ≤ k ≤ 4. The input providers share their inputs with the servers, which then execute a basic, k-party MPC protocol to compute y. For an appropriate choice of secret-sharing scheme, the inputs remain private as long as at least one server does not collude with the others. Note that cloud-computing companies are ideally positioned to supply secure computation servers!

The Secure-Outsourcing Architecture with n=8 and k=4 Image credit: Joan Feigenbaum - Privacy-preserving machine learning (PPML)

An ML training algorithm is given a set of solved instances of a classification problem and produces a model to be used by an ML prediction algorithm to classify future, as-yet-unsolved instances of the same problem.

Training data, queries (inputs to the prediction algorithm), and predictions (outputs of the prediction algorithm) may contain sensitive information about data subjects. Owners of commercially valuable models regard them as intellectual property and may wish to sell access to them but not permit users to reverse-engineer them. Privacy-preserving machine learning (PPML) is the subarea of cryptographic computing that studies algorithms that protect training data, models, queries, and predictions.

Practical PPML methods are often tailored for specific training or prediction algorithms and may require specific computational architectures. The cloud provider can employ both traditional computer-security techniques (authentication, sandboxing, etc.) and PPML algorithms to protect both sensitive data and intellectual property. For example, the 2019 PPML annual workshop focused on MPC, FHE, and other techniques outlined in this article. In addition, the workshop featured recent results on differential privacy, a powerful data-protection approach that has gained a lot of attention in recent years. Differential privacy enables users to obtain aggregate information from a database while protecting confidential information about individual records in the database. Indeed, the result of a differentially private statistical query is not significantly affected by the presence or absence of any particular individual record.

Image credit: Joan Feigenbaum and Xianrui MengSecure, multi-party computation and privacy-preserving machine learning are only two cryptographic-computing techniques that are candidates for widespread practical deployment. Other techniques include searchable encryption, which enables keyword search on encrypted documents, garbled-circuit protocols, which are a form of secure, two-party computation, and protocols for queries to encrypted databases.

I’m personally excited to see these innovations in cryptographic computing, which will be critical to easing contractual and regulatory barriers to adoption of cloud computing and could herald an era of even stronger growth for the industry. Cryptographic computing will allow individuals around the globe to reap the benefits of cloud computing, such as personalized medicine, movie streaming, and smarter financial-management solutions, while ensuring that our personal information stays private and secure.

More information on Amazon's approach to cryptographic computing and the company's research in this areas is available here.

Cryptographic computing can accelerate the adoption of cloud computing

Amazon Scholar Joan Feigenbaum talks about two cryptographic techniques that are being used to address cloud-computing privacy concerns and accelerate enterprise cloud adoption.

Research areas