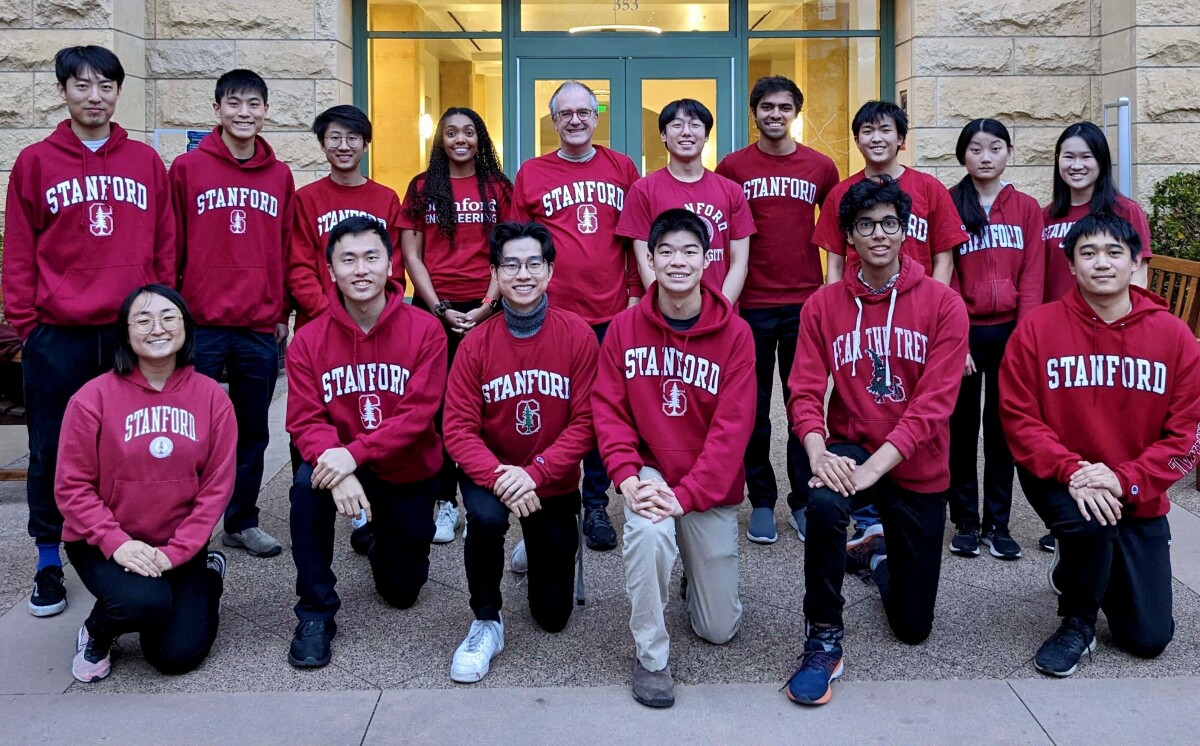

Stanford's Team Chirpy Cardinal is returning to the competition for the third time.

Our goal is to advance the state of open-domain dialogue by blending modern NLP techniques with empathetic, engaging responses. Our team members bring a diverse range of backgrounds and experiences, and we hope our bot will provide a great experience for customers, one that makes them feel understood and cared for. We are advised by Prof. Christopher D. Manning.

Ryan A. Chi - Team Leader

Chi is a master's student at Stanford working on understanding large language models. Previously, he was the lead contributor for Stanford CRFM's bias investigation team, and was at NVIDIA AI working on NMT systems. This year, he's leading Stanford's Chirpy Cardinal Alexa Prize team. In addition, he has served as the president of Stanford ACM and a coach for the USA Linguistics Olympiad team.

Virginia Adams

Adams is a first-year computer science master's student, concentrating in AI. She has a background in NLP and worked as an applied research scientist on the conversational AI team at NVIDIA for two years before starting her master's at Stanford.

Thanawan Atchariyachanvanit

Atchariyachanvanit is a sophomore at Stanford University studying computer science. She is an international student from Thailand. Previously, she was an undergraduate research assistant at the Stanford NLP Group during the summer.

Steven Cao

Cao is a second-year PhD student at Stanford, researching natural language processing, machine learning, and theory. In the past, he has worked on syntactic parsing, grammar induction, and multilingual modeling. Cao is currently supported by the NSF GRFP.

Gordon Chi

Chi is a master's student at Stanford working on building virtual assistants and leveraging large language models. He is a current mentor of the Stanford Undergraduate Research in CS program, and is a former president of Stanford ACM and a former International Linguistics Olympiad contestant. He is passionate about applying game-playing learning approaches in real-world domains in NLP and healthcare.

Nathan A. Chi

Chi is a sophomore at Stanford, studying computer science. He is interested in AI and its applications to healthcare. In addition to his work with the Stanford AI Lab, he is a section leader for Stanford’s introductory CS course and serves as the current president of Stanford ACM. He researches chatbots and biomedical AI at Stanford, Yale, and Harvard.

Gary Dai

Dai is an Aeronautical and Astronautical Engineering master's student concentrating in ML, robotics, and control. He interned at Meta, working on recommendation systems and language model knowledge distillation.

Scott Hickmann

Scott is a sophomore studying Computer Science at Stanford University. He is a full-stack developer who has worked on computer vision and NLP projects in industry. Scott also teaches a web development class for Stanford ACM. He is looking forward to delving deeper into scalable NLP solutions to improve real-world customer-facing interactions.

Jeremy Kim - Co-Team Leader

Kim is a junior at Stanford University studying mathematics, originally from New York City. Kim loves brainstorming solutions to problems with other people, and is eager to pursue his passions through the Alexa Prize contest.

Siyan Li

Li is a second-year master's student in computer science at Stanford University. Her current research focus is on dialogue systems, both in speech and text. She has been an intern at Amazon Robotics, and has conducted research at Carnegie Mellon, the University of Southern California, and the IT University of Copenhagen. Li will be applying to PhD programs this year, and she is passionate about applying dialogue systems in healthcare contexts, specifically in mental health.

Kristie Park

Park is a junior at Stanford double majoring in Biomechanical Engineering and Sociology with a minor in Music. She is interested in the intersection between graphic design and empathetic communication.

Shashank Rammoorthy

Rammoorthy is a master's student in Computer Science, interested in systems and ML, and is especially curious about what makes systems scale well. Previously, he did self-supervised learning research at the Stanford CoCo Lab.

Parth Sarthi

Sarthi is a sophomore at Stanford studying Computer Science. He has an interest in Retrieval Augmented Generation and reducing hallucinations in Large Language Models. Additionally, Sarthi is a part of the Contextual AI research team.

Ji Hun Wang

Wang is a junior at Stanford, majoring in Computer Science and Linguistics. His research explores the explainability of Large Language Models, multimodal deep learning, and the use of deep learning to study formal aspects of natural languages. He is currently a member of the Stanford ML Group.

Brian Y. Xu

Xu is an undergraduate studying mathematics and statistics who is interested in analyzing human behavior and answering social questions through the lens of robust mathematical modeling. He previously interned with the CS Theory Group.

Brian Z. Xu

Xu is a sophomore at Stanford studying Mathematical and Computational Science. He is also a research engineer with the Stanford Social Neuroscience Lab. Xu is interested in exploring creative uses of natural language processing for problem-solving.

Katherine Yu

Yu is a junior at Stanford University studying computer science and mathematics. She has done research with the Stanford Theory Group on sampling algorithms, and is interested in computer systems and user-facing software.

Christopher Manning - Faculty advisor

Manning is the inaugural Thomas M. Siebel Professor in Machine Learning in the Departments of Linguistics and Computer Science at Stanford University, Director of the Stanford Artificial Intelligence Laboratory (SAIL), and an Associate Director of the Stanford Human-Centered Artificial Intelligence Institute (HAI). His research goal is computers that can intelligently process, understand, and generate human language material. Manning is a leader in applying Deep Learning to Natural Language Processing, with well-known research on the GloVe model of word vectors, question answering, tree-recursive neural networks, machine reasoning, neural network dependency parsing, neural machine translation, sentiment analysis, and deep language understanding.