As one of eight university teams tasked with creating a conversational AI system for Amazon's Alexa, we are building a fully functioning socialbot that you can hold a real conversation with.

Faculty advisor: David Wingate

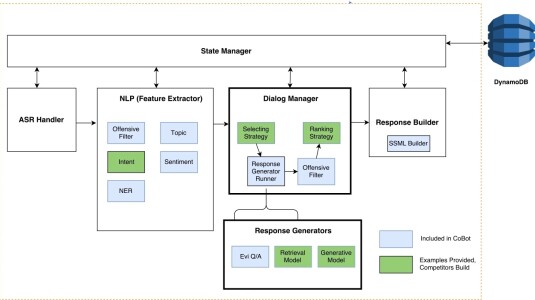

EVE stands for Emotive Adversarial Ensembles, a combination of machine learning terms that describe her overall structure. Eve attempts to express and interpret emotion using a group of response generators, called an ensemble, that help her decide what to say. The word "adversarial" does not describe her personality; rather, it refers to the way her component neural networks will be trained.

Nancy F. - Team leader

Nancy is a PhD student with an emphasis in knowledge representation, automated decision-making, and cognitive systems. Born and raised in Livermore, California, she has also lived in Utah and Germany, where she worked on solar yield calculation software for photovoltaic systems. Her research on common-sense knowledge extraction was recently featured on Live Science, ACM TechNews, and TechCrunch.

In addition to work published at IJCAI, NIPS, ICRA, and other peer-reviewed conferences, Nancy enjoys writing science fiction and has written commissioned pieces for Tor Books, MIT's Technology Review, and the Dark Expanse online strategy game. Her novelette "That Undiscovered Country" received the Jim Baen Memorial Award, which was jointly created by Baen Books and the National Space Society to honor the role played by science fiction in advancing real-world science.

Andrew C.

I am a computational mathematician who specializes in machine learning. I love answering tough questions with data. I have a particular interest in high-performance computing, natural language processing, and intelligent visualizations. I have experience applying deep learning to socially beneficial projects (e.g., hearing aids and medical imaging).

Ben M.

Ben is a student and research scientist with a passion for teaching others and sharing his skills. He founded three robotics clubs, was a member of the team that won the IEEE CIG 2016 & 2017 Artificial Text Adventurer Competition, and served as a Flight Director at the Christa McAuliffe Space Education Center. In 2017 he published papers at NIPS and IJCAI on "Affordance Extraction via Word Embeddings" and "Informing Action Primitives Through Free-Form Text." His current work in the Perception, Control, and Cognition Laboratory at Brigham Young University focuses on emergent communication protocols for the acquisition of symbol groundings.

Daniel R.

Daniel is a master's student at BYU with a gnawing urge to perform linguistic gymnastics. Last year he worked with a group building a system that generates poetry using word embeddings. He was also an integral part of the team that won the 2016 and 2017 IEEE CIG Text-based Adventure AI competition, and he has both studied and implemented Word2Vec, skip-thought vectors, RNNs, and GANs. Daniel is an avid hiker and a collector of fantasy books and vinyl records.

Tyler E.

Tyler has been conducting deep learning research since 2015. During this time, while working at the Perception, Control, and Cognition Lab at BYU, he has proven his ability to read, understand, and reproduce cutting-edge research papers. His research includes innovative experiments in depth map processing. He has also gained practical experience through multiple software engineering internships at Microsoft and through work as a research scientist in deep learning and computer vision at Loveland Innovations, an insurance drone company. He is currently working on modeling cognition for NLP using probabilistic programming. He is ambitious and motivated to build models that will help solve real-world problems.

William M.

William is a CS master's student and research scientist at BYU. His research focuses on the intersection of computer systems and machine learning. His work experience includes building and maintaining fault-tolerant, massively parallel systems for BYU Supercomputing and, for the past two years, building a distributed speech-to-text pipeline and a speech/text analytics engine at an AI-centric startup.

Zachary B.

Zachary is an undergraduate studying Applied Computational Mathematics with an emphasis in Computer Science. He is currently employed by the Perception, Control, and Cognition Lab (PCCL) at Brigham Young University and recently co-authored a paper published at the Conference on Robot Learning (CoRL) 2017. The paper introduces an algorithm for improving an autonomous agent's accuracy on analogical reasoning tasks using natural language word embeddings. Going forward, Zachary is interested in using unsupervised learning methods to reduce the amount of training data that machine learning algorithms require, as well as in research on emergent communication protocols.

David Wingate - Faculty advisor

David Wingate is an Assistant Professor at Brigham Young University and the faculty administrator of the Perception, Control and Cognition laboratory. His research interests lie at the intersection of perception, control and learning. Specific interests include probabilistic programming, probabilistic modeling (particularly with structured Bayesian nonparametrics), reinforcement learning, dynamical systems modeling, information