Last week, the Royal Swedish Academy of Sciences announced that John Clauser, Alain Aspect, and Anton Zeilinger had won the Nobel Prize in physics “for experiments with entangled photons, establishing the violation of Bell inequalities, and pioneering quantum information science”.

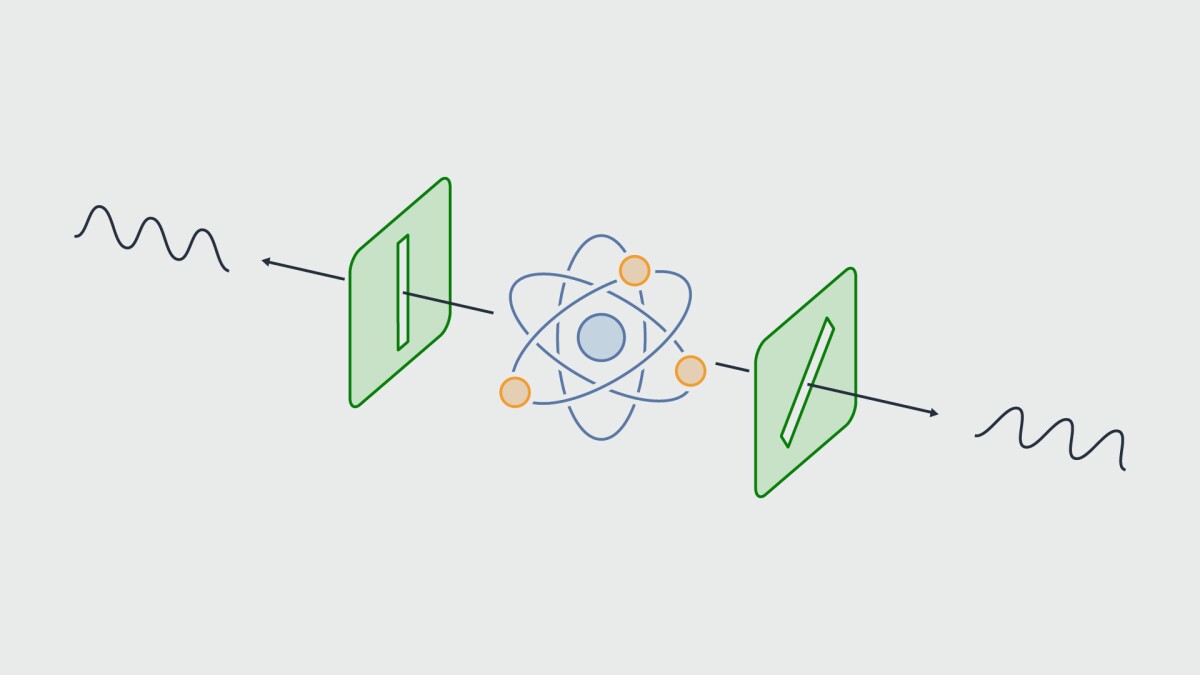

When two quantum subsystems — particles, atoms, or molecules — are “entangled”, then measurements performed on one are correlated with measurements performed on the other. The experimental setup that Clauser, Aspect, and Zeilinger investigated — in which entangled quantum subsystems have been widely separated — is closely related to those used in modern quantum networks.

The first application of quantum networking is likely to be quantum key distribution, in which two parties exchange private cryptographic keys and use quantum entanglement to ensure that their communication channel hasn’t been compromised.

On the occasion of the Royal Swedish Academy of Sciences’ announcement, Amazon Science asked Antia Lamas-Linares, who leads Amazon Web Services’ Center for Quantum Networking, three questions about Clauser, Aspect, and Zeilinger’s work and its implications for her own field.

- Q.

One of the things that Clauser and Aspect did to win the Nobel Prize was a set of experiments that challenged the “hidden-variable hypothesis”. What is the hidden-variable hypothesis, and what were their experiments?

A. In the early days of quantum mechanics, people started looking at this theory that was really successful at doing things like predicting the spectra coming out of atoms. When they started looking at the implications for how we understand physical theories, there was this epic battle that went on for years between Einstein and Bohr, two giants of early 20th-century physics.

The problem was that as you look deeply into the theory, you realize that there is, for example, inherent randomness. You can prepare a couple of systems in identical ways, and you measure something about the system, and the outcomes are different. And they are different for very fundamental reasons. It's not just, oh, we just didn't have enough information. No, even with perfect information, the outcomes of identical measurements can be different. This was not compatible with how physical theories were supposed to work.

It became a sort of hobby of Einstein’s to come up with little paradoxes based on these things that challenged the way we understand physical theories, from a very foundational point of view. In quantum mechanics, you can formally write something that we call an entangled state, and this is a state that involves more than one particle or more than one subsystem. It describes correlations between parts of that system.

Einstein’s point was, if I take the two subsystems and separate them very far apart, then I can measure something on one and know the outcome on the other one instantaneously. That looks like faster-than-light communication, which is not compatible with relativity. His conclusion was that there must be an underlying theory that “explained” the quantum-mechanical correlations and the apparent randomness of measurement outcomes.

Essentially, the results look random because there are these hidden variables that we don't know about. But if we knew their values, the results would be predictable. These theories became known as “hidden variable” theories.

It wasn't until the ’60s that John Bell wrote a beautiful, very simple theorem where he said, let's assume that there is some underlying information, some underlying physical theory that works in this general way. Is there a set of measurements whose outcomes would differ from what the quantum-mechanical model predicts?

This is called the Bell inequality. It says, if you measure a particular sequence of correlations and you get a value below a certain threshold, then there could be an underlying hidden-variable theory of quantum mechanics. But if the value is above this threshold, then quantum mechanics cannot be explained by hidden variables. At this point, no matter how uncomfortable we are with the implications of quantum mechanics, we don’t get to pretend there is an underlying theory that will explain it all away.

It really changes our perception of the nature of reality. The randomness in measurement results is fundamental to nature. It's not just an accident from the lack of information about the hidden variables.
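The threshold idea can be made concrete with a minimal sketch. It assumes the CHSH form of the Bell inequality (one common version, not named in the interview) and polarization-entangled photons whose correlation at analyzer angles α and β is cos 2(α − β). The function names and the choice of angles are illustrative only.

```python
import itertools
import math


def chsh(E, a, a2, b, b2):
    """CHSH combination of four correlations for settings a, a2 (side A) and b, b2 (side B)."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


def quantum_correlation(alpha, beta):
    """Assumed quantum prediction for polarization-entangled photons (angles in radians)."""
    return math.cos(2 * (alpha - beta))


def max_local_hidden_variable_chsh():
    """Largest |S| when every outcome (+1 or -1) is fixed in advance by a hidden variable.

    Any probabilistic hidden-variable model is a mixture of these 16
    deterministic assignments, so it cannot exceed this maximum either.
    """
    best = 0
    for A, A2, B, B2 in itertools.product((-1, 1), repeat=4):
        s = A * B - A * B2 + A2 * B + A2 * B2
        best = max(best, abs(s))
    return best


if __name__ == "__main__":
    deg = math.pi / 180
    s_quantum = chsh(quantum_correlation, 0 * deg, 45 * deg, 22.5 * deg, 67.5 * deg)
    print("hidden-variable bound:", max_local_hidden_variable_chsh())
    print("quantum prediction:   ", round(s_quantum, 3))
```

Under these assumptions the hidden-variable bound is 2, while the quantum prediction at the chosen angles is 2√2, about 2.83. A measured value above 2 is what rules out the hidden-variable explanation.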

Eventually, people said, well, okay, let's test these things. For that, you need to make entangled particles, and you need to measure them in the way prescribed by Bell’s theorem. That's essentially what John Clauser and Alain Aspect did during the ’70s and early ’80s, with increasing levels of sophistication. Anton Zeilinger wasn't part of those Bell inequality measurements, but he used entanglement in a multitude of ground-breaking experiments, such as quantum teleportation, entanglement swapping, and the generation of tripartite entangled states.

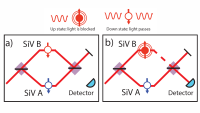

In his original experiment, John Clauser used irradiated calcium atoms to emit pairs of entangled photons and polarizing filters to measure correlations between their polarizations. Adapted from a figure by Johan Jarnestad for the Royal Swedish Academy of Sciences.

- Q.

So what does this all have to do with quantum networking?

A. With quantum networking, the two sides start by sharing entangled particles. The idea is that the outcomes on both sides are perfectly correlated, but if a malicious party tries to measure in between, the correlations are broken, and this is detectable by the legitimate parties. It's related to the fact that quantum mechanics has properties like the no-cloning theorem that ensure that unknown quantum states cannot be perfectly copied. So you cannot just take any particle that is coming by and make a copy, because this inevitably alters the original state.

So the two sides take a subset of the results, and using classical communication, they say, Hey, let's check that nobody has interfered with our measurements. What did you get for the first one? How about number 3,047? And they can check that the correlations are as expected from quantum theory. They sacrifice maybe 10% of their data to check this, and if the correlations hold, they can be sure that nobody has otherwise tampered with the entangled particles.

Now to be clear, they're not sharing a message. They're sharing correlated random numbers, obtained from measurements on the entangled pair of particles. Correlated random numbers are exactly what you need for a symmetric cryptographic key. When you use a key as part of a cryptographic system, you need two parties to share a secret. It doesn't matter what the secret is, as long as it is not predictable. In this way quantum mechanics gives us the two requirements for a cryptographic key: randomness and privacy.
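The checking step described above can be sketched as a toy classical simulation. It is not a quantum simulation: the entangled pairs are replaced by a shared random bit, and tampering is modeled, as a simplifying assumption, by turning the second party's bit into an independent coin flip. The function names, the 2% error threshold, and the tamper model are all hypothetical; only the roughly 10% check fraction comes from the interview.

```python
import random


def share_raw_bits(n_pairs, tamper_prob=0.0, rng=None):
    """Toy stand-in for measuring n_pairs entangled pairs.

    Untouched pairs give both sides the same random bit. With probability
    tamper_prob a pair is disturbed and the second side's bit becomes an
    independent coin flip (assumed disturbance model).
    """
    if rng is None:
        rng = random.Random()
    alice, bob = [], []
    for _ in range(n_pairs):
        bit = rng.randrange(2)
        alice.append(bit)
        bob.append(rng.randrange(2) if rng.random() < tamper_prob else bit)
    return alice, bob


def check_and_extract_key(alice, bob, check_fraction=0.10,
                          max_error_rate=0.02, rng=None):
    """Sacrifice a random subset of results to test the correlations.

    Returns (key, error_rate). The key is None when the observed error
    rate on the sacrificed subset exceeds max_error_rate.
    """
    if rng is None:
        rng = random.Random()
    n = len(alice)
    n_check = max(1, int(n * check_fraction))
    check_idx = set(rng.sample(range(n), n_check))  # positions compared in public
    mismatches = sum(alice[i] != bob[i] for i in check_idx)
    error_rate = mismatches / n_check
    if error_rate > max_error_rate:
        return None, error_rate
    # The compared positions are now public, so only the rest forms the key.
    key = [alice[i] for i in range(n) if i not in check_idx]
    return key, error_rate


if __name__ == "__main__":
    rng = random.Random(7)
    for tamper in (0.0, 0.5):
        a, b = share_raw_bits(1000, tamper_prob=tamper, rng=rng)
        key, err = check_and_extract_key(a, b, rng=rng)
        status = "aborted" if key is None else f"{len(key)} key bits kept"
        print(f"tamper_prob={tamper}: error rate {err:.2f}, {status}")
```

In this sketch the compared positions are discarded because they were revealed over the classical channel. Real systems add further steps, such as error correction and privacy amplification, that are left out here.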

- Q.

It’s been 50 years since Clauser’s original experiment. How has our thinking about these questions changed in the interim?

A. What’s exciting about this is that entanglement has made this progression from being an uncomfortable property of quantum systems (and a philosophical question) to something that constitutes the basis of quantum technologies. We no longer consider entanglement an uncomfortable consequence of quantum mechanics. It is now a resource.

Alain Aspect has said that when he was doing his PhD, he was repeatedly discouraged from pursuing these experiments because working on the foundations of physics was a career killer. This is not what you do if you want to be successful.

My own PhD centered on building entanglement sources, which is a very engineering-focused task. I needed to engineer a system (in my case, a nonlinear crystal) to give me higher-quality entangled photon pairs. I wasn’t trying to answer philosophical questions regarding entanglement.

You could consider large parts of what Oskar [Painter, head of quantum hardware at Amazon Web Services] does and what I do as “entanglement engineering”. Our groups in AWS do quantum engineering, and entanglement is a vital resource that we produce, channel, and measure. That is a pretty radical evolution from where things stood when John, Alain, and Anton did their groundbreaking work.