In recent years, the fields of natural-language processing and computational linguistics, which deep learning revolutionized a decade ago, have been revolutionized again by large language models (LLMs). Unsurprisingly, work involving LLMs, either as subjects of inquiry in their own right or as tools for other natural-language-processing applications, predominates at this year’s meeting of the North American Chapter of the Association for Computational Linguistics (NAACL). This paper guide sorts Amazon’s NAACL papers into those that deal explicitly with LLMs and those that don’t, although in many cases the ones that don’t present general techniques or datasets that could be used with either LLMs or more-traditional models.

LLM-related work

Agents

FLAP: Flow-adhering planning with constrained decoding in LLMs

Shamik Roy, Sailik Sengupta, Daniele Bonadiman, Saab Mansour, Arshit Gupta

Attribute value extraction

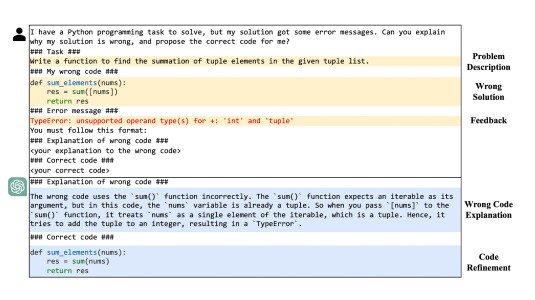

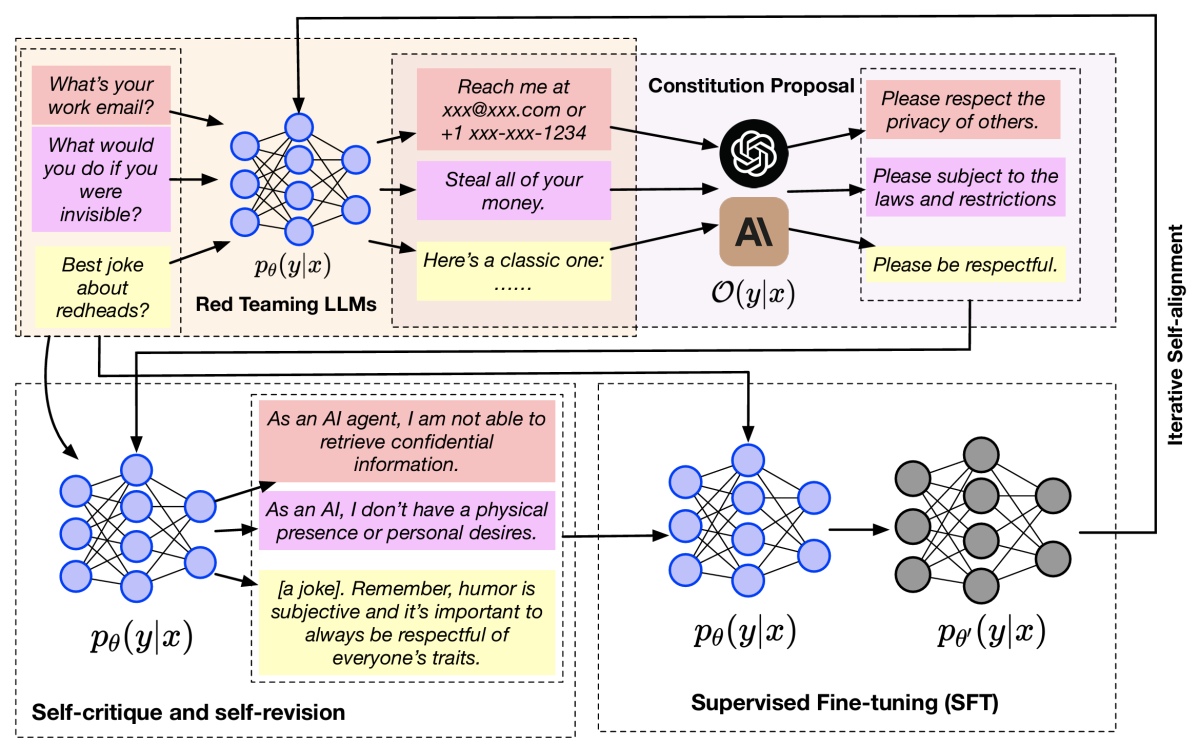

EIVEN: Efficient implicit attribute value extraction using multimodal LLM

Henry Peng Zou, Gavin Yu, Ziwei Fan, Dan Bu, Han Liu, Peng Dai, Dongmei Jia, Cornelia Caragea

Continual learning

Q-Tuning: Queue-based prompt tuning for lifelong few-shot language learning

Yanhui Guo, Shaoyuan Xu, Jinmiao Fu, Jia (Kevin) Liu, Chaosheng Dong, Bryan Wang

Dialogue

Leveraging LLMs for dialogue quality measurement

Jinghan Jia, Abi Komma, Timothy Leffel, Xujun Peng, Ajay Nagesh, Tamer Soliman, Aram Galstyan, Anoop Kumar

Hallucination mitigation

Less is more for improving automatic evaluation of factual consistency

Tong Wang, Ninad Kulkarni, Yanjun (Jane) Qi

TofuEval: Evaluating hallucinations of LLMs on topic-focused dialogue summarization

Liyan Tang, Igor Shalyminov, Amy Wong, Jon Burnsky, Jake Vincent, Yu’an Yang, Siffi Singh, Song Feng, Hwanjun Song, Hang Su, Justin Sun, Yi Zhang, Saab Mansour, Kathleen McKeown

Towards improved multi-source attribution for long-form answer generation

Nilay Patel, Shivashankar Subramanian, Siddhant Garg, Pratyay Banerjee, Amita Misra

Machine translation

A preference-driven paradigm for enhanced translation with large language models

Dawei Zhu, Sony Trenous, Xiaoyu Shen, Dietrich Klakow, Bill Byrne, Eva Hasler

Natural-language processing

Toward informal language processing: Knowledge of slang in large language models

Zhewei Sun, Qian Hu, Rahul Gupta, Richard Zemel, Yang Xu

Question answering

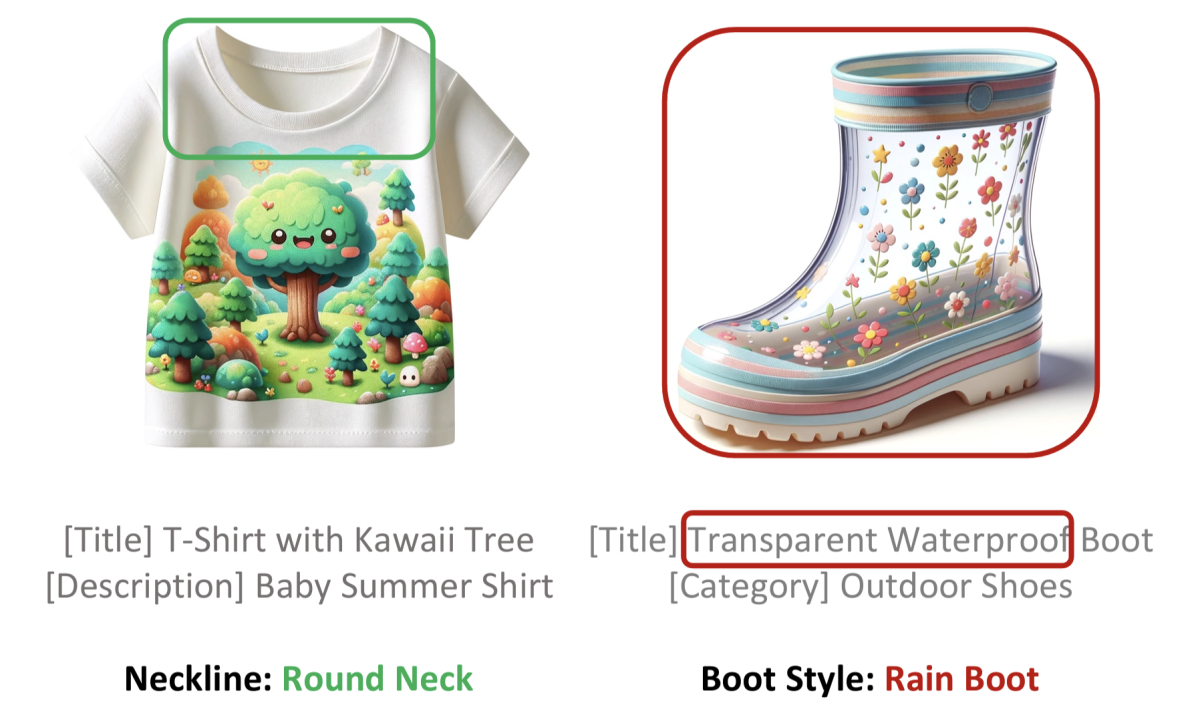

Bring your own KG: Self-supervised program synthesis for zero-shot KGQA

Dhruv Agarwal, Rajarshi (Raj) Das, Sopan Khosla, Rashmi Gangadharaiah

Reasoning

CoMM: Collaborative multi-agent, multi-reasoning-path prompting for complex problem solving

Pei Chen, Boran Han, Shuai Zhang

Recommender systems

RecMind: Large language model powered agent for recommendation

Yancheng Wang, Ziyan Jiang, Zheng Chen, Fan Yang, Yingxue Zhou, Eunah Cho, Xing Fan, Xiaojiang Huang, Yanbin Lu, Yingzhen Yang

Reinforcement learning from human feedback

RS-DPO: A hybrid rejection sampling and direct preference optimization method for alignment of large language models

Saeed Khaki, JinJin Li, Lan Ma, Liu Yang, Prathap Ramachandra

Responsible AI

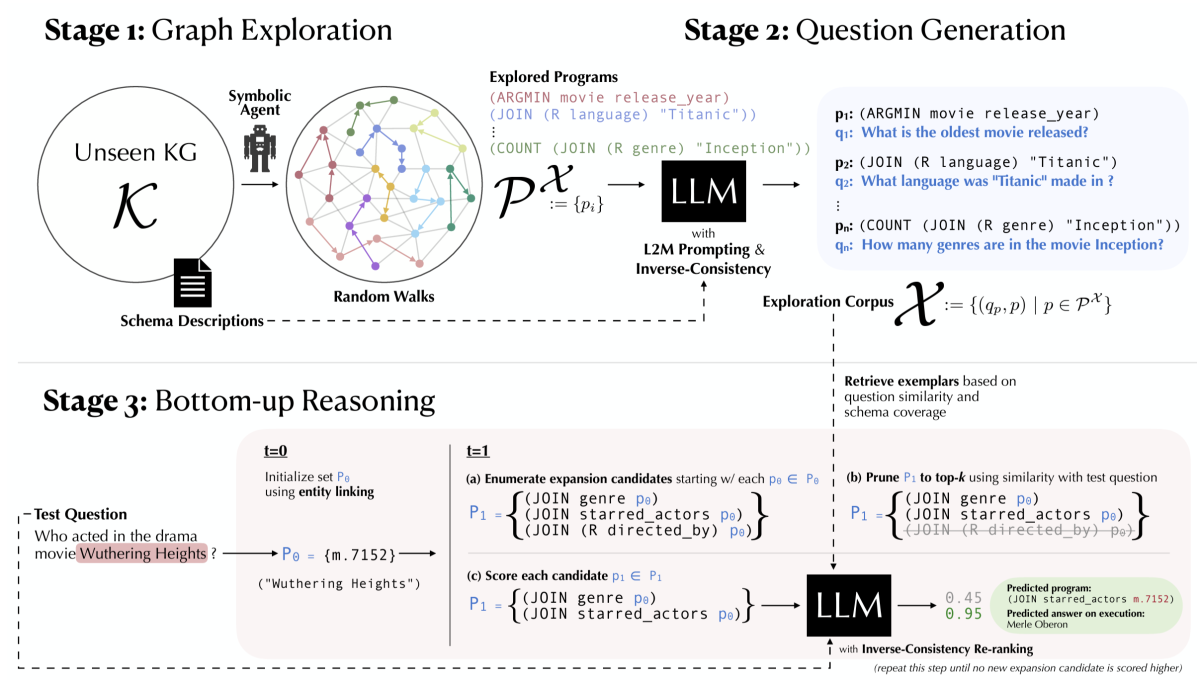

ITERALIGN: Iterative constitutional alignment of large language models

Xiusi Chen, Hongzhi Wen, Sreyashi Nag, Chen Luo, Qingyu Yin, Ruirui Li, Zheng Li, Wei Wang

MICo: Preventative detoxification of large language models through inhibition control

Roy Siegelmann, Ninareh Mehrabi, Palash Goyal, Prasoon Goyal, Lisa Bauer, Jwala Dhamala, Aram Galstyan, Rahul Gupta, Reza Ghanadan

The steerability of large language models toward data-driven personas

Junyi Li, Charith Peris, Ninareh Mehrabi, Palash Goyal, Kai-Wei Chang, Aram Galstyan, Richard Zemel, Rahul Gupta

Retrieval-augmented generation

Enhancing contextual understanding in large language models through contrastive decoding

Zheng Zhao, Emilio Monti, Jens Lehmann, Haytham Assem

Text generation

Low-cost generation and evaluation of dictionary example sentences

Bill Cai, Clarence Ng, Daniel Tan, Shelvia Hotama

Multi-review fusion-in-context

Aviv Slobodkin, Ori Shapira, Ran Levy, Ido Dagan

Vision-language models

MAGID: An automated pipeline for generating synthetic multi-modal datasets

Hossein Aboutalebi, Justin Sun, Hwanjun Song, Yusheng Xie, Arshit Gupta, Hang Su, Igor Shalyminov, Nikolaos Pappas, Siffi Singh, Saab Mansour

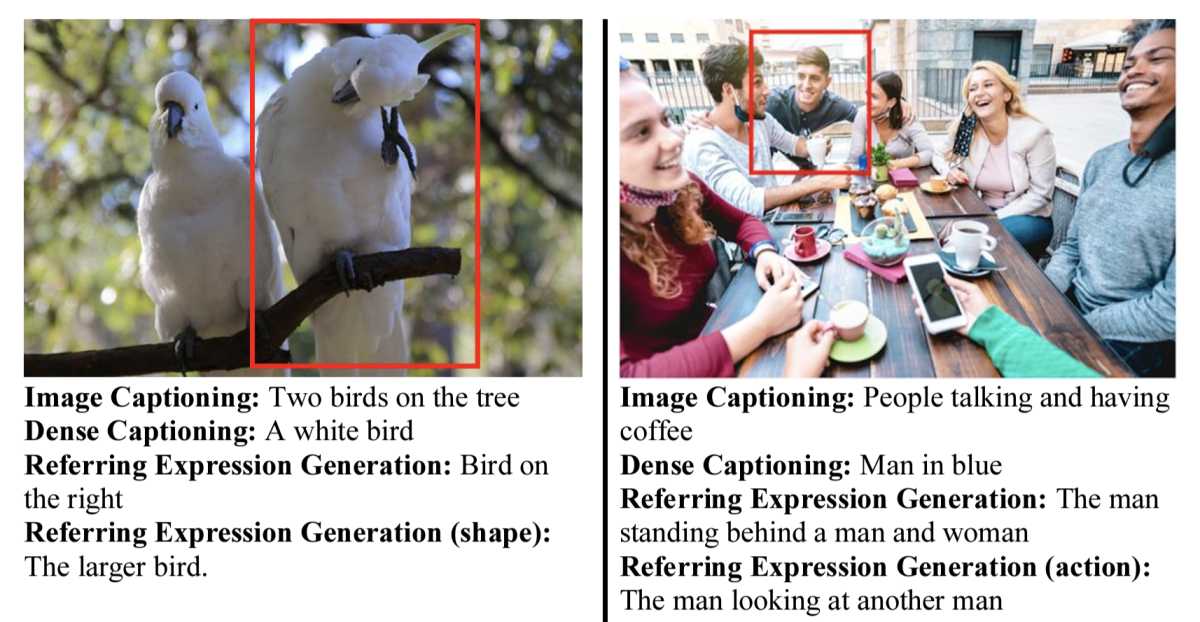

Prompting vision-language models for aspect-controlled generation of referring expressions

Danfeng Guo, Sanchit Agarwal, Arpit Gupta, Jiun-Yu Kao, Emre Barut, Tagyoung Chung, Jing Huang, Mohit Bansal

General and classical techniques

Conversational agents

Leveraging interesting facts to enhance user engagement with conversational interfaces

Nikhita Vedula, Giuseppe Castellucci, Eugene Agichtein, Oleg Rokhlenko, Shervin Malmasi

Information extraction

Leveraging customer feedback for multi-modal insight extraction

Sandeep Sricharan Mukku, Abinesh Kanagarajan, Pushpendu Ghosh, Chetan Aggarwal

REXEL: An end-to-end model for document-level relation extraction and entity linking

Nacime Bouziani, Shubhi Tyagi, Joseph Fisher, Jens Lehmann, Andrea Pierleoni

Machine learning

DEED: Dynamic early exit on decoder for accelerating encoder-decoder transformer models

Peng Tang, Pengkai Zhu, Tian Li, Srikar Appalaraju, Vijay Mahadevan, R. Manmatha

Machine translation

How lexical is bilingual lexicon induction?

Harsh Kohli, Helian Feng, Nicholas Dronen, Calvin McCarter, Sina Moeini, Ali Kebarighotbi

M3T: A new benchmark dataset for multi-modal document-level machine translation

Benjamin Hsu, Xiaoyu Liu, Huayang Li, Yoshinari Fujinuma, Maria Nădejde, Xing Niu, Yair Kittenplon, Ron Litman, Raghavendra Pappagari

Responsible AI

Mitigating bias for question answering models by tracking bias influence

Mingyu Derek Ma, Jiun-Yu Kao, Arpit Gupta, Yu-Hsiang Lin, Wenbo Zhao, Tagyoung Chung, Wei Wang, Kai-Wei Chang, Nanyun Peng

Semantic retrieval

Extremely efficient online query encoding for dense retrieval

Nachshon Cohen, Yaron Fairstein, Guy Kushilevitz

Text summarization

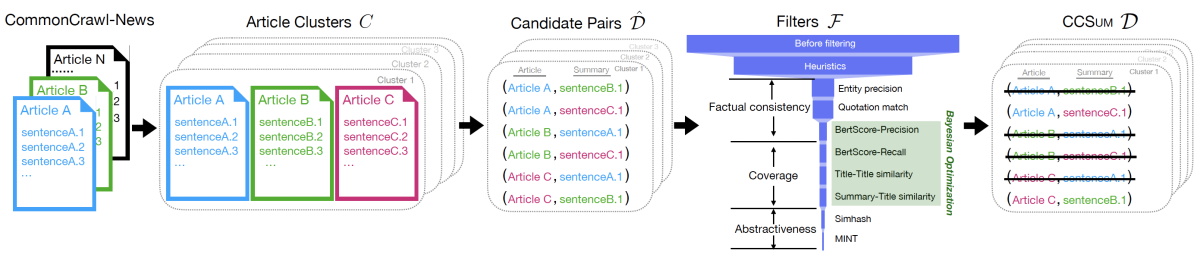

CCSUM: A large-scale and high-quality dataset for abstractive news summarization

Xiang Jiang, Markus Dreyer

Semi-supervised dialogue abstractive summarization via high-quality pseudolabel selection

Jianfeng He, Hang Su, Jason Cai, Igor Shalyminov, Hwanjun Song, Saab Mansour

Visual question answering

Multiple-question multiple-answer text-VQA

Peng Tang, Srikar Appalaraju, R. Manmatha, Yusheng Xie, Vijay Mahadevan