Alexa’s ability to respond to customer requests is largely the result of machine learning models trained on annotated data. The models are fed sample texts such as “Play the Prince song 1999” or “Play River by Joni Mitchell”. In each text, labels are attached to particular words — SongName for “1999” and “River”, for instance, and ArtistName for Prince and Joni Mitchell. By analyzing annotated data, the system learns to classify unannotated data on its own.

Regularly retraining Alexa’s models on new data improves their performance. But annotation is expensive, so we would like to annotate only the most informative training examples — the ones that will yield the greatest reduction in Alexa’s error rate. Selecting those examples automatically is known as active learning.

Last week, at the annual meeting of the North American Chapter of the Association for Computational Linguistics (NAACL), we presented a new approach to active learning that, in experiments, improved the accuracy of machine learning models by 7% to 9%, relative to training on randomly selected examples.

We compared our technique to four other active-learning strategies and showed gains across the board. Our new approach is 1% to 3.5% better than the best-performing approach previously reported. In addition to extensive testing with previously annotated data (in which the labels were suppressed to simulate unannotated data), we conducted a smaller trial with unlabeled data and human annotators and found that our results held, with improvements of 4% to 9% relative to the baseline machine learning models.

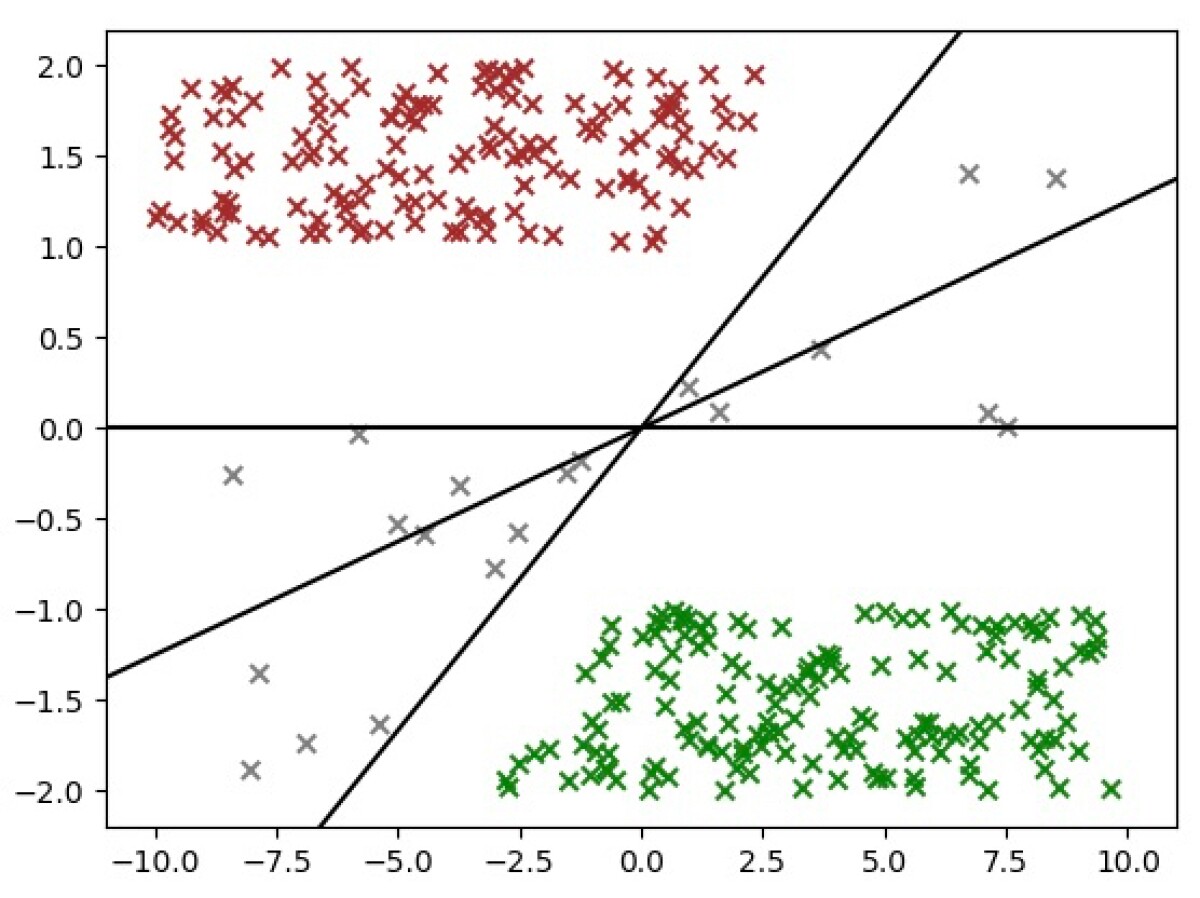

The goal of active learning is to canvass as many candidate examples as possible to find those with the most informational value. Consequently, the selection mechanism must be efficient. The classical way to select examples is to use a simple linear classifier, which assigns every word in a sentence a weight. The sum of the weights yields a score, and a score greater than zero indicates that the sentence belongs to a particular category.

For instance, if the classifier is trying to determine whether a sentence belongs to the category music, it would probably assign the word “play” a positive weight, because music requests frequently begin with the word “play”. But it might assign the word “video” a negative weight, because that word frequently signals that the customer wants to play a video, and the video category is distinct from the music category.
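The scoring rule described above can be sketched in a few lines. The weights below are invented for illustration and the function names are hypothetical; a real classifier would learn its weights from annotated data.

```python
# Made-up weights for a hypothetical "music" domain classifier.
MUSIC_WEIGHTS = {"play": 1.5, "song": 1.0, "video": -2.0}

def linear_score(sentence, weights, bias=0.0):
    """Sum the weights of the words in the sentence; unknown words add 0."""
    words = sentence.lower().split()
    return bias + sum(weights.get(word, 0.0) for word in words)

def is_in_category(sentence, weights):
    """A score greater than zero means the sentence is assigned to the category."""
    return linear_score(sentence, weights) > 0.0

print(linear_score("Play the song", MUSIC_WEIGHTS))
print(is_in_category("play the video", MUSIC_WEIGHTS))
```

With these illustrative weights, "play the video" ends up below zero because the negative weight on "video" outweighs the positive weight on "play".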

Such weights are learned from training examples. During training, the linear classifier is optimized using a loss function, which measures the distance between its performance and perfect classification of the training data.

Typically, in active learning, examples are selected for annotation if they receive scores close to zero — whether positive or negative — which means that they are near the decision boundary of the linear classifier. The hypothesis is that hard-to-classify examples are the ones that a model will profit from most.
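As a minimal sketch of this selection rule, assuming each candidate has already been scored by the linear classifier, the examples can be ranked by the absolute value of their scores. The function name and the `budget` parameter are hypothetical.

```python
def select_near_boundary(scored_examples, budget):
    """Return the `budget` texts whose scores are closest to zero.

    scored_examples: list of (text, score) pairs from a linear classifier.
    """
    ranked = sorted(scored_examples, key=lambda pair: abs(pair[1]))
    return [text for text, _ in ranked[:budget]]

candidates = [("a", 2.3), ("b", -0.1), ("c", 0.4), ("d", -1.7)]
print(select_near_boundary(candidates, budget=2))
```

Here "b" and "c" are picked because they sit nearest the decision boundary, regardless of sign.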

Researchers have also investigated committee-based methods, in which linear models are learned using several different loss functions. Some loss functions emphasize getting the aggregate statistics right across training examples; others emphasize getting the right binary classification for any given example; still others impose particularly harsh penalties for giving the wrong answer with high confidence; and so on.
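To make the idea of differing loss functions concrete, here are three standard losses for a binary linear classifier. They are textbook examples chosen for illustration, not necessarily the ones used in the experiments. Each takes a label `y` in {-1, +1} and a classifier score.

```python
import math

def hinge_loss(y, score):
    """Zero once the example is on the correct side with margin >= 1."""
    return max(0.0, 1.0 - y * score)

def squared_hinge_loss(y, score):
    """Like hinge, but the penalty grows quadratically for confident mistakes."""
    return max(0.0, 1.0 - y * score) ** 2

def logistic_loss(y, score):
    """Smooth loss that never reaches exactly zero."""
    return math.log1p(math.exp(-y * score))

# A confidently wrong prediction: true label +1, score -1.
print(hinge_loss(1, -1.0), squared_hinge_loss(1, -1.0))
```

Because each loss penalizes mistakes differently, linear models trained with them on the same data can end up with different weights, which is what gives a committee its diversity.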

Traditional committee-based methods also select low-scoring examples, but they add another criterion: at least one of the models must disagree with the others in its classification. Again, the assumption is that hard-to-classify examples will be the most informative.
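The dissent criterion can be sketched as a check on the signs of the committee's scores. This assumes each committee member returns one score per example and that a score greater than zero means a positive classification; the function name is hypothetical.

```python
def has_dissent(scores):
    """True if at least one committee member disagrees with the others on sign."""
    positives = sum(1 for s in scores if s > 0)
    return 0 < positives < len(scores)

print(has_dissent([0.2, -0.1, 0.3]))  # one model votes negative
print(has_dissent([0.2, 0.1, 0.3]))   # unanimous
```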

In our experiments, we explored a variant of the committee-based approach. First, we tried selecting low-scoring examples on which the majority of linear models have scores greater than zero. Because this majority-positive filter includes examples with all-positive scores, it yields a larger pool of candidates than the filter that enforces dissent. To select the most informative examples from that pool, we experimented with several different re-ranking strategies.
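A minimal sketch of the majority filter, assuming one score per committee member and a hypothetical function name:

```python
def majority_positive(scores):
    """True if more than half of the committee scores are greater than zero."""
    positives = sum(1 for s in scores if s > 0)
    return positives > len(scores) / 2

print(majority_positive([0.2, 0.1, 0.3]))    # unanimous positive: kept
print(majority_positive([0.2, -0.1, 0.3]))   # two of three positive: kept
print(majority_positive([0.2, -0.1, -0.3]))  # one of three positive: dropped
```

Note that the unanimous case passes this filter, whereas a filter that requires disagreement would reject it. That is why the candidate pool is larger.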

Most importantly, we used a conditional-random-field (CRF) model to do the re-ranking. Where the linear models classify requests only according to domain — such as music, weather, smart home, and so on — the CRF models classify the individual words of the request as belonging to categories such as ArtistName or SongName.

If the CRF easily classifies the words of a request, the score increases; if the CRF struggles, the score decreases. (Again, low-scoring requests are preferentially selected for annotation.) Adding the CRF classifier does not significantly reduce the efficiency of the algorithm, because we run the re-ranking only on the examples that pass the majority filter rather than on the full candidate pool.
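The text does not spell out how the CRF's output is folded into the score, so the following is only a sketch under stated assumptions: the CRF's confidence is taken to be the mean probability of the top label for each word, and it is added to the committee score. Both choices, and all names, are hypothetical.

```python
def crf_confidence(token_probs):
    """Mean top-label probability across the words of a request (assumed measure)."""
    return sum(token_probs) / len(token_probs)

def rerank_score(committee_score, token_probs):
    # Assumed additive combination: a confident CRF raises the score,
    # an uncertain CRF keeps it low, so the request is selected sooner.
    return committee_score + crf_confidence(token_probs)

easy = rerank_score(0.5, [1.0, 0.5])    # CRF fairly sure of its word labels
hard = rerank_score(0.5, [0.25, 0.25])  # CRF unsure of its word labels
print(easy, hard)
```

Under these assumptions, two requests with the same committee score are separated by how well the CRF handles their words, and the harder one ranks first for annotation.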

For re-ranking, we add the committee scores and then take the absolute value of the sum. This permits individual models on the committee to provide high-confidence classifications, so long as strong positive scores are offset by strong negative scores.
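The committee part of the re-ranking score can be sketched as follows, with hypothetical function names and invented scores:

```python
def committee_score(scores):
    """Absolute value of the summed committee scores."""
    return abs(sum(scores))

def rerank(candidates):
    """Order request texts from lowest to highest committee score.

    candidates: dict mapping request text -> list of committee scores.
    """
    return sorted(candidates, key=lambda text: committee_score(candidates[text]))

candidates = {
    "offsetting": [2.0, -2.0, 0.5],   # strong scores that cancel out
    "agreeing": [0.25, 0.25, 0.5],    # weak scores that all point one way
}
print(rerank(candidates))
```

In this example the request with strong but offsetting scores ranks first: its sum is 0.5 in absolute value, compared with 1.0 for the request on which the models weakly agree.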

The committee approaches reported in the literature enforced dissent among the models; interestingly, requiring only that a majority of the scores be greater than zero yielded better results, even without the CRF. With the CRF, however, the error rate shrank by an additional 1% to 2%.

Acknowledgments: John Kearney, Abhyuday Jagannatha, Imre Kiss, Spyros Matsoukas