Just as Alexa can wake up without the need to press a button, she also automatically detects when a user has finished a query and expects a response. This task is often called “end-of-utterance detection,” “end-of-query detection,” “end-of-turn detection,” or simply “end-pointing.”

The challenges of end-pointing are two-fold: On the one hand, we don’t want Alexa to be too eager to reply and potentially cut a user off during a speech pause or while the user is just taking a breath (we call this an “early end-point”). On the other hand, Alexa also should not wait too long before replying to the user query (or even mistakenly consider background speech as device-directed and continue listening) since this is perceived as high latency by the customer (we call such cases “late end-points”).
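To make the two failure modes concrete, here is a minimal sketch that labels a single end-point decision as early, on time, or late. The function name, the `EndpointOutcome` enum, and the 500-millisecond tolerance are all assumptions made for illustration; the post does not specify how much delay counts as "late."

```python
from enum import Enum


class EndpointOutcome(Enum):
    EARLY = "early end-point"
    ON_TIME = "on time"
    LATE = "late end-point"


def classify_endpoint(true_end_ms: int, trigger_ms: int,
                      max_latency_ms: int = 500) -> EndpointOutcome:
    """Compare the time the end-pointer fired with the true end of the query.

    max_latency_ms is a hypothetical tolerance, not a value from the paper.
    """
    if trigger_ms < true_end_ms:
        # Fired while the user was still speaking or merely pausing.
        return EndpointOutcome.EARLY
    if trigger_ms - true_end_ms > max_latency_ms:
        # Kept listening well after the query was over.
        return EndpointOutcome.LATE
    return EndpointOutcome.ON_TIME


# The user's query really ends 2,000 ms into the audio.
cut_off = classify_endpoint(true_end_ms=2000, trigger_ms=1500)
prompt = classify_endpoint(true_end_ms=2000, trigger_ms=2200)
sluggish = classify_endpoint(true_end_ms=2000, trigger_ms=3000)
```

A real evaluation would need reference end times from annotated data; the sketch only shows how the two error types pull in opposite directions.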

The ideal end-pointer distinguishes mid-query pauses and hesitations from final pauses, so that it triggers instantly after a query ends while remaining patient when the user hesitates. (Note that in online, or streaming, speech recognition systems, the input speech signal is not observed all at once but is received in small chunks and processed in real time.) Given sufficient example data, the design of an end-pointing algorithm can be treated as a machine learning problem.

Next week, at the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2018, my colleagues and I will present a paper that proposes an end-of-utterance detection system that allows for resource-efficient adaptation for new domains and across languages. More specifically, we train a machine learning model to estimate, for an observed segment of audio, whether the segment is “complete” (i.e., containing a full user query) or whether more speech input is expected.

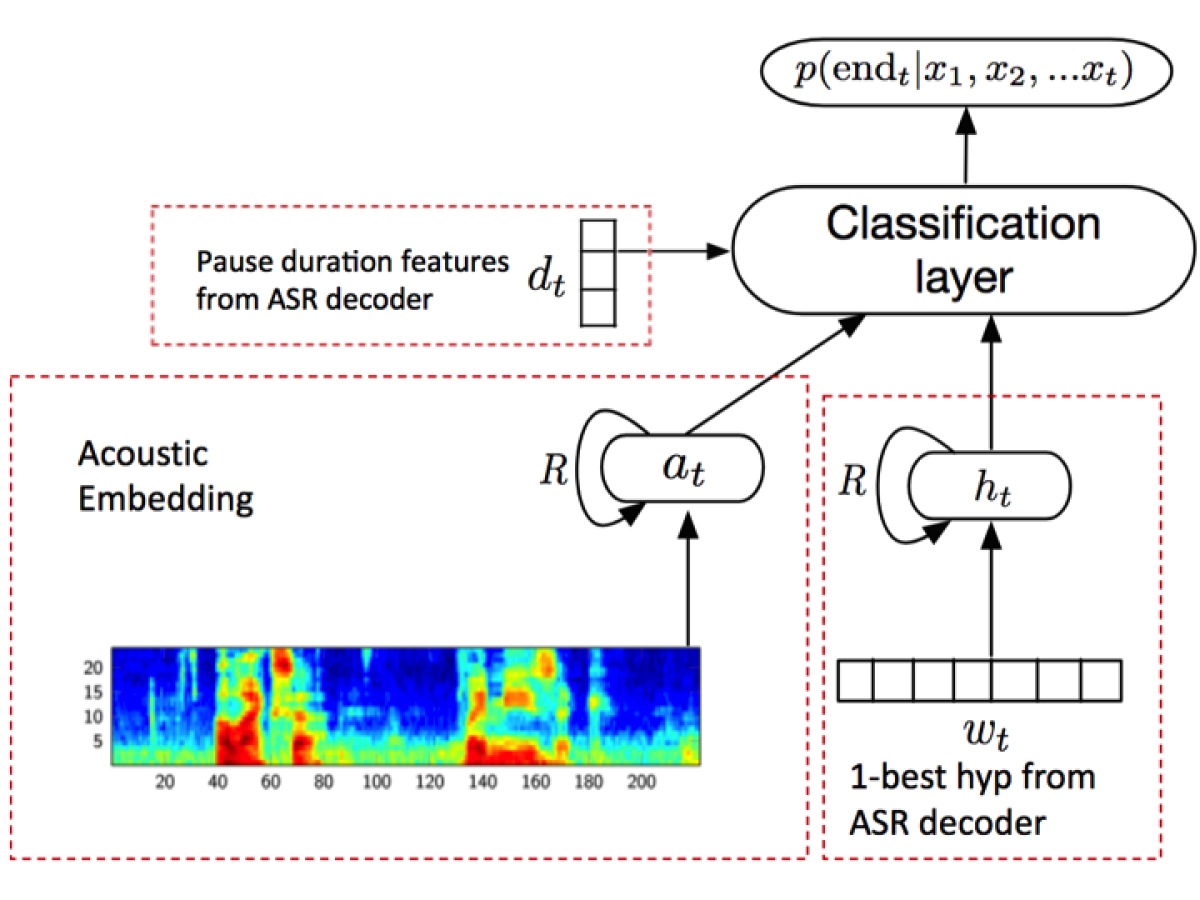

The model comprises three components: an acoustic classifier, a word-based classifier, and a final classification layer that combines the previous classifiers and additional meta-features from the automatic speech recognition (ASR) system to form a final decision.

Let’s look at each component individually. The acoustic classifier is a long short-term memory (LSTM) recurrent neural network that, on its input side, consumes a short snippet of audio (typically on the order of 25 milliseconds) at each step. On its output side, the classifier emits a probability (between 0 and 1) that the audio absorbed so far constitutes a complete user query. In this way, the acoustic classifier should learn the acoustic patterns that indicate whether a speech pause is final or not. Cues that indicate an end of turn in human-to-human conversations (and that the network may implicitly learn) include speaking rate, pitch, and vowel lengths.
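The streaming behavior of such a classifier can be sketched in plain Python. This is a toy LSTM with random, untrained weights, so its scores are meaningless; the class name, the feature dimension, and the hidden size are assumptions, and the point is only the interface: one frame in, one probability out, with state carried across frames.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTMEndpointer:
    """Toy single-layer LSTM with random (untrained) weights. Hypothetical."""

    def __init__(self, n_features: int, n_hidden: int, seed: int = 0):
        rng = random.Random(seed)
        n_in = n_features + n_hidden
        # One weight matrix and bias vector per gate:
        # i = input, f = forget, o = output, c = candidate cell value.
        self.w = {g: [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                      for _ in range(n_hidden)] for g in "ifoc"}
        self.b = {g: [0.0] * n_hidden for g in "ifoc"}
        self.w_out = [rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]
        self.h = [0.0] * n_hidden  # hidden state
        self.c = [0.0] * n_hidden  # cell state

    def _gate(self, name, x, squash):
        return [squash(sum(w * v for w, v in zip(row, x)) + b)
                for row, b in zip(self.w[name], self.b[name])]

    def step(self, frame):
        """Consume one frame of features; return P(query complete so far)."""
        x = list(frame) + self.h
        i = self._gate("i", x, sigmoid)
        f = self._gate("f", x, sigmoid)
        o = self._gate("o", x, sigmoid)
        g = self._gate("c", x, math.tanh)
        self.c = [fk * ck + ik * gk
                  for fk, ck, ik, gk in zip(f, self.c, i, g)]
        self.h = [ok * math.tanh(ck) for ok, ck in zip(o, self.c)]
        return sigmoid(sum(w * hk for w, hk in zip(self.w_out, self.h)))


# Made-up feature vectors standing in for five consecutive audio frames.
model = TinyLSTMEndpointer(n_features=4, n_hidden=8)
frames = [[0.1, 0.0, -0.2, 0.3]] * 5
scores = [model.step(frame) for frame in frames]
```

In practice the weights would be learned from labeled audio, and the per-frame features would come from a signal-processing front end rather than hand-written lists.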

The second model, the word-based classifier, is also an LSTM network. It consumes, one word at a time, the current best partial transcript that the ASR system is hypothesizing (note that this partial hypothesis can change over time as the ASR system receives more chunks of audio). Like the acoustic classifier, the word-based classifier is trained to output a probability indicating whether the current observation (i.e., the sequence of words so far) likely constitutes a complete user query. The intuition is that not only the acoustics but also the spoken content, i.e., the words, carry information on whether a speech segment is final or not. For example, the fact that the transcript “play music by” ends in a preposition makes it likely that the user has something else to add.
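The "play music by" intuition can be illustrated with a hand-written rule. To be clear, this is not the LSTM described above but a deliberately crude stand-in: the word list and the two placeholder scores (0.1 and 0.9) are invented for illustration, whereas a trained model would learn such cues from data.

```python
# Hypothetical list of words that rarely end a complete query.
DANGLING_WORDS = {"by", "to", "for", "of", "in", "on", "with", "from",
                  "and", "the", "a"}


def word_completeness_score(partial_transcript: str) -> float:
    """Return a placeholder score for whether the transcript looks complete."""
    words = partial_transcript.lower().split()
    if not words:
        # Nothing recognized yet, so there is no query to complete.
        return 0.0
    # Placeholder scores: low if the last word suggests more is coming.
    return 0.1 if words[-1] in DANGLING_WORDS else 0.9


# Successive partial hypotheses, as an ASR system might emit them.
partials = ["play", "play music", "play music by", "play music by queen"]
scores = [word_completeness_score(p) for p in partials]
```

The score drops when the hypothesis ends in "by" and recovers once the artist name arrives, which is the behavior the word-based classifier is meant to learn on its own.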

In addition to these two classifiers, a third source of evidence comes into play: meta-features emitted by the ASR decoder. These meta-features describe for how long (if at all) the ASR decoder has been running “idle.” In other words, how meaningful are the incoming audio chunks (actual content vs. silence or noise)?
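The post does not say how the decoder's idle time is measured. One plausible approximation, and it is only an assumption, is to count how long the best partial hypothesis has gone unchanged. The `IdleTracker` class and the 100-millisecond chunk size below are hypothetical.

```python
class IdleTracker:
    """Tracks how long the partial hypothesis has stayed the same.

    This is an assumed proxy for decoder idleness, not the paper's definition.
    """

    def __init__(self, chunk_ms: int):
        self.chunk_ms = chunk_ms
        self.last_hypothesis = None
        self.idle_ms = 0

    def update(self, hypothesis: str) -> int:
        """Call once per audio chunk with the current best hypothesis."""
        if hypothesis != self.last_hypothesis:
            # New content arrived, so the idle timer resets.
            self.last_hypothesis = hypothesis
            self.idle_ms = 0
        else:
            # This chunk added nothing new (silence or noise, for instance).
            self.idle_ms += self.chunk_ms
        return self.idle_ms


tracker = IdleTracker(chunk_ms=100)
stream = ["play", "play music", "play music", "play music", "play music by"]
idle = [tracker.update(h) for h in stream]
# idle == [0, 0, 100, 200, 0]
```

A long idle stretch suggests the incoming chunks carry silence or noise rather than speech, which is the kind of signal the final classifier can weigh against the other two scores.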

Finally, the above-mentioned classification layer (again a neural network) consumes the individual predictions from the acoustic and word-based classifiers, as well as the meta-features from the ASR decoder, and outputs a final prediction score (between 0 and 1) indicating whether an end-point should be triggered and a reply to the user initiated.
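As a rough sketch of this combination step, the snippet below uses a single logistic unit, the simplest possible stand-in for the neural network the post describes. The weights, bias, decision threshold, and function names are all made up for illustration; in the real system they would be learned from data.

```python
import math


def final_endpoint_score(p_acoustic: float, p_word: float, idle_ms: float,
                         weights=(3.0, 3.0, 0.004), bias=-4.0) -> float:
    """Combine the two classifier scores and the idle time into one score.

    weights and bias are hypothetical values, not trained parameters.
    """
    z = (weights[0] * p_acoustic
         + weights[1] * p_word
         + weights[2] * idle_ms
         + bias)
    return 1.0 / (1.0 + math.exp(-z))


def should_endpoint(score: float, threshold: float = 0.5) -> bool:
    """Trigger the end-point once the score reaches a hypothetical threshold."""
    return score >= threshold


# User trailing off mid-query: low scores, decoder only briefly idle.
hesitating = final_endpoint_score(p_acoustic=0.3, p_word=0.1, idle_ms=100)
# User clearly done: high scores, decoder idle for a while.
finished = final_endpoint_score(p_acoustic=0.9, p_word=0.9, idle_ms=400)
```

Raising the threshold makes the end-pointer more patient at the cost of latency, while lowering it makes replies faster but risks cutting users off.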

Acknowledgements: Ariya Rastrow, Chengyuan Ma, Guitang Lan, Kyle Goehner, Gautam Tiwari, Shaun Joseph, Bjorn Hoffmeister