In recent years, the amount of textual information produced daily has increased exponentially, an explosion accelerated by the ease with which data can be shared across the web. Most of this information is generated as free-form text; only a small fraction is available in a structured format (e.g., Wikidata or Freebase) that machines can process and analyze directly.

To answer almost any conceivable question accurately, we must get better at transforming free-form text into structured knowledge. We must also safeguard against false information spreading from unreliable sources. The latter problem is known as “fact verification”. It is a challenge not only for news organizations and social media platforms, but also for any application or online service that performs automatic information extraction.

While the availability of large-scale datasets has driven significant progress in automatic question answering [e.g., SQuAD] and natural language inference [e.g., SNLI], until now there has been no comparable effort to create a dataset for fact extraction and verification. To catalyze research and development on these problems, my colleague Christos Christodoulopoulos and I, in collaboration with researchers from the University of Sheffield, have created a large-scale fact extraction and verification dataset. We are making this dataset, comprising more than 185,000 evidence-backed claims, publicly available for download by members of the community who are interested in addressing this challenge. The dataset could be used to train AI systems to extract verifiable information; such technology would also help advance AI systems capable of answering any question with verifiable information.

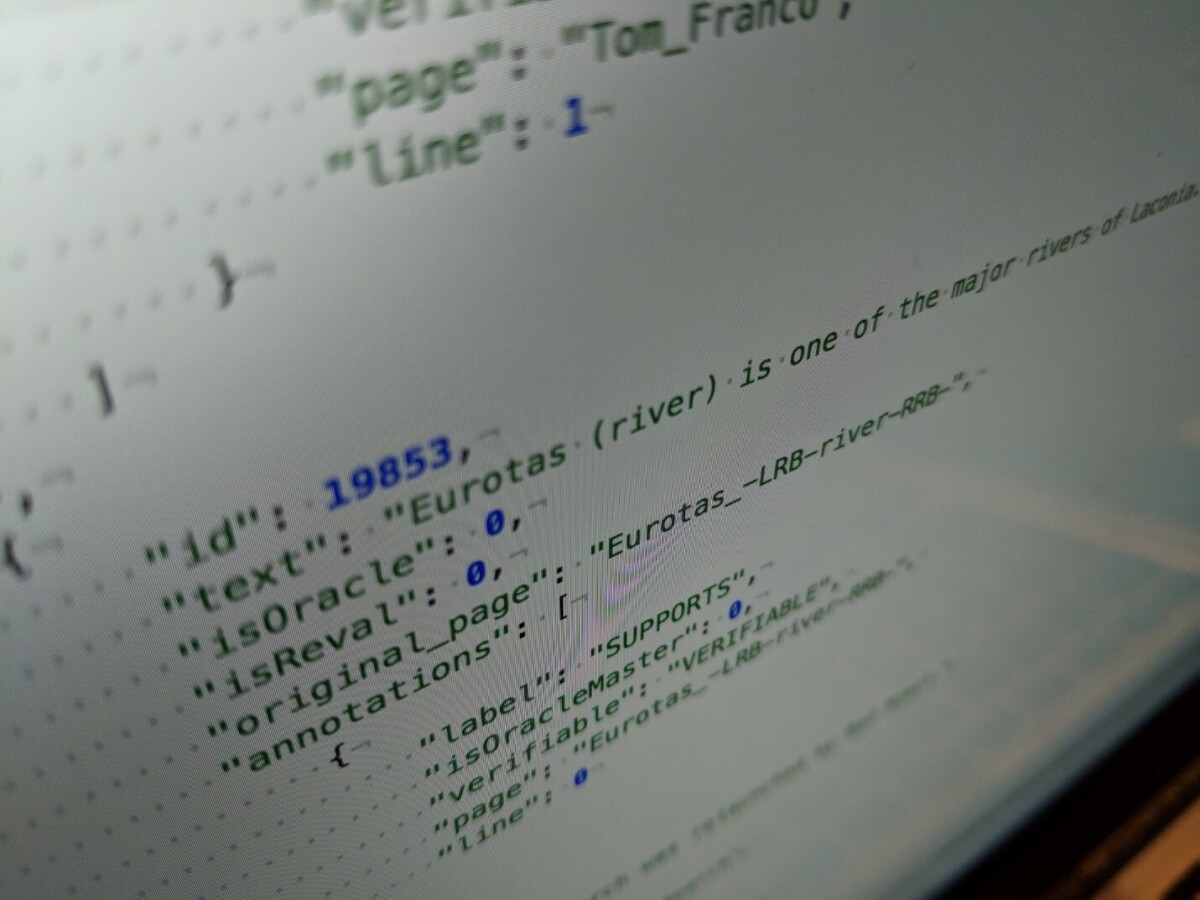

The claims in the dataset were written manually by human annotators, who extracted them from Wikipedia pages. False claims were generated by mutating true claims in a variety of ways, some of which were meaning-altering. Each claim was then verified by multiple annotators, none of whom had constructed it. During the verification step, annotators were required to label the claim for its validity and to supply full-sentence textual evidence from Wikipedia articles for that label.
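As a rough illustration of the annotation workflow described above, the following Python sketch models a claim, its author, and its verifications. The class names, field names, label strings, and annotator IDs are all hypothetical placeholders chosen for this example; they are not the schema of the released dataset.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class EvidenceSentence:
    page: str      # title of the Wikipedia page the sentence comes from
    sentence: str  # the full sentence used as evidence


@dataclass
class Verification:
    annotator_id: str
    label: str  # hypothetical label string, e.g. "true" or "false"
    evidence: list[EvidenceSentence] = field(default_factory=list)


@dataclass
class Claim:
    text: str
    author_id: str
    verifications: list[Verification] = field(default_factory=list)

    def add_verification(self, verification: Verification) -> None:
        # A claim must be verified by someone other than the person who wrote it.
        if verification.annotator_id == self.author_id:
            raise ValueError("a claim cannot be verified by its own author")
        self.verifications.append(verification)


# Placeholder values only; no real claim or evidence text is reproduced here.
claim = Claim(text="<claim text>", author_id="annotator-1")
claim.add_verification(
    Verification(
        annotator_id="annotator-2",
        label="true",
        evidence=[EvidenceSentence(page="<page title>", sentence="<evidence sentence>")],
    )
)
```

Keeping the author ID on the claim makes the "different annotator" rule checkable at the moment a verification is recorded, rather than in a separate audit pass.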

The primary challenge has been that evidence for a given claim may span multiple sentences across many documents. For example, take the claim “Colin Firth is a Gemini.” To verify the claim, we must look at Colin Firth’s Wikipedia page, where we find the sentence: “Colin Firth (born 10 September 1960) ...”. We must also find additional evidence on the Wikipedia page for Gemini, which states: “Under the tropical zodiac, the sun transits this sign between May 21 and June 21.” Only by combining the two sentences can we conclude that the claim is false, since 10 September falls outside that range. To ensure annotation consistency, we developed suitable guidelines and user interfaces, resulting in high inter-annotator agreement in claim verification classification (Fleiss κ of 0.6841), with 95.42% precision and 72.36% recall in evidence retrieval.
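For readers unfamiliar with the metrics quoted above, here is a minimal Python sketch of how Fleiss' κ and set-based precision and recall are commonly computed. It uses the standard textbook definitions; the post does not say exactly how the reported figures were calculated, so the function names, the input layout, and the toy inputs below are assumptions for illustration only.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table where counts[i][j] is the number of
    annotators who assigned item i to category j.

    Assumes every item was rated by the same number of annotators (>= 2)
    and that more than one category is actually used (otherwise the
    denominator is zero).
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item must have the same number of ratings")
    n_categories = len(counts[0])

    # Share of all ratings that fell into each category.
    p_j = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    # Observed agreement among annotator pairs, per item.
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_i) / n_items       # mean observed agreement
    p_e = sum(p * p for p in p_j)    # agreement expected by chance
    return (p_bar - p_e) / (1 - p_e)


def evidence_precision_recall(retrieved, reference):
    """Set-based precision and recall over evidence sentence identifiers."""
    retrieved, reference = set(retrieved), set(reference)
    hits = len(retrieved & reference)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(reference) if reference else 0.0
    return precision, recall


# Toy inputs (hypothetical): two items, three annotators, two labels.
kappa = fleiss_kappa([[3, 0], [0, 3]])

# Evidence sentences identified by (page, sentence index); values are made up.
precision, recall = evidence_precision_recall(
    retrieved=[("page-a", 0), ("page-b", 3)],
    reference=[("page-a", 0), ("page-b", 3), ("page-b", 4)],
)
print(kappa, precision, recall)
```

A pattern of high precision with lower recall, as in the reported figures, would mean that the evidence annotators selected was almost always correct, but that they did not always find every relevant sentence.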

In addition to making this dataset publicly available, we are organizing a public machine-learning competition, inviting the academic and industry research communities to tackle the problem. The results of the competition and descriptions of the participating systems, along with research on fact extraction and verification, will be presented at the first FEVER workshop, co-located with the Conference on Empirical Methods in Natural Language Processing (EMNLP 2018). The workshop will also include invited talks from leading researchers in information extraction, argument mining, fact verification, and natural language processing, among other fields.

More details on the dataset, the annotation process, and some baseline implementations for the task can be found on our website and in this paper, which will be published in the proceedings of the 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL 2018).

Paper: "FEVER: a large-scale dataset for Fact Extraction and VERification"

Acknowledgements: Dr. Andreas Vlachos and PhD student James Thorne, from the Department of Computer Science at the University of Sheffield