The benefits of quality sleep are well documented, and sleep affects nearly every aspect of our physical and emotional well-being. Yet one in three adults doesn’t get enough sleep. Given Amazon’s expertise in machine learning and radar technology innovation, we wanted to invent a device that would help customers improve their sleep by looking holistically at the factors that contribute to a good night’s rest.

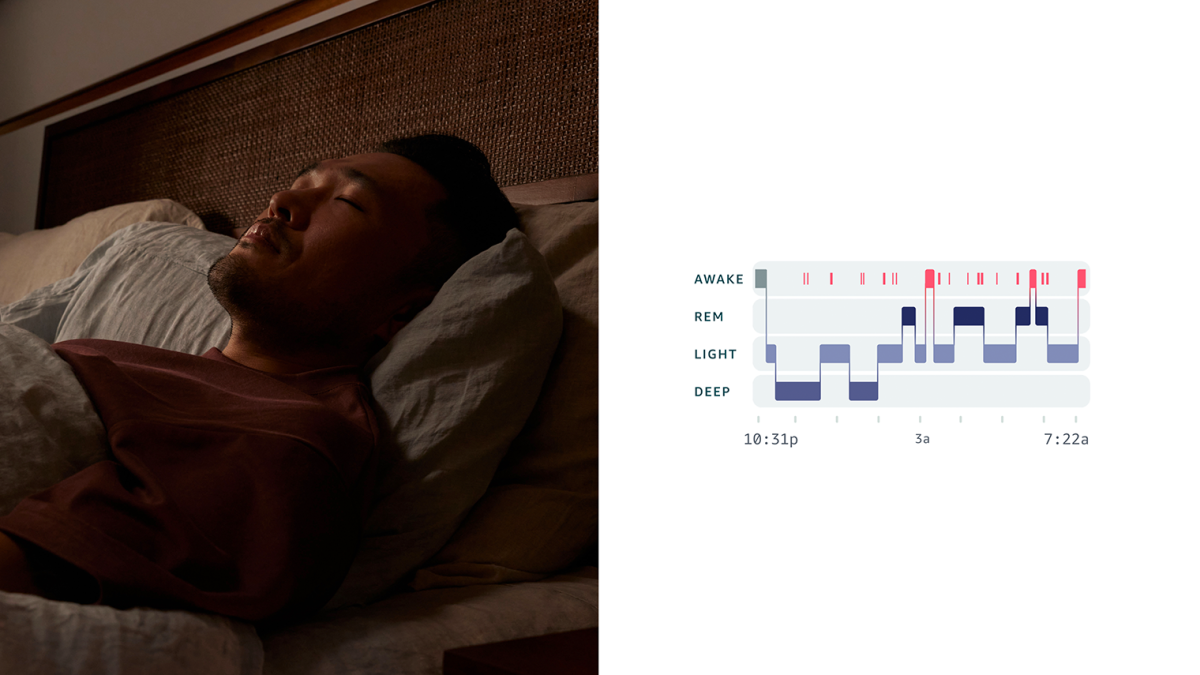

That’s why we’re excited to announce that Amazon has unveiled its first dedicated sleep device — Halo Rise, a combined bedside sleep tracker, wake-up light, and smart alarm. Powered by custom machine learning algorithms and a suite of built-in sensors, Halo Rise accurately determines users’ sleep stages and provides valuable insights that can be used to optimize their sleep, including information about their sleep environments. Halo Rise has no sensors to wear, batteries to charge, or apps to open. And since a good wake-up experience is core to good sleep, Halo Rise features a wake-up light and smart alarm, designed to help customers start the day feeling rested and alert.

Designing with customer trust as our foundation

Customer privacy and safety are foundational to Halo Rise, and that's evident in both the hardware design and the technologies used to power the experience. Halo Rise features neither a camera nor a microphone and instead relies on ambient radar technology and machine learning to accurately determine sleep stages: deep, light, REM (rapid eye movement), and awake.

The technology at the core of Halo Rise is a built-in radar sensor that safely emits and receives an ultralow-power radio signal. The sensor uses phase differences between reflected signals at different antennas to measure movement and distance. Through on-chip signal processing, Halo Rise produces a discrete waveform corresponding to the user’s respiration. The device cannot detect noise or visual identifiers associated with an individual user, such as body images.
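To make the phase-to-respiration step concrete, here is a minimal single-antenna sketch. It assumes a 60 GHz carrier (the article does not state the frequency) and uses the standard relation that a reflector moving by d changes the round-trip phase by 4πd/λ. The unwrapping step, the naive DFT search over a 0.1 to 0.5 Hz breathing band, and the helper names `wrap`, `unwrap`, `phase_to_displacement`, and `breathing_rate_bpm` are illustrative assumptions, not Halo Rise's on-chip processing.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s
CARRIER_HZ = 60e9               # ASSUMPTION: illustrative mmWave carrier, not from the article
WAVELENGTH = SPEED_OF_LIGHT / CARRIER_HZ  # about 5 mm


def wrap(angle):
    """Map an angle in radians into [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def unwrap(phases):
    """Remove 2*pi jumps, assuming the true phase moves less than pi per sample."""
    out = [phases[0]]
    for prev, cur in zip(phases, phases[1:]):
        out.append(out[-1] + wrap(cur - prev))
    return out


def phase_to_displacement(phases):
    """Convert wrapped reflection phase to chest displacement in meters.

    A displacement d lengthens the round trip by 2*d, so phase = 4*pi*d / wavelength.
    """
    return [p * WAVELENGTH / (4 * math.pi) for p in unwrap(phases)]


def breathing_rate_bpm(displacement, fs, lo_hz=0.1, hi_hz=0.5):
    """Dominant frequency in the breathing band, in breaths per minute (naive DFT)."""
    n = len(displacement)
    mean = sum(displacement) / n
    x = [v - mean for v in displacement]
    best_k, best_power = None, -1.0
    for k in range(1, n // 2):
        if not lo_hz <= k * fs / n <= hi_hz:
            continue  # only evaluate bins inside the breathing band
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    if best_k is None:
        raise ValueError("signal too short to resolve the breathing band")
    return 60.0 * best_k * fs / n


# Simulated 60 s capture at 10 Hz: 2 mm chest motion at 0.25 Hz (15 breaths/min).
fs = 10.0
chest = [0.002 * math.sin(2 * math.pi * 0.25 * i / fs) for i in range(600)]
raw_phase = [wrap(4 * math.pi * d / WAVELENGTH) for d in chest]
recovered = phase_to_displacement(raw_phase)
print(breathing_rate_bpm(recovered, fs))  # 15.0
```

Unwrapping matters because at millimeter wavelengths ordinary chest motion sweeps the phase through more than a full 2π cycle; without it, the recovered displacement would fold back on itself.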

Using built-in radar technology enables us to prioritize customer privacy while still delivering accurate measurements and useful results. Customers have the option to manually put Halo Rise into Standby mode, which turns off the device’s ability to detect someone’s presence or track sleep.

Intuitive and accurate experience

To design the sleep-tracking algorithm that powers Halo Rise, we thought about the most common bedtime behaviors and the ways in which customers and their families (pets included) might engage with the bedroom. This led us to innovate on five main technological fronts:

- Presence detection: Halo Rise activates its sleep detection only when someone is in range of the sensor. Otherwise, the device remains in a monitoring mode, where no data is transmitted to the cloud.

- Primary-user tracking: Halo Rise distinguishes the sleep of the primary user (the user closest to the device) from that of other people or pets in the same bed, even though the respiration signal cannot be associated with individual users.

- Sleep intent detection: Halo Rise detects when the user first starts trying to sleep and distinguishes that attempt from other in-bed activities — such as reading or watching TV — to accurately measure the time it takes to fall asleep, an important indicator of sleep health.

- Sleep stage classification: Halo Rise reliably correlates respiration-driven movement signals with sleep stages.

- Smart-alarm integration: During the user’s alarm window, the Halo Rise smart alarm checks the user’s sleep stage every few minutes to detect light sleep, while also maximizing sleep duration.

Presence detection

Halo Rise has an easy setup process. To get started, a customer places Halo Rise on their bedside table facing their chest and notes in the Amazon Halo app which side of the bed they sleep on. That’s it: Halo Rise is ready to go. The radar sensor detects motion within a 3-D geometric volume that fans out from the sensor, an area called the detection zone. Within this zone, the presence detection algorithm estimates the location of the bed and an “out-of-bed” area between the bed and the device.

On-chip algorithms detect the motion and location of respiration events within the detection zone. In both cases — motion and respiration — the algorithm evaluates the quality of the signals. On that basis, it computes a score indicating its confidence that the readings are reliable and a user is present. Only if the confidence score crosses a reliability threshold does Halo Rise begin streaming sensor data to the cloud, where it is processed by the primary-user-tracking algorithm.
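The gating logic can be sketched as a confidence score compared against a threshold. Everything here is hypothetical: the `Window` fields, the logistic weighting of motion and respiration quality, the zone limits, and the 0.8 threshold are placeholders chosen only to show how a score can keep data on the device until presence is reliable.

```python
import math
from dataclasses import dataclass


@dataclass
class Window:
    """One analysis window of on-chip radar output (all fields hypothetical)."""
    motion_quality: float       # 0..1: how clean the motion signal is
    respiration_quality: float  # 0..1: how periodic the respiration signal is
    distance_m: float           # estimated range of the strongest respiration source


# ASSUMPTIONS: illustrative values, not Halo Rise parameters.
ZONE_M = (0.3, 2.0)  # near and far edges of the detection zone
W_MOTION, W_RESP, BIAS = 2.5, 4.0, -3.5
STREAM_THRESHOLD = 0.8


def presence_confidence(w: Window) -> float:
    """Logistic score that a person is present and the readings are reliable."""
    near, far = ZONE_M
    if not near <= w.distance_m <= far:
        return 0.0  # respiration source outside the detection zone
    z = BIAS + W_MOTION * w.motion_quality + W_RESP * w.respiration_quality
    return 1.0 / (1.0 + math.exp(-z))


def should_stream(w: Window) -> bool:
    """Only windows above the threshold leave the device; otherwise stay in monitoring mode."""
    return presence_confidence(w) >= STREAM_THRESHOLD


print(should_stream(Window(0.6, 0.9, 0.8)))  # True: clear, periodic breathing in range
print(should_stream(Window(0.9, 0.1, 0.8)))  # False: motion without breathing (e.g. a fan)
print(should_stream(Window(0.6, 0.9, 3.5)))  # False: outside the detection zone
```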

Primary-user tracking

We know that many of our customers share their beds, be it with other people or with pets, so our algorithms are designed to track the sleep of only the primary user. Halo Rise starts a sleep session after it detects someone’s presence within the detection zone for longer than five minutes. From there, the primary-user-tracking algorithm runs continuously in the background, sensing the closest user’s sleep stages. As long as the user sleeps on their side of the bed, and their partner sleeps on the other side, Halo Rise will track the primary user’s sleep quality irrespective of who comes to bed first and who leaves the bed last.
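The five-minute rule behaves like a debounce on the presence signal. A minimal sketch, assuming presence arrives as one boolean per 30-second window (the window length and the helper `session_start` are hypothetical):

```python
MIN_PRESENCE_S = 5 * 60  # from the article: presence for longer than five minutes


def session_start(presence, step_s=30):
    """Return the start time (s) of the first presence run lasting longer than
    MIN_PRESENCE_S, or None. `presence` holds one boolean per step_s-second window
    (the window length is an assumption)."""
    run_start = None
    for i, present in enumerate(presence):
        if not present:
            run_start = None  # any gap resets the run
            continue
        if run_start is None:
            run_start = i
        if (i - run_start + 1) * step_s > MIN_PRESENCE_S:
            return run_start * step_s
    return None


# A 2-minute visit, a gap, then 5.5 minutes of continuous presence.
print(session_start([True] * 4 + [False] + [True] * 11))  # 150
print(session_start([True] * 10))                          # None: exactly 5 min is not "longer than"
```

Returning the start of the presence run, rather than the moment the five minutes elapse, keeps those first minutes inside the session, which matters when measuring how long it takes to fall asleep. That choice is also an assumption.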

During the sleep session, Halo Rise dynamically monitors changes in the user’s distance from the sensor, the respiration signal quality, and abrupt changes in respiration patterns that indicate another person’s presence. These changes cause the algorithm to reassess whether it’s actually sensing the intended user and to ignore data unrelated to the primary user. For instance, if the user gets into bed after their partner has already fallen asleep, or if they use the restroom in the middle of the night, Halo Rise detects that and adjusts the sleep results accordingly.
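One simple way to express this reassessment is to keep a slowly updated reference distance and breathing rate for the primary user and reject epochs that jump away from it. The sketch below is illustrative only: `primary_user_mask`, its thresholds, the exponential baseline update, and the use of a per-epoch quality score for respiration signal quality are assumptions, not the production algorithm.

```python
def primary_user_mask(distances_m, qualities, rates_bpm,
                      max_jump_m=0.25, min_quality=0.5, max_rate_jump=4.0, alpha=0.1):
    """Return one boolean per epoch: True if the epoch is attributed to the primary user.

    ASSUMPTIONS: thresholds are illustrative, and a slowly updated baseline
    stands in for whatever reference the real algorithm keeps.
    """
    mask = []
    base_d = base_r = None
    for d, q, r in zip(distances_m, qualities, rates_bpm):
        if q < min_quality:
            mask.append(False)  # signal too poor to attribute to anyone
            continue
        if base_d is None:
            base_d, base_r = d, r  # first good epoch defines the primary user
        ok = abs(d - base_d) <= max_jump_m and abs(r - base_r) <= max_rate_jump
        if ok:
            # Track gradual drift (e.g. rolling over) but not abrupt jumps.
            base_d += alpha * (d - base_d)
            base_r += alpha * (r - base_r)
        mask.append(ok)
    return mask


distances = [0.80, 0.82, 0.80, 1.40, 0.81, 0.80]  # epoch 3: someone farther away
qualities = [0.9, 0.9, 0.2, 0.9, 0.9, 0.9]        # epoch 2: poor signal
rates = [14, 14, 14, 15, 22, 14]                  # epoch 4: abrupt breathing change
print(primary_user_mask(distances, qualities, rates))
# [True, True, False, False, False, True]
```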

Sleep intent detection

Another big algorithmic challenge we faced was determining when a user is quietly sitting in bed reading their Kindle or watching TV rather than trying to fall asleep. The time it takes to fall asleep (also known as sleep latency) is an important indicator of sleep health. A very short latency may be a sign of sleep deprivation, while a very long one may reflect difficulty winding down.

To address this problem, we used a combination of presence and primary-user tracking along with a machine-learning model trained and evaluated on tens of thousands of hours of sleep diaries to accurately identify when the user is trying to sleep. The model uses sensor data streamed from the device — including respiration, movement, and distance — to generate a sleep intent score. The score is then post-processed by a regularized change-point detection algorithm to determine when the user is trying to fall asleep or wake up.
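The article does not specify which regularized change-point method is used. As one standard example, optimal partitioning with a penalty per change point finds piecewise-constant segments in a score series by dynamic programming. The sketch below applies it to a made-up sleep intent score and reports the first segment whose mean reaches a hypothetical 0.5 threshold; the penalty is the regularizer, and larger values yield fewer, longer segments.

```python
import math


def optimal_partition(x, penalty):
    """Penalized least-squares change-point detection (optimal partitioning).

    Minimizes sum of within-segment squared error + penalty * (#change points).
    Returns the sorted indices where new segments start.
    """
    n = len(x)
    s, s2 = [0.0], [0.0]  # prefix sums for O(1) segment costs
    for v in x:
        s.append(s[-1] + v)
        s2.append(s2[-1] + v * v)

    def cost(i, j):  # squared error of x[i:j] around its mean
        total = s[j] - s[i]
        return (s2[j] - s2[i]) - total * total / (j - i)

    best = [-penalty] + [math.inf] * n  # best[j]: optimal cost of x[:j]
    prev = [0] * (n + 1)
    for j in range(1, n + 1):
        for i in range(j):
            c = best[i] + cost(i, j) + penalty
            if c < best[j]:
                best[j], prev[j] = c, i
    cps, j = [], n
    while j > 0:  # backtrack through the stored segment starts
        j = prev[j]
        if j > 0:
            cps.append(j)
    return sorted(cps)


def sleep_intent_onset(scores, penalty=1.0, threshold=0.5):
    """Index of the first segment whose mean intent score reaches the threshold."""
    bounds = [0] + optimal_partition(scores, penalty) + [len(scores)]
    for a, b in zip(bounds, bounds[1:]):
        if sum(scores[a:b]) / (b - a) >= threshold:
            return a
    return None


scores = [0.1, 0.2, 0.1, 0.15, 0.1] * 2 + [0.8, 0.9, 0.85, 0.9, 0.8] * 2
print(optimal_partition(scores, 1.0))   # [10]
print(sleep_intent_onset(scores))       # 10
print(sleep_intent_onset(scores, 100))  # None: penalty too strong to split
```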

Sleep stage classification

Wearable health trackers like Halo Band and Halo View use heart rate and motion signals to determine sleep stages during the night, but Halo Rise uses respiration. To learn how to reliably recognize those stages, we needed to develop new machine learning models.
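As an illustration of what a respiration signal can offer a classifier, the sketch below derives two per-epoch features: breathing rate and the coefficient of variation of breath-to-breath intervals. Breathing tends to be more regular in deep NREM sleep and more irregular in REM sleep, so variability is a plausible cue. The feature set, the zero-crossing breath detector, and the function names are assumptions; the article does not describe Halo Rise's model inputs at this level.

```python
import math
import statistics


def breath_onsets(signal, fs):
    """Times (s) of upward zero crossings of the mean-removed respiration signal."""
    mean = statistics.fmean(signal)
    x = [v - mean for v in signal]
    return [i / fs for i in range(1, len(x)) if x[i - 1] < 0 <= x[i]]


def epoch_features(signal, fs):
    """Breathing rate and breath-to-breath variability for one epoch, or None."""
    onsets = breath_onsets(signal, fs)
    intervals = [b - a for a, b in zip(onsets, onsets[1:])]
    if len(intervals) < 2:
        return None  # too few breaths to say anything
    mean_iv = statistics.fmean(intervals)
    return {
        "rate_bpm": 60.0 / mean_iv,
        "interval_cv": statistics.pstdev(intervals) / mean_iv,  # coefficient of variation
    }


fs = 10.0
regular = [math.sin(2 * math.pi * 0.25 * i / fs) for i in range(300)]  # 30 s, 15 breaths/min
irregular = []
for period_s in (3, 5, 3, 5, 3, 5):  # alternating short and long breaths
    n = int(period_s * fs)
    irregular += [math.sin(2 * math.pi * i / n) for i in range(n)]

print(epoch_features(regular, fs))    # rate near 15, interval_cv near 0
print(epoch_features(irregular, fs))  # interval_cv well above 0
```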

We pretrained a deep-learning model to predict sleep stages using a rich and diverse clinical dataset that included tens of thousands of hours of sleep data collected from academic and research sources. These data were measured using the clinical gold standard, polysomnography (PSG). PSG studies use a large array of sensors attached to the body to measure sleep, including respiratory inductance plethysmography (RIP) sensors, whose output is analogous to the respiration data measured by Halo Rise.

Pretraining the model to predict sleep stages from RIP sensors enabled it to develop meaningful representations of the relationship between respiration and sleep prior to additional training on radar datasets collected alongside PSG. To collect radar training data for the models, we partnered with sleep clinics to conduct thousands of hours of PSG studies. Ultimately, this enables our models to classify sleep stages using just a built-in radar in the comfort of a customer’s home.
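The two-stage recipe (pretrain on RIP, then continue training on radar) amounts to initializing the second stage with the first stage's weights. The sketch below shows only that idea: a two-feature logistic regression stands in for the deep network, the functions are hypothetical, and the feature rows are made-up toy values, not data from the studies described here.

```python
import math


def predict(w, x):
    """Probability of deep sleep under a logistic model; w[0] is the bias."""
    z = w[0] + sum(wk * xk for wk, xk in zip(w[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(w, X, y):
    eps = 1e-12  # guards log(0)
    return -sum(t * math.log(predict(w, x) + eps) + (1 - t) * math.log(1 - predict(w, x) + eps)
                for x, t in zip(X, y)) / len(X)


def train(X, y, w_init, lr=0.5, epochs=200):
    """Full-batch gradient descent on log loss, starting from w_init."""
    w = list(w_init)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, t in zip(X, y):
            err = predict(w, x) - t
            grad[0] += err
            for k, xk in enumerate(x, start=1):
                grad[k] += err * xk
        w = [wk - lr * g / len(X) for wk, g in zip(w, grad)]
    return w


# Toy inputs (made up for illustration): (normalized breathing rate, interval CV); 1 = deep sleep.
X_rip = [(-0.5, 0.05), (-0.3, 0.08), (0.2, 0.40), (0.4, 0.35), (-0.4, 0.06), (0.3, 0.45)]
X_radar = [(-0.4, 0.15), (-0.2, 0.18), (0.3, 0.50), (0.5, 0.42), (-0.3, 0.20), (0.1, 0.55)]
y = [1, 1, 0, 0, 1, 0]

w_pre = train(X_rip, y, [0.0, 0.0, 0.0])    # stage 1: RIP-style pretraining corpus
w_ft = train(X_radar, y, w_pre, epochs=50)  # stage 2: radar recorded alongside PSG
print(round(log_loss(w_pre, X_radar, y), 3), "->", round(log_loss(w_ft, X_radar, y), 3))
```

Fine-tuning starts from `w_pre` rather than from zeros, so what the model learned from the larger RIP corpus becomes the starting point for the radar stage.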

A smarter wake-up experience

When people wake naturally during a light sleep stage, they are most likely to feel rested, refreshed, and ready to tackle the day. Consequently, Halo Rise features a wake-up light, which gently simulates the colors and gradual brightening of a sunrise. Customers can also set an audible smart alarm that’s integrated with our sleep stage classification algorithms. Ahead of the scheduled wake-up time, the audible smart alarm monitors the user’s sleep stages and wakes them at their ideal time for getting up. This combination of wake-up light and smart alarm has been shown to increase cognitive and physical performance throughout the day.

The smart-alarm algorithms are trained around two factors: sensing when the user is in light sleep and maximizing the user’s sleep duration. For the first component, Halo Rise needs to continuously monitor sleep stages during the alarm window (the 30 minutes before a user’s scheduled alarm) to identify when the user has entered a light sleep stage; this light-sleep period is known as the “wake window.”

In this phase, our algorithms work to sense “wakeable events,” such as a change in motion or breathing. Triggering the alarm with low latency requires computing sleep stages incrementally. Unlike many sleep algorithms, Halo Rise does not require data from the entire sleep session to classify sleep stages, so its predictions can be used directly as alarm triggers while data is streamed.
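A streaming version of the alarm decision can be sketched as a loop that consumes one epoch at a time and never looks ahead. The 30-minute window comes from the article; the tuple format, the `wakeable_event` flag, and the rule "ring at the first light-sleep epoch with a wakeable event" are assumptions. This sketch covers only the first factor (detecting light sleep), not maximizing sleep duration.

```python
from datetime import datetime, timedelta

ALARM_WINDOW = timedelta(minutes=30)  # from the article: the 30 minutes before the alarm


def smart_alarm(epoch_stream, alarm_time):
    """Decide when to ring, consuming epochs one at a time as they arrive.

    epoch_stream yields (timestamp, stage, wakeable_event) tuples in time order;
    `wakeable_event` is a hypothetical flag for a change in motion or breathing.
    No future epochs are needed to make each decision.
    """
    window_start = alarm_time - ALARM_WINDOW
    for ts, stage, wakeable in epoch_stream:
        if ts >= alarm_time:
            break  # scheduled time reached: ring regardless of stage
        if ts >= window_start and stage == "light" and wakeable:
            return ts  # light sleep plus a wakeable event inside the window
    return alarm_time


day = datetime(2022, 9, 1)
stream = [
    (day.replace(hour=6, minute=25), "light", True),   # before the window: ignored
    (day.replace(hour=6, minute=35), "deep", True),    # deep sleep: keep sleeping
    (day.replace(hour=6, minute=41), "light", False),  # light but no wakeable event
    (day.replace(hour=6, minute=43), "light", True),   # ring here
]
print(smart_alarm(iter(stream), day.replace(hour=7)))  # 2022-09-01 06:43:00
```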

For the second component, the system’s models are trained to predict the latest moment to trigger the alarm during the wake window. This ensures that as the user drifts between sleep stages, they are getting those crucial minutes of additional sleep before the alarm goes off.
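One plausible way to turn "the latest moment" into a supervised training target is to score it in hindsight: given a PSG hypnogram in 30-second epochs, label the last light-sleep epoch inside the 30-minute window and compare it with what a first-light-sleep rule would have chosen. The function names and the fallback to the scheduled alarm are hypothetical.

```python
EPOCH_S = 30
WINDOW_EPOCHS = 30 * 60 // EPOCH_S  # 30-minute alarm window, in 30 s epochs


def latest_light_epoch(stages, alarm_idx, window=WINDOW_EPOCHS):
    """Hypothetical training target: the last light-sleep epoch before the alarm
    inside the alarm window, found in hindsight from a scored hypnogram.
    Falls back to the scheduled alarm epoch if there is no light sleep."""
    start = max(0, alarm_idx - window)
    for i in range(alarm_idx - 1, start - 1, -1):  # scan backward from the alarm
        if stages[i] == "light":
            return i
    return alarm_idx


def earliest_light_epoch(stages, alarm_idx, window=WINDOW_EPOCHS):
    """What a naive 'first light sleep' rule would pick, for comparison."""
    start = max(0, alarm_idx - window)
    return next((i for i in range(start, alarm_idx) if stages[i] == "light"), alarm_idx)


stages = ["deep"] * 60 + ["light"] * 10 + ["rem"] * 30 + ["light"] * 5 + ["deep"] * 15
alarm_idx = len(stages)  # alarm scheduled at the end of this 60-minute excerpt
late = latest_light_epoch(stages, alarm_idx)
early = earliest_light_epoch(stages, alarm_idx)
print(late, early, (late - early) * EPOCH_S / 60)  # 104 60 22.0
```

In this toy night, targeting the latest light-sleep epoch rather than the first one leaves the sleeper 22 more minutes in bed while still waking them from light sleep.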

A solution you can trust

To evaluate our machine learning algorithms, we conducted sleep studies, developed with input from leading sleep labs, that compared Halo Rise with PSG over thousands of hours for more than a hundred sleepers. Although sleep studies are typically conducted in sleep labs, we performed PSG studies in participants’ homes, under the supervision of registered PSG technologists, to test the device in naturalistic settings.

We used three different registered PSG technologists to reliably annotate ground truth sleep stages per the American Academy of Sleep Medicine’s scoring rules. We then compared Halo Rise’s outputs to the ground truth sleep data across 14 different sleep metrics, including time asleep, time awake, time to fall asleep, and epoch-by-epoch accuracy for every 30-second interval, following analysis guidelines from a standardized framework for sleep stage classification assessment. This evaluation was supplemented by thousands of sleep diaries from our beta trials, which expanded our evaluation to a diverse population of adults and accounted for variations in preferred sleep postures, age, body shapes, and other background conditions.
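Several of the listed metrics reduce to simple counts over two aligned hypnograms, one from the PSG scorers and one from the device, both in 30-second epochs. The sketch below computes epoch-by-epoch accuracy, total sleep time, time to fall asleep, and wake time after sleep onset, plus Cohen's kappa, a common chance-corrected agreement statistic in sleep-staging validation (the article does not say whether it is among the 14 metrics). The hypnograms are toy examples, and measuring latency from the first epoch assumes the recording starts at lights-out.

```python
EPOCH_S = 30
SLEEP = {"light", "deep", "rem"}


def summary(hyp):
    """Whole-night metrics in minutes; latency is measured from the first epoch
    (an assumption: the recording is taken to start at lights-out)."""
    onset = next((i for i, s in enumerate(hyp) if s in SLEEP), None)
    to_min = EPOCH_S / 60
    return {
        "total_sleep_min": sum(s in SLEEP for s in hyp) * to_min,
        "latency_min": None if onset is None else onset * to_min,
        "wake_after_onset_min": 0.0 if onset is None
        else sum(s == "wake" for s in hyp[onset:]) * to_min,
    }


def epoch_accuracy(ref, pred):
    """Fraction of 30 s epochs where the device agrees with the PSG scorer."""
    return sum(a == b for a, b in zip(ref, pred)) / len(ref)


def cohens_kappa(ref, pred):
    """Chance-corrected agreement across all stage labels."""
    n = len(ref)
    p_obs = epoch_accuracy(ref, pred)
    p_exp = sum(ref.count(c) * pred.count(c) for c in set(ref) | set(pred)) / (n * n)
    return (p_obs - p_exp) / (1 - p_exp)


psg = ["wake"] * 4 + ["light"] * 6 + ["deep"] * 4 + ["wake"] * 2 + ["rem"] * 4
device = ["wake"] * 3 + ["light"] * 7 + ["deep"] * 3 + ["light"] + ["wake"] * 2 + ["rem"] * 4
print(epoch_accuracy(psg, device))  # 0.9
print(summary(psg))
print(summary(device))
print(round(cohens_kappa(psg, device), 3))  # 0.864
```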

What’s next?

As we look to invent new products that help our customers live better longer, Halo Rise is an important step in giving our customers greater agency over their health and well-being. By looking holistically at the end-to-end sleep experience — not just going to sleep but also getting up in the morning — Halo Rise unlocks an entirely new way for customers to understand and manage sleep. We’re excited to help them make sense of valuable sleep data, from the quality and quantity of their sleep to their room’s environment, and deliver actionable insights and resources to improve it in the future. Halo Rise is just getting started, and we are going to learn from our customers how this technology can continue to evolve and become even more personalized to better meet their needs.