At the 2021 Conference of the European Association for Computational Linguistics (EACL), we received an honorable mention in the best-long-paper category for our paper “Hidden biases in unreliable news detection datasets”, coauthored with Xiang Zhou (while he was an Amazon intern) and Mohit Bansal from the University of North Carolina at Chapel Hill.

In this paper, we studied datasets used by the research community for developing models to automatically identify unreliable news. We found that biases in the datasets account for much of the accuracy in identifying unreliable news that previous papers reported. This suggests that models built on these datasets will not generalize well in a real-world setting.

To provide the research community a path forward, we followed up the analysis with a detailed study of the structure of the bias, guidelines for reducing the bias in existing datasets, and guidelines for developing higher-quality datasets in the future.

Data collection

We started our analysis by looking at the data collection strategies used for creating unreliable-news-article datasets. Creating such datasets requires collecting news articles and their corresponding labels (for instance, “reliable” or “unreliable”).

As expected, collecting the labels is the most challenging task. Some fact-checking websites (e.g., PolitiFact, GossipCop) assign labels to individual articles. While this provides accurate labels, the process is both time consuming and expensive, resulting in comparatively small datasets.

An approach that scales better is assigning a reliability (or bias) score to each news outlet (or site, such as cnn.com or nytimes.com). This is an easy way to create large-scale datasets, but it generates noisy labels. We studied biases in datasets that take both approaches: site-level and article-level labeling.

Keyword correlations

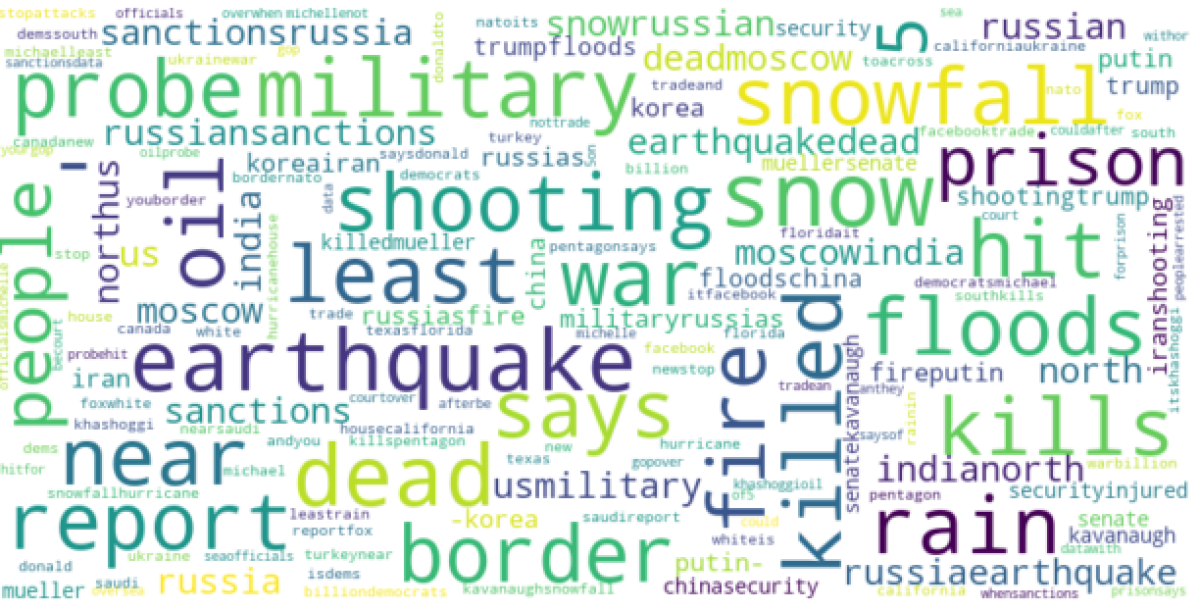

As a representative example of a dataset that is annotated at the article level, we studied the popular FakeNewsNet dataset. We trained a simple (logistic-regression) model to predict the labels (“reliable” or “unreliable”) of news items in the dataset on the basis of keywords and found that its accuracy (78%) was almost as high as that of a state-of-the-art BERT-based model (81%). Examining the keywords that drove the model’s performance, we found that celebrity names (“Brad”, “Pitt”, “Jenner”, etc.) predicted the “unreliable” label, while neutral terms like “2018” or “season” predicted the “reliable” label.

These results indicate that the ability to predict the labels of the articles in such datasets may depend on the presence of simple keywords that flag topics, such as celebrity news, rather than any deeper pattern. This implies that the dataset composition is biased, because it has strong correlations between topic words and the unreliable-news label. (It doesn’t mean that articles mentioning Brad Pitt or celebrities in general are intrinsically unreliable.)
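A quick way to look for this kind of keyword bias is to rank words by how strongly they are associated with each label. The sketch below is a minimal, dependency-free stand-in for the logistic-regression baseline described above: it uses smoothed log-odds of document frequency rather than learned weights, and the function name `salient_words`, the whitespace tokenization, and the toy articles are all assumptions made for illustration, not data from FakeNewsNet.

```python
import math
from collections import Counter


def salient_words(texts, labels, top_k=5, smoothing=1.0):
    """Rank words by smoothed log-odds of occurring in 'unreliable' vs. 'reliable' articles.

    Returns (top_unreliable, top_reliable, scores). Positive scores lean
    'unreliable', negative scores lean 'reliable'.
    """
    counts = {"reliable": Counter(), "unreliable": Counter()}
    for text, label in zip(texts, labels):
        # Count each word at most once per article (document frequency).
        counts[label].update(set(text.lower().split()))

    n_rel = sum(1 for label in labels if label == "reliable")
    n_unrel = sum(1 for label in labels if label == "unreliable")
    vocab = set(counts["reliable"]) | set(counts["unreliable"])

    scores = {}
    for word in vocab:
        p_unrel = (counts["unreliable"][word] + smoothing) / (n_unrel + 2 * smoothing)
        p_rel = (counts["reliable"][word] + smoothing) / (n_rel + 2 * smoothing)
        scores[word] = math.log(p_unrel / p_rel)

    # Sort ascending by score, breaking ties alphabetically for determinism.
    ranked = sorted(scores, key=lambda w: (scores[w], w))
    return ranked[-top_k:][::-1], ranked[:top_k], scores


if __name__ == "__main__":
    # Toy, invented headlines purely to exercise the function.
    toy_texts = [
        "brad pitt spotted with jenner",
        "pitt and jenner feud rumor",
        "season finale airs in 2018",
        "new season schedule announced for 2018",
    ]
    toy_labels = ["unreliable", "unreliable", "reliable", "reliable"]
    top_unrel, top_rel, _ = salient_words(toy_texts, toy_labels, top_k=2)
    print("lean unreliable:", top_unrel)
    print("lean reliable:", top_rel)
```

If the top-ranked words are mostly names or topic markers rather than anything related to reliability, that is a hint that a classifier may be learning topic instead of reliability.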

This is partly due to biases in the fact-checking sites’ article selections. Another source of bias is that in the process of constructing FakeNewsNet, the authors used a web search engine, with its own proprietary news-ranking and verification processes, to retrieve the full texts of the news articles (which are not provided by fact-checking sites). This sometimes results in mismatches, in which unreliable content is replaced with reliable content without an update to the label.

Site classification

We also studied the NELA dataset, which uses site-level labels. We found even more challenges with site-level labeling, mostly because the labels are weak: an article from a supposedly unreliable news source can be factual, and vice versa.

While the literature reports models that are highly accurate at labeling news articles from NELA and similar datasets as reliable or unreliable, we found that much of the accuracy is due to having articles from the same sites in both training and test data. This means that the model can ignore the task of identifying unreliable content and just learn that particular sites are reliable or unreliable.

To demonstrate this point, we conducted a “random labels” experiment, where we randomly shuffled all the site-level labels such that they no longer represented the reliability of the site but were just an arbitrary feature of the site itself. We found that the models trained using random labels performed within 2% of the accuracy of models trained on the true labels. (These models are learning to identify sites, but that’s not practically useful, because the site name is included in any given article’s web address.)
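The label-shuffling step of such an experiment can be sketched as follows. This is only an illustration under stated assumptions: the helper names `shuffle_site_labels` and `relabel_articles`, the `(text, site)` article format, and the `*.example` site names are hypothetical, and the model training itself is left out.

```python
import random


def shuffle_site_labels(site_labels, seed=0):
    """Return a new site -> label mapping with the labels permuted across sites.

    The number of 'reliable' and 'unreliable' sites is preserved, but a
    site's label no longer says anything about its actual reliability.
    """
    rng = random.Random(seed)
    sites = sorted(site_labels)
    labels = [site_labels[site] for site in sites]
    rng.shuffle(labels)
    return dict(zip(sites, labels))


def relabel_articles(articles, site_labels):
    """Give every (text, site) article the label currently assigned to its site."""
    return [(text, site, site_labels[site]) for text, site in articles]


if __name__ == "__main__":
    # Hypothetical sites and labels, for illustration only.
    true_labels = {
        "site-a.example": "reliable",
        "site-b.example": "reliable",
        "site-c.example": "unreliable",
        "site-d.example": "unreliable",
    }
    articles = [
        ("first article text", "site-a.example"),
        ("second article text", "site-c.example"),
        ("third article text", "site-c.example"),
    ]
    random_labels = shuffle_site_labels(true_labels, seed=13)
    print(relabel_articles(articles, random_labels))
```

Because every article from a site still shares one label after shuffling, a model that reaches similar accuracy on the shuffled labels is most likely recognizing the site rather than judging the content.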

We also showed that while a clean train/test site split (one in which no site appears in both sets) is necessary, it is not sufficient to measure a given model’s generalization power. We further tested different site splits and found that performance varies with how similar the sites in the test and training sets are: higher test accuracy is correlated with higher similarity between training-set and test-set sites.
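A clean site split can be produced by assigning whole sites, rather than individual articles, to the training or test set. The sketch below assumes articles are `(text, site, label)` tuples; the function name `split_by_site`, the `test_fraction` parameter, and the example sites are hypothetical. It does not try to control how similar the held-out sites are to the training sites, which has to be examined separately.

```python
import random


def split_by_site(articles, test_fraction=0.25, seed=0):
    """Split (text, site, label) articles so that no site appears in both sets."""
    rng = random.Random(seed)
    sites = sorted({site for _, site, _ in articles})
    rng.shuffle(sites)

    # Hold out at least one site, even for very small site lists.
    n_test = max(1, round(len(sites) * test_fraction))
    test_sites = set(sites[:n_test])

    train = [a for a in articles if a[1] not in test_sites]
    test = [a for a in articles if a[1] in test_sites]
    return train, test


if __name__ == "__main__":
    # Hypothetical articles, for illustration only.
    articles = [
        ("text 1", "site-a.example", "reliable"),
        ("text 2", "site-a.example", "reliable"),
        ("text 3", "site-b.example", "unreliable"),
        ("text 4", "site-c.example", "reliable"),
        ("text 5", "site-d.example", "unreliable"),
    ]
    train, test = split_by_site(articles, test_fraction=0.25, seed=1)
    print("train sites:", sorted({a[1] for a in train}))
    print("test sites:", sorted({a[1] for a in test}))
```

Running the same model over several seeds gives a rough sense of how much the measured accuracy depends on which sites happen to be held out.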

We then took properly split datasets — with low similarity between train and test sets — trained models on them, and examined what kinds of articles were most prone to be erroneously identified as reliable or unreliable. We discovered that the models are most prone to errors when the topics are politics and world news and most accurate on sports and entertainment. Reliability of news is important on any topic, but the finding that model performance is degraded on politics and world news topics underscores the importance of improving data for unreliable-news detection.
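A per-topic error breakdown of this kind is straightforward to compute once each test article has a topic tag, a gold label, and a model prediction. The sketch below assumes such `(topic, gold, predicted)` triples are already available; how topics are assigned is outside its scope, and the function name and toy records are hypothetical.

```python
from collections import defaultdict


def error_rate_by_topic(examples):
    """Return {topic: fraction of misclassified articles} for (topic, gold, predicted) triples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for topic, gold, predicted in examples:
        totals[topic] += 1
        if gold != predicted:
            errors[topic] += 1
    return {topic: errors[topic] / totals[topic] for topic in totals}


if __name__ == "__main__":
    # Invented records purely to exercise the function.
    toy = [
        ("politics", "reliable", "unreliable"),
        ("politics", "unreliable", "unreliable"),
        ("sports", "reliable", "reliable"),
        ("sports", "unreliable", "unreliable"),
    ]
    for topic, rate in sorted(error_rate_by_topic(toy).items()):
        print(f"{topic}: {rate:.2f}")
```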

Recommendations

Our paper showed that, to ensure that improvements in model performance reflect real unreliable-news detection capabilities, the community needs to make several changes in data collection, dataset construction, and experimental design. To facilitate these changes, we provide a table of recommended best practices (see below). We hope that this paper will stimulate quality improvements in unreliable-news modeling, analysis, and data. All of our code is licensed under Apache 2 and is available on GitHub.

| Data collection | Dataset construction | Experiment design |
| --- | --- | --- |
| Collect from less biased or unbiased resources (e.g., original news outlets) | Examine the most salient words to check for biases in the datasets | Apply debiasing techniques when developing models on biased datasets |
| Collect from diverse resources (in terms of sources, topics, time, etc.) | Run simple bag-of-words baselines to check how severe the bias is | Check the performance on sources/dates not in your training set |
| Collect precise article-level labels, if possible | Provide train/dev/test splits with non-overlapping source/time | Check the performance on sources with limited examples |
| -- | -- | Test your model on multiple complementary datasets (e.g., with different domains, styles, etc.) |