AI models that can engage in open-domain dialogue have made considerable progress recently; the top finishers in the Alexa Prize challenge are one example.

But dialogue models still struggle with conversations that require commonsense inferences. For example, if someone says, “I'm going to perform in front of a thousand people tomorrow,” the listener might infer that the speaker is feeling nervous and respond, “Relax, you'll do great!”

To aid the research community in the development of commonsense dialogue models, we are publicly releasing a large, multiturn, open-domain dialogue dataset that is focused on commonsense knowledge.

Our dataset contains more than 11,000 dialogues we collected with the aid of workers recruited through Amazon Mechanical Turk.

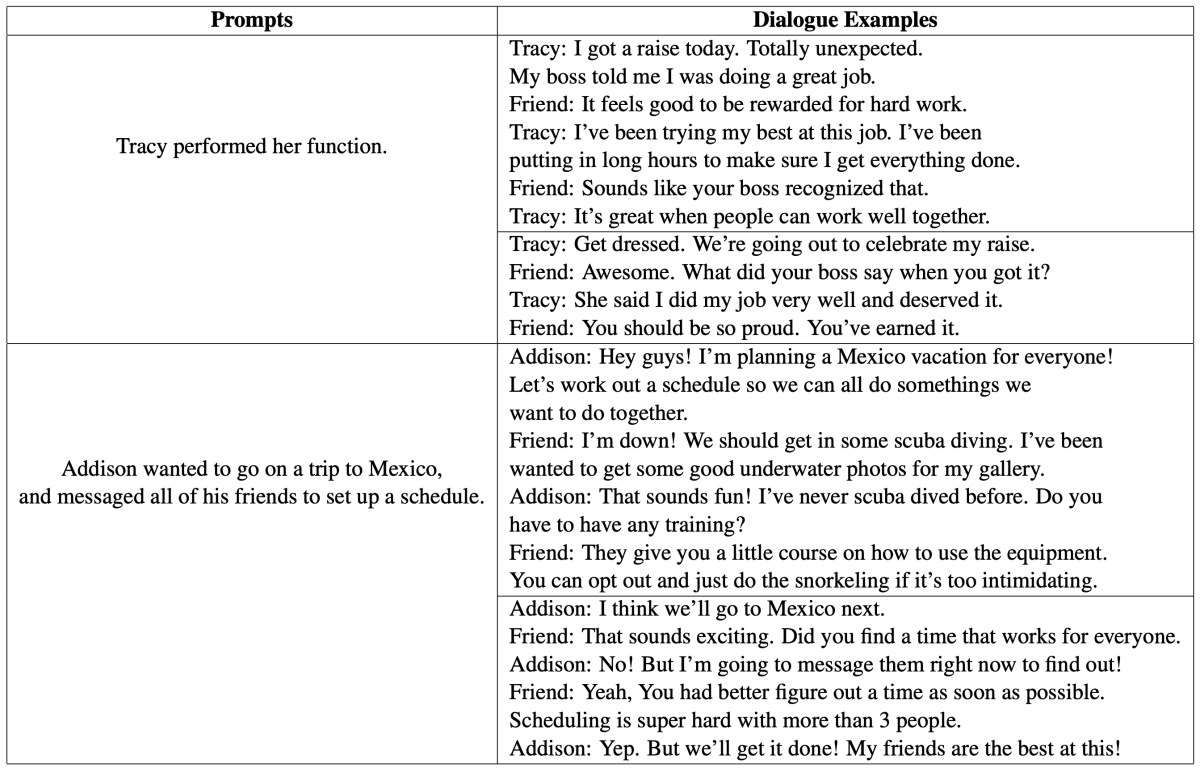

To create dialogue examples, we provided workers with prompts culled from SocialIQA, a large-scale benchmark for commonsense reasoning about social situations, which is based on the ATOMIC knowledge graph. The prompts are sentences like “Addison wanted to go on a trip to Mexico and messaged all of his friends to set up a schedule” or “Tracy performed her function.”

We showed each prompt to five people, and we asked them to create multiturn dialogues based on those prompts. On average, each dialogue had 5.7 turns. Below are some sample prompts and dialogues:

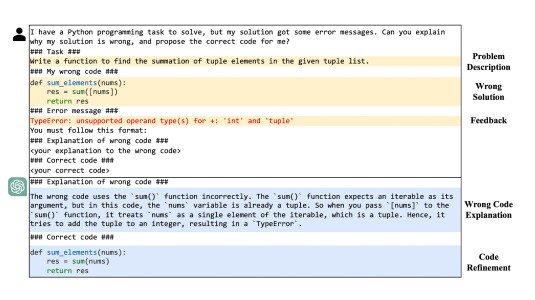

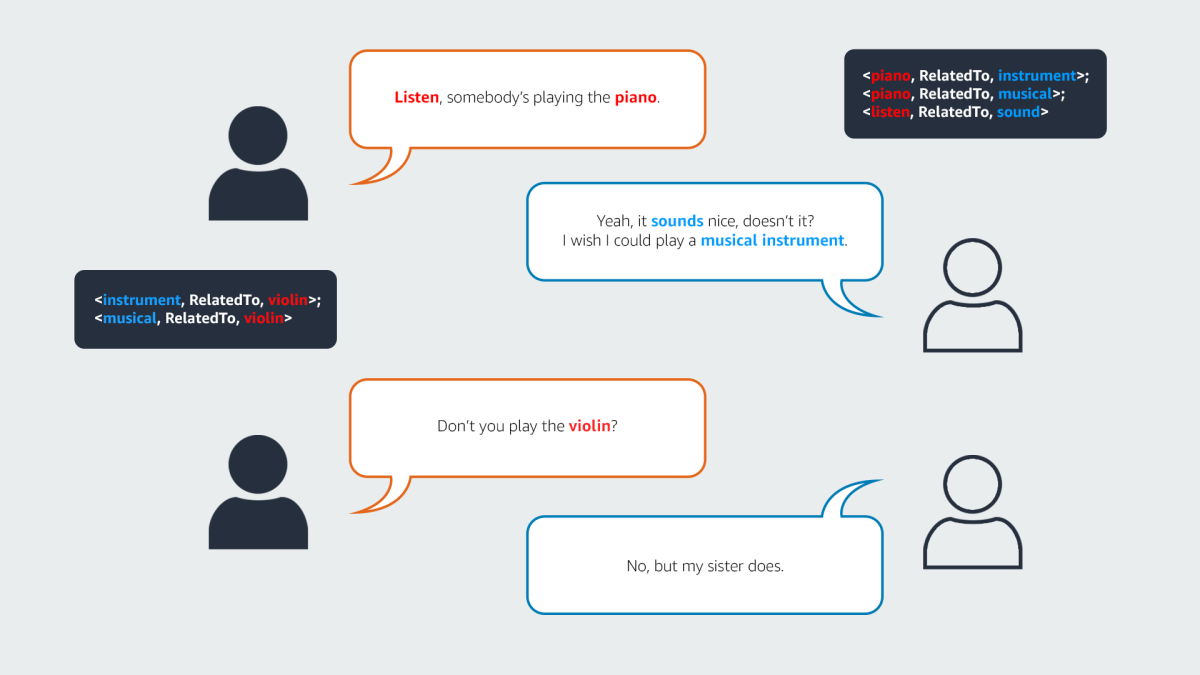

From the dialogues, we extracted examples of commonsense inference by using the public commonsense knowledge graph ConceptNet. ConceptNet encodes semantic triples with the structure <entity1, relationship, entity2>, such as <doctor, LocateAt, hospital> or <specialist, TypeOf, doctor>.

From our candidate dialogues, we kept those in which concepts mentioned in successive dialogue turns were related through ConceptNet triples, as illustrated in the following figure. This reduced the number of dialogues from 25,000 to about 11,000.
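The filtering step can be sketched roughly as follows. This is a minimal illustration, not the pipeline used to build the dataset: the two triples are the examples quoted above standing in for the full graph, concepts are matched by naive token lookup, and the sketch assumes that a single linked pair of successive turns is enough to keep a dialogue. The function names are hypothetical.

```python
# Toy stand-in for ConceptNet: (entity1, relationship, entity2) triples.
# Only the two examples quoted in the text; a real run would load the full graph.
TRIPLES = {
    ("doctor", "LocateAt", "hospital"),
    ("specialist", "TypeOf", "doctor"),
}


def related_pairs(triples):
    """Unordered pairs of concepts that are directly linked by some triple."""
    return {frozenset((head, tail)) for head, _, tail in triples}


def concepts_in(turn, vocabulary):
    """Concepts mentioned in a turn, found by naive lowercase token matching."""
    tokens = {tok.strip(".,!?\"'").lower() for tok in turn.split()}
    return tokens & vocabulary


def has_commonsense_link(dialogue, triples=TRIPLES):
    """True if any two successive turns mention concepts joined by a triple.

    Assumption: one linked pair of turns is enough to keep the dialogue.
    """
    pairs = related_pairs(triples)
    vocabulary = {concept for pair in pairs for concept in pair}
    for prev_turn, next_turn in zip(dialogue, dialogue[1:]):
        for a in concepts_in(prev_turn, vocabulary):
            for b in concepts_in(next_turn, vocabulary):
                if a != b and frozenset((a, b)) in pairs:
                    return True
    return False
```

A candidate set would then be filtered with something like `[d for d in dialogues if has_commonsense_link(d)]`, where each dialogue is a list of turn strings.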

Effectiveness study

To study the impact of commonsense-oriented datasets on dialogue models, we trained a state-of-the-art pre-trained language model, GPT-2, on two different training sets. One is a combination of existing datasets. The other includes our new dataset and dialogues from existing datasets that we’ve identified as being commonsense-oriented using ConceptNet.

To evaluate the models’ performance, we used two automatic metrics: ROUGE, which measures the overlap between a generated response and a reference response for a given dialogue history, and perplexity, which measures a model’s likelihood of generating the reference response.
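As a rough illustration of the two automatic metrics, the sketch below computes a unigram-overlap F1 score (the ROUGE-1 variant, chosen here as an assumption since the text does not name a variant) and perplexity from per-token log-probabilities. The function names are hypothetical, tokenization is plain whitespace splitting, and the log-probabilities are assumed to be natural logs supplied by whatever language model is being evaluated.

```python
import math
from collections import Counter


def rouge1_f1(candidate, reference):
    """Unigram-overlap F1 between a generated and a reference response."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Clipped overlap: each token counts at most as often as it appears in both.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def perplexity(token_log_probs):
    """Perplexity of a reference response given its per-token log-probabilities.

    Lower is better: the model assigns higher likelihood to the reference.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))
```

Higher overlap scores and lower perplexity both indicate that the model's behavior is closer to the reference response.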

We also conducted a human study to evaluate the different models’ outputs on a subset of test dialogues. As we report in a paper we presented at SIGDIAL 2021, the model trained using our dataset and the commonsense-filtered data outperformed the baselines on all three measures: ROUGE, perplexity, and human evaluation.

In the paper, we propose an automatic metric that focuses on the commonsensical aspect of response quality. The metric uses a regression model that factors in features such as length, the likelihood scores from neural models such as DialoGPT, and the number of one-hop and two-hop triples from ConceptNet that can be found between the dialogue history and the current response turn.
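The kind of feature vector such a regression model might consume can be sketched as follows. This is an illustration under stated assumptions, not the feature extractor from the paper: the graph is the two example triples treated as undirected, the concepts in the history and response are assumed to be already extracted, the likelihood score is passed in rather than computed by a neural model, and all function names are hypothetical.

```python
from collections import defaultdict

# Toy stand-in for ConceptNet, using the example triples quoted earlier.
TRIPLES = [
    ("doctor", "LocateAt", "hospital"),
    ("specialist", "TypeOf", "doctor"),
]


def build_neighbors(triples):
    """Adjacency map over concepts; edges are treated as undirected (assumption)."""
    neighbors = defaultdict(set)
    for head, _, tail in triples:
        neighbors[head].add(tail)
        neighbors[tail].add(head)
    return neighbors


def hop_counts(history_concepts, response_concepts, neighbors):
    """Count concept pairs linked directly (one hop) or via one intermediate (two hops)."""
    one_hop = two_hop = 0
    for a in history_concepts:
        for b in response_concepts:
            if a == b:
                continue
            near_a = neighbors.get(a, set())
            near_b = neighbors.get(b, set())
            if b in near_a:
                one_hop += 1
            elif near_a & near_b:
                two_hop += 1
    return one_hop, two_hop


def features(history_concepts, response, response_concepts, likelihood, neighbors):
    """Feature vector: response length, model likelihood score, hop counts."""
    one_hop, two_hop = hop_counts(history_concepts, response_concepts, neighbors)
    return [len(response.split()), likelihood, one_hop, two_hop]
```

Vectors like these, paired with human-evaluation scores, could then be fed to any standard regression fitter.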

We trained the model on human-evaluation scores for responses generated by the dialogue models we used in our experiments. In tests, our metric showed higher correlation with human-annotation scores than either a neural network trained to predict human assessment or a regression model that didn’t use ConceptNet features.
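One common way to quantify agreement between a metric and human judgments is a correlation coefficient. The sketch below computes the Pearson coefficient; that choice is an assumption made for illustration, since the text above does not say which correlation measure was used, and the function name is hypothetical.

```python
import math


def pearson(metric_scores, human_scores):
    """Pearson correlation between automatic metric scores and human scores."""
    if len(metric_scores) != len(human_scores) or len(metric_scores) < 2:
        raise ValueError("need two equal-length sequences with at least two items")
    mean_m = sum(metric_scores) / len(metric_scores)
    mean_h = sum(human_scores) / len(human_scores)
    cov = sum((m - mean_m) * (h - mean_h)
              for m, h in zip(metric_scores, human_scores))
    var_m = sum((m - mean_m) ** 2 for m in metric_scores)
    var_h = sum((h - mean_h) ** 2 for h in human_scores)
    if var_m == 0 or var_h == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_m * var_h)
```

Values close to 1 mean the metric ranks and scales responses much as human annotators do; values near 0 mean it carries little information about human judgments.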

Looking ahead

We are happy to release our dataset to help with research in dialogue response generation. We have conducted only preliminary research with the data and are hoping that the community will use the data for research on commonsense dialogue models. There are many interesting research questions to pursue, such as: Do we need to explicitly perform commonsense reasoning for response generation? Or can end-to-end models do this implicitly?

Our work on the automatic metric is also just a beginning. We don’t yet have a good understanding of how to determine whether a response is appropriate or commonsensical, from either a psycholinguistic or a model-development point of view. We are looking forward to seeing more advances from the community in these and other related directions.