Amazon scientists are continuously expanding Alexa’s natural-language-understanding (NLU) capabilities to make Alexa smarter, more useful, and more engaging.

We also enable external developers to build and deploy their own Alexa skills through the Alexa Skills Kit (ASK), allowing for an effectively unlimited expansion of Alexa’s NLU capabilities for our customers. (There are currently more than 40,000 Alexa skills.) In general, the machine learning models that provide NLU functionality require annotated data for training. Skill developers, particularly external ASK developers with limited resources or experience, could build skills much more efficiently if they spent less time on data collection and annotation.

At the upcoming Human Language Technologies Conference of the North American Chapter of the Association for Computational Linguistics (NAACL 2018), we will present a technique that lets us scale Alexa’s NLU capabilities faster and with less data. Our paper is called “Fast and Scalable Expansion of Natural Language Understanding Functionality for Intelligent Agents”.

Our work uses transfer learning and deep learning to rapidly develop NLU models in low-resource settings by transferring knowledge from mature NLU functions. In doing so, we exploit linguistic patterns that are shared across domains. For example, named entities such as cities, times, and people, as well as conversational phrases such as ‘tell me about’, ‘what is’, and ‘can you play’, appear in many domains, including Music, Local Search, Video, Calendar, and Question Answering. We therefore reuse large amounts of existing annotated data from mature domains such as Music or Weather to bootstrap accurate models for new domains or skills that have very little in-domain training data.

Our paper builds on prior work in deep learning and transfer learning. Deep recurrent models and multitask learning architectures are state of the art for many natural-language-processing (NLP) problems. Transfer learning is common in computer vision, where deep networks are typically trained on large image datasets and their lower layers learn task-independent features that transfer well to other tasks. We propose a similar approach for NLP, where such practices are less explored. Within NLP, there is rich related work both in the earlier domain adaptation literature and in recent papers that use deep learning to transfer representations across domains and languages.

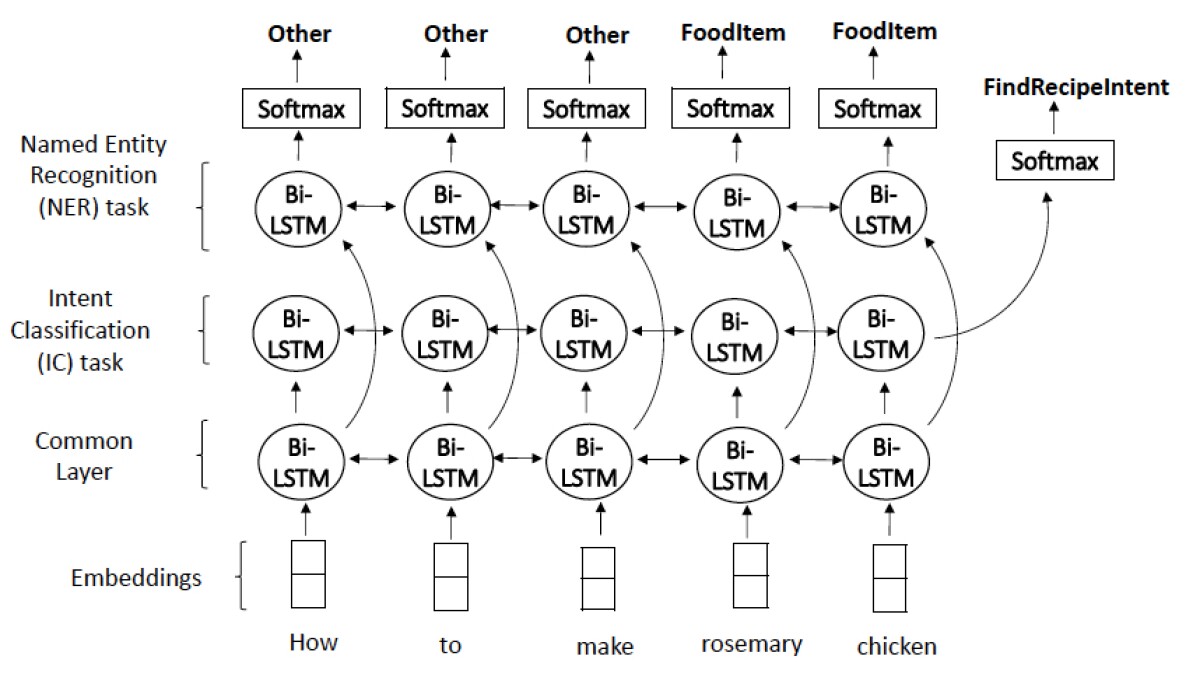

Our NLU system includes models for user intent classification (IC) and named-entity recognition (NER): IC determines what the user wants, such as “PlayMusic”, and NER identifies named entities, such as people, dates, and times. Our methodology uses deep multitask bidirectional LSTM (bi-LSTM) models to learn the IC and NER tasks jointly. Our network (illustrated in the figure above) consists of a common bi-LSTM layer, on top of which sit two task-specific bi-LSTM layers, one for NER and one for IC.
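To make the data flow concrete, here is a minimal, dependency-free Python sketch of the topology described above: a shared bi-LSTM whose outputs feed one NER-specific and one IC-specific bi-LSTM. It is an illustration under stated assumptions, not the production model. The weights are random rather than trained, the dimensions and class names (`LSTM`, `BiLSTM`, `MultitaskNLU`) are hypothetical, the linear output layers are our addition, and the mean pooling that turns per-token IC states into one utterance-level score vector is an assumption, since the post does not say how the IC branch summarizes the utterance.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows, cols, rng):
    # Small random weights; a real model would learn these by backpropagation.
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTM:
    """One-direction LSTM; each weight row covers [input; previous hidden; bias]."""

    def __init__(self, in_dim, hid_dim, rng):
        self.hid = hid_dim
        self.w = rand_matrix(4 * hid_dim, in_dim + hid_dim + 1, rng)

    def run(self, xs):
        n = self.hid
        h, c, outs = [0.0] * n, [0.0] * n, []
        for x in xs:
            pre = matvec(self.w, list(x) + h + [1.0])
            i = [sigmoid(p) for p in pre[:n]]             # input gate
            f = [sigmoid(p) for p in pre[n:2 * n]]        # forget gate
            g = [math.tanh(p) for p in pre[2 * n:3 * n]]  # candidate cell values
            o = [sigmoid(p) for p in pre[3 * n:]]         # output gate
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
            outs.append(h)
        return outs


class BiLSTM:
    """Concatenates a left-to-right and a right-to-left LSTM state at every token."""

    def __init__(self, in_dim, hid_dim, rng):
        self.fwd, self.bwd = LSTM(in_dim, hid_dim, rng), LSTM(in_dim, hid_dim, rng)

    def run(self, xs):
        back = self.bwd.run(xs[::-1])[::-1]  # re-align backward states with tokens
        return [f + b for f, b in zip(self.fwd.run(xs), back)]


class MultitaskNLU:
    """Shared bi-LSTM feeding one NER-specific and one IC-specific bi-LSTM."""

    def __init__(self, emb_dim, hid_dim, n_slot_labels, n_intents, seed=0):
        rng = random.Random(seed)
        self.shared = BiLSTM(emb_dim, hid_dim, rng)
        self.ner_lstm = BiLSTM(2 * hid_dim, hid_dim, rng)
        self.ic_lstm = BiLSTM(2 * hid_dim, hid_dim, rng)
        self.ner_out = rand_matrix(n_slot_labels, 2 * hid_dim, rng)
        self.ic_out = rand_matrix(n_intents, 2 * hid_dim, rng)

    def forward(self, token_embeddings):
        shared = self.shared.run(token_embeddings)
        # NER: one label-score vector per token.
        ner_scores = [matvec(self.ner_out, h) for h in self.ner_lstm.run(shared)]
        # IC: one score vector per utterance; mean pooling is an assumption here.
        ic_states = self.ic_lstm.run(shared)
        pooled = [sum(col) / len(ic_states) for col in zip(*ic_states)]
        return ner_scores, matvec(self.ic_out, pooled)


model = MultitaskNLU(emb_dim=8, hid_dim=4, n_slot_labels=5, n_intents=3)
utterance = [[0.1] * 8 for _ in range(4)]  # hypothetical 4-token utterance embeddings
ner_scores, ic_scores = model.forward(utterance)
print(len(ner_scores), len(ner_scores[0]), len(ic_scores))  # 4 5 3
```

A real implementation would use a deep learning framework with trained embeddings; the sketch only shows that NER yields one score vector per token while IC yields one per utterance, and that both branches read from the same shared layer.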

Transfer learning happens in two stages. In the first stage, called pretraining, we build generic multitask bi-LSTM models trained on millions of annotated utterances from existing, high-volume domains; during this stage, the lower bi-LSTM layers learn domain-independent embeddings for words and phrases. When a small amount of annotated data for a new domain becomes available, we begin the second stage, fine-tuning, in which the generic model is adapted to the new data. We first replace the upper network layers, resetting their weights and resizing them to fit the new problem, while the lower layers are initialized with the weights learned during pretraining. We then retrain the whole model on the available in-domain data. This allows us to effectively transfer knowledge from the large pool of annotated data in mature domains to the new domain.
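The fine-tuning initialization can be sketched as a transformation on named parameter groups. This Python sketch assumes, purely for illustration, that the "upper" layers are the label-dependent output layers (hypothetically named `ner_output` and `ic_output`), whose row counts must match the new domain's slot-label and intent inventories; every other group, including the shared bi-LSTM weights, is copied from the pretrained model. The layer names, the initialization range, and the toy weights are all hypothetical.

```python
import copy
import random

# Hypothetical mapping from "upper" layer name to the label inventory that sizes it.
# Treating only the output layers as "upper" is an assumption for illustration.
UPPER_LAYERS = {"ner_output": "slot_labels", "ic_output": "intents"}


def init_for_fine_tuning(pretrained, new_label_counts, seed=0):
    """Copy lower-layer weights; reset and resize label-dependent upper layers."""
    rng = random.Random(seed)
    params = {}
    for name, weights in pretrained.items():
        if name in UPPER_LAYERS:
            rows = new_label_counts[UPPER_LAYERS[name]]  # resize to the new domain
            cols = len(weights[0])  # the layer's input width is unchanged
            params[name] = [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                            for _ in range(rows)]  # fresh random weights
        else:
            params[name] = copy.deepcopy(weights)  # keep what pretraining learned
    return params


# Toy pretrained model; real layers would hold far more weights.
pretrained = {
    "shared_bilstm": [[0.5, -0.2], [0.1, 0.3]],
    "ner_output": [[0.2, 0.2] for _ in range(40)],  # 40 slot labels (made up)
    "ic_output": [[0.1, 0.1] for _ in range(60)],   # 60 intents (made up)
}
new_model = init_for_fine_tuning(pretrained, {"slot_labels": 6, "intents": 4})
print(len(new_model["ner_output"]), len(new_model["ic_output"]))  # 6 4
print(new_model["shared_bilstm"] == pretrained["shared_bilstm"])  # True
```

Nothing is frozen in this scheme: all returned parameters, copied or freshly initialized, would then be updated when the whole model is retrained on the new domain's data.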

We experimented with this approach on hundreds of domains, from both first-party, Amazon-developed Alexa functions and Alexa skills developed by third parties. ASK skills represent a very-low-resource setting: developers typically provide only a few hundred examples of training utterances for each of their skill models. We evaluated our methods on 200 skills and attained an average error reduction of 14% relative to strong baselines that do not use transfer learning. This allows us to significantly increase the accuracy of third-party developers’ NLU models without requiring them to annotate more data.
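Relative error reduction is measured against the baseline's error, not in absolute percentage points. The sketch below uses made-up error rates for three hypothetical skills, and it assumes the reported figure is a mean of per-skill relative reductions; the post does not say how the average over 200 skills is computed.

```python
def relative_error_reduction(baseline_error, new_error):
    """Fraction of the baseline's errors eliminated by the new model."""
    return (baseline_error - new_error) / baseline_error


# Hypothetical (baseline error, transfer-learning error) pairs, not data from the paper.
skills = [(0.10, 0.085), (0.30, 0.27), (0.20, 0.166)]
reductions = [relative_error_reduction(b, n) for b, n in skills]
average = sum(reductions) / len(reductions)
print([round(r, 2) for r in reductions], round(average, 2))  # [0.15, 0.1, 0.17] 0.14
```

For the first hypothetical skill, the error drops by only 1.5 percentage points, yet that is a 15% relative reduction.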

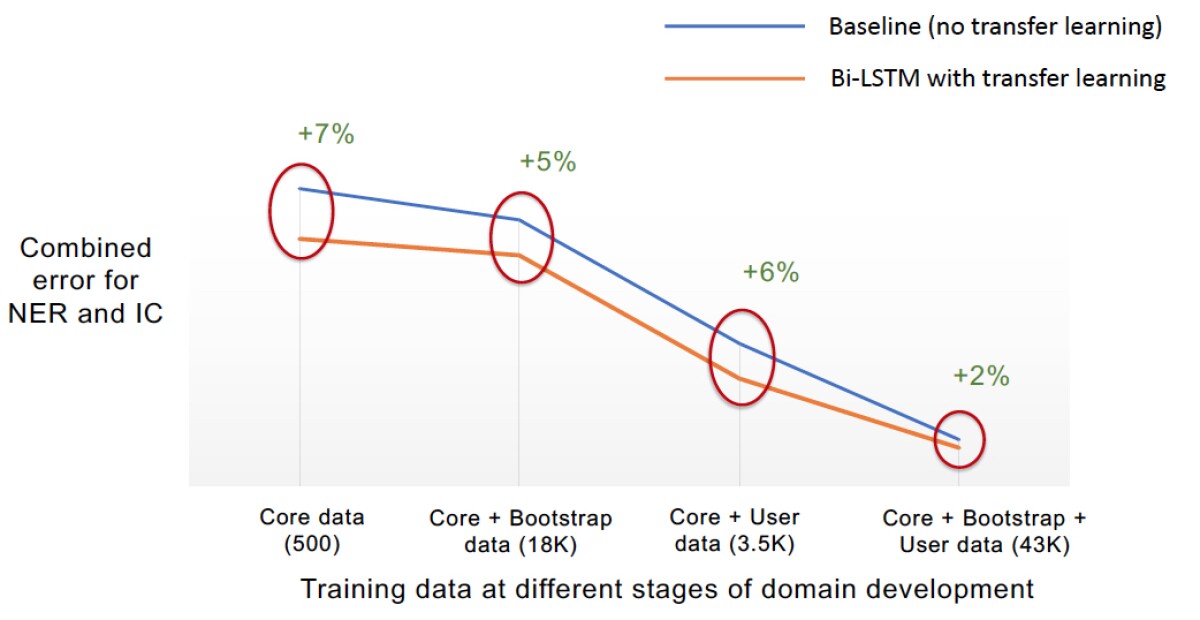

For domains developed by Amazon scientists and engineers, we simulated the early stages of domain development, when training data gradually becomes available through internal data collection. The figure above shows the combined NER and IC error at various stages for both a strong baseline that does not use transfer learning and our proposed transfer learning model. Our model consistently outperforms the baseline, with the largest gains at the earliest, lowest-resource stages of domain development (up to 7% relative error reduction). As a result, we can reach high NLU accuracy with less data, reducing the need for data collection and letting us deliver new functionality to Alexa users more rapidly.

Finally, we found that, in addition to providing higher accuracy, fine-tuning our pretrained models converges about five times as fast as training bi-LSTMs "from scratch" (i.e., without transfer learning). This shortens turnaround time when training bi-LSTM models for new functionality.

Paper: "Fast and Scalable Expansion of Natural Language Understanding Functionality for Intelligent Agents"

Acknowledgments: Anuj Goyal, Spyros Matsoukas