Earlier this month at the International Conference on Computational Linguistics (Coling), we were honored to receive the best-paper award in the industry track for our paper “Leveraging user paraphrasing behavior in dialog systems to automatically collect annotations for long-tail utterances.”

In the paper, we investigate how to automatically create training data for Alexa’s natural-language-understanding systems by identifying cases in which customers issue a request, then rephrase it when the initial response is unsatisfactory.

Initial experiments with our system suggest that it could be particularly useful in helping Alexa learn to handle the long tail of unusually phrased requests, which are collectively numerous but individually rare.

First, some terminology. Alexa’s natural-language-understanding (NLU) systems characterize most customer requests according to intent, slot, and slot value. In the request “Play ‘Blinding Lights’ by the Weeknd”, for instance, the intent is PlayMusic, and the slot values “Blinding Lights” and “the Weeknd” fill the slots SongName and ArtistName.

We refer to different expressions of the same intent, using the same slots and slot values, as paraphrases: “I want to hear ‘Blinding Lights’ by the Weeknd” and “Play the Weeknd song ‘Blinding Lights’” are paraphrases. The general form of a request — “Play <SongName> by <ArtistName>” — is called a carrier phrase.
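To make the terminology concrete, here is a minimal sketch of how an intent, its slots, and a carrier phrase might be represented. The `Annotation` class and the `fill_carrier` helper are hypothetical names chosen for illustration; they are not part of Alexa's NLU system.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    """Hypothetical container for one NLU interpretation."""
    intent: str
    slots: dict  # slot name -> slot value


def fill_carrier(carrier: str, slots: dict) -> str:
    """Substitute slot values into a carrier phrase such as 'play <SongName>'."""
    text = carrier
    for name, value in slots.items():
        text = text.replace(f"<{name}>", value)
    return text


annotation = Annotation(
    intent="PlayMusic",
    slots={"SongName": "Blinding Lights", "ArtistName": "the Weeknd"},
)

# Two carrier phrases for the same intent, filled with the same slot values,
# produce two paraphrases of one request.
first = fill_carrier("play <SongName> by <ArtistName>", annotation.slots)
second = fill_carrier("i want to hear <SongName> by <ArtistName>", annotation.slots)
print(first)
print(second)
```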

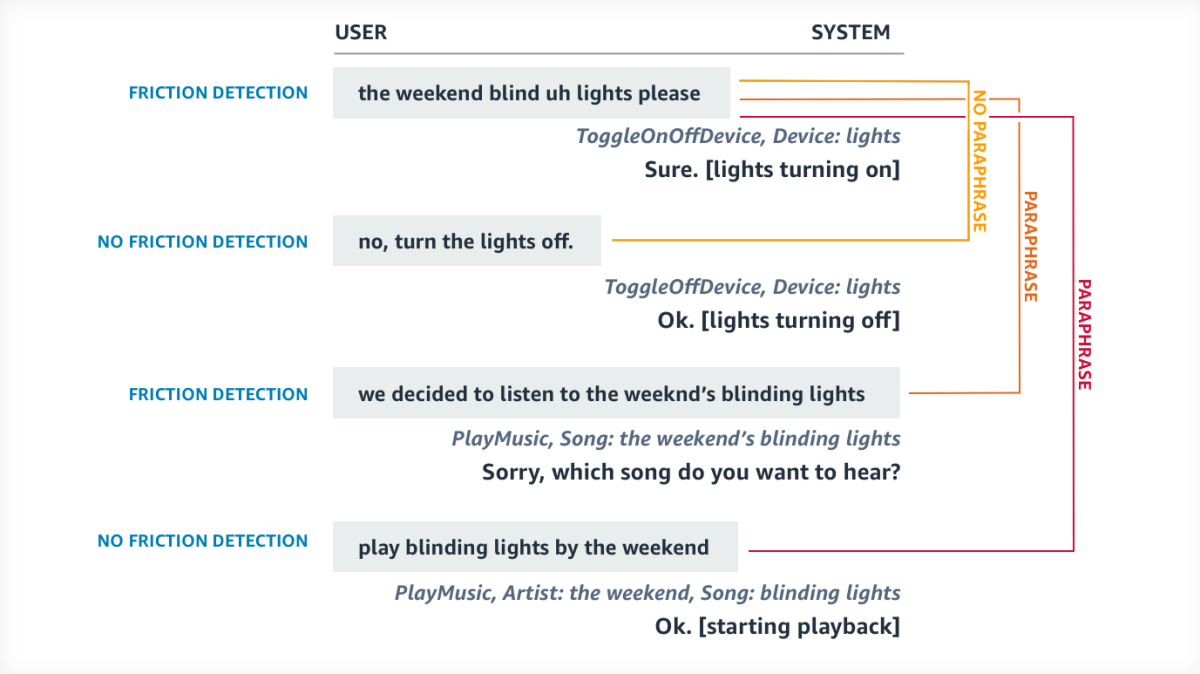

The situation we investigate is one in which a customer issues a request then rephrases it — perhaps interrupting the initial response — in a way that leads to successful completion of an interaction. Our goal is to automatically map the intent and slot labels from the successful request onto the unsuccessful one and to use the annotated example that results as additional training data for Alexa’s NLU system.

This approach is similar to the one used by Alexa’s self-learning model, which already corrects millions of errors — in either Alexa’s NLU outputs or customers’ requests — each month. But self-learning works online and leaves the underlying NLU models unchanged; our approach works offline and modifies the models. As such, the two approaches are complementary.

Our system has three different modules. The first two are machine learning models, the third a hand-crafted algorithm:

- the paraphrase detector determines whether an initial request and a second request that follows it within some short, fixed time span are in fact paraphrases of each other;

- the friction detector determines whether the paraphrases resulted in successful or unsuccessful interactions with Alexa; and

- the label projection algorithm maps the slot values from the successful request onto the words of the unsuccessful one.

Paraphrase detector

Usually, paraphrase detection models are trained on existing data sets of labeled pairs of sentences. But those data sets are not well suited to goal-directed interactions with voice agents. So to train our paraphrase detector, we synthesize a data set containing both positive and negative examples of paraphrased utterances.

To produce each positive example, we inject the same slots and slot values into two randomly selected carrier phrases that express the same intent.
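A rough sketch of that positive-example step might look like the following. The `CARRIERS` table and the function names are assumptions made for illustration, as is the choice to always draw two distinct carrier phrases.

```python
import random

# Hypothetical carrier phrases, grouped by intent.
CARRIERS = {
    "PlayMusic": [
        "play <SongName> by <ArtistName>",
        "i want to hear <SongName> by <ArtistName>",
        "play the <ArtistName> song <SongName>",
    ],
}


def fill(carrier, slots):
    """Replace each <SlotName> placeholder with its slot value."""
    for name, value in slots.items():
        carrier = carrier.replace(f"<{name}>", value)
    return carrier


def make_positive_pair(intent, slots, rng):
    """Fill two distinct, randomly chosen carrier phrases with the same slots."""
    carrier_a, carrier_b = rng.sample(CARRIERS[intent], 2)
    return fill(carrier_a, slots), fill(carrier_b, slots), 1  # label 1 = paraphrase


rng = random.Random(0)  # seeded only to make the sketch repeatable
pair = make_positive_pair(
    "PlayMusic",
    {"SongName": "Blinding Lights", "ArtistName": "the Weeknd"},
    rng,
)
print(pair)
```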

To produce negative examples, we take the intent and slots associated with a given request and vary at least one of them. For instance, the request “play ‘Blinding Lights’ by the Weeknd” has the intent and slots {PlayMusic, SongName, ArtistName}. We might vary the slot ArtistName to the slot RoomName, producing the new request “play ‘Blinding Lights’ in the kitchen”. We then pair the altered sentence with the original.

To maximize the utility of the negative-example pairs, we want to make them as difficult to distinguish as possible, so the variations are slight. We also restrict ourselves to substitutions — such as RoomName for ArtistName — that show up in real data.
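The negative-example step could be sketched as below. The whitelist `ALLOWED_SWAPS`, the phrase fragments, and the sample slot value are all hypothetical stand-ins; in practice the allowed substitutions would come from real data, as described above.

```python
# Hypothetical whitelist of slot substitutions assumed to occur in real data.
ALLOWED_SWAPS = {("ArtistName", "RoomName")}

# Hypothetical phrase fragments and sample values for each slot.
FRAGMENTS = {"ArtistName": "by <ArtistName>", "RoomName": "in <RoomName>"}
SAMPLE_VALUES = {"RoomName": "the kitchen"}


def fill(carrier, slots):
    """Replace each <SlotName> placeholder with its slot value."""
    for name, value in slots.items():
        carrier = carrier.replace(f"<{name}>", value)
    return carrier


def make_negative_pair(carrier, slots, old_slot, new_slot):
    """Swap one slot for another and pair the altered request with the original."""
    if (old_slot, new_slot) not in ALLOWED_SWAPS:
        raise ValueError(f"substitution {old_slot} -> {new_slot} is not whitelisted")
    altered_carrier = carrier.replace(FRAGMENTS[old_slot], FRAGMENTS[new_slot])
    altered_slots = {k: v for k, v in slots.items() if k != old_slot}
    altered_slots[new_slot] = SAMPLE_VALUES[new_slot]
    return fill(carrier, slots), fill(altered_carrier, altered_slots), 0  # 0 = not a paraphrase


negative = make_negative_pair(
    "play <SongName> by <ArtistName>",
    {"SongName": "Blinding Lights", "ArtistName": "the Weeknd"},
    "ArtistName",
    "RoomName",
)
print(negative)
```

Because only one slot changes, the two sentences share most of their words, which is what makes the pair a hard negative.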

Friction detector

As inputs to the friction detection module, we use four types of features:

- the words of the utterance;

- the original intent and slot classifications from the NLU model;

- the confidence scores from the NLU models and the automatic speech recognizer;

- the status code returned by the system that handles a given intent — for instance, a report from the music player on whether it found a song title that fit the predicted value for the slot SongName.

Initially, we also factored in information about the customer’s response to Alexa’s handling of a request, such as whether the customer barged in to stop playback of a song. But that information did not improve performance, and because it complicated the model, we dropped it.

The output of the friction model is a binary score, indicating a successful or unsuccessful interaction. Only paraphrases occurring within a short span of time, the first of which is unsuccessful and the second of which is successful, pass to our third module, the label projection algorithm.
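The gating rule in the previous paragraph can be sketched as a simple predicate. The `Turn` record, the `had_friction` flag standing in for the friction model's output, and the 30-second window are assumptions; the exact time span is not stated here.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """Hypothetical record of one request and the friction model's verdict."""
    text: str
    timestamp: float    # seconds since some reference point
    had_friction: bool  # True = unsuccessful interaction


MAX_GAP_SECONDS = 30.0  # assumed value; the text only says "a short span of time"


def passes_to_label_projection(first, second, is_paraphrase, max_gap=MAX_GAP_SECONDS):
    """Keep a pair only if it is a paraphrase, close in time, and fails then succeeds."""
    within_window = 0 <= second.timestamp - first.timestamp <= max_gap
    return (
        is_paraphrase
        and within_window
        and first.had_friction
        and not second.had_friction
    )


failed = Turn("play blinding light by weekend", 0.0, had_friction=True)
retried = Turn("play blinding lights by the weeknd", 8.0, had_friction=False)
print(passes_to_label_projection(failed, retried, is_paraphrase=True))
```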

Label projection

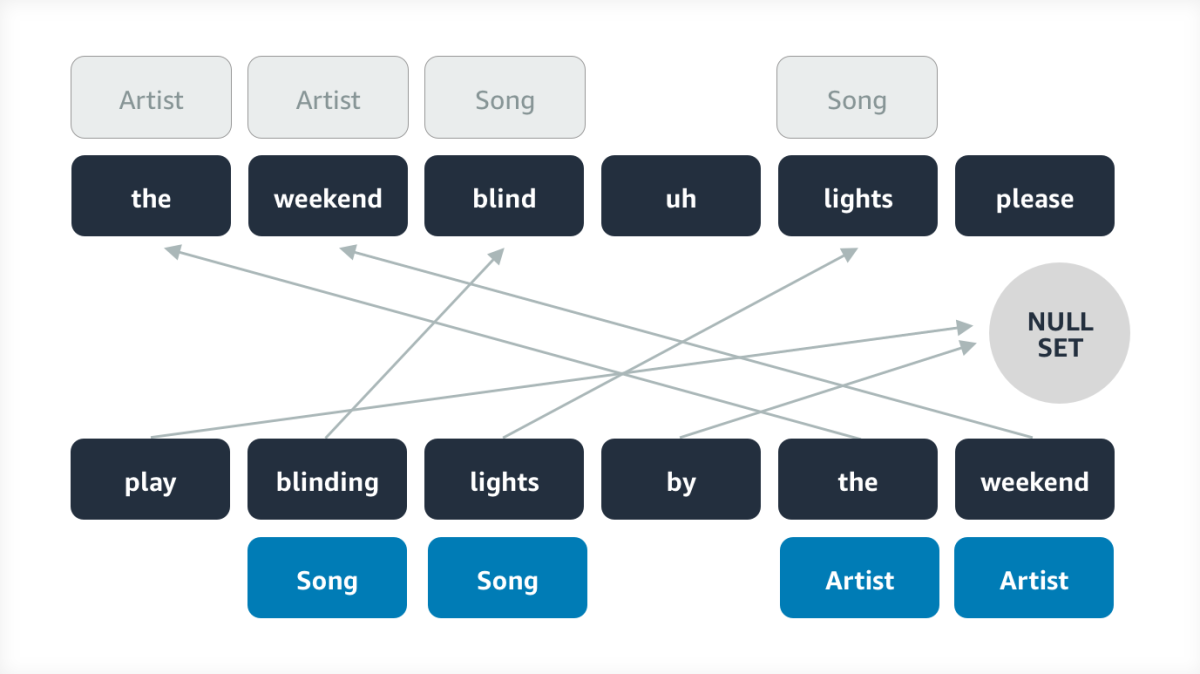

The label projection algorithm takes the successful paraphrase and maps each of its words onto either a word of the unsuccessful paraphrase or the null set.

The goal is to find a mapping that minimizes the distance between the paraphrases. To measure that distance, we use the Levenshtein edit distance, which counts the minimum number of single-character insertions, deletions, and substitutions required to turn one word into another.
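For readers unfamiliar with the metric, a standard dynamic-programming implementation of Levenshtein distance between two words is sketched below. This is the textbook algorithm, not necessarily the implementation used in the paper.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits needed to turn a into b."""
    # previous[j] holds the distance between the processed prefix of a and b[:j].
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]  # distance from a[:i] to the empty string
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(
                min(
                    previous[j] + 1,         # delete char_a
                    current[j - 1] + 1,      # insert char_b
                    previous[j - 1] + cost,  # substitute, or keep if equal
                )
            )
        previous = current
    return previous[-1]


print(levenshtein("weekend", "weeknd"))
print(levenshtein("kitten", "sitting"))
```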

The way to guarantee a minimum distance would be to consider the result of every possible mapping. But experimentally, we found that a greedy algorithm, which selects the minimum-distance mapping for the first word, then for the second, and so on, results in very little performance dropoff while saving a good deal of processing time.
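A minimal sketch of such a greedy projection follows. The `max_distance` cutoff used to decide when a word maps to the null set, the `"O"` label for unlabeled words, and the tie-breaking rule (leftmost candidate wins) are assumptions made for this illustration.

```python
def edit_distance(a, b):
    """Levenshtein distance between two words (standard dynamic programming)."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(previous[j] + 1,
                               current[j - 1] + 1,
                               previous[j - 1] + (ca != cb)))
        previous = current
    return previous[-1]


def greedy_project(source_tokens, source_labels, target_tokens, max_distance=2):
    """Project labels word by word, taking the closest unused target word each time."""
    target_labels = ["O"] * len(target_tokens)  # "O" = no slot (assumed convention)
    unused = set(range(len(target_tokens)))
    for word, label in zip(source_tokens, source_labels):
        if not unused:
            break
        # Greedy choice: best match for this word only, ignoring later words.
        best = min(sorted(unused), key=lambda i: edit_distance(word, target_tokens[i]))
        if edit_distance(word, target_tokens[best]) <= max_distance:
            target_labels[best] = label
            unused.remove(best)
        # Otherwise the word maps to the null set and its label is dropped.
    return list(zip(target_tokens, target_labels))


successful = "play blinding lights by the weeknd".split()
labels = ["O", "SongName", "SongName", "O", "ArtistName", "ArtistName"]
unsuccessful = "play blinding light by weekend".split()

projected = greedy_project(successful, labels, unsuccessful)
print(projected)
```

In this example the word "the" has no close match in the unsuccessful request, so it maps to the null set, while "weeknd" still lands on "weekend" and carries the ArtistName label with it.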

After label projection, we have a new training example, which consists of the unsuccessful utterance annotated with the slots of the successful utterance. But we don’t add that example to our new set of training data unless it is corroborated some minimum number of times by other pairs of unsuccessful and successful utterances.
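The corroboration filter could be sketched as a simple count over projected examples. The threshold `MIN_SUPPORT = 3` is a placeholder, since the actual minimum is not given here, and representing an example as an `(utterance, labels)` tuple is likewise an assumption.

```python
from collections import Counter

MIN_SUPPORT = 3  # hypothetical threshold; the actual minimum is not stated


def corroborated(examples, min_support=MIN_SUPPORT):
    """Keep only annotated utterances produced by at least min_support pairs."""
    counts = Counter(examples)
    return [example for example, n in counts.items() if n >= min_support]


# Each example is (utterance, slot labels), stored as hashable tuples.
frequent = ("play blinding light by weekend",
            ("O", "SongName", "SongName", "O", "ArtistName"))
rare = ("blinding light weekend put on",
        ("SongName", "SongName", "ArtistName", "O", "O"))

projected_examples = [frequent, rare, frequent, frequent]
kept = corroborated(projected_examples)
print(kept)
```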

We tested our approach on three languages: German, Italian, and Hindi. Results were best in German. At the time of our experiments, the Hindi NLU models had been deployed for only six months, the Italian models for one year, and the German models for three years. We believe that, as the Hindi and Italian models become more mature, the data they generate will become less noisy, and they’ll profit more from our approach, too.