Of the 23 papers that Amazon researchers are presenting at next week's Conference on Empirical Methods in Natural Language Processing (EMNLP), the majority concentrate on two topics: natural-language understanding, or the semantic interpretation of text, and question answering, both of which are important across Amazon businesses, including Alexa, Amazon Web Services, and the Amazon Store.

The remaining 10 papers cover a range of topics, from self-learning and information retrieval to language modeling and machine translation.

Within the area of natural-language understanding, Amazon researchers apply a battery of techniques — such as semi-supervised learning, few-shot learning, and contrastive learning — to a variety of subproblems, such as visual referring-expression recognition, or identifying which object in an image a natural-language expression refers to; coreference resolution, or determining whether different terms refer to the same entity; and dealing with distribution shift, or a mismatch between the distribution of data at inference time and the distribution in the training set.

- Feedback attribution for counterfactual bandit learning in multi-domain spoken language understanding

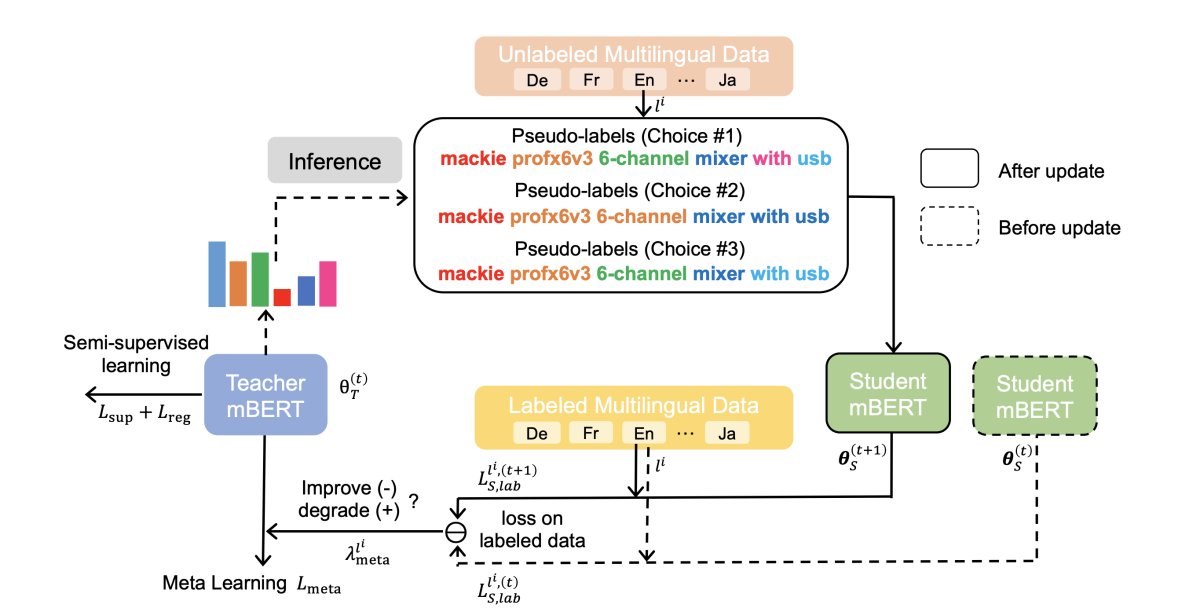

Tobias Falke, Patrick Lehnen - MetaTS: Meta teacher-student network for multilingual sequence labeling with minimal supervision

Zheng Li, Danqing Zhang, Tianyu Cao, Ying Wei, Yiwei Song, Bing Yin - Mind the context: The impact of contextualization in neural module networks for grounding visual referring expression

Arjun R. Akula, Spandana Gella, Keze Wang, Song-Chun Zhu, Siva Reddy - Nearest neighbor few-shot learning for cross-lingual classification

M. Saiful Bari, Batool Haider, Saab Mansour - ODIST: Open world classification via distributionally shifted instances

Lei Shu, Yassine Benajiba, Saab Mansour, Yi Zhang - Pairwise supervised contrastive learning of sentence representations

Dejiao Zhang, Shang-Wen Li, Wei Xiao, Henghui Zhu, Ramesh Nallapati, Andrew O. Arnold, Bing Xiang - Sequential cross-document coreference resolution

Emily Allaway, Shuai Wang, Miguel Ballesteros

Amazon researchers’ work on question answering includes helping conversational-AI agents suggest follow-up questions during interactions with customers; filtering out unanswerable questions to avoid wasting system resources; and few-shot learning.

- End-to-end entity resolution and question answering using differentiable knowledge graphs

Armin Oliya, Amir Saffari, Priyanka Sen, Tom Ayoola - Expanding end-to-end question answering on differentiable knowledge graphs with intersection

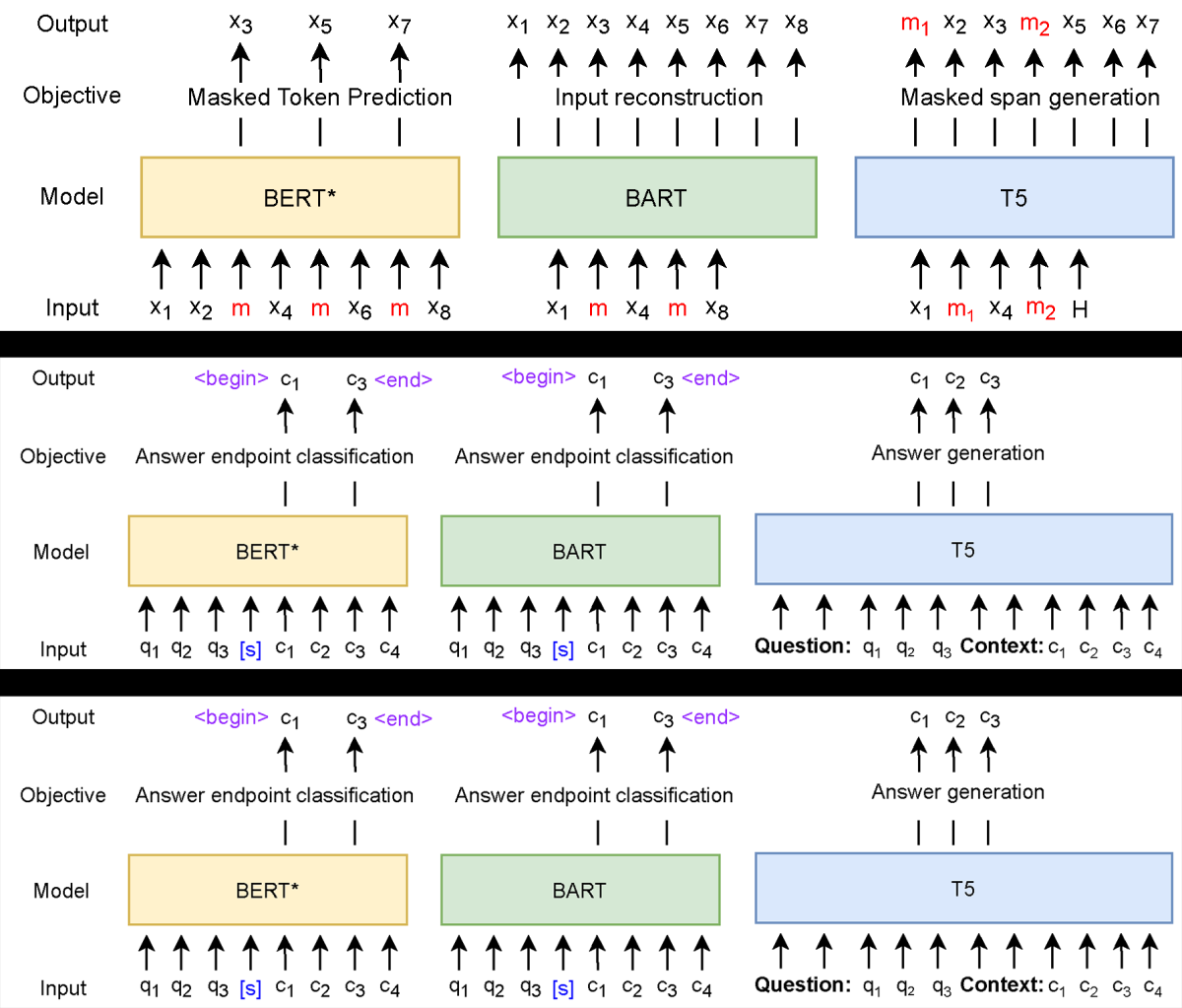

Priyanka Sen, Amir Saffari, Armin Oliya - FewshotQA: A framework for few-shot learning of question answering tasks using pre-trained text-to-text models

Rakesh Chada, Pradeep Natarajan - Generating self-contained and summary-centric question answer pairs via differentiable reward imitation learning

Li Zhou, Kevin Small, Yong Zhang, Sandeep Atluri - Will this question be answered? Question filtering via answer model distillation for efficient question answering

Siddhant Garg, Alessandro Moschitti - Reference-based weak supervision for answer sentence selection using web data

Vivek Krishnamurthy, Thuy Vu, Alessandro Moschitti

Amazon Web Services researchers address questions of fairness in a paper on mitigating gender bias in machine translation models.

- GFST: Gender-filtered self-training for more accurate gender in translation

Prafulla Kumar Choubey, Anna Currey, Prashant Mathur, Georgiana Dinu

In the area of information retrieval, Amazon papers investigate an integrated model for conversational search and the detection of counterfactual statements in product reviews, which can create a misleading impression of the reviewer’s sentiment.

- End-to-end conversational search for online shopping with utterance transfer

Liqiang Xiao, Jun Ma, Xin Luna Dong, Pascual Martinez-Gomez, Nasser Zalmout, Chenwei Zhang, Tong Zhao, Hao He, Yaohui Jin

- I wish I would have loved this one, but I didn’t: A multilingual dataset for counterfactual detection in product reviews

James O'Neill, Polina Rozenshtein, Ryuichi Kiryo, Motoko Kubota, Danushka Bollegala

A pair of Amazon papers look at the type of language modeling that accounts for so much of the recent success of natural-language-processing models.

- How much pretraining data do language models need to learn syntax?

Laura Perez-Mayos, Miguel Ballesteros, Leo Wanner

- Using optimal transport as alignment objective for fine-tuning multilingual contextualized embeddings

Sawsan Alqahtani, Garima Lalwani, Yi Zhang, Salvatore Romeo, Saab Mansour

Alexa researchers combined data mixing and elastic weight consolidation to improve the adaptation of machine translation models to new tasks.

- Improving the quality trade-off for neural machine translation multi-domain adaptation

Eva Hasler, Tobias Domhan, Jonay Trenous, Ke Tran, Bill Byrne, Felix Hieber

Paraphrase generation varies the surface form of sentences while preserving their semantic content, so it can help augment training data for other natural-language-processing tasks.

- Learning to selectively learn for weakly-supervised paraphrase generation

Kaize Ding, Dingcheng Li, Alexander Hanbo Li, Xing Fan, Chenlei (Edward) Guo, Yang Liu, Huan Liu

Self-learning is the use of implicit feedback signals to automatically improve machine learning models, without the need for human intervention.

- A scalable framework for learning from implicit user feedback to improve natural language understanding in large-scale conversational AI systems

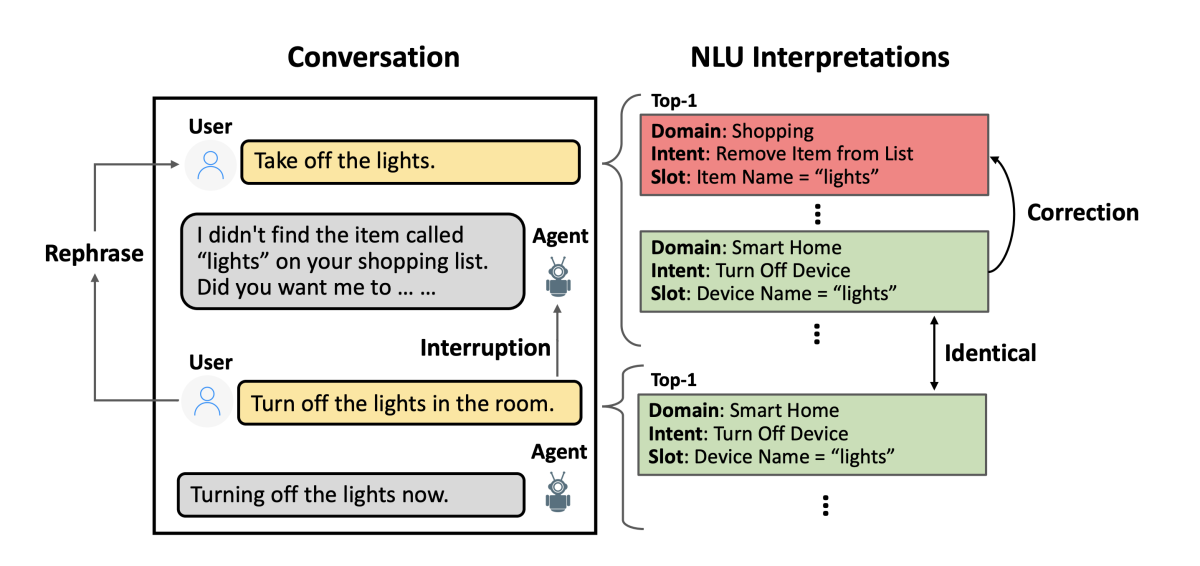

Sunghyun Park, Han Li, Ameen Patel, Sidharth Mudgal, Sungjin Lee, Young-Bum Kim, Spyros Matsoukas, Ruhi Sarikaya - Contextual rephrase detection for reducing friction in dialogue system

Zhuoyi Wang, Saurabh Gupta, Jie Hao, Xing Fan, Dingcheng Li, Alexander Hanbo Li, Chenlei (Edward) Guo

Text summarization is a widely studied problem in natural-language processing, and a new paper from Amazon Web Services considers the particular problems it presents in the context of dialogue.

- A bag of tricks for dialogue summarization

Muhammad Khalifa, Miguel Ballesteros, Kathleen McKeown

For more on Amazon's involvement in EMNLP, see our interview with Georgiana Dinu, an applied scientist with Amazon Web Services and a conference area chair for machine learning for natural-language processing.