Developing AI-powered predictive models for real-world data typically requires expertise in data science, familiarity with machine learning (ML) algorithms, and a solid understanding of the model’s business context. The complete development cycle for data science applications — from data acquisition through model training and evaluation — can take days or even weeks.

Launched in a beta preview at re:Invent 2024 and generally available since February 28, 2025, Amazon Q Developer in SageMaker Canvas is a new generative-AI-powered assistant that lets customers build and deploy ML models in minutes using only natural language — no ML expertise required.

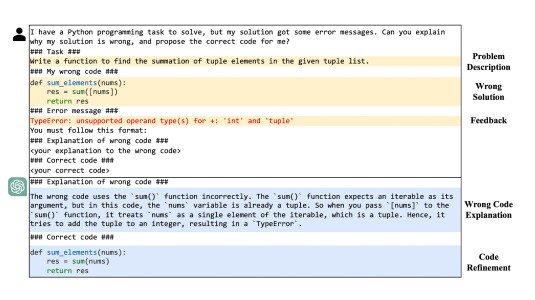

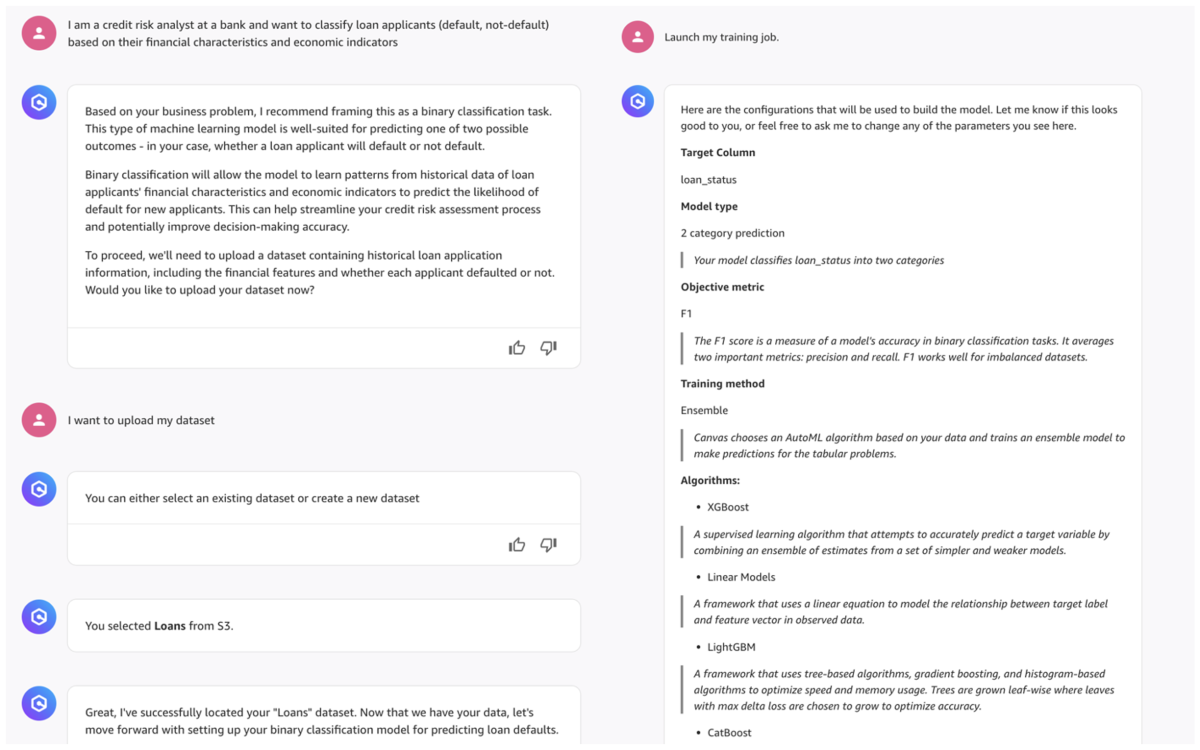

Q Developer has a chatbot format; customers describe their business problems and attach datasets of interest. As an example, a customer might tell the assistant, “I am a credit risk analyst at a bank and want to classify loan applicants (default, not-default) based on their financial characteristics and economic indicators”.

After describing the business problem, the customer can select an existing dataset; create a new dataset from Amazon S3, Amazon Redshift, SQL, or Snowflake; or simply upload a local CSV file. The dataset is expected to be in tabular format and should contain the target column (the column to be predicted) and a set of feature columns. If the problem involves time series forecasting, the tabular dataset should also contain a timestamp column.
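To make the dataset requirements concrete, here is a minimal sketch of the kind of header check a tool could run before modeling. The function `validate_dataset` and the task-type label `TIME_SERIES` are hypothetical names for illustration, not part of any SageMaker Canvas or Amazon Q Developer API; the sketch only inspects the CSV header row.

```python
import csv
import io

# Hypothetical label for the forecasting task type (illustration only).
TIME_SERIES = "time_series_forecasting"


def validate_dataset(csv_text, target_column, task_type, timestamp_column=None):
    """Check that a CSV dataset has the columns the modeling step needs.

    Returns the feature columns: every column except the target and, for
    time series forecasting, the timestamp column. Raises ValueError otherwise.
    """
    header = next(csv.reader(io.StringIO(csv_text)), None)
    if not header:
        raise ValueError("dataset is empty or has no header row")
    if target_column not in header:
        raise ValueError(f"target column {target_column!r} not found")
    excluded = {target_column}
    if task_type == TIME_SERIES:
        # Forecasting additionally requires a timestamp column.
        if timestamp_column not in header:
            raise ValueError("time series forecasting needs a timestamp column")
        excluded.add(timestamp_column)
    features = [col for col in header if col not in excluded]
    if not features:
        raise ValueError("dataset has no feature columns")
    return features


print(validate_dataset("income,debt_ratio,default\n52000,0.31,no\n",
                       "default", "binary_classification"))
# ['income', 'debt_ratio']
```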

Once the dataset has been provided, Amazon Q Developer guides the customer through the ML model-building process while providing control of every step of the ML workflow.

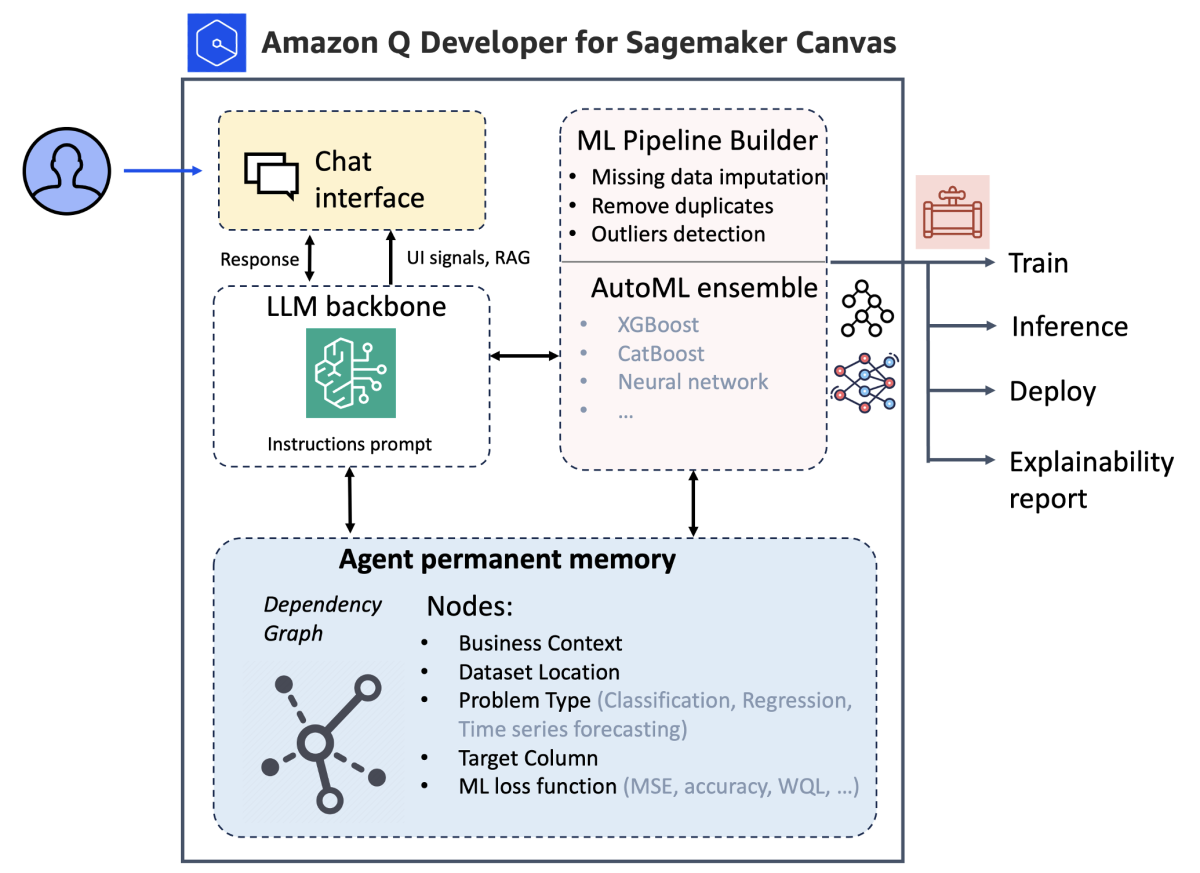

The Q Developer assistant is an agentic system, meaning that it can act autonomously on the customer’s behalf. An LLM serves as the primary interface between the customer and the agent, and as the conversation progresses, the agent stores intermediate findings in a nonvolatile memory block. The memory block contains information such as the dataset location, business context, problem type, names of the feature and target columns, and the ML loss function.

The memory block is implemented as a dependency graph, where each node represents a problem variable, such as problem_type, evaluation_metric, or target_column. The dependency graph structure helps the agent infer missing variables, which are necessary for the construction of the ML model.
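The post does not describe the graph’s internals, so the following is only a minimal sketch of the idea, under stated assumptions: the variable names, the dependency edges, the toy `infer_problem_type` heuristic, and the `DEFAULT_METRIC` table are all hypothetical. It walks the variables in topological order, derives any variable whose dependencies are already known, and reports what is still missing.

```python
from graphlib import TopologicalSorter

# Hypothetical problem variables; each maps to the variables it depends on.
DEPENDENCIES = {
    "target_column": set(),
    "n_distinct_target_values": {"target_column"},
    "problem_type": {"n_distinct_target_values"},
    "evaluation_metric": {"problem_type"},
}

# Hypothetical default metric per problem type.
DEFAULT_METRIC = {
    "binary_classification": "f1",
    "multiclass_classification": "accuracy",
    "regression": "mse",
}


def infer_problem_type(memory):
    # Toy heuristic: 2 labels -> binary, up to 20 -> multiclass, else regression.
    k = memory["n_distinct_target_values"]
    if k == 2:
        return "binary_classification"
    return "multiclass_classification" if k <= 20 else "regression"


# Rules that derive a variable once all of its dependencies are known.
RULES = {
    "problem_type": infer_problem_type,
    "evaluation_metric": lambda memory: DEFAULT_METRIC[memory["problem_type"]],
}


def resolve(memory):
    """Fill in derivable variables in dependency order; return those still missing."""
    missing = []
    for var in TopologicalSorter(DEPENDENCIES).static_order():
        deps_known = all(memory.get(dep) is not None for dep in DEPENDENCIES[var])
        if memory.get(var) is None and deps_known and var in RULES:
            memory[var] = RULES[var](memory)
        if memory.get(var) is None:
            missing.append(var)
    return missing
```

Because `resolve` only fills variables that are still `None`, a value the user states explicitly always takes precedence over an inferred one. A real agent would also need to detect forecasting problems (for example, from a timestamp column), which this toy heuristic does not attempt.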

Amazon Q Developer automatically identifies the appropriate ML task type (binary or multiclass classification, regression, or time series forecasting) from the problem description and suggests an appropriate objective metric for the ML job: for example, cross-entropy loss, accuracy, F1 score, or precision and recall for classification tasks; mean squared error (MSE), mean absolute error (MAE), or R² for regression tasks; or MSE, mean absolute scaled error (MASE), mean absolute percentage error (MAPE), or weighted quantile loss (wQL) for time series forecasting.
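The forecasting metrics in this list are less familiar than MSE or MAE, so the sketch below gives one common definition of each. It is illustrative only; the exact normalizations Canvas applies (for example, whether MAPE is reported as a percentage, or which seasonal lag MASE uses) may differ.

```python
def mape(actual, forecast):
    """Mean absolute percentage error, returned as a fraction (multiply by 100 for %)."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)


def mase(actual, forecast, history, season=1):
    """Mean absolute scaled error: the forecast's MAE divided by the in-sample MAE
    of a seasonal-naive forecast (y_t predicted by y_{t-season}) on `history`.
    Assumes `history` is longer than `season` and not constant."""
    naive_errors = [abs(history[t] - history[t - season])
                    for t in range(season, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    return mae / scale


def weighted_quantile_loss(actual, quantile_forecast, tau):
    """Weighted quantile loss at quantile level tau (0 < tau < 1): under-forecasts
    are penalized by tau, over-forecasts by (1 - tau), normalized by sum(|actual|)."""
    loss = sum(tau * max(a - q, 0.0) + (1 - tau) * max(q - a, 0.0)
               for a, q in zip(actual, quantile_forecast))
    return 2 * loss / sum(abs(a) for a in actual)
```

At `tau = 0.5` the weighted quantile loss reduces to the sum of absolute errors divided by the sum of absolute actuals, which is a quick sanity check on the formula.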

To help the user navigate the steps of data preparation, model building, and ML training, the agent suggests a few of the most likely next actions, which are displayed as buttons. Through these next-query suggestions, Q Developer helps identify missing information about the dataset and details of the underlying predictive task before proceeding to the ML model-building step.
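One plausible way to derive such button suggestions from a dependency-structured memory is to offer questions only for unresolved variables whose prerequisites are already known. This is a minimal sketch under that assumption; the question texts, variable names, and the `RELEVANT` conditions are hypothetical and not taken from the product.

```python
# Hypothetical question templates keyed by memory-block variable.
QUESTIONS = {
    "target_column": "Which column do you want to predict?",
    "problem_type": "Is this classification, regression, or forecasting?",
    "evaluation_metric": "Which metric should the model optimize?",
    "timestamp_column": "Which column holds the timestamps?",
}

# Each variable maps to the variables that must be known before asking about it.
DEPENDENCIES = {
    "target_column": set(),
    "problem_type": {"target_column"},
    "evaluation_metric": {"problem_type"},
    "timestamp_column": {"problem_type"},
}

# Variables that only matter under certain conditions.
RELEVANT = {
    "timestamp_column": lambda m: m.get("problem_type") == "time_series_forecasting",
}


def suggest_next_actions(memory, k=3):
    """Return up to k button labels for the variables that can be asked about now."""
    ready = [
        var
        for var, deps in DEPENDENCIES.items()
        if memory.get(var) is None
        and all(memory.get(dep) is not None for dep in deps)
        and RELEVANT.get(var, lambda m: True)(memory)
    ]
    if not ready:  # nothing left to ask: offer to proceed
        return ["Build the model"]
    return [QUESTIONS[var] for var in ready[:k]]
```

For an empty memory this returns only the target-column question, since every other variable depends on it; once all relevant variables are filled in, the only suggestion left is to proceed to model building.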

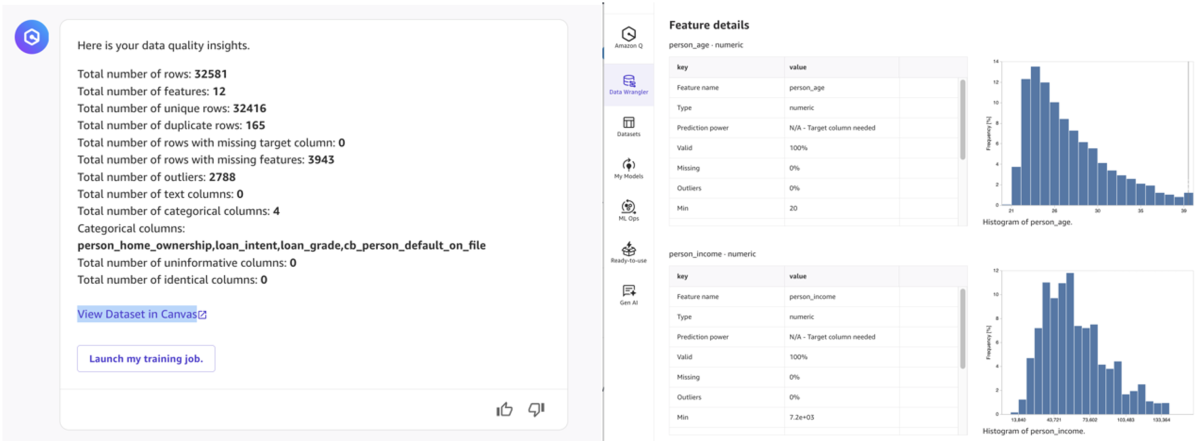

After collecting all required inputs, Amazon Q Developer builds a data-preprocessing pipeline on the back end and prepares the ensemble model for training. During preprocessing, the agent fixes any problems it encounters in the dataset to prepare it for training a high-quality ML model. This step can include data cleaning, where missing values are identified and automatically filled; categorical-feature encoding; outlier handling; and removal of duplicate rows or columns.
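The cleaning steps listed above can be sketched in plain Python. This is an illustrative pipeline under stated assumptions, not the Canvas back end: the specific choices (median imputation for numeric columns, mode imputation for categorical columns, clipping to Tukey fences at 1.5 × IQR, and one-hot encoding) are common defaults picked for the example, and `preprocess` is a hypothetical name.

```python
from statistics import median, mode, quantiles


def preprocess(rows, numeric_cols, categorical_cols):
    """Illustrative cleaning pipeline over a list of dict rows (None = missing).

    Assumes every numeric column has at least two non-missing values.
    """
    # 1. Remove exact duplicate rows, keeping the first occurrence.
    seen, deduped = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            deduped.append(dict(row))
    rows = deduped

    # 2. Numeric columns: fill missing values with the median, then clip
    #    outliers to the Tukey fences (1.5 * IQR beyond the quartiles).
    for col in numeric_cols:
        present = [r[col] for r in rows if r[col] is not None]
        fill = median(present)
        q1, _, q3 = quantiles(present, n=4)
        lo, hi = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
        for r in rows:
            value = fill if r[col] is None else r[col]
            r[col] = min(max(value, lo), hi)

    # 3. Categorical columns: fill missing values with the mode, then one-hot encode.
    for col in categorical_cols:
        fill = mode(r[col] for r in rows if r[col] is not None)
        levels = sorted({fill if r[col] is None else r[col] for r in rows})
        for r in rows:
            value = r.pop(col)
            value = fill if value is None else value
            for level in levels:
                r[f"{col}={level}"] = int(value == level)

    # 4. Drop columns whose values exactly duplicate an earlier column.
    columns = list(rows[0]) if rows else []
    kept = []
    for col in columns:
        values = [r[col] for r in rows]
        if any(values == [r[k] for r in rows] for k in kept):
            for r in rows:
                del r[col]
        else:
            kept.append(col)
    return rows
```

Quartile-based clipping is used here because, unlike a mean-and-standard-deviation rule, the fences are not pulled outward by the very outliers they are meant to catch.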

In addition, throughout the conversation the user can ask follow-up questions about the dataset (e.g., the fraction of rows with missing values or the number of outliers) or dive deeper into model metrics and feature importance using Data Wrangler for advanced analytics and visualization.
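The two example questions have simple computational answers. The sketch below shows one way to compute them, assuming rows are dicts with `None` for missing values and that an "outlier" means a value outside the Tukey fences (1.5 × IQR beyond the quartiles); the post does not say which outlier definition the assistant uses, and the function names are hypothetical.

```python
from statistics import quantiles


def missing_row_fraction(rows):
    """Fraction of rows that have at least one missing (None) value."""
    return sum(any(v is None for v in r.values()) for r in rows) / len(rows)


def count_outliers(values, k=1.5):
    """Count non-missing values outside the Tukey fences [Q1 - k*IQR, Q3 + k*IQR]."""
    present = [v for v in values if v is not None]
    q1, _, q3 = quantiles(present, n=4)
    iqr = q3 - q1
    return sum(v < q1 - k * iqr or v > q3 + k * iqr for v in present)
```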

To maximize prediction quality, Amazon Q Developer uses an AutoML approach and trains an ensemble of ML models (such as XGBoost, CatBoost, LightGBM, linear models, and neural networks) instead of a single model. After the ensemble’s submodels have been trained, they undergo hyperparameter optimization (HPO). Both the feature engineering and the hyperparameter search are handled automatically by the AutoML algorithm and are abstracted away from the end user.
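The post does not say how the submodels’ predictions are combined. One well-known technique in AutoML systems is greedy ensemble selection with replacement: starting from an empty ensemble, repeatedly add whichever candidate model most reduces validation loss when averaged in. The sketch below shows that technique as an assumption, not as the Canvas method; the candidates could be different model families or different hyperparameter configurations produced by HPO, and the model names in the usage are hypothetical.

```python
def mse(y_true, y_pred):
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def greedy_ensemble(val_preds, y_val, rounds=10, loss=mse):
    """Greedy ensemble selection with replacement.

    val_preds maps model name -> list of validation predictions.
    Returns model name -> weight; weights are selection counts / rounds.
    """
    chosen = []
    current = [0.0] * len(y_val)  # running sum of the chosen models' predictions
    for _ in range(rounds):
        best_name, best_loss = None, float("inf")
        k = len(chosen) + 1
        for name, preds in val_preds.items():
            # Loss of the ensemble average if this model were added next.
            candidate = [(c + p) / k for c, p in zip(current, preds)]
            cand_loss = loss(y_val, candidate)
            if cand_loss < best_loss:
                best_name, best_loss = name, cand_loss
        chosen.append(best_name)
        current = [c + p for c, p in zip(current, val_preds[best_name])]
    return {name: chosen.count(name) / len(chosen) for name in set(chosen)}
```

For example, with targets `[1, 2, 3, 4]`, a model that is always 1 too high and another that is always 1 too low each score poorly alone, but two rounds of selection pick both with weight 0.5, and their average matches the targets exactly. Selecting with replacement lets a strong model receive a larger weight simply by being picked more than once.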

After the ensemble model is trained, the user can run inference on a test dataset or deploy the model as a SageMaker inference endpoint in just a few clicks. At this point, the user has access to an automatically generated explainability report, which helps the user visualize and understand properties of the dataset, feature attribution scores (feature importance), the model training process, and performance metrics.
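The post does not specify how the feature attribution scores in the explainability report are computed (Shapley-value-based methods are one common choice). As a simpler, model-agnostic illustration, the sketch below computes permutation importance: shuffle one feature column at a time and measure how much the loss increases. This is a swapped-in technique for explanation only; `predict` stands for any hypothetical function mapping a feature row to a prediction.

```python
import random


def mse(y_true, y_pred):
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, loss=mse, n_repeats=5, seed=0):
    """Importance of feature j = average increase in loss after shuffling column j.

    `predict` maps one feature row (a list) to a prediction.
    """
    rng = random.Random(seed)
    baseline = loss(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(loss(y, [predict(row) for row in X_perm]) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances
```

A feature the model ignores gets an importance of exactly zero, because shuffling it leaves every prediction unchanged.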

Using Amazon Q Developer

Ready to transform your data into powerful ML models without extensive data science expertise? Start exploring Amazon Q Developer in SageMaker Canvas today and experience the simplicity of building ML models using natural-language commands.

Acknowledgments: Vidyashankar Sivakumar, Saket Sathe, Debanjan Datta, and Derrick Zhang