On November 10, at the fourth Fact Extraction and Verification workshop (FEVER), we will be announcing the winners of the third fact verification challenge in the FEVER series. The challenge follows our 2018 FEVER shared task and our 2019 FEVER 2.0 build-it, break-it, fix-it contest.

The announcement is the culmination of a year’s worth of work, starting with the design of our latest dataset, FEVEROUS, or Fact Extraction and Verification Over Unstructured and Structured Information.

FEVER dataset release

The FEVEROUS dataset was released, and the shared task launched, in May 2021. You can find more information at the FEVER site.

As misleading and false claims proliferate, especially in online settings, there has been a growing interest in fully automated or assistive fact verification systems. Beyond checking potentially unreliable claims, automated fact verification is an invaluable tool for knowledge extraction and question answering, the work we do on my team at Alexa Knowledge. The ability to find evidence that supports or refutes a potential answer will give us more confidence in the answers we provide, and it will also allow us to offer that evidence as part of a follow-up conversation.

Since 2018, to provide the research community with the means to develop large-scale fact-checking systems, I have been working with colleagues from the University of Cambridge, King’s College London, and Facebook on the FEVER series of datasets, shared tasks, and academic workshops.

The FEVEROUS dataset comprises 87,026 manually constructed factual claims, each annotated with evidence in the form of sentences and/or table cells from Wikipedia pages. Based on that evidence, each claim is labeled Supported, Refuted, or NotEnoughInfo. The dataset annotation project was funded by Amazon and designed by the FEVER team.

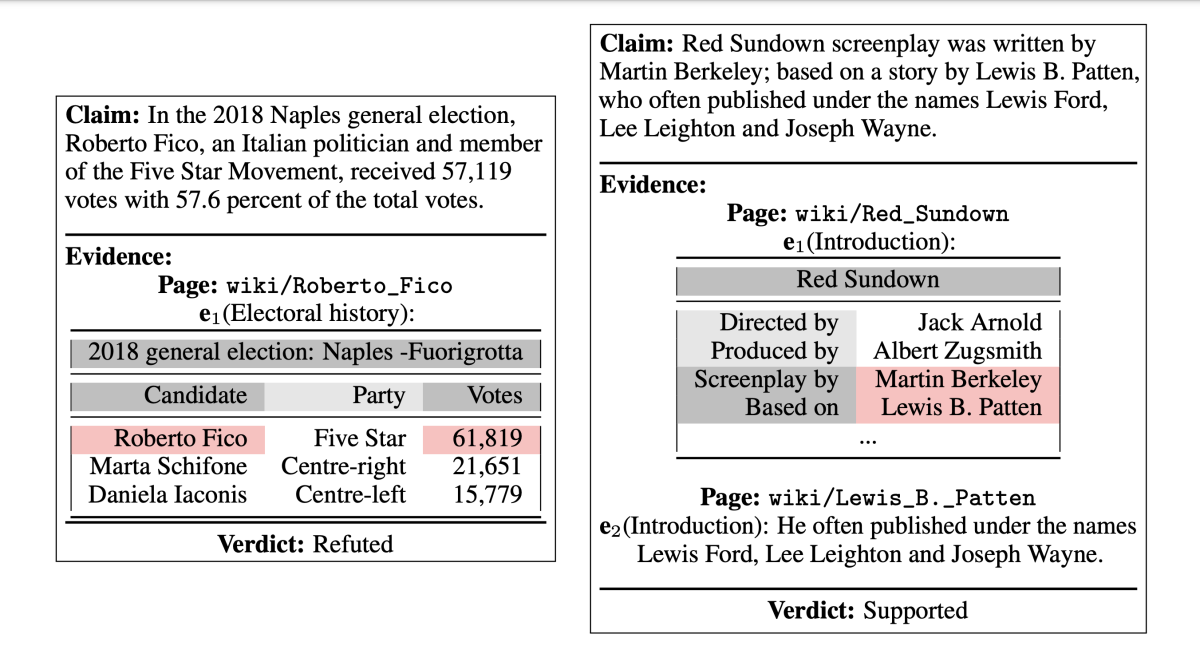

To get an idea of the dataset and the difficulty of the task, consider the two examples below. In the left-hand example, in order to refute the claim, we need to identify the two cells that contain the name of the candidate and the number of votes he received (along with the context — page, section title(s), and the closest row/column headers, highlighted in dark grey). As this first set of evidence refutes at least one part of the claim, we don’t need to continue.

For the example on the right, the evidence consists of two table cells and one sentence from two different pages, supporting the claim.

FEVEROUS contains more-complex claims than the original FEVER dataset (on average, 25.3 words per claim, compared to 9.4 for FEVER) but also a more complete pool of evidence (entire pages, including the tables, compared to just the introduction sections). That brings us closer to real-world scenarios, while maintaining the experimental control of an artificially designed dataset.

While the biggest change from the previous FEVER dataset is the use of structured information as evidence, we also worked to improve the quality of the annotation and remove known biases. For example, in the original dataset, a claim-only baseline (a system that classifies a claim without considering the evidence) was able to get an accuracy score of about 62%, compared to a majority-class baseline (choosing the most frequent label) of 33%. This means that the claim “gives away” its label based on the words it contains. In contrast, the claim-only baseline on FEVEROUS is 58%, against a majority-class baseline of 56% (the three labels don’t appear with equal frequency).
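To make the baseline comparison concrete, here is a minimal sketch of how a majority-class baseline is computed and how far a claim-only model sits above it. The label lists are hypothetical toy data chosen only to mirror the figures quoted above, and the helper names (`majority_class_accuracy`, `bias_gap`) are illustrative, not part of any FEVER tooling.

```python
from collections import Counter


def majority_class_accuracy(labels):
    """Accuracy obtained by always predicting the most frequent label."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def bias_gap(claim_only_accuracy, majority_accuracy):
    """Accuracy points a claim-only model gains over the majority baseline."""
    return round((claim_only_accuracy - majority_accuracy) * 100)


# Toy label lists (hypothetical, not the real datasets).
balanced = ["Supported", "Refuted", "NotEnoughInfo"] * 100
skewed = ["Supported"] * 56 + ["Refuted"] * 40 + ["NotEnoughInfo"] * 4

balanced_acc = majority_class_accuracy(balanced)  # three equally frequent labels
skewed_acc = majority_class_accuracy(skewed)      # one label dominates

# Gaps computed from the accuracies quoted in the text.
fever_gap = bias_gap(0.62, 0.33)
feverous_gap = bias_gap(0.58, 0.56)

print(f"balanced majority baseline: {balanced_acc:.2f}")
print(f"skewed majority baseline:   {skewed_acc:.2f}")
print(f"claim-only gain, FEVER vs. FEVEROUS: {fever_gap} vs. {feverous_gap} points")
```

The smaller the gap, the less a model can infer the label from the wording of the claim alone.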

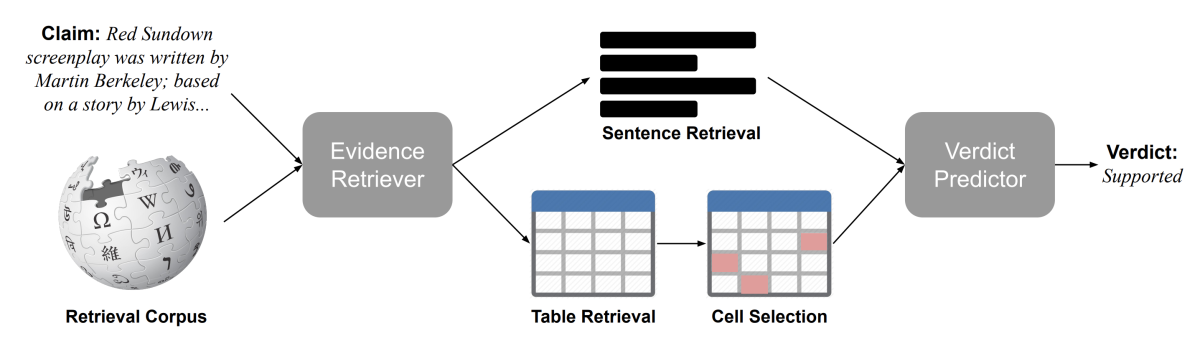

As we did with the first two shared tasks, with FEVEROUS, we released a baseline approach to support researchers in the design of fact-checking systems and to assess the feasibility of the task.

The baseline first uses a combination of entity matching and TF-IDF to retrieve the sentences and tables most relevant to the claim. A cell extraction model then selects relevant cells from the retrieved tables by linearizing them and treating the extraction as a sequence-labeling task. Finally, a RoBERTa classifier pre-trained on an NLI dataset and fine-tuned on the FEVEROUS training data predicts the final label for each claim.
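The two retrieval ideas in the baseline, TF-IDF ranking and table linearization, can be illustrated with a small sketch. This is not the released baseline code: it omits entity matching, replaces the trained cell extraction model with a plain listing of cells, and uses invented toy data and hypothetical function names.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenizer (a simplifying assumption)."""
    return text.lower().split()


def tfidf_index(docs):
    """Return per-document TF-IDF vectors and the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    doc_freq = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    vectors = [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_by_tfidf(claim, docs):
    """Indices of docs sorted from most to least similar to the claim."""
    vectors, idf = tfidf_index(docs)
    query = {t: tf * idf.get(t, 0.0) for t, tf in Counter(tokenize(claim)).items()}
    scores = [cosine(query, v) for v in vectors]
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)


def linearize_table(header, rows):
    """Flatten a table into (cell position, 'column header: value') pairs."""
    return [
        ((r, c), f"{header[c]}: {value}")
        for r, row in enumerate(rows)
        for c, value in enumerate(row)
    ]


# Toy claim, candidate sentences, and table (all hypothetical).
claim = "Alice Smith received 1200 votes in the 1990 election"
sentences = [
    "Alice Smith is a politician who ran in the 1990 election",
    "The river flows through three countries",
    "A recipe for bread needs flour and water",
]
ranking = rank_by_tfidf(claim, sentences)

linearized = linearize_table(
    ["Candidate", "Votes"],
    [["Alice Smith", "1200"], ["Bob Jones", "950"]],
)
print(ranking[0], linearized[1])
```

Keeping each cell's position next to its linearized text means that a sequence-labeling model, which tags items in the flattened sequence as relevant or not, can map its decisions back to specific table cells.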

We released the dataset and launched the shared task in May of this year. In late July, we opened the final test phase, in which participants submitted predictions on a blind test set. We received 13 entries, six of which beat the baseline system. The winning team achieved a FEVEROUS score of 27%, nine percentage points above the baseline.
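This post does not spell out how the FEVEROUS score is computed. The sketch below assumes the strict reading used for the task: a prediction counts only if its label is correct and its retrieved evidence covers at least one complete gold evidence set. The record layout, the evidence identifiers, and the function names are hypothetical, and the official scorer may apply further rules (for example, limits on how much evidence can be submitted).

```python
def strict_score(predictions, gold):
    """Fraction of claims with a correct label AND a fully covered evidence set."""
    correct = 0
    for pred, ref in zip(predictions, gold):
        label_ok = pred["label"] == ref["label"]
        retrieved = set(pred["evidence"])
        # At least one gold evidence set must be fully contained in the retrieval.
        evidence_ok = any(set(ev) <= retrieved for ev in ref["evidence_sets"])
        if label_ok and evidence_ok:
            correct += 1
    return correct / len(gold)


def label_accuracy(predictions, gold):
    """Fraction of claims with a correct label, ignoring evidence."""
    hits = sum(p["label"] == g["label"] for p, g in zip(predictions, gold))
    return hits / len(gold)


# Toy gold annotations and system output (hypothetical identifiers).
gold = [
    {"label": "Refuted", "evidence_sets": [["p1:cell_2_0", "p1:cell_2_3"]]},
    {"label": "Supported",
     "evidence_sets": [["p2:cell_1_1", "p2:cell_1_2", "p3:sentence_0"]]},
    {"label": "Supported", "evidence_sets": [["p4:sentence_5"], ["p4:cell_0_1"]]},
    {"label": "NotEnoughInfo", "evidence_sets": [["p5:sentence_1"]]},
]
predictions = [
    # Right label, full evidence set plus one extra item: counts.
    {"label": "Refuted", "evidence": ["p1:cell_2_0", "p1:cell_2_3", "p1:sentence_4"]},
    # Right label, but one gold cell is missing: does not count.
    {"label": "Supported", "evidence": ["p2:cell_1_1", "p3:sentence_0"]},
    # Evidence is complete, but the label is wrong: does not count.
    {"label": "Refuted", "evidence": ["p4:cell_0_1"]},
    # Right label and complete evidence: counts.
    {"label": "NotEnoughInfo", "evidence": ["p5:sentence_1"]},
]

score = strict_score(predictions, gold)
accuracy = label_accuracy(predictions, gold)
print(f"strict score: {score:.2f}, label accuracy: {accuracy:.2f}")
```

Under this reading, the strict score can never exceed label accuracy, which helps explain why scores on the task look low compared with plain classification accuracy.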

The main emerging trends in the submissions were the use of table-based pretraining with systems like TaPas and an emphasis on multi-hop evidence retrieval.

For further insights about the participating systems and to learn more about the challenge in general, I invite you to join the shared-task session at the fourth FEVER workshop. Besides the discussion of the FEVEROUS challenge, our workshop will feature research papers on all topics related to fact verification and invited talks from leading researchers in the field: Mohit Bansal (UNC Chapel Hill), Mirella Lapata (University of Edinburgh), Maria Liakata (Queen Mary University of London), Pasquale Minervini (University College London), Preslav Nakov (Qatar Computing Research Institute), Steven Novella (Yale University School of Medicine), and Brendan Nyhan (Dartmouth College). I look forward to seeing you all there!