As Alexa-enabled devices continue to expand into new countries, finding information across languages that use different scripts becomes a more pressing challenge. For example, a Japanese music catalogue may contain names written in English or the various scripts used in Japanese — Kanji, Katakana, or Hiragana. When an Alexa customer, from anywhere in the world, asks for a certain song, album, or artist, we could have a mismatch between Alexa’s transcription of the request and the script used in the corresponding catalogue.

To address this problem, we developed a machine-learned multilingual named-entity transliteration system. Named-entity transliteration is the process of converting a name from one language script to another. We describe the design challenges of building such a system in a paper we are presenting this month at the 27th International Conference on Computational Linguistics (COLING 2018).

The first challenge is obtaining a large dataset that contains name pairs in different languages. Since we could not find a publicly available dataset that satisfied our needs, we created a new dataset based on Wikidata, a central knowledge base for Wikipedia and other Wikimedia projects. We have released our dataset online, together with our code, under a Creative Commons license.

The Wikidata page for a given person will usually list versions of his or her name in multiple languages. We automatically collected all available pairings of English versions of names with Japanese, Hebrew, Arabic, or Russian versions. We then applied a few heuristics, which we detail in the paper, to filter out noisy pairs. (We initially collected titles of works as well, but they involved translation, not just transliteration, too often to be useful.)
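To make the collection step concrete, here is a minimal sketch that pulls English/target label pairs out of one entity in Wikidata's JSON format, where `labels` maps a language code to an object with a `value` field. The filter is a stand-in, not the heuristics from the paper: it simply assumes a pair is noisy if any letter in a label falls outside the expected script, judged by Unicode character-name prefixes. The names `TARGET_SCRIPTS`, `in_script`, and `label_pairs` are hypothetical.

```python
import unicodedata

# Hypothetical filter: Unicode character-name prefixes we accept for each target
# language's label. This is an illustrative assumption, not the paper's heuristics.
TARGET_SCRIPTS = {
    "ru": ("CYRILLIC",),
    "he": ("HEBREW",),
    "ar": ("ARABIC",),
    "ja": ("CJK UNIFIED IDEOGRAPH", "KATAKANA", "HIRAGANA"),
}


def in_script(text, prefixes):
    """True if text has letters and every letter's Unicode name starts with a prefix."""
    letters = [ch for ch in text if ch.isalpha()]  # spaces and "・" are not letters
    return bool(letters) and all(
        unicodedata.name(ch, "").startswith(prefixes) for ch in letters
    )


def label_pairs(entity):
    """Return (lang, english_label, target_label) tuples for one Wikidata entity dict."""
    labels = entity.get("labels", {})
    english = labels.get("en", {}).get("value")
    if not english or not in_script(english, ("LATIN",)):
        return []
    pairs = []
    for lang, prefixes in TARGET_SCRIPTS.items():
        other = labels.get(lang, {}).get("value")
        # Skip missing labels and labels not written in the expected script,
        # e.g. a Russian label that was simply copied from English.
        if other and in_script(other, prefixes):
            pairs.append((lang, english, other))
    return pairs
```

A Russian label left in Latin script, a common kind of noise, is dropped by this check, while a Katakana label containing the `・` separator passes because the separator is not a letter.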

For most names, the pronunciation of the last name is independent of the first or middle names, so it makes sense to train a transliteration system on independent pairs of first names, last names, and so on.

Wikidata doesn’t include separate tags for first, middle, and last names, but there are systematic correspondences between the positions of names in different transliterations. We therefore wrote scripts that use those correspondences to extract pairs of one-name transliterations. For example, the English/Russian Wikidata label pair [“Amy Winehouse”, “Эми Уайнхаус”] would produce two data instances in our training set: [“Amy”, “Эми”] and [“Winehouse”, “Уайнхаус”]. The result was a dataset containing almost 400,000 one-name pairs.
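A minimal sketch of positional alignment follows. It assumes that both labels list the name parts in the same order and that parts are separated by whitespace, or by a configurable separator such as the Japanese middle dot `・` often used in Katakana renderings of foreign names. Pairs with different numbers of parts are discarded. The functions `split_name_pair` and `build_one_name_dataset` are hypothetical, not the released scripts.

```python
def split_name_pair(source, target, source_sep=None, target_sep=None):
    """Align a multi-word label pair position by position into one-name pairs.

    Assumes both labels list name parts in the same order. Returns an empty list
    when the part counts differ, since positions can't be matched reliably.
    A separator of None means "split on any whitespace".
    """
    source_parts = source.split(source_sep)
    target_parts = target.split(target_sep)
    if len(source_parts) != len(target_parts):
        return []
    return list(zip(source_parts, target_parts))


def build_one_name_dataset(label_pairs, target_sep=None):
    """Collect unique one-name pairs from many (source, target) label pairs."""
    dataset = set()
    for source, target in label_pairs:
        dataset.update(split_name_pair(source, target, target_sep=target_sep))
    return sorted(dataset)
```

For example, `build_one_name_dataset([("Amy Winehouse", "Эми Уайнхаус"), ("Amy Adams", "Эми Адамс")])` yields three unique pairs, because the shared first name [“Amy”, “Эми”] is stored only once.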

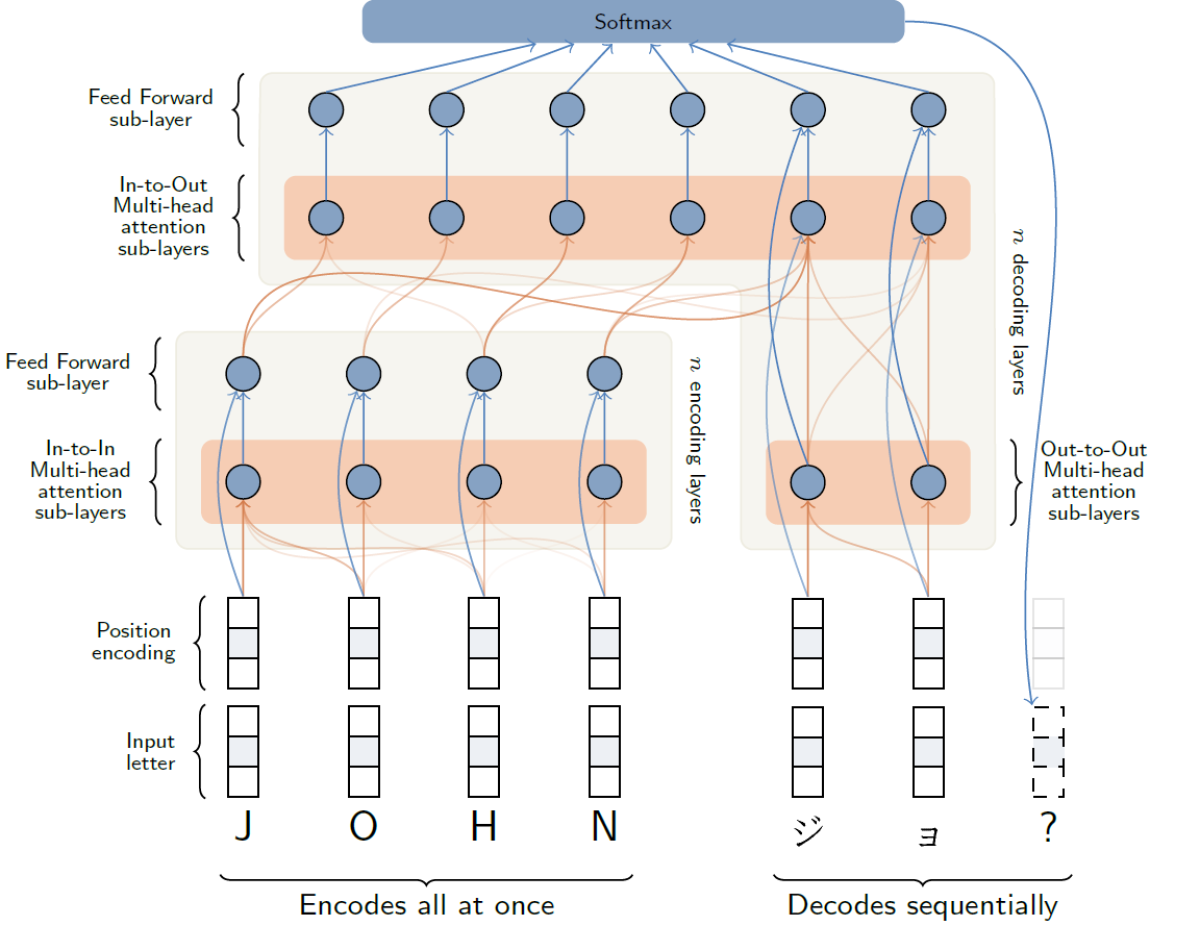

We then used our dataset to train several machine-learning systems, employing both traditional approaches and more recent neural approaches that have yielded strong results on machine translation tasks. We achieved the best results using the Transformer, a neural-network architecture that dispenses with recurrence and convolutions and instead relies on attention mechanisms, which focus the network on the parts of the input most relevant to each output it produces.
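The core operation inside the Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)V. The sketch below implements just that operation with plain Python lists, with no batching, masking, multiple heads, or learned projections, so it is an illustration of the mechanism rather than the model used in our experiments. In a character-level transliteration model, each query would roughly correspond to a position in the output name and each key/value to a position in the input name.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d_k)) V.

    queries: n_q vectors of length d_k; keys: n_k vectors of length d_k;
    values: n_k vectors of length d_v. Each row of weights sums to 1.
    """
    d_k = len(keys[0])
    d_v = len(values[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w[j] * values[j][c] for j in range(len(values))) for c in range(d_v)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights
```

A query that points in the same direction as the first key places more weight on the first value vector, which is how the network can, for instance, attend to the source letters that correspond to the character it is about to emit.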

The Transformer network outperformed both an attention-based encoder-decoder recurrent neural network and a more traditional weighted-finite-state-transducer (WFST) approach to sequence-to-sequence transduction based on Phonetisaurus, a data-driven, open-source toolkit for grapheme-to-phoneme conversion.

Although the Transformer achieved the best results overall, other factors also affect performance. First, the language pair makes a significant difference. For example, the system performs significantly worse when transliterating English to Hebrew or Arabic than when transliterating English to a language more similar to English, such as Russian.
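Comparisons like these depend on how accuracy is measured; the paper defines the exact metrics used. As a hedged illustration, two common choices for transliteration are exact-match word accuracy and character error rate (edit distance normalized by reference length). The sketch below assumes both; the function names are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # delete ca
                            curr[j - 1] + 1,           # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def word_accuracy(predictions, references):
    """Fraction of names whose predicted transliteration matches the reference exactly."""
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


def character_error_rate(predictions, references):
    """Total edit distance divided by the total number of reference characters."""
    errors = sum(edit_distance(p, r) for p, r in zip(predictions, references))
    return errors / sum(len(r) for r in references)
```

Word accuracy gives no partial credit, so a single missing vowel, as in `"Уайнхус"` for `"Уайнхаус"`, counts as a full error, while character error rate registers it as one edit out of eight reference characters for that name.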

The direction of the transliteration also plays an important role. In every case, using English as the target language (e.g., training a model to transliterate from Russian to English) results in much lower accuracy, and once again the size of the drop depends on the source language. Finally, we found that, beyond a certain point, adding training data has little effect on accuracy: for all languages, we were able to reach close to optimal performance with about 50% of the training data.
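A learning-curve experiment of this kind can be set up by training on growing random fractions of the data. The sketch below is an assumption about the setup, not the paper's exact protocol: it shuffles the one-name pairs once with a fixed seed and takes prefixes, so each smaller subset is contained in every larger one. The name `training_subsets` and the default fractions are hypothetical.

```python
import random


def training_subsets(pairs, fractions=(0.1, 0.25, 0.5, 0.75, 1.0), seed=0):
    """Return nested random subsets of the training pairs, keyed by fraction.

    Shuffling once and taking prefixes means the 25% subset is contained in the
    50% subset, and so on, which keeps differences between runs down to data size.
    """
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # fixed seed for reproducibility
    return {f: shuffled[: round(f * len(shuffled))] for f in fractions}
```

Each subset would then be trained and evaluated on the same held-out test set; accuracy that plateaus around the 0.5 subset would match the behavior described above.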

The paper contains an error analysis section that offers insights into some of our findings, such as why transliterating into English is harder. (One explanation is that on Wikidata, as elsewhere on the Web, words in Semitic languages such as Hebrew and Arabic are usually written without the diacritical marks that indicate vowels, so the network has to guess at the missing vowels.)
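To see what information is lost, the sketch below removes nonspacing combining marks (Unicode category `Mn`), which include Hebrew niqqud and Arabic harakat, from a vocalized string. Applied to vocalized Hebrew for "shalom", it leaves only the consonant skeleton a model would typically see in Web text. This is an illustration under stated assumptions: the function name `strip_vowel_marks` is hypothetical, it also removes non-vowel marks such as the Hebrew shin dot, and it should not be applied to Japanese, where decomposition would strip the voicing marks from Kana.

```python
import unicodedata


def strip_vowel_marks(text):
    """Remove nonspacing combining marks (category Mn), e.g. Hebrew niqqud or Arabic harakat."""
    decomposed = unicodedata.normalize("NFD", text)  # split precomposed letters from their marks
    bare = "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")
    return unicodedata.normalize("NFC", bare)  # recompose whatever remains
```

Going in the opposite direction, from the bare form back to a Latin spelling, the model has to recover vowels that are simply absent from its input, which is consistent with the lower accuracy observed when English is the target.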