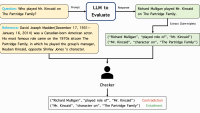

When a large language model (LLM) receives a request such as “Which medications are likely to interact with St. John’s wort?” it doesn’t consult a medically validated list of drug interactions (unless it has been trained to do so). Instead, it generates a list based on the distribution of words associated with St. John’s wort.

The result will likely be a mix of real and potentially fictional medications with varying degrees of interaction risk. Such LLM hallucinations (assertions or claims that sound plausible but are verifiably incorrect) still impede the commercial deployment of LLMs. And while there are ways to reduce hallucinations in domains such as health care, identifying and measuring them remains key to the safe use of generative AI.

In a paper we presented at the last Conference on Empirical Methods in Natural Language Processing (EMNLP), we describe HalluMeasure, an approach to hallucination measurement that employs a novel combination of three techniques: claim-level evaluations, chain-of-thought reasoning, and linguistic classification of hallucinations into error types.

HalluMeasure first uses a claim extraction model to decompose the LLM response into a set of claims. A separate claim classification model then sorts the claims into five key classes (supported, absent, contradicted, partially supported, and unevaluatable) by comparing them against the context: the retrieved text relevant to the request, which is also fed to the classification model.
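The two-stage flow can be sketched as follows. This is a minimal illustration, not HalluMeasure’s code: the function names `measure_claims`, `toy_extract`, and `toy_classify` and their signatures are hypothetical, and the toy stand-ins (sentence splitting and substring matching) merely take the place of the two LLM-backed models so the sketch runs on its own.

```python
from enum import Enum
from typing import Callable, List, Tuple


class ClaimLabel(Enum):
    """The five claim classes named in the post."""
    SUPPORTED = "supported"
    ABSENT = "absent"
    CONTRADICTED = "contradicted"
    PARTIALLY_SUPPORTED = "partially supported"
    UNEVALUATABLE = "unevaluatable"


# Hypothetical interfaces standing in for the two LLM-backed models.
ExtractFn = Callable[[str], List[str]]          # response -> claims
ClassifyFn = Callable[[str, str], ClaimLabel]   # (claim, context) -> label


def measure_claims(response: str, context: str,
                   extract: ExtractFn,
                   classify: ClassifyFn) -> List[Tuple[str, ClaimLabel]]:
    """Stage 1: decompose the response into claims.
    Stage 2: label each claim by comparing it against the retrieved context."""
    claims = extract(response)
    return [(claim, classify(claim, context)) for claim in claims]


def toy_extract(response: str) -> List[str]:
    """Toy stand-in: one claim per sentence (the real extractor is a few-shot-prompted LLM)."""
    return [s.strip() + "." for s in response.split(".") if s.strip()]


def toy_classify(claim: str, context: str) -> ClaimLabel:
    """Toy stand-in: exact substring match (the real classifier is a CoT-prompted LLM)."""
    return ClaimLabel.SUPPORTED if claim.rstrip(".") in context else ClaimLabel.ABSENT


context = "The drug was approved in 2020. It is taken once a day."
response = "The drug was approved in 2020. It is taken twice a day."
for claim, label in measure_claims(response, context, toy_extract, toy_classify):
    print(f"{label.value:>12}: {claim}")
```

The toy matcher can only say that “It is taken twice a day.” is absent from the context; a capable classifier would presumably recognize it as contradicted, which is the kind of judgment that motivates using an LLM for this stage.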

Additionally, HalluMeasure classifies the claims into 10 distinct linguistic-error types (e.g., entity, temporal, and overgeneralization) that provide a fine-grained analysis of hallucination errors. Finally, we compute an aggregate hallucination score as the rate of unsupported claims (i.e., claims assigned any class other than supported) and calculate the distribution of fine-grained error types. This distribution gives LLM builders valuable insight into the kinds of errors their models make, facilitating targeted improvements.
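The score and the error-type distribution reduce to simple counting once every claim has a label. The sketch below assumes labels arrive as plain strings and that each unsupported claim carries error-type tags; the function names, the choice to score an empty response as 0, and the example tags are all hypothetical.

```python
from collections import Counter
from typing import Dict, List


def hallucination_score(labels: List[str]) -> float:
    """Fraction of claims whose label is anything other than 'supported'."""
    if not labels:
        return 0.0  # assumption: a response with no claims scores 0
    unsupported = sum(1 for label in labels if label != "supported")
    return unsupported / len(labels)


def error_type_distribution(error_types: List[str]) -> Dict[str, float]:
    """Share of each fine-grained error type among all error tags."""
    counts = Counter(error_types)
    total = sum(counts.values())
    return {etype: n / total for etype, n in counts.items()} if total else {}


labels = ["supported", "contradicted", "absent", "supported"]
error_types = ["temporal", "entity"]  # hypothetical tags for the two unsupported claims
print(hallucination_score(labels))            # 0.5
print(error_type_distribution(error_types))   # {'temporal': 0.5, 'entity': 0.5}
```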

Decomposing responses into claims

The first step in our approach is to decompose an LLM response into a set of claims. An intuitive definition of “claim” is the smallest unit of information that can be evaluated against the context; typically, it’s a single predicate with a subject and (optionally) an object.
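To make that definition concrete, a claim can be pictured as a small record with a subject, a predicate, and an optional object. This is only an illustration: the post describes claims as extracted text, and the `Claim` class and its `text` method are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Claim:
    """Hypothetical structured form of a claim: a predicate with a subject and an optional object."""
    subject: str
    predicate: str
    obj: Optional[str] = None  # the object is optional

    def text(self) -> str:
        """Render the claim as a short sentence fragment."""
        parts = [self.subject, self.predicate]
        if self.obj is not None:
            parts.append(self.obj)
        return " ".join(parts)


print(Claim("St. John's wort", "interacts with", "warfarin").text())
print(Claim("The trial", "ended").text())
```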

We chose to evaluate at the claim level because classifying individual claims improves hallucination detection accuracy, and the finer granularity of claims allows more precise measurement and localization of hallucinations. Unlike existing approaches, we extract the list of claims directly from the full response text.

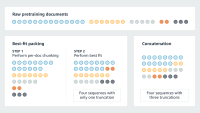

Our claim extraction model uses a few-shot prompt that begins with an initial instruction, followed by a set of rules outlining the task requirements and a selection of example responses accompanied by their manually extracted claims. This prompt teaches the LLM, without any update to model weights, to extract claims accurately from new responses. Once the claims have been extracted, we classify them by hallucination type.
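A prompt with that shape (instruction, rules, worked examples, then the new response) can be assembled as below, along with a parser for a numbered-list reply. This is a sketch under stated assumptions: the post does not publish its prompt or output format, so the layout, the numbered-list convention, the function names, and the instruction, rule, and example text are all hypothetical.

```python
import re
from typing import List, Tuple


def build_extraction_prompt(instruction: str, rules: List[str],
                            examples: List[Tuple[str, List[str]]],
                            response: str) -> str:
    """Assemble a few-shot prompt: instruction, rules, worked examples, then the new response."""
    lines = [instruction, "", "Rules:"]
    lines += [f"- {rule}" for rule in rules]
    for i, (example_response, claims) in enumerate(examples, start=1):
        lines += ["", f"Example {i}", f"Response: {example_response}", "Claims:"]
        lines += [f"{j}. {claim}" for j, claim in enumerate(claims, start=1)]
    # The model is expected to continue after the final "Claims:" line.
    lines += ["", f"Response: {response}", "Claims:"]
    return "\n".join(lines)


def parse_claims(llm_output: str) -> List[str]:
    """Read a numbered list ("1. ...", "2. ...") back into claim strings."""
    pattern = r"^[ \t]*\d+\.[ \t]+(.+)$"
    return [m.strip() for m in re.findall(pattern, llm_output, flags=re.MULTILINE)]


prompt = build_extraction_prompt(
    "Extract every claim from the response.",             # hypothetical instruction
    ["Each claim states exactly one fact."],               # hypothetical rule
    [("Aspirin thins blood and treats pain.",
      ["Aspirin thins blood.", "Aspirin treats pain."])],  # hypothetical worked example
    "The drug was approved in 2020 and is taken daily.",
)
print(prompt)
```

Because the examples do all the teaching, changing the extractor’s behavior means editing the rules or swapping examples rather than retraining anything.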

Advanced reasoning in claim classification

We initially followed the traditional method of directly prompting an LLM to classify the extracted claims, but this did not meet our performance standards. So we turned to chain-of-thought (CoT) reasoning, in which an LLM is asked not only to perform a task but to justify each action it takes. This has been shown to improve not only LLM performance but also model explainability.

We developed a five-step CoT prompt that combines curated examples of claim classification (few-shot prompting) with steps that instruct our claim classification LLM to thoroughly examine each claim’s faithfulness to the reference context and document the reasoning behind each examination.
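The sketch below shows one way to combine numbered reasoning steps with few-shot examples and then recover the final class from the model’s free-text reasoning. The post states that the prompt has five steps but does not list them, so the five steps here, the `Label:` output convention, and the function names are hypothetical placeholders.

```python
import re
from typing import List, Optional, Tuple

LABELS = ("supported", "absent", "contradicted", "partially supported", "unevaluatable")

# Hypothetical steps: the post says the prompt has five steps but does not list them.
COT_STEPS = [
    "Restate the claim in your own words.",
    "Quote the context passages that relate to the claim.",
    "Compare each part of the claim with those passages.",
    "Explain which parts are supported, contradicted, or missing.",
    "Write the final label on its own line as 'Label: <class>'.",
]


def build_cot_prompt(claim: str, context: str,
                     examples: List[Tuple[str, str, str, str]]) -> str:
    """Few-shot CoT prompt. Each example is (claim, context, reasoning, label)."""
    parts = ["Decide whether the claim is faithful to the context.",
             f"Possible labels: {', '.join(LABELS)}.",
             "Work through these steps, writing out your reasoning:"]
    parts += [f"Step {i}: {step}" for i, step in enumerate(COT_STEPS, start=1)]
    for ex_claim, ex_context, reasoning, label in examples:
        parts += ["", f"Context: {ex_context}", f"Claim: {ex_claim}",
                  f"Reasoning: {reasoning}", f"Label: {label}"]
    parts += ["", f"Context: {context}", f"Claim: {claim}", "Reasoning:"]
    return "\n".join(parts)


def parse_label(llm_output: str) -> Optional[str]:
    """Return the last 'Label: ...' value if it is one of the five classes, else None."""
    found = re.findall(r"^label:[ \t]*(.+?)[ \t.]*$", llm_output,
                       flags=re.IGNORECASE | re.MULTILINE)
    if not found:
        return None
    label = found[-1].lower()
    return label if label in LABELS else None


output = ("The claim says the feature is already in use.\n"
          "The context says it will be used next year.\n"
          "Label: Contradicted.")
print(parse_label(output))
```

Keeping the written reasoning alongside the parsed label is what gives CoT its explainability benefit: a reviewer can read why a claim was marked contradicted, not just that it was.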

After implementing this prompt, we compared HalluMeasure’s performance against other available solutions on the popular SummEval benchmark dataset. The results show that few-shot CoT prompting improves performance by 2 percentage points (from 0.78 to 0.80), taking us one step closer to the automated identification of LLM hallucinations at scale.

Fine-grained error classification

HalluMeasure enables more-targeted solutions that enhance LLM reliability by providing deeper insight into the types of hallucinations produced. Going beyond binary classifications and the commonly used natural-language-inference (NLI) categories of support, refute, and not enough information, we propose a novel set of error types developed by analyzing linguistic patterns in common LLM hallucinations. For example, the temporal-reasoning error type applies when a response states that a new innovation is already in use but the context says it will be used in the future.

Additionally, understanding the distribution of error types across an LLM’s responses allows more-targeted hallucination mitigation. For example, if most incorrect claims contradict a particular assertion in the context, one could investigate a possible common cause, such as allowing a large number of dialogue turns (e.g., more than 10). If reducing the number of turns decreases this error type, then restricting the number of turns or summarizing previous turns could mitigate the hallucinations.
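A first check of the turn-count hypothesis might compare contradiction rates between short and long dialogues, as sketched below. The `Dialogue` record, the 10-turn threshold taken from the example above, and the toy data are hypothetical; a gap between the two rates only suggests a cause, and the post’s actual test is whether reducing the number of turns lowers the error.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Dialogue:
    """Hypothetical record: number of turns plus one label per extracted claim."""
    num_turns: int
    claim_labels: List[str]


def contradiction_rate(dialogues: List[Dialogue]) -> float:
    """Fraction of all claims in these dialogues labeled 'contradicted'."""
    labels = [label for d in dialogues for label in d.claim_labels]
    return labels.count("contradicted") / len(labels) if labels else 0.0


def compare_by_turns(dialogues: List[Dialogue], max_turns: int = 10) -> Tuple[float, float]:
    """Contradiction rate for short (<= max_turns) vs. long (> max_turns) dialogues."""
    short = [d for d in dialogues if d.num_turns <= max_turns]
    long_ = [d for d in dialogues if d.num_turns > max_turns]
    return contradiction_rate(short), contradiction_rate(long_)


dialogues = [
    Dialogue(4, ["supported", "supported"]),
    Dialogue(15, ["contradicted", "supported"]),
    Dialogue(12, ["contradicted", "absent"]),
]
print(compare_by_turns(dialogues))  # (0.0, 0.5)
```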

While HalluMeasure can give scientists insight into the sources of a model’s hallucinations, the risks posed by generative AI continue to evolve. We therefore continue to drive innovation in responsible AI by exploring reference-free detection, employing dynamic few-shot prompting techniques tailored to specific use cases, and incorporating agentic AI frameworks.