At last week’s ACM Symposium on Operating Systems Principles (SOSP), my colleagues at Amazon Web Services and I won a best-paper award for our work using automated reasoning to validate that ShardStore — our new S3 storage node microservice — will do what it’s supposed to.

Amazon Simple Storage Service (S3) is our fundamental object storage service: fast, cheap, and reliable. ShardStore is the service we run on our storage hardware, responsible for durably storing S3 object data. It’s a ground-up rethinking of how we store and access data at the lowest level of S3. Because ShardStore is essential for the reliability of S3, it’s critical that it is free from bugs.

Formal verification involves mathematically specifying the important properties of our software and formally proving that our systems never violate those specifications — in other words, mathematically proving the absence of bugs. Automated reasoning is a way to find those proofs automatically.

Traditionally, formal verification comes with high overhead, requiring up to 10 times as much effort as building the system being verified. That’s just not practical for a system as large as S3.

For ShardStore, we instead developed a new lightweight automated-reasoning approach that gives us nearly all of the benefits of traditional formal proofs but with far lower overhead.

Our methods found 16 bugs in the ShardStore code that would have required time-consuming and labor-intensive testing to find otherwise — if they could have been found at all. And with our method, specifying the software properties to be verified increased the ShardStore codebase by only about 14% — versus the two- to tenfold increases typical of other formal-verification approaches.

Our method also allows the specifications to be written in the same language as the code — in this case, Rust. That allows developers to write new specifications themselves whenever they extend the functionality of the code. Initially, experts in formal verification wrote the specifications for ShardStore. But as the project has progressed, software engineers have taken over that responsibility. At this point, 18% of the ShardStore specifications have been written by developers.

Reference models

One of the central concepts in our approach is that of reference models, simplified instantiations of program components that can be used to track program state under different input conditions.

For instance, storage systems often use log-structured merge-trees (LSMTs), a sophisticated data structure designed to apportion data between memory and different tiers of storage, with protocols for transferring data that take advantage of the different storage media to maximize efficiency.

The state of an LSMT, however — data locations and the record of data access patterns — can be modeled using a simple hash table. A hash table can thus serve as a reference model for the tree.

In our approach, reference models are specified using executable code. Code verification is then a matter of ensuring that the state of a component instantiated in the code matches that of the reference model, for arbitrary inputs. In practice, we found that specifying reference models required, on average, about 1% as much code as the actual component implementations.
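As a rough illustration of the idea (not ShardStore's actual code), the sketch below checks a toy index against a hash-map reference model. The `KvStore` trait, the `ToyIndex` type, and the `first_divergence` helper are all hypothetical names introduced for this example. `ToyIndex` is only a stand-in for a real merge-tree: a small write buffer in front of a flushed layer.

```rust
use std::collections::{BTreeMap, HashMap};

/// One step of a test sequence, applied to both implementation and model.
#[derive(Clone, Debug)]
pub enum Op {
    Put(u64, Vec<u8>),
    Get(u64),
    Delete(u64),
}

/// Hypothetical interface shared by the implementation and its reference model.
pub trait KvStore {
    fn put(&mut self, key: u64, value: Vec<u8>);
    fn get(&self, key: u64) -> Option<Vec<u8>>;
    fn delete(&mut self, key: u64);
}

/// Reference model: a plain hash map with the same observable behavior.
#[derive(Default)]
pub struct ReferenceModel {
    map: HashMap<u64, Vec<u8>>,
}

impl KvStore for ReferenceModel {
    fn put(&mut self, key: u64, value: Vec<u8>) {
        self.map.insert(key, value);
    }
    fn get(&self, key: u64) -> Option<Vec<u8>> {
        self.map.get(&key).cloned()
    }
    fn delete(&mut self, key: u64) {
        self.map.remove(&key);
    }
}

/// Toy stand-in for an LSM-style index: a small write buffer in front of a
/// flushed layer, with `None` entries acting as deletion markers.
#[derive(Default)]
pub struct ToyIndex {
    buffer: BTreeMap<u64, Option<Vec<u8>>>,
    flushed: BTreeMap<u64, Vec<u8>>,
}

impl ToyIndex {
    /// Merges the buffer into the flushed layer once it holds two entries.
    fn maybe_flush(&mut self) {
        if self.buffer.len() < 2 {
            return;
        }
        for (key, entry) in std::mem::take(&mut self.buffer) {
            match entry {
                Some(value) => {
                    self.flushed.insert(key, value);
                }
                None => {
                    self.flushed.remove(&key);
                }
            }
        }
    }
}

impl KvStore for ToyIndex {
    fn put(&mut self, key: u64, value: Vec<u8>) {
        self.buffer.insert(key, Some(value));
        self.maybe_flush();
    }
    fn get(&self, key: u64) -> Option<Vec<u8>> {
        match self.buffer.get(&key) {
            // A buffered entry (value or deletion marker) shadows the flushed layer.
            Some(entry) => entry.clone(),
            None => self.flushed.get(&key).cloned(),
        }
    }
    fn delete(&mut self, key: u64) {
        self.buffer.insert(key, None);
        self.maybe_flush();
    }
}

/// Applies `ops` to both stores and returns the index of the first `Get`
/// whose results differ, or `None` if the two stores always agree.
pub fn first_divergence<I: KvStore, M: KvStore>(
    imp: &mut I,
    model: &mut M,
    ops: &[Op],
) -> Option<usize> {
    for (i, op) in ops.iter().enumerate() {
        match op {
            Op::Put(k, v) => {
                imp.put(*k, v.clone());
                model.put(*k, v.clone());
            }
            Op::Delete(k) => {
                imp.delete(*k);
                model.delete(*k);
            }
            Op::Get(k) => {
                if imp.get(*k) != model.get(*k) {
                    return Some(i);
                }
            }
        }
    }
    None
}
```

Calling `first_divergence` with a `ToyIndex`, a `ReferenceModel`, and a sequence of operations returns `None` when the two agree on every read. The model is much shorter than even this toy implementation because it ignores buffering and flushing entirely.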

Dependency tracking

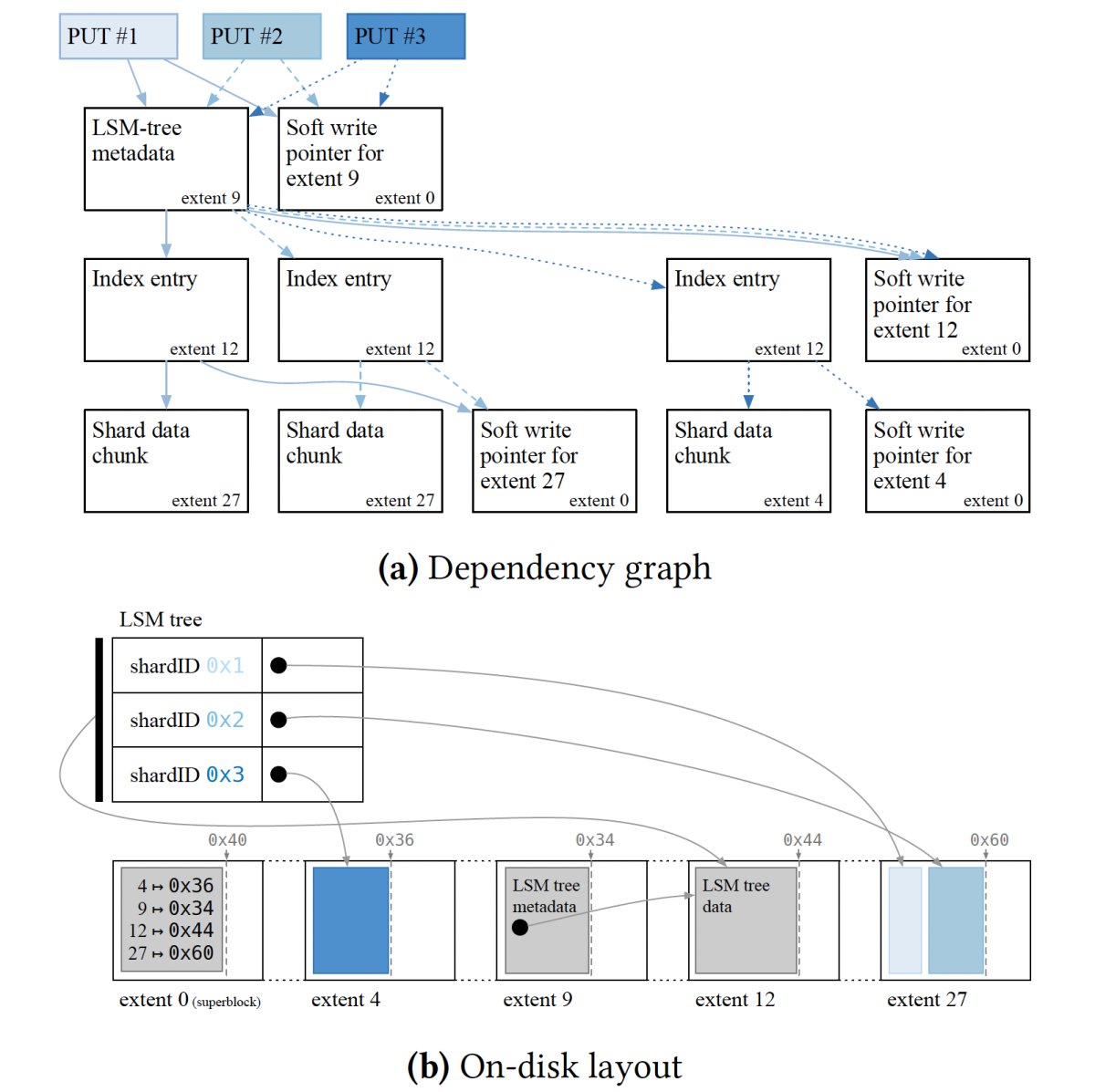

ShardStore uses LSMTs to track and update data locations. Each object stored by ShardStore is divided into chunks, and the chunks are written to extents, which are contiguous regions of physical storage on a disk. A typical disk has tens of thousands of extents. Writes within each extent are sequential, tracked by a write pointer that defines the next valid write position.

The simplicity of this model makes data writes very efficient. But it does mean that data chunks within an extent can’t be deleted individually. Deleting a chunk from an extent requires transferring all the other chunks in the extent elsewhere and then moving the write pointer back to the beginning of the extent.
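The append-only behavior described above can be sketched as follows. This is a minimal in-memory model written for illustration. The `Extent` type and the `reclaim` function are hypothetical and say nothing about ShardStore's real on-disk layout or API.

```rust
/// Hypothetical in-memory model of an append-only extent.
pub struct Extent {
    data: Vec<u8>,    // fixed-capacity backing storage
    write_ptr: usize, // next valid write position
}

impl Extent {
    pub fn new(capacity: usize) -> Self {
        Extent { data: vec![0; capacity], write_ptr: 0 }
    }

    /// Appends a chunk at the write pointer and returns its offset,
    /// or `None` if the chunk does not fit in the remaining space.
    pub fn append(&mut self, chunk: &[u8]) -> Option<usize> {
        let end = self.write_ptr.checked_add(chunk.len())?;
        if end > self.data.len() {
            return None;
        }
        let offset = self.write_ptr;
        self.data[offset..end].copy_from_slice(chunk);
        self.write_ptr = end;
        Some(offset)
    }

    /// Reads `len` bytes at `offset`; only data below the write pointer is valid.
    pub fn read(&self, offset: usize, len: usize) -> Option<&[u8]> {
        let end = offset.checked_add(len)?;
        if end > self.write_ptr {
            return None;
        }
        Some(&self.data[offset..end])
    }

    /// Moves the write pointer back to the start, invalidating all chunks.
    pub fn reset(&mut self) {
        self.write_ptr = 0;
    }
}

/// Frees space in `src` by copying the chunks that are still live
/// (given as `(offset, len)` pairs) into `dst` and then resetting `src`.
/// Returns the new offsets in `dst`. If a copy fails, `src` is left
/// untouched, although `dst` may already hold some of the copies.
pub fn reclaim(
    src: &mut Extent,
    dst: &mut Extent,
    live: &[(usize, usize)],
) -> Option<Vec<usize>> {
    let mut new_offsets = Vec::with_capacity(live.len());
    for &(offset, len) in live {
        let chunk = src.read(offset, len)?.to_vec();
        new_offsets.push(dst.append(&chunk)?);
    }
    src.reset();
    Some(new_offsets)
}
```

Deleting one chunk is therefore expressed as calling `reclaim` with every chunk except the deleted one. The caller must then update whatever index maps chunks to locations, using the returned offsets.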

The sequence of procedures required to write a single chunk of data using ShardStore (updating the merge-tree, writing the chunk, advancing the write pointer, and so on) creates sets of dependencies between successive write operations. For instance, the position of the write pointer within an extent depends on the last write performed within that extent.

Our approach requires that we track dependencies across successive operations, which we do by constructing a dependency graph on the fly. ShardStore uses the dependency graph to decide how to write data to disk most efficiently while ensuring that its state remains consistent after recovery from a crash. We use formal verification to check that the system always constructs these graphs correctly and so always remains consistent.
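One simplified way to picture such a graph is sketched below. It assumes that a set of persisted writes is consistent exactly when every persisted write's dependencies are also persisted. That is an assumption made for this example, not the precise property checked for ShardStore. The `DepGraph` type and its methods are hypothetical.

```rust
use std::collections::HashSet;

/// Hypothetical dependency graph over write operations. `deps[i]` lists the
/// writes that must be durable before write `i` may be considered durable.
#[derive(Default)]
pub struct DepGraph {
    deps: Vec<Vec<usize>>,
}

impl DepGraph {
    /// Registers a new write and returns its id. Dependencies must refer to
    /// writes registered earlier, so the graph is acyclic by construction.
    pub fn add_write(&mut self, deps: &[usize]) -> usize {
        let id = self.deps.len();
        assert!(deps.iter().all(|&d| d < id), "dependencies must already exist");
        self.deps.push(deps.to_vec());
        id
    }

    /// True if `persisted` is closed under dependencies: every persisted
    /// write is known to the graph and has all of its dependencies persisted.
    pub fn is_consistent(&self, persisted: &HashSet<usize>) -> bool {
        persisted.iter().all(|&w| {
            self.deps
                .get(w)
                .map_or(false, |ds| ds.iter().all(|d| persisted.contains(d)))
        })
    }

    /// Writes that can safely be issued next: not yet persisted, and with
    /// every dependency already persisted. Returned in ascending id order.
    pub fn ready(&self, persisted: &HashSet<usize>) -> Vec<usize> {
        (0..self.deps.len())
            .filter(|w| {
                !persisted.contains(w)
                    && self.deps[*w].iter().all(|d| persisted.contains(d))
            })
            .collect()
    }
}
```

In this picture, a scheduler is free to reorder or batch any writes returned by `ready`, and a crash-consistency check amounts to asserting `is_consistent` on whatever subset of writes survived the crash.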

Test procedures

In our paper, we describe a range of tests, beyond crash consistency, that our method enables, such as concurrent-execution tests and tests of the serializers that map the separate elements of a data structure to sequential locations in memory or storage.

We also describe some of our optimizations to ensure that our verification is thorough. For instance, our method generates random sequences of inputs to test for specification violations. If a violation is detected, the method systematically pares down the input sequence to identify which specific input or inputs caused the error.
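The generate-then-minimize loop can be sketched as below. This is a simplified stand-in, assuming a hand-rolled pseudorandom generator and a greedy one-element-at-a-time reduction. A production harness would more likely use a property-based testing library. The names `XorShift`, `random_sequence`, and `minimize` are hypothetical.

```rust
/// Small xorshift pseudorandom generator, used only to keep the example
/// dependency-free.
pub struct XorShift(u64);

impl XorShift {
    pub fn new(seed: u64) -> Self {
        // The xorshift state must be nonzero.
        XorShift(seed.max(1))
    }

    pub fn next_u64(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }
}

/// Generates `len` pseudorandom inputs, each in `0..alphabet`
/// (an `alphabet` of 0 is treated as 1).
pub fn random_sequence(rng: &mut XorShift, len: usize, alphabet: u64) -> Vec<u64> {
    (0..len).map(|_| rng.next_u64() % alphabet.max(1)).collect()
}

/// Greedy minimization: tries to drop each element in turn and keeps the
/// shorter sequence whenever it still fails. This is a single pass, so the
/// result is small but not guaranteed to be the smallest failing sequence.
pub fn minimize<T: Clone>(input: &[T], fails: impl Fn(&[T]) -> bool) -> Vec<T> {
    let mut current = input.to_vec();
    let mut i = 0;
    while i < current.len() {
        let mut candidate = current.clone();
        candidate.remove(i);
        if fails(&candidate) {
            // Element i was not needed to trigger the failure; retry index i.
            current = candidate;
        } else {
            // Element i is needed; keep it and move on.
            i += 1;
        }
    }
    current
}
```

Here `fails` would wrap a check such as comparing the implementation against its reference model. The minimized sequence is what a developer would then debug.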

We also bias the random-input selector so that it selects inputs that target the same storage pathways, to maximize the likelihood of detecting an error. If each input read from or wrote to a different object, for instance, there would be no opportunity for a data inconsistency to surface.
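A biased selector of this kind might look like the following sketch, which reuses a previously chosen key with a configurable probability. The `Lcg` generator, the `pick_key` function, and the `bias_percent` parameter are assumptions made for this example rather than details from the paper.

```rust
/// Minimal linear congruential generator, used only to keep the example
/// dependency-free. The multiplier and increment are widely used 64-bit
/// LCG constants.
pub struct Lcg(u64);

impl Lcg {
    pub fn new(seed: u64) -> Self {
        Lcg(seed)
    }

    pub fn next_u64(&mut self) -> u64 {
        self.0 = self
            .0
            .wrapping_mul(6364136223846793005)
            .wrapping_add(1442695040888963407);
        // The high bits of an LCG are better distributed than the low bits.
        self.0 >> 33
    }
}

/// Picks the key for the next operation. With probability `bias_percent`
/// out of 100 it reuses a key from `used`, so that operations collide on the
/// same objects. Otherwise it draws a key uniformly from `0..key_space`
/// and records it in `used`.
pub fn pick_key(
    rng: &mut Lcg,
    used: &mut Vec<u64>,
    key_space: u64,
    bias_percent: u64,
) -> u64 {
    let reuse = !used.is_empty() && rng.next_u64() % 100 < bias_percent;
    if reuse {
        used[(rng.next_u64() % used.len() as u64) as usize]
    } else {
        let key = rng.next_u64() % key_space.max(1);
        used.push(key);
        key
    }
}
```

With a large key space and no bias, two operations in a short sequence almost never touch the same key. Raising `bias_percent` makes overwrites, reads after deletes, and similar interactions common.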

We use our lightweight automated-reasoning techniques to validate every single deployment of ShardStore. Before any change reaches production, we check its behavior in hundreds of millions of scenarios by running our automated tools using AWS Batch.

To support this type of scalable checking, we developed and open-sourced the new Shuttle model checker for Rust code, which we use to validate concurrency properties of ShardStore. Together, these approaches provide a continuous and automated correctness mechanism for one of S3’s most important microservices.