At this year’s Computer Vision and Pattern Recognition Conference (CVPR) — the premier computer vision conference — Amazon Web Services’ vice president for AI and data, Swami Sivasubramanian, gave a keynote address titled “Computer vision at scale: Driving customer innovation and industry adoption”. What follows is an edited version of that talk.

Amazon has been working on AI for more than 25 years, and that includes our ongoing innovations in computer vision. Computer vision is part of Amazon’s heritage, ethos, and future — and today, we’re using it in many parts of the company.

Computer vision technology helps power our e-commerce recommendations engine on Amazon.com, as well as the customer reviews you see on our product pages. Our Prime Air drones use computer vision and deep learning, and the Echo Show uses computer vision to streamline customer interactions with Alexa. Every day, more than half a million vision-enabled robots assist with stocking inventory, filling orders, and sorting packages for delivery.

I’d like to take a closer look at a few such applications, starting with Amazon Ads.

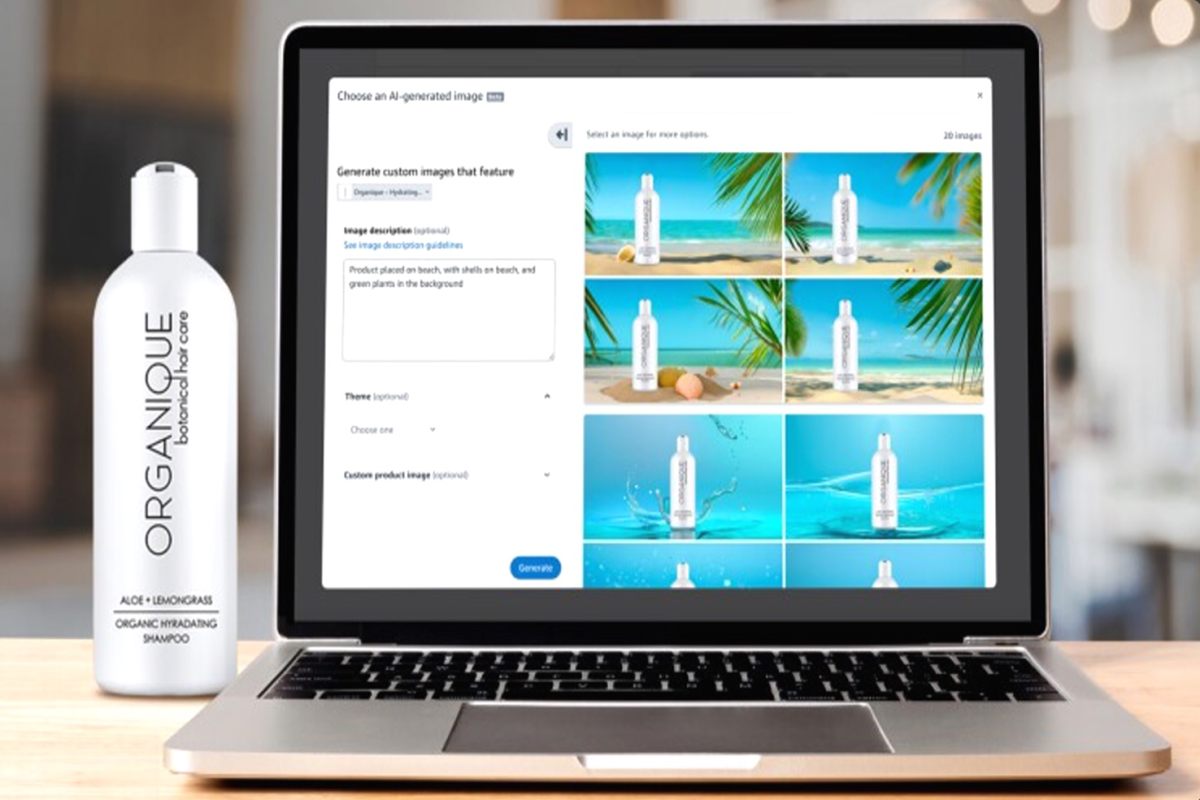

Amazon Ads Image Generator

Advertisers often struggle to create visually appealing and effective ads, especially when it comes to generating multiple variations and optimizing for different placements and audiences. That’s why we developed an AI-powered image generation tool called Amazon Ads Image Generator.

With this tool, advertisers can input product images, logos, and text prompts, and an AI model will generate multiple versions of visually appealing ads tailored to their brands and messaging. The tool aims to simplify and streamline the ad creation process for advertisers, allowing them to produce engaging visuals more efficiently and cost-effectively.

To build the Image Generator, we combined Amazon machine learning services, such as Amazon SageMaker and Amazon SageMaker JumpStart, with human-in-the-loop workflows that help ensure the generated images are high quality and appropriate. The architecture consists of modular microservices, with separate components for model development, the model registry, model lifecycle management, model selection, and job tracking throughout the service, as well as a customer-facing API.

Amazon One

In the retail setting, we’re reimagining identification, entry, and payment with Amazon One, a fast, convenient, and contactless experience that lets customers leave their wallets — and even their phones — at home. Instead, they can use the palms of their hands to enter a facility, identify themselves, pay, present loyalty cards or event tickets, and even verify their ages.

Amazon One is able to recognize the unique lines, grooves, and ridges of your palm and the pattern of veins just under the skin using infrared light. At registration, proprietary algorithms capture and encrypt your palm image within seconds. The Amazon One device uses this information to create your palm signature and connect it to your credit card or your Amazon account.

To ensure Amazon One’s accuracy, we trained it on millions of synthetically generated images with subtle variations, such as illumination conditions and hand poses. We also trained our system to detect fake hands, such as a highly detailed silicone hand replica, and reject them.

Protecting customer data and safeguarding privacy are foundational design principles with Amazon One. Palm images are never stored on-device. Rather, the images are immediately encrypted and sent to a highly secure zone in the Amazon Web Services (AWS) cloud, custom-built for Amazon One, where the customer’s palm signature is created.

Customers like Crunch Fitness are taking advantage of Amazon One and features like the membership linking capability, which addresses a traditional pain point for both customers and the fitness industry. Crunch Fitness announced that it was the first fitness brand to introduce Amazon One as an entry option for its members at select locations nationwide.

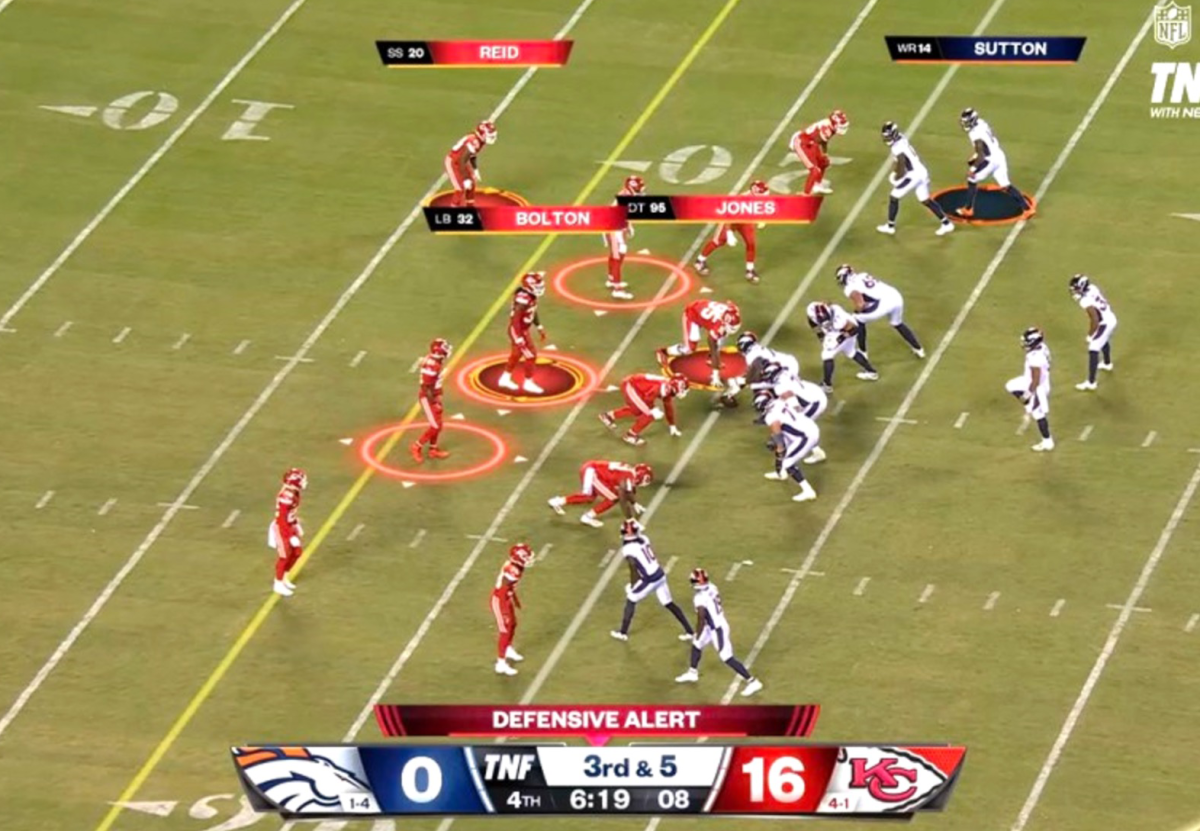

NFL Next Gen Stats

Twenty-five years ago, the height of innovation in NFL broadcasts was the superimposition of a yellow line on the field to mark the first-down distance. These types of on-screen fan experiences have come a long way since then, thanks in large part to AI and machine learning (ML) technologies.

For example, as part of our ongoing partnership with the NFL, we’re delivering Prime Vision with Next Gen Stats during Thursday Night Football to provide insights gleaned by tracking RFID chips embedded in players’ shoulder pads.

One of our most recent innovations is the Defensive Alerts feature shown below, which tracks the movements of defensive players before the snap and uses an ML model to identify “players of interest” most likely to rush the quarterback (circled in red). This unique capability came out of a collaboration between the Thursday Night Football producers, engineers, and our computer vision team.

In recent months, Amazon Science has profiled a range of other Amazon computer vision projects, from Project P.I., a fulfillment center technology that uses generative AI and computer vision to help spot, isolate, and remove imperfect products before they’re delivered to customers, to Virtual Try-All, which enables customers to visualize any product in any personal setting.

But for now, I’d like to turn from Amazon products and services that rely on computer vision to the ways in which AWS puts computer vision technologies directly into our customers’ hands.

The AWS ML stack

At AWS, our mission is to make it easy for every developer, data scientist, and researcher to build intelligent applications and leverage AI-enabled services that unlock new value from their data. We do this with the industry’s most comprehensive set of ML tools, which we think of as constituting a three-layer stack.

At the top of the stack are applications that rely on large language models (LLMs), like Amazon Q, our generative-AI-powered assistant for accelerating software development and helping customers extract useful information from their data.

At the middle layer, we offer a wide variety of services that enable developers to build powerful AI applications, from our computer vision services and devices to Amazon Bedrock, a secure and easy way to build generative-AI apps with the latest and greatest foundation models and the broadest set of capabilities for security, privacy, and responsible AI.

And at the bottom layer, we provide high-performance, cost-effective infrastructure that is purpose-built for ML.

Let’s look at a few examples in more detail, starting with one of our most popular vision services: Amazon Rekognition.

Amazon Rekognition

Amazon Rekognition is a fully managed service that uses ML to automatically extract information from images and video files so that customers can build computer vision models and apps more quickly, at lower cost, and with customization for different business needs.

This includes support for a variety of use cases, from content moderation, which enables the detection of unsafe or inappropriate content across images and videos, to custom labels that enable customers to detect objects like brand logos. And most recently we introduced an anti-spoofing feature to help customers verify that only real users, and not spoofs or bad actors, can access their services.
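As a rough illustration of the content moderation use case, the sketch below wraps Rekognition's `DetectModerationLabels` operation as exposed by boto3. The helper name `find_moderation_labels`, the default threshold, and the flattened output shape are assumptions made for this example, not part of the service. The client is passed in as a parameter so the helper can be exercised without AWS credentials.

```python
from typing import Any, Dict, List


def find_moderation_labels(
    client: Any, image_bytes: bytes, min_confidence: float = 80.0
) -> List[Dict[str, Any]]:
    """Return the moderation labels Rekognition reports for one image.

    `client` is expected to behave like boto3.client("rekognition").
    The 80.0 default threshold is an arbitrary choice for this sketch.
    """
    response = client.detect_moderation_labels(
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    # Flatten each label to the fields most callers need.
    return [
        {
            "name": label["Name"],
            "parent": label.get("ParentName", ""),
            "confidence": label["Confidence"],
        }
        for label in response.get("ModerationLabels", [])
    ]
```

In real use, the caller would create the client with `boto3.client("rekognition")` and pass in the raw bytes of a JPEG or PNG file. An empty list means no label met the confidence threshold.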

Amazon Textract

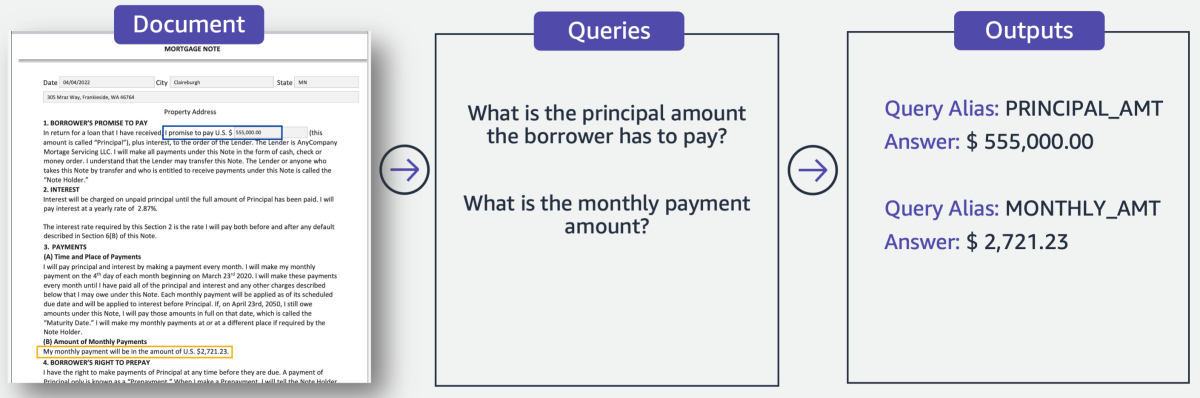

Amazon Textract uses optical character recognition (OCR) to convert images of text, whether from a scanned document, a PDF, or a photo of a document, into machine-encoded text. But it goes beyond traditional OCR technology by identifying not only each character and word but also the contents of fields in forms and information stored in tables.

For example, when presented with queries like the ones below, Textract can create specialized response objects by leveraging a combination of visual, spatial, and language cues. Each object assigns its query a short label, or “alias”. It then provides an answer to the query, the confidence it has in that answer, and the location of the answer on the page.
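The query-and-response flow described above can be sketched with boto3's Textract `analyze_document` call and its `QUERIES` feature type. The helper names (`ask_document`, `pair_queries_with_answers`) and the `QueryAnswer` record are hypothetical, introduced only for this example. The parsing assumes the response links each `QUERY` block to its `QUERY_RESULT` blocks through an `ANSWER` relationship.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class QueryAnswer:
    alias: str                       # short label assigned to the query
    question: str                    # the natural-language query text
    answer: str                      # text Textract extracted as the answer
    confidence: float                # confidence in that answer (0-100)
    bounding_box: Optional[Dict[str, float]]  # location of the answer on the page


def pair_queries_with_answers(blocks: List[Dict[str, Any]]) -> List[QueryAnswer]:
    """Match each QUERY block to the QUERY_RESULT blocks it points at."""
    by_id = {block["Id"]: block for block in blocks}
    results: List[QueryAnswer] = []
    for block in blocks:
        if block["BlockType"] != "QUERY":
            continue
        for relationship in block.get("Relationships", []):
            if relationship["Type"] != "ANSWER":
                continue
            for answer_id in relationship["Ids"]:
                answer = by_id[answer_id]
                results.append(
                    QueryAnswer(
                        alias=block["Query"].get("Alias", ""),
                        question=block["Query"]["Text"],
                        answer=answer.get("Text", ""),
                        confidence=answer.get("Confidence", 0.0),
                        bounding_box=answer.get("Geometry", {}).get("BoundingBox"),
                    )
                )
    return results


def ask_document(
    client: Any, document_bytes: bytes, queries: Dict[str, str]
) -> List[QueryAnswer]:
    """Send alias -> question pairs to Textract and return the paired answers.

    `client` is expected to behave like boto3.client("textract").
    """
    response = client.analyze_document(
        Document={"Bytes": document_bytes},
        FeatureTypes=["QUERIES"],
        QueriesConfig={
            "Queries": [
                {"Text": text, "Alias": alias} for alias, text in queries.items()
            ]
        },
    )
    return pair_queries_with_answers(response["Blocks"])
```

A call such as `ask_document(client, pdf_page_bytes, {"PATIENT_NAME": "What is the patient's name?"})` would return one record per answer, where the alias and question are made-up examples. Queries for which Textract finds no answer simply produce no record.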

Amazon Bedrock

Finally, let’s look at how we’re enabling computer vision technologies with Amazon Bedrock, a fully managed service that makes it easy for customers to build and scale generative-AI applications. Tens of thousands of customers have already selected Amazon Bedrock as the foundation for their generative-AI strategies because it gives them access to the broadest selection of first- and third-party LLMs and foundation models. This includes models from AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, and Stability AI, as well as our own Titan family of models.

One of those models is the Titan Image Generator, which enables customers to produce high-quality, realistic images or enhance existing images using natural-language prompts. Amazon Science reported on the Titan Image Generator when we launched it last year at our re:Invent conference.

Responsible AI

We remain committed to the responsible development and deployment of AI technology, around which we made a series of voluntary commitments at the White House last year. To that end, we’ve launched new features and techniques such as invisible watermarks and a new method for assessing “hallucinations” in generative models.

By default, all Titan-generated images contain invisible watermarks, which are designed to help reduce the spread of misinformation by providing a discreet mechanism for identifying AI-generated images. AWS is among the first model providers to widely release built-in invisible watermarks that are integrated into the image outputs and are designed to be tamper-resistant.

Hallucination occurs when the data generated by a generative model do not align with reality, as represented by a knowledge base of “facts”. The alignment between representation and fact is referred to as grounding. In the case of vision-language models, the knowledge base to which generated text must align is the evidence provided in images. There is a considerable amount of work ongoing at Amazon on visual grounding, some of which was presented at CVPR.

One of the necessary elements of controlling hallucinations is to be able to measure them. Consider, for example, the following image-prompt pair and the output generated by a vision-language (VL) model. If the model extends its output with the highest-probability next word, it will hallucinate a fridge where the image includes none:

Existing datasets for evaluating hallucinations typically consist of specific questions like “Is there a refrigerator in this image?” But at CVPR, our team presented a paper describing a new benchmark called THRONE, which leverages LLMs themselves to evaluate hallucinations in response to free-form, open-ended prompts such as “Describe what you see”.
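To make the idea of measuring hallucinations concrete, here is a minimal sketch of an object-level score for a free-form description. It is not the THRONE metric itself. It assumes an earlier step (an LLM judge, in the benchmark's case) has already reduced the model's response to a set of object names, and that a ground-truth set of objects in the image is available. The function and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class HallucinationScore:
    hallucinated: List[str]  # objects mentioned but not in the image
    precision: float         # share of mentioned objects that are really there
    recall: float            # share of real objects that the model mentioned


def score_description(mentioned: Set[str], present: Set[str]) -> HallucinationScore:
    """Compare objects a response asserts against objects actually in the image."""
    correct = mentioned & present
    # Empty sets are treated as trivially perfect to avoid dividing by zero.
    precision = len(correct) / len(mentioned) if mentioned else 1.0
    recall = len(correct) / len(present) if present else 1.0
    return HallucinationScore(
        hallucinated=sorted(mentioned - present),
        precision=precision,
        recall=recall,
    )
```

For a kitchen image containing an oven, a sink, a microwave, and a table, a description that mentions an oven, a sink, and a refrigerator would have the refrigerator flagged as hallucinated. Low precision signals hallucination, while low recall signals that the description left out objects that are present.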

In other work, AWS researchers have found that one of the reasons modern transformer-based vision-language models hallucinate is that they cannot retain information about the input image prompt: they progressively “forget” it as more tokens are generated and longer contexts are used.

Recently, state space models have resurfaced ideas from the ’70s in a modern key, stacking dynamical models into modular architectures that have arbitrarily long memory residing in their state. But that memory, much like human memory, grows lossier over time, so it cannot be used effectively for grounding. Hybrid models that combine state space models and attention-based networks (such as transformers) are also gaining popularity, given their high recall capabilities over longer contexts. New variants appear in the literature almost every week.

At Amazon, we want to not only make the existing models available for builders to use but also empower researchers to explore and expand the current set of hybrid models. For this reason, we plan to open-source a class of modular hybrid architectures that are designed to make both memory and inference computation more efficient.

To enable efficient memory, these architectures use a more general elementary module that seamlessly integrates both eidetic (exact) and fading (lossy) memory, so the model can learn the optimal tradeoff. To make inference more efficient, we optimize core modules to run on the most efficient hardware — specifically, AWS Trainium, our purpose-built chip for training machine learning models.
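The distinction between eidetic and fading memory can be illustrated with a toy example. This is not the architecture described above. It is a scalar sketch in which a fixed-size window keeps recent inputs exactly, and inputs that fall out of the window are folded into an exponentially decaying summary. The class name, the window size, and the decay rule are all assumptions. In a learned model the tradeoff would be trained, whereas here it is set by hand.

```python
from collections import deque
from typing import Deque, List, Tuple


class ToyHybridMemory:
    """Keeps the last `window` inputs exactly and a lossy summary of the rest."""

    def __init__(self, window: int, decay: float) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        if not 0.0 <= decay <= 1.0:
            raise ValueError("decay must be between 0 and 1")
        self.recent: Deque[float] = deque(maxlen=window)  # eidetic (exact) part
        self.state = 0.0                                  # fading (lossy) part
        self.decay = decay

    def write(self, x: float) -> None:
        # When the window is full, the oldest input is about to be evicted:
        # blend it into the fading state instead of discarding it outright.
        if len(self.recent) == self.recent.maxlen:
            evicted = self.recent[0]
            self.state = self.decay * self.state + (1.0 - self.decay) * evicted
        self.recent.append(x)

    def read(self) -> Tuple[List[float], float]:
        """Return the exact recent inputs and the lossy summary of older ones."""
        return list(self.recent), self.state
```

With a window of 2, writing four inputs leaves the last two retrievable exactly, while the first two survive only as a single blended number. A larger window buys more exact recall at the cost of more memory, which is the tradeoff the paragraph above refers to.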

It’s an exciting time for AI research, with innovations emerging at a breakneck pace. Amazon is committed to making those innovations available to our customers, both indirectly, in the AI-enabled products and services we offer, and directly, through AWS’s commitment to democratize AI.