This year, the Amazon Search team had two papers accepted at the Conference on Computer Vision and Pattern Recognition (CVPR), both focusing on image-text feature alignment, or training a neural network to produce joint representations of images and their associated texts. Such representations are useful for a range of computer vision tasks, including text-based image search and image-based text search.

Typically, joint image-text models are trained using contrastive learning, in which the model is fed training samples in pairs, one positive and one negative, and learns to pull matching (positive) examples together in the representation space while pushing non-matching (negative) examples apart. For example, a model might be trained on images paired with two text labels, one correct and one random, and it would learn to associate each image with its correct label in a shared, multimodal representation space.
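As a minimal illustration (a generic sketch, not code from either paper), the widely used symmetric InfoNCE loss below generalizes the one-positive, one-negative setup to a whole batch. Each image's positive is its own caption, and the other N − 1 captions in the batch serve as negatives. The in-batch negatives, the cosine similarity, the function names, and the temperature value of 0.07 are all assumptions chosen for illustration.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along one axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def contrastive_loss(img: np.ndarray, txt: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss over a batch of N image-text pairs.

    Row i of `img` and row i of `txt` are a positive pair; every other
    row in the batch acts as a negative (an assumed, CLIP-style setup).
    """
    img, txt = l2_normalize(img), l2_normalize(txt)
    logits = img @ txt.T / temperature            # (N, N) scaled similarities
    idx = np.arange(len(img))                      # positives lie on the diagonal
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # image -> text
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # text -> image
    return float((loss_i2t + loss_t2i) / 2)
```

With orthonormal toy embeddings, such as `np.eye(4)` for both images and captions, the loss is close to zero. Pairing each image with the wrong caption (`np.eye(4)[[1, 2, 3, 0]]`) pushes it above 10, which is the pull-together, push-apart behavior described above.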

Both of our CVPR papers grow out of the same observation: simply enforcing alignment between modalities through strong contrastive learning can cause the learned features to degenerate. To address this problem, our papers explore different ways of imposing additional structure on the representation space, so that the model learns more robust image-text alignments.

Representation codebooks

A neural network trained on multimodal data naturally tends to cluster data of the same modality together in the representation space. This tendency is at odds with the goal of image-text representation learning, which is to cluster images with their associated texts.

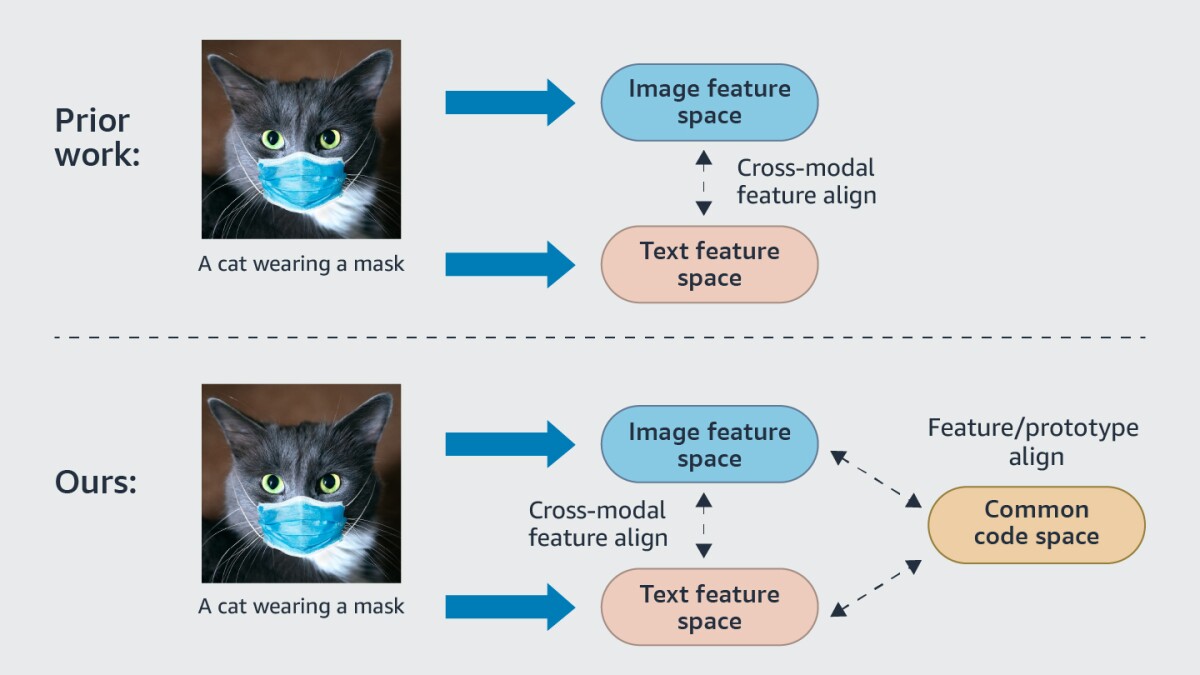

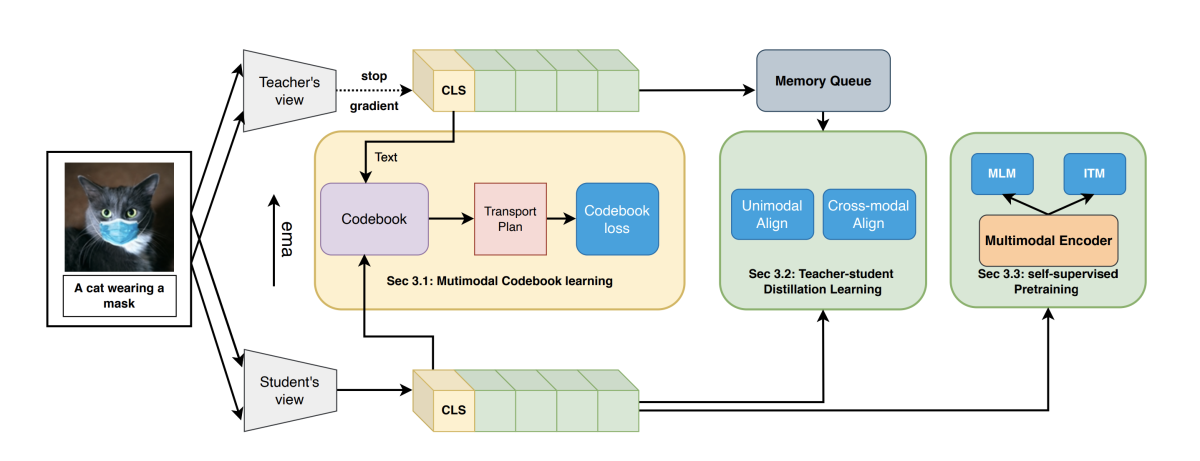

To combat this tendency, in “Multi-modal alignment using representation codebook”, we propose aligning images and texts at a higher, more stable level by using cluster representations. Specifically, we treat image and text as two “views” of the same entity and use a codebook of cluster centers to span the joint vision-language coding space. That is, each center anchors a cluster of related concepts, whether those concepts are expressed visually or textually.
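To make the idea of a shared codebook concrete, here is a minimal NumPy sketch, not the paper's code. Image and text embeddings are both compared against the same K centers, and a temperature-scaled softmax turns the similarities into soft cluster assignments. The name `codebook_assign`, the cosine similarity, and the softmax assignment rule are assumptions for illustration. In real training the codebook would be a learnable parameter; this forward-only sketch does not optimize it.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def codebook_assign(features: np.ndarray, codebook: np.ndarray,
                    temperature: float = 0.1) -> np.ndarray:
    """Soft-assign each embedding to K cluster centers shared by both modalities.

    features: (N, D) image embeddings OR text embeddings.
    codebook: (K, D) cluster centers (a learnable parameter during real training).
    Returns an (N, K) array; each row is a probability distribution over centers.
    Assumption: cosine similarity + temperature softmax as the assignment rule.
    """
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    c = codebook / np.linalg.norm(codebook, axis=1, keepdims=True)
    return softmax(f @ c.T / temperature, axis=1)


# Toy usage: an image and its caption land on the same center.
codebook = np.eye(3)                   # three toy centers in a 3-D space
img = np.array([[0.9, 0.1, 0.0]])      # image embedding near center 0
txt = np.array([[1.0, 0.0, 0.2]])      # caption embedding, also near center 0
print(codebook_assign(img, codebook).argmax(axis=1),
      codebook_assign(txt, codebook).argmax(axis=1))  # prints [0] [0]
```

Because both modalities are scored against the same centers, alignment can be judged by whether an image and its text share a cluster, rather than by comparing individual embeddings directly.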

During training, we contrast positive and negative samples via their cluster assignments while simultaneously optimizing the cluster centers. To further smooth the learning process, we adopt a teacher-student distillation paradigm, in which the output of a teacher model provides training targets for a student model. Specifically, we use the model's output for one view (image or text) to guide the student's learning of the other. We evaluated our approach on common vision-language benchmarks and obtained a new state of the art on zero-shot cross-modality retrieval, that is, image-based text retrieval and text-based image retrieval on datasets unseen during training. Our model is also competitive on various other transfer tasks.
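The cross-view teacher-student step can be sketched as follows, again as a hypothetical illustration rather than the paper's implementation. It assumes a momentum (exponential moving average) teacher, a common choice in self-distillation that is not stated above, and it assumes the teacher's assignments are sharpened with a lower temperature. The teacher's codebook assignment for the image view is the target for the student's text-view assignment, and vice versa. All names and hyperparameter values are made up, and the sketch omits the contrastive term over cluster assignments.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def assign(features, codebook, temperature):
    """Soft cluster assignments over a shared codebook (cosine similarity + softmax)."""
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    c = codebook / np.linalg.norm(codebook, axis=1, keepdims=True)
    return softmax(f @ c.T / temperature, axis=1)


def cross_view_distillation_loss(teacher_img, teacher_txt, student_img, student_txt,
                                 codebook, t_teacher=0.05, t_student=0.1):
    """Each view's teacher assignment is the target for the OTHER view's student.

    Hypothetical sketch: the temperatures and the cross-entropy form are assumptions.
    """
    eps = 1e-12                                              # avoids log(0)
    target_img = assign(teacher_img, codebook, t_teacher)    # sharper teacher targets
    target_txt = assign(teacher_txt, codebook, t_teacher)
    pred_img = assign(student_img, codebook, t_student)
    pred_txt = assign(student_txt, codebook, t_student)
    # Image-view teacher guides text-view student, and vice versa.
    ce_img_to_txt = -(target_img * np.log(pred_txt + eps)).sum(axis=1).mean()
    ce_txt_to_img = -(target_txt * np.log(pred_img + eps)).sum(axis=1).mean()
    return float((ce_img_to_txt + ce_txt_to_img) / 2)


def ema_update(teacher_params, student_params, momentum=0.995):
    """Assumed momentum teacher: move each teacher weight slowly toward the student's."""
    return {name: momentum * teacher_params[name] + (1 - momentum) * student_params[name]
            for name in teacher_params}
```

When the image and text of each pair fall on the same center, the loss is near zero; swapping captions between pairs makes it large. In a training loop, gradients would flow only through the student, and `ema_update` would be applied after each student step so the teacher changes slowly and provides stable targets.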

Triple contrastive learning

The success of contrastive learning in training image-text alignment models has been attributed to its ability to maximize the mutual information between image and text pairs, or the extent to which image features can be predicted from text features and vice versa.
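For reference, one standard way to make this connection precise is the InfoNCE bound from contrastive predictive coding; it is a general result, not the specific objective of either paper. Here x_i is an image, y_i its paired text, s a similarity score such as cosine similarity, τ a temperature, and N the number of candidate texts per image (one positive plus N − 1 negatives):

```latex
\mathcal{L}_{\mathrm{NCE}}
  = -\,\mathbb{E}\left[
      \log \frac{\exp\big(s(x_i, y_i)/\tau\big)}
                {\sum_{j=1}^{N} \exp\big(s(x_i, y_j)/\tau\big)}
    \right],
\qquad
I(X; Y) \;\ge\; \log N - \mathcal{L}_{\mathrm{NCE}}
```

Minimizing the contrastive loss therefore raises a lower bound on the mutual information. Because the loss is non-negative, the bound can never exceed log N, so larger pools of negatives permit a higher bound.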

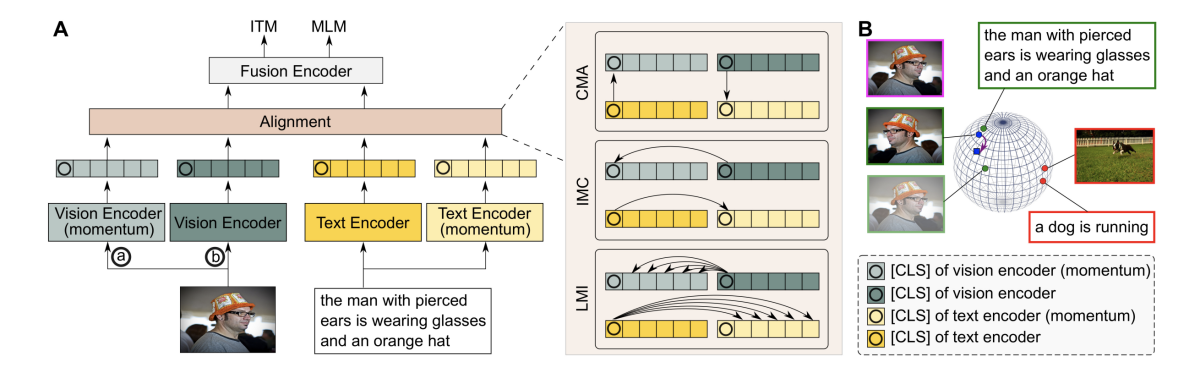

However, simply performing cross-modal alignment (CMA) ignores potentially useful correlations within each modality. For instance, although CMA maps image-text pairs close together in the embedding space, it does not ensure that similar inputs from the same modality stay close to each other. The problem is compounded when the pretraining data is noisy.

In “Vision-language pre-training with triple contrastive learning”, we address this problem using triple contrastive learning (TCL) for vision-language pre-training. This approach leverages both cross-modal and intra-modal self-supervision, that is, training on tasks contrived so that they don’t require labeled training examples. Besides CMA, TCL introduces an intra-modal contrastive objective that provides complementary benefits in representation learning.
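A minimal NumPy sketch of how cross-modal and intra-modal terms might combine follows. It is not the TCL implementation: it assumes each term is a symmetric InfoNCE loss with in-batch negatives, that the intra-modal positives are two augmented views of the same image (or the same text), and that the terms are summed with equal weights. All function names are hypothetical.

```python
import numpy as np


def _log_softmax(x, axis):
    """Numerically stable log-softmax along one axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def info_nce(a, b, temperature=0.07):
    """Symmetric InfoNCE: row i of `a` is the positive for row i of `b`."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    logits = a @ b.T / temperature
    diag = np.arange(len(a))
    return float(-(_log_softmax(logits, 1)[diag, diag].mean()
                   + _log_softmax(logits, 0)[diag, diag].mean()) / 2)


def triple_contrastive_loss(img_v1, img_v2, txt_v1, txt_v2, temperature=0.07):
    """Cross-modal term plus two intra-modal terms (equal weights are an assumption).

    img_v1/img_v2: (N, D) embeddings of two augmented views of the same N images.
    txt_v1/txt_v2: (N, D) embeddings of two augmented views of their N captions.
    """
    cma = info_nce(img_v1, txt_v1, temperature)      # cross-modal: image <-> its text
    imc_img = info_nce(img_v1, img_v2, temperature)  # intra-modal: image view <-> image view
    imc_txt = info_nce(txt_v1, txt_v2, temperature)  # intra-modal: text view <-> text view
    return cma + imc_img + imc_txt
```

The intra-modal terms penalize the failure CMA alone misses: if two views of the same image drift apart, `imc_img` grows even when every image still sits next to its caption.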

To take advantage of localized and structural information from image and text input, TCL further maximizes the average mutual information between local regions of the image or text and its global summary. To the best of our knowledge, ours is the first work that takes into account local structure information for multimodal representation learning. Experimental evaluations show that our approach is competitive and achieves new state-of-the-art results on various common downstream vision-language tasks, such as image-text retrieval and visual question answering.
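The local-global objective can be illustrated the same way. The sketch below assumes InfoNCE as the mutual-information estimator, treats patch (or token) embeddings as the local regions and a single pooled vector, such as a [CLS] embedding, as the global summary, and uses the other samples' summaries in the batch as negatives. These choices, and the name `local_global_nce`, are assumptions for illustration, not details confirmed by the paper.

```python
import numpy as np


def local_global_nce(local_feats, global_feats, temperature=0.07):
    """Average InfoNCE between every local feature and its own global summary.

    local_feats:  (N, L, D), e.g. N images x L patch embeddings (or N texts x L tokens).
    global_feats: (N, D), e.g. one pooled [CLS]-style embedding per sample (assumed).
    For local feature l of sample i, the positive is global_feats[i]; the
    other N - 1 global summaries in the batch act as negatives.
    """
    loc = local_feats / np.linalg.norm(local_feats, axis=-1, keepdims=True)
    glo = global_feats / np.linalg.norm(global_feats, axis=-1, keepdims=True)
    # logits[i, l, j] = similarity of local feature l of sample i to global summary j
    logits = np.einsum("ild,jd->ilj", loc, glo) / temperature
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    idx = np.arange(local_feats.shape[0])
    # log p(global_i | local_{i,l}) for the matching sample; shape (N, L)
    matched = log_prob[idx, :, idx]
    return float(-matched.mean())   # average over samples and local features
```

Averaging over all L local features of each sample is what makes this an average mutual-information objective: every patch or token must carry enough information to pick out its own sample's summary from the batch.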