Automatic speech recognition systems, which convert spoken words into text, are an important component of conversational agents such as Alexa. These systems generally comprise an acoustic model, a pronunciation model, and a statistical language model. The role of the statistical language model is to assign a probability to the next word in a sentence, given the previous ones. For instance, the phrases “Pulitzer Prize” and “pullet surprise” may have very similar acoustic profiles, but statistically, one is far more likely to conclude a question that begins “Alexa, what playwright just won a … ?”
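To make the idea of "assigning a probability to the next word" concrete, here is a minimal sketch of a count-based bigram model. It is a deliberate simplification: it conditions on only the single preceding word, whereas production language models use longer histories and smoothing. The function names and the four-sentence corpus are made up for illustration and do not come from the paper.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigram(sentences: List[str]) -> Dict[str, Counter]:
    """Count how often each word follows each preceding word."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_prob(counts: Dict[str, Counter], prev: str, nxt: str) -> float:
    """Estimate P(nxt | prev) as a relative frequency (no smoothing)."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return followers[nxt] / total if total else 0.0


# Toy corpus, invented purely for illustration.
corpus = [
    "she won a pulitzer prize",
    "he won a pulitzer prize",
    "the pullet surprise was a joke",
    "a pulitzer winner",
]
bigram_counts = train_bigram(corpus)

p_pulitzer = next_word_prob(bigram_counts, "a", "pulitzer")
p_pullet = next_word_prob(bigram_counts, "a", "pullet")
```

In this toy corpus, "pulitzer" follows "a" in three of four cases and "pullet" never does, so the model prefers the first reading even if the two sound alike.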

Traditionally, a speech recognition system will use a single statistical language model to resolve ambiguities, regardless of context. But we could improve the accuracy of the system by leveraging contextual information. Let’s say the language model has to determine the probability of the next word in the utterance “Alexa, get me a … ”. The probabilities of the next word being “salad” or “taxi” would differ greatly depending on whether the customer’s recent interactions with Alexa were about foods’ nutritional content or about local traffic conditions.

In a paper we’re presenting at the Interspeech 2018 conference, my colleagues and I describe a machine-learning system that mixes different statistical language models based on contextual clues. The method can accommodate any type of contextual information, but we examine in particular data such as the text of a customer’s request, the history of the customer’s recent Alexa interactions, and metadata such as the time at which the request was issued. The paper is titled “Contextual Language Model Adaptation for Conversational Agents”.

In tests, we compared our system to one that used a single language model and found that ours reduced speech transcription errors by as much as 6%. It also reduced errors in the recognition of named entities such as people, locations, and businesses by up to 15%. This is particularly important for conversational agents, since the meaning of a sentence can be lost if its named entities are misrecognized.

We trained two different versions of the speech recognizer, one for goal-oriented systems that find specific Alexa skills to handle requests and one for more general conversational agents, like those that compete for the Alexa Prize.

For each of these scenarios, we built multiple language models from scratch. In the first scenario, we trained the language models using utterances that had been annotated according to the type of skill that processed them — skills that play music, report weather or sports scores, compute driving distances, and so on. Each skill class ended up with its own language model. For the general conversational agents — or “chatbots” — we used existing algorithms to automatically infer the topics of transcribed conversations, and we built a separate language model for each topic.

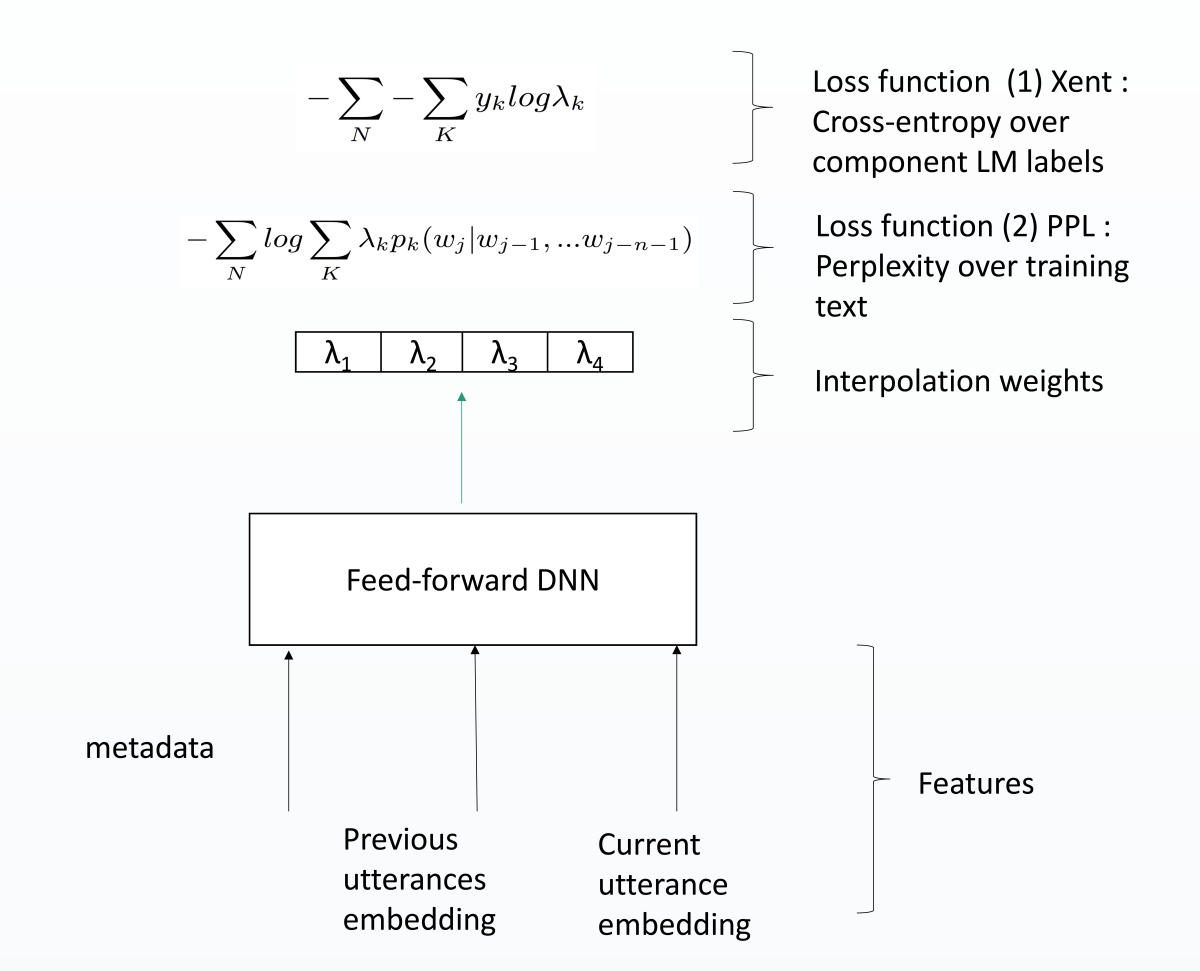

Then, for each scenario, we trained a deep neural network to combine language models dynamically during runtime, so as to minimize two different measures of transcription error.

More precisely, if LM1, LM2, … , LMn are our skill-specific or topic-specific language models, then for each interaction with the conversational agent, the neural network outputs a new set of weights, a1, a2, … , an. For each round of conversation, the speech recognizer uses a combined language model defined as (a1 × LM1) + (a2 × LM2) + … + (an × LMn).
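The weighted combination can be sketched in a few lines. In this illustration, each language model is reduced to a dictionary of next-word probabilities for one fixed history, and the weights are assumed to be non-negative and to sum to 1 so that the result is still a probability distribution; the post does not state that constraint, and the model contents and weights below are invented.

```python
from typing import Dict, List

# Hypothetical stand-in for a language model: next-word probabilities
# for one fixed history. Real models condition on the preceding words.
LanguageModel = Dict[str, float]


def interpolate(models: List[LanguageModel], weights: List[float]) -> LanguageModel:
    """Return a1*LM1 + a2*LM2 + ... + an*LMn over next-word probabilities."""
    if len(models) != len(weights):
        raise ValueError("need exactly one weight per language model")
    # Assumption: weights form a convex combination (non-negative, sum to 1).
    if any(a < 0 for a in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    vocabulary = set().union(*models)
    return {
        word: sum(a * lm.get(word, 0.0) for a, lm in zip(weights, models))
        for word in vocabulary
    }


# Invented probabilities for the next word after "Alexa, get me a ...".
food_lm = {"salad": 0.60, "pizza": 0.35, "taxi": 0.05}
travel_lm = {"salad": 0.05, "pizza": 0.15, "taxi": 0.80}

# If recent interactions were mostly about food, the food model gets more weight.
combined = interpolate([food_lm, travel_lm], [0.75, 0.25])
```

With these numbers, "salad" ends up more likely than "taxi" in the combined model; swapping the weights would reverse that ordering.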

So, for instance, given the input “play ‘Thriller,’” the network might give approximately equal weight to the music player and video player models, while giving very little weight to the weather report model. If, however, the input was “play the song ‘Thriller,’” the network might give more weight to the music player model than to the video player model.
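One common way for a network to produce such weights is a softmax over per-model scores, which guarantees that the weights are positive and sum to 1. Whether the paper's network uses exactly this output layer is an assumption here, and the raw scores below are hand-picked to mirror the "Thriller" example rather than produced by any trained model.

```python
import math
from typing import Dict


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Turn arbitrary real-valued scores into weights that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(s - highest) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


# Hypothetical scores a network might emit for "play 'Thriller'".
scores_play = {"music": 2.0, "video": 2.0, "weather": -2.0}
# Hypothetical scores for "play the song 'Thriller'".
scores_play_song = {"music": 3.5, "video": 1.0, "weather": -2.0}

weights_play = softmax(scores_play)
weights_play_song = softmax(scores_play_song)
```

In the first case the music and video models receive equal weight and the weather model almost none; in the second, the word "song" is reflected in a higher music score and therefore a larger music weight.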

In addition, for each of the two scenarios, we ran two different versions of the system: one that makes a single pass through the input data and one that makes two passes, using the speech recognizer’s initial output as a new input. In the case of the goal-oriented utterances, the one-pass system reduced the error rate by 1.35% relative to a system that used a single language model, and the two-pass system reduced it by 3.5%; in the case of the chatbot, the reductions were 2.7% and 6%, respectively. For named-entity recognition, the one-pass system reduced errors by 10%, the two-pass system by 15%.
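The difference between the one-pass and two-pass variants is mostly one of control flow, which the sketch below tries to capture. Everything in it is a hypothetical stand-in: `recognize`, `toy_predict_weights`, and `toy_decode` are illustrative names, the hypothesis scores are invented, and the assumption that the first pass uses context alone is ours, not a detail confirmed by the post.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical signatures, not taken from the paper:
#   predict_weights(context, first_pass_text) -> one weight per language model
#   decode(audio, weights) -> transcript
PredictWeights = Callable[[str, Optional[str]], List[float]]
Decode = Callable[[str, List[float]], str]


def recognize(audio: str, context: str, predict_weights: PredictWeights,
              decode: Decode, two_pass: bool = True) -> str:
    """Decode once, then optionally re-decode using the first transcript as input."""
    weights = predict_weights(context, None)
    transcript = decode(audio, weights)
    if two_pass:
        weights = predict_weights(context, transcript)
        transcript = decode(audio, weights)
    return transcript


# Toy decoder: two competing hypotheses with invented scores under
# [music model, video model].
HYPOTHESES: Dict[str, List[float]] = {
    "play the song thriller": [0.6, 0.1],
    "play this on thriller": [0.2, 0.6],
}


def toy_predict_weights(context: str, first_pass: Optional[str]) -> List[float]:
    # Contrived rule: seeing "thriller" in a first-pass transcript favors music.
    if first_pass is not None and "thriller" in first_pass:
        return [0.8, 0.2]
    return [0.5, 0.5]


def toy_decode(audio: str, weights: List[float]) -> str:
    def score(hypothesis: str) -> float:
        return sum(w * s for w, s in zip(weights, HYPOTHESES[hypothesis]))
    return max(HYPOTHESES, key=score)


one_pass_result = recognize("<audio>", "", toy_predict_weights, toy_decode, two_pass=False)
two_pass_result = recognize("<audio>", "", toy_predict_weights, toy_decode, two_pass=True)
```

In this toy setup the single pass settles on the wrong hypothesis, while the second pass, informed by the first transcript, shifts weight toward the music model and recovers the intended one. Real gains depend on how informative the first-pass output is.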

Paper: "Contextual Language Model Adaptation for Conversational Agents"

Acknowledgements: Behnam Hedayatnia, Linda Liu, Ankur Gandhe, Chandra Khatri, Angeliki Metallinou, Anu Venkatesh, Ariya Rastrow