In recent years, machine translation systems have become much more accurate and fluent. As their use expands, it has become increasingly important to ensure that they are as fair, unbiased, and accurate as possible.

For example, machine translation systems sometimes incorrectly translate the genders of people referred to in input segments, even when an individual’s gender is unambiguous based on the linguistic context. Such errors can have an outsize impact on the correctness and fairness of translations. We refer to this problem as one of gender translation accuracy.

To make it easier to evaluate gender translation accuracy in a wide variety of scenarios, my colleagues and I at Amazon Translate have released a new evaluation benchmark: MT-GenEval. We describe the benchmark in a paper we are presenting at the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP).

MT-GenEval is a large, realistic evaluation set that covers translation from English into eight diverse and widely spoken (but in some cases understudied) languages: Arabic, French, German, Hindi, Italian, Portuguese, Russian, and Spanish. In addition to 1,150 segments of evaluation data per language pair, we release 2,400 parallel sentences for training and development.

Unlike widely used bias test sets that are artificially constructed, MT-GenEval data is based on real-world data sourced from Wikipedia and includes professionally created reference translations in each of the languages. We also provide automatic metrics that evaluate both the accuracy and quality of gender translations.

Gender representations

To get a sense of where gender translation inaccuracies often arise, it is helpful to understand how different languages represent gender. In English, there are some words that unambiguously identify gender, such as she (female gender) or brother (male gender).

A quick guide to Amazon's 40+ papers at EMNLP 2022

Explore Amazon researchers’ accepted papers which address topics like information extraction, question answering, query rewriting, geolocation, and pun generation.

Many languages, including those covered in MT-GenEval, have a more extensive system of grammatical gender, where nouns, adjectives, verbs, and other parts of speech can be marked for gender. To give an example, the Spanish translation of “a tall librarian” is different if the librarian is a woman (una bibliotecaria alta) or a man (un bibliotecario alto).

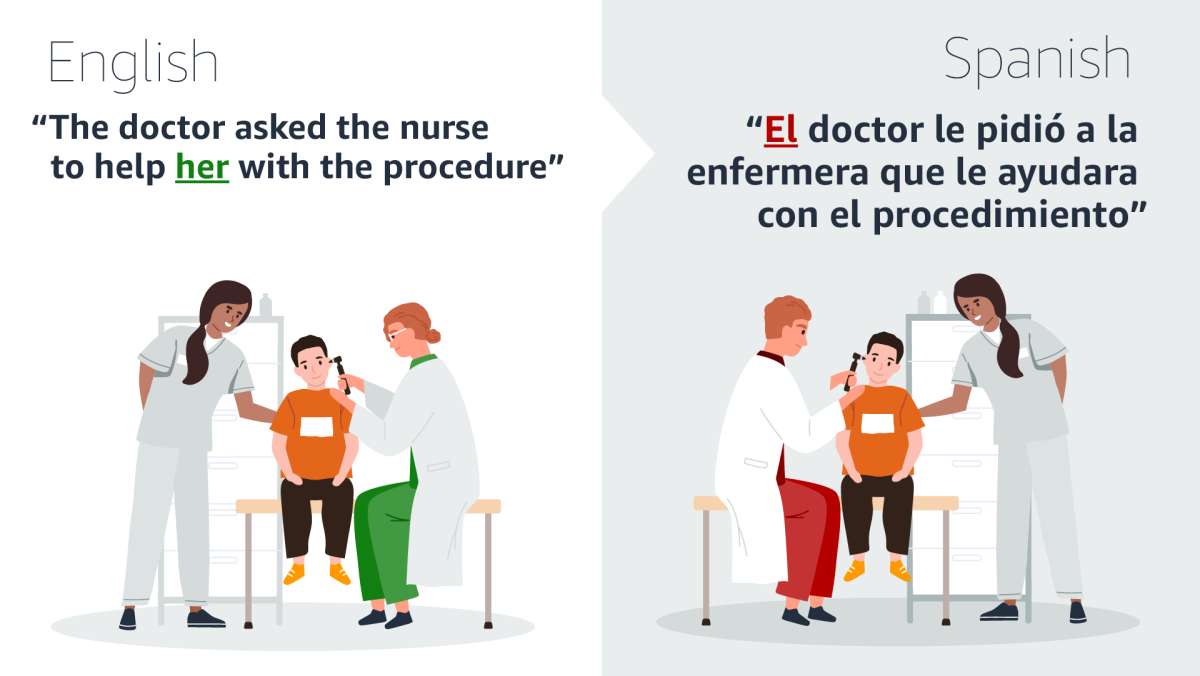

When a machine translation model translates from a language with no or limited gender (like English) into a language with extensive grammatical gender (like Spanish), it must not only translate but also correctly express the genders of words that lack gender in the input. For example, with the English sentence “He is a tall librarian,” the model must correctly select the male grammatical gender for “a” (un, not una), “tall” (alto, not alta) and “librarian” (bibliotecario, not bibliotecaria), all based on the single input word “He.”

In the real world, input texts are often more complex than this simple example, and the word that disambiguates an individual’s gender might be very far — potentially even in another sentence — from the words that express gender in the translation. In these cases, we have observed that machine translation models have a tendency to disregard the disambiguating context and even fall back on gender stereotypes (such as translating “pretty” as female and “handsome” as male, regardless of the context).

While we have seen several anecdotal cases of these types of gender translation accuracy issues, until now there has not been a way to systematically quantify such cases in realistic, complex input text. With MT-GenEval, we hope to bridge this gap.

Building the dataset

To create MT-GenEval, we first scoured English Wikipedia articles to find candidate text segments each of which contained at least one gendered word in a span of three sentences. Because we wanted to ensure that the segments were relevant for evaluating gender accuracy, we asked human annotators to exclude any sentences that either did not refer to individuals (e.g., “The movie She’s All That was released in 1999”) or did not unambiguously express the gender of that individual (e.g., “You are a tall librarian”).

Then, in order to balance the test set by gender, the annotators created counterfactuals for the segments, where each individual’s gender was changed either from female to male or from male to female. (In its initial release, MT-GenEval covers two genders: female and male.) For example, “He is a prince and will someday be king” would be changed to “She is a princess and will someday be queen.” This type of balancing ensures that differently gendered subsets do not have different meanings. Finally, professional translators translated each sentence into the eight target languages.

A balanced test set also allows us to evaluate gender translation accuracy, because for every segment, it provides a correct translation, with correct genders, and a contrastive translation, which differs from the correct translation only in gender-specific words. In the paper, we propose a straightforward accuracy metric: for a given translation with the desired gender, we consider all the gendered words in the contrastive reference. If the translation contains any of the gendered words in the contrastive reference, it is marked incorrect; otherwise, it’s marked correct. Our automatic metric agreed with annotators reasonably well, with F scores of over 80% across all eight target languages (English was the source language).

While this assesses translations on the lexical level, we also introduce a metric to measure differences in machine translation quality for masculine and feminine outputs. We define this gender quality gap as the difference in BLEU scores on the masculine and feminine subsets of the balanced dataset.

Given this extensive curation and annotation, MT-GenEval is a step forward for evaluation of gender accuracy in machine translation. We hope that by releasing MT-GenEval, we can inspire more researchers to work on improving gender translation accuracy on complex, real-world inputs in a variety of languages.