Suppose that you say to Alexa, “Alexa, play Mary Poppins.” Alexa must decide whether you mean the book, the video, or the soundtrack. How should she do it?

Each of Alexa’s core domains — such as the Books domain, the Video domain, or the Music domain — has its own natural-language-understanding (NLU) model, which estimates the likelihood that a given request is intended for it. But those models are trained on different data, so there’s no guarantee that their probability estimates are compatible. Should a 70% estimate from Music be given priority over a 68% estimate from Books, or is it possible that, when it comes to Mary Poppins, the Music model is slightly overconfident?

The standard way to address this problem is to train a machine learning system to take probability estimates from multiple domain models and weight them so that they’re more directly comparable. But in a paper we’re presenting at this year’s IEEE Spoken Language Technologies conference, my colleagues and I describe an alternative: a method that lets each domain develop its own weighting procedure, independent of the others.

The advantage of this approach is that each domain can update its own weighting system whenever required, and multiple domains can perform updates in parallel, which is more efficient. We are already using this method in production, to ensure that Alexa customers benefit from system updates as quickly as possible. We call it re-ranking, because it takes a list of domain-specific hypotheses ranked according to an NLU model’s confidence scores and re-ranks them according to a learned set of weights.

Those weights don’t just apply to the domain classification probability. Alexa’s NLU models also classify utterances by intent, or the action the customer wants performed, and slot, or the data item the intent is supposed to act upon. And like the domain classifications, intent and slot classifications are probabilistic.

Within the Music domain, for instance, the utterance “play Thriller” would probably call up the PlayMusic intent (as opposed to, say, the CreateList intent), but the slot classifier might assign similar probabilities to the classifications of the word “Thriller” as AlbumName and SongName.

During training, we feed one of our domain-specific re-rankers not just the domain classification probability for each utterance but the most probable intent and slot hypotheses, too. It could be, for instance, that for purposes of re-ranking, intent confidence is more important than domain confidence, or vice versa.

The system learns these relative values during training, and it produces separate weights for each classification category — domain, intent, and slot. It can also factor contextual information into its re-rankings, learning, for instance, that on a Fire TV, it should give more precedence to Video domain hypotheses than it should on a voice-only device.
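
To make the idea of per-category weights concrete, here is a minimal sketch of a single domain's re-ranker. It assumes a simple linear scorer with an additive per-device bias; the post does not specify the model's actual form, and the names `Hypothesis`, `RerankerWeights`, `score`, and `rerank`, as well as all the numbers, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    """One NLU hypothesis with its three confidence scores (illustrative)."""
    label: str
    domain_conf: float
    intent_conf: float
    slot_conf: float


@dataclass
class RerankerWeights:
    """Hypothetical learned weights for one domain's re-ranker."""
    domain: float
    intent: float
    slot: float
    # Assumed form of contextual information: an additive bias per device type.
    device_bias: dict = field(default_factory=dict)


def score(hyp, weights, device):
    """Weighted sum of the three confidences plus a device-dependent bias."""
    return (weights.domain * hyp.domain_conf
            + weights.intent * hyp.intent_conf
            + weights.slot * hyp.slot_conf
            + weights.device_bias.get(device, 0.0))


def rerank(hyps, weights, device):
    """Return the hypotheses sorted from highest to lowest re-ranker score."""
    return sorted(hyps, key=lambda h: score(h, weights, device), reverse=True)


# Music domain: the slot weight breaks the tie between two readings of "Thriller".
music = RerankerWeights(domain=0.2, intent=0.3, slot=0.5)
hyps = [
    Hypothesis("PlayMusic / AlbumName=Thriller", 0.70, 0.90, 0.48),
    Hypothesis("PlayMusic / SongName=Thriller", 0.70, 0.90, 0.52),
]
ranked = rerank(hyps, music, device="voice_only")
print([h.label for h in ranked])

# Video domain: the same hypothesis scores higher on a Fire TV.
video = RerankerWeights(0.2, 0.3, 0.5, device_bias={"fire_tv": 0.2})
movie = Hypothesis("PlayVideo / VideoName=Mary Poppins", 0.68, 0.85, 0.80)
print(score(movie, video, "fire_tv") > score(movie, video, "voice_only"))
```

Because each domain owns its own `RerankerWeights` in this sketch, one domain's weights can be retrained without touching any other domain's.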

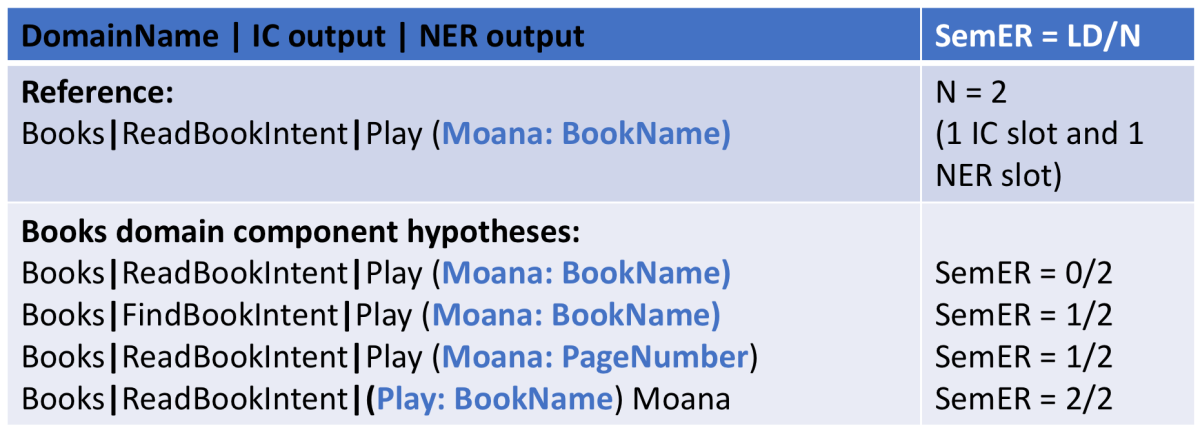

In experiments, we trained our re-ranker using three different training criteria, or “loss functions.” The first loss function relies on a metric we call semantic error, which counts the errors in a given classification hypothesis and divides that count by the total number of intents and slots in the correct classification. If an utterance in the training set has one intent and two slots, for instance, then a hypothesis that gets the intent and one slot right but misses the other slot would have a semantic-error score of 1/3.
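
The 1/3 example can be reproduced with a short sketch. It assumes that slots are represented as a mapping from the slot's text to its label, and that a mislabeled, missing, or spurious slot each counts as one error; the function name `semantic_error` and the sample utterance are illustrative, not taken from the paper.

```python
def semantic_error(hyp_intent, hyp_slots, ref_intent, ref_slots):
    """Errors in the hypothesis divided by (1 intent + number of reference slots)."""
    errors = int(hyp_intent != ref_intent)
    # Reference slots that the hypothesis mislabeled or missed entirely.
    errors += sum(1 for text, label in ref_slots.items()
                  if hyp_slots.get(text) != label)
    # Slots the hypothesis proposed that are not in the reference at all.
    errors += sum(1 for text in hyp_slots if text not in ref_slots)
    return errors / (1 + len(ref_slots))


# Reference annotation for a hypothetical utterance:
# "play Thriller by Michael Jackson"
ref_intent = "PlayMusic"
ref_slots = {"Thriller": "SongName", "Michael Jackson": "ArtistName"}

# The hypothesis gets the intent and one slot right but mislabels the other.
hyp_intent = "PlayMusic"
hyp_slots = {"Thriller": "AlbumName", "Michael Jackson": "ArtistName"}

err = semantic_error(hyp_intent, hyp_slots, ref_intent, ref_slots)
print(err)  # one error out of three items
```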

This first loss function, which we call expected semantic error, imposes a penalty on rankings in which hypotheses with high semantic error rank ahead of hypotheses with low semantic error.
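
One plausible way to turn that penalty into a trainable quantity is sketched below: convert the re-ranker's scores into a probability distribution with a softmax and take the expected value of the semantic error under it. This formulation is an assumption made for illustration; the post does not give the exact formula, and the function names and numbers are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of re-ranker scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def expected_semantic_error(scores, semantic_errors):
    """Semantic error averaged under the softmax of the scores (assumed form)."""
    probs = softmax(scores)
    return sum(p * e for p, e in zip(probs, semantic_errors))


# Two hypotheses: the first is fully correct, the second has semantic error 1/3.
errors = [0.0, 1 / 3]

good_ranking = expected_semantic_error([2.0, 0.0], errors)  # correct one on top
bad_ranking = expected_semantic_error([0.0, 2.0], errors)   # erroneous one on top
print(good_ranking < bad_ranking)
```

The loss shrinks as probability mass moves toward low-error hypotheses, which is the behavior the paragraph above describes.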

The second loss function is a so-called cross-entropy function. It eschews the comparatively fine-grained semantic-error measure in favor of a simple one-bit tag that indicates whether a given hypothesis is true or not. But it imposes a particularly stiff penalty on incorrect hypotheses that get high confidence scores, and it confers a large bonus on true hypotheses that get high confidence scores.

This is the loss function we introduce to calibrate scores across domains. It inclines toward weights that correct for overconfidence, but by rewarding the ability to distinguish true hypotheses so lavishly, it also prevents overcompensation.
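
The asymmetry described above can be seen in a minimal sketch of binary cross-entropy on a single hypothesis. It assumes the re-ranker's output is a confidence between 0 and 1 and that the label is the one-bit correct/incorrect tag; whether the paper uses exactly this binary form is an assumption.

```python
import math


def binary_cross_entropy(confidence, is_correct, eps=1e-12):
    """Cross-entropy of one confidence score against a one-bit correctness tag."""
    p = min(max(confidence, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -math.log(p) if is_correct else -math.log(1.0 - p)


confident_wrong = binary_cross_entropy(0.95, is_correct=False)  # about 3.0
hesitant_wrong = binary_cross_entropy(0.55, is_correct=False)   # about 0.8
confident_right = binary_cross_entropy(0.95, is_correct=True)   # about 0.05

print(confident_wrong, hesitant_wrong, confident_right)
```

An incorrect hypothesis scored at 0.95 costs several times as much as one scored at 0.55, while a correct hypothesis scored at 0.95 costs almost nothing. That combination is what pushes confidence scores toward being calibrated rather than simply lowered.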

Our third loss function simply combines the first two, the expected-semantic-error function and the cross-entropy function. We also compared systems trained with each of the three loss functions to a baseline that uses no domain-specific weighting at all.

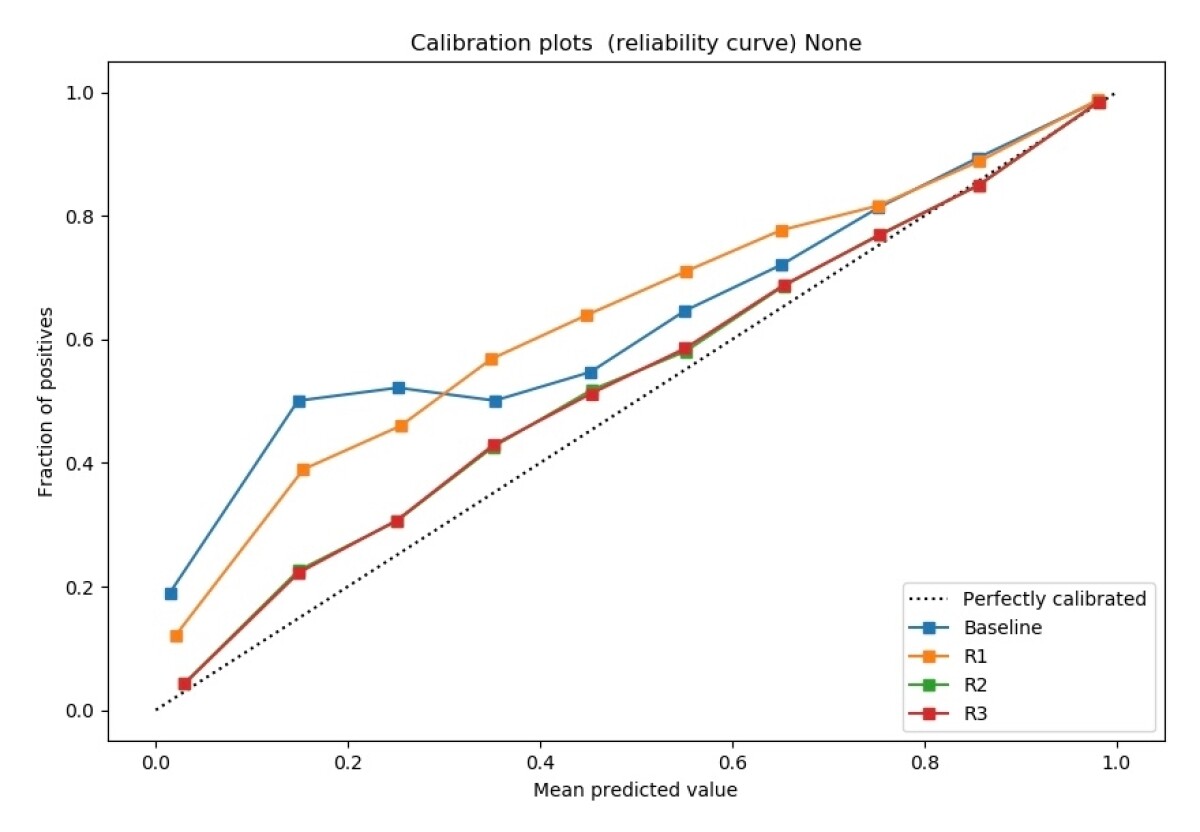

The graph above summarizes our results. The dotted line represents perfect calibration of confidence scores across all domains. The better our re-rankers approximate that line, the better calibration they afford.
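
For readers who want to check calibration on their own data, the sketch below shows one common way to do it: bin hypotheses by confidence and compare each bin's average confidence with its empirical accuracy. Perfect calibration means the two are equal in every bin. The binning scheme and the expected-calibration-error summary are standard techniques used here for illustration, not necessarily how the graph was produced, and the function names are hypothetical.

```python
def reliability_curve(confidences, correct, n_bins=10):
    """Return (mean confidence, accuracy, count) for each non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, is_correct in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # a score of 1.0 goes in the last bin
        bins[idx].append((conf, is_correct))
    curve = []
    for members in bins:
        if members:
            mean_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(1 for _, ok in members if ok) / len(members)
            curve.append((mean_conf, accuracy, len(members)))
    return curve


def expected_calibration_error(confidences, correct, n_bins=10):
    """Count-weighted average gap between confidence and accuracy."""
    total = len(confidences)
    return sum(count / total * abs(acc - conf)
               for conf, acc, count in reliability_curve(confidences, correct, n_bins))


# Ten hypotheses, all scored 0.9 (made-up data).
scores = [0.9] * 10
well_calibrated = [True] * 9 + [False]     # 90% are actually correct
overconfident = [True] * 5 + [False] * 5   # only 50% are actually correct

ece_good = expected_calibration_error(scores, well_calibrated)
ece_bad = expected_calibration_error(scores, overconfident)
print(ece_good, ece_bad)
```

Plotting the (mean confidence, accuracy) pairs from `reliability_curve` against the diagonal gives a picture of the same kind as a calibration graph with a perfect-calibration reference line.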

Our first observation is that the unweighted baseline system is already fairly well calibrated, a tribute to the quality of the domain-specific NLU models. Our second is that, while the combination of expected semantic error and cross-entropy (R3) does indeed yield the best calibration, it’s only slightly better than cross-entropy alone (R2), which is significantly better than expected semantic error alone (R1). In production we use the combined loss function to ensure the best possible experience for our customers.

Acknowledgments: Rahul Gupta, Shankar Ananthakrishnan, Spyros Matsoukas