In recent years, generative adversarial networks (GANs) have demonstrated a remarkable ability to synthesize realistic visual images from scratch.

But controlling specific features of a GAN’s output — lighting conditions or viewing angle, for instance, or whether someone is smiling or frowning — has been difficult. Most approaches depend on trial-and-error exploration of the GAN’s parameter space. A recent approach to controlling synthesized faces involves generating 3-D archetypes with graphics software, a cumbersome process that offers limited control and is restricted to a single image category.

At this year’s International Conference on Computer Vision (ICCV), together with Amazon distinguished scientist Gérard Medioni, we presented a new approach to controlling GANs’ output that allows numerical specification of image parameters — viewing angle or the age of a human figure, for instance — and is applicable to a variety of image categories.

Our approach outperformed its predecessors on several measures of control precision. We also evaluated it in user studies, in which users found images generated with our approach more realistic than images generated by its two leading predecessors, by a two-to-one margin.

Latent spaces

The training setup for a GAN involves two machine learning models: the generator and the discriminator. The generator learns to produce images that will fool the discriminator, while the discriminator learns to distinguish synthetic images from real ones.
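The two competing objectives can be written down compactly. The sketch below assumes the discriminator outputs raw logits and uses the widely used binary cross-entropy discriminator loss with the "non-saturating" generator loss. That is a standard textbook formulation, not necessarily the exact one in our work, and the function names are illustrative.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def discriminator_loss(real_logits: list[float], fake_logits: list[float]) -> float:
    """Binary cross-entropy with real images labeled 1 and synthetic images 0.

    Uses -log(sigmoid(l)) == softplus(-l) and -log(1 - sigmoid(l)) == softplus(l).
    """
    real_term = sum(softplus(-l) for l in real_logits) / len(real_logits)
    fake_term = sum(softplus(l) for l in fake_logits) / len(fake_logits)
    return real_term + fake_term


def generator_loss(fake_logits: list[float]) -> float:
    """Non-saturating generator loss: low when the discriminator calls fakes real."""
    return sum(softplus(-l) for l in fake_logits) / len(fake_logits)
```

A discriminator that confidently separates real from fake (say, logit +5 on a real image and -5 on a fake) has a loss near zero, while the generator's loss on that same fake is above 5. That gap is what drives the generator to improve.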

During training, the model learns a probability distribution over a learned set of image parameters (in the StyleGAN family of models, there are 512 parameters). That distribution describes the range of parameter values that occur in real images. Synthesizing a new image is then a matter of picking a random point from that distribution and passing it to the generator.
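In StyleGAN-style models, the 512-dimensional input is conventionally drawn from a fixed standard normal distribution, and a learned mapping network then shapes it into the distribution the generator actually uses. The minimal sketch below assumes that convention for the sampling step. `toy_generator` is a hypothetical stand-in that only squashes latent values into "pixels"; a real generator is a deep convolutional network.

```python
import math
import random

LATENT_DIM = 512  # latent size used by the StyleGAN family


def sample_latent(rng: random.Random, dim: int = LATENT_DIM) -> list[float]:
    """Draw one latent vector from a standard normal prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def toy_generator(z: list[float]) -> list[float]:
    """Hypothetical generator: maps the first 16 latent values to 'pixels' in (0, 1)."""
    return [1.0 / (1.0 + math.exp(-v)) for v in z[:16]]


# Synthesizing a new image: sample a point, then pass it to the generator.
image = toy_generator(sample_latent(random.Random(0)))
```

Seeding the random generator makes the sampled point, and therefore the synthesized image, reproducible.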

The image parameters define a latent space (with, in StyleGAN, 512 dimensions). Variations in image properties — high to low camera angle, young to old faces, left to right lighting, and so on — might lie along particular axes of the space. But because the generator is a black-box neural network, the structure of the space is unknown.
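If a property really did lie along one axis, editing it would amount to moving a latent point along that axis while holding every other coordinate fixed. The sketch below shows only that operation. The axis index and step sizes in the usage example are arbitrary, hypothetical values, and in a real model the relevant direction need not be axis-aligned at all.

```python
def traverse(z: list[float], axis: int, steps: list[float]) -> list[list[float]]:
    """Return copies of z shifted by each step along a single coordinate axis."""
    if not 0 <= axis < len(z):
        raise IndexError("axis out of range")
    path = []
    for step in steps:
        moved = list(z)  # copy so the original latent point is left untouched
        moved[axis] += step
        path.append(moved)
    return path


# Hypothetical usage: three points along axis 2 of a 4-dimensional latent.
path = traverse([0.0, 0.0, 0.0, 0.0], axis=2, steps=[-1.0, 0.0, 1.0])
```

Feeding each point on `path` to the generator would produce a sequence of images. Whether that sequence varies just one property is exactly what remains unknown for an unstructured latent space.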

Previous work on controllable GANs involved exploring the space in an attempt to learn its structure. But that structure can be irregular, so that learning about one property tells you little about the others. And properties can be entangled, so that changing one also changes others.
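Entanglement can be made concrete by nudging one latent coordinate and measuring how much each property changes. The sketch below does this with finite differences. `toy_readout` is a hypothetical, deliberately entangled "property estimator" in which coordinate 0 drives both age and smile; the property names and coefficients are made up for illustration.

```python
from typing import Callable

Readout = Callable[[list[float]], dict[str, float]]


def sensitivity(readout: Readout, z: list[float], axis: int, eps: float = 1e-3) -> dict[str, float]:
    """Finite-difference change in each measured property per unit move along one axis."""
    base = readout(z)
    moved = list(z)
    moved[axis] += eps
    shifted = readout(moved)
    return {name: (shifted[name] - base[name]) / eps for name in base}


def entangled(sens: dict[str, float], tol: float = 1e-6) -> bool:
    """An axis is entangled if moving along it changes more than one property."""
    return sum(abs(v) > tol for v in sens.values()) > 1


def toy_readout(z: list[float]) -> dict[str, float]:
    # Hypothetical: z[0] affects both properties, z[2] affects only smile.
    return {"age": 2.0 * z[0] + 0.5 * z[1], "smile": -1.0 * z[0] + 3.0 * z[2]}
```

In this toy, moving along axis 0 changes both age and smile (entangled), while moving along axis 2 changes only smile. Real generators are nonlinear, so such sensitivities also vary from point to point, which is one reason the structure is hard to learn by exploration.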

Recent work adopted a more principled approach, in which the input to the generator specifies properties of an image of a human face, and the generator is evaluated on how well its output matches a 3-D graphics model with the same properties.

This approach has some limitations, however. One is that it works only with faces. Another is that it can yield output images that look synthetic, since the generator learns to match properties of synthetic training targets. And finally, it’s hard to capture more holistic properties, like a person’s age, with a graphics model.

Controllable GANs

In our paper, we present an approach to controlling GANs that requires only numerical inputs, modifies a wide range of image properties, and applies to a large variety of image categories.

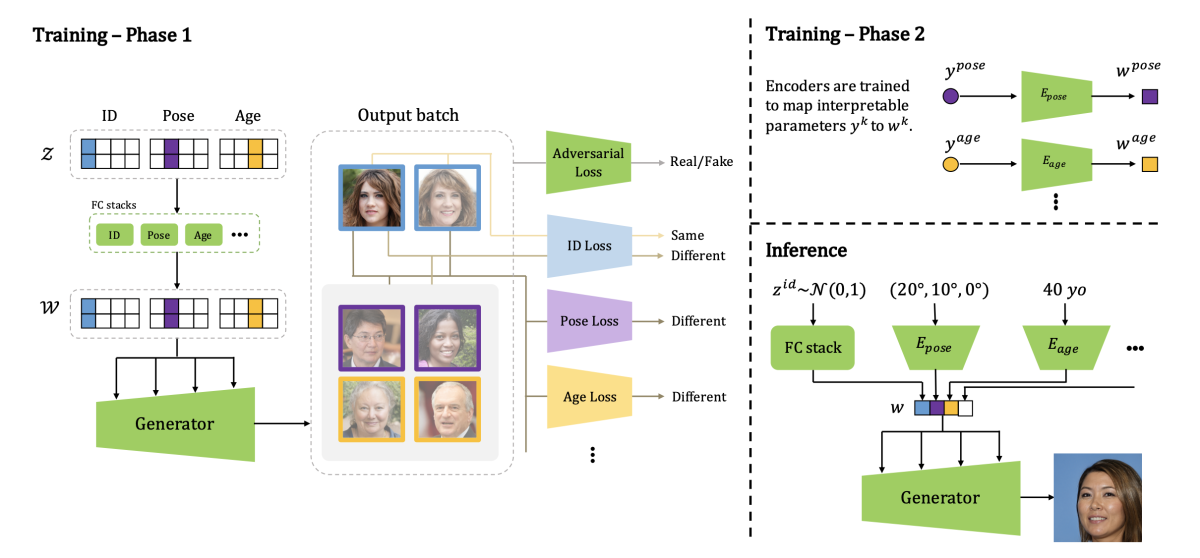

First, we use contrastive learning to structure the latent space so that the properties we’re interested in lie along different dimensions — that is, they’re not entangled. Then we learn a set of controllers that can modify those properties individually.

To start, we select a set of image properties we wish to control and construct a representational space such that each dimension of the space corresponds to one of the properties (Z in the image above). Then we select pairs of points in that space that have the same value in one dimension but different values in the other dimensions.
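The pair construction can be sketched in a few lines. Following the description above, each property gets a single scalar coordinate; a real system might allot several coordinates per property. The property list and the standard-normal sampling are assumptions made for illustration.

```python
import random

# Hypothetical choice of controlled properties, one coordinate each.
PROPERTIES = ["age", "pose", "expression", "illumination"]


def make_pair(rng: random.Random, shared: str,
              dims: list[str] = PROPERTIES) -> tuple[dict[str, float], dict[str, float]]:
    """Sample two points that agree on the `shared` coordinate and
    differ (independently sampled) on every other coordinate."""
    a = {p: rng.gauss(0.0, 1.0) for p in dims}
    b = {p: rng.gauss(0.0, 1.0) for p in dims}
    b[shared] = a[shared]  # tie the shared property's value across the pair
    return a, b


# Example: a pair whose two images should share a pose but nothing else.
a, b = make_pair(random.Random(1), shared="pose")
```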

During training, we pass these point pairs through a set of fully connected neural-network layers that learn to map points in our constructed space onto points in a learned latent space (W in the figure). The points in the learned space will act as controllers for our generator.
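A stack of fully connected layers like this is a small multilayer perceptron. StyleGAN's own mapping network is an eight-layer MLP with leaky ReLU activations. The sketch below keeps only two layers and uses random (untrained) weights; the layer sizes are arbitrary, hypothetical choices.

```python
import random


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x


class MappingNetwork:
    """Fully connected layers mapping a point in Z to a point in W.

    Weights are random here; during training they would be learned.
    """

    def __init__(self, sizes: list[int], seed: int = 0):
        rng = random.Random(seed)
        self.layers = []
        for n_in, n_out in zip(sizes[:-1], sizes[1:]):
            scale = (2.0 / n_in) ** 0.5  # He-style initialization
            weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
            biases = [0.0] * n_out
            self.layers.append((weights, biases))

    def __call__(self, z: list[float]) -> list[float]:
        h = z
        for weights, biases in self.layers:
            # Affine transform followed by a leaky ReLU after every layer.
            h = [leaky_relu(sum(w * x for w, x in zip(row, h)) + b)
                 for row, b in zip(weights, biases)]
        return h


mapping = MappingNetwork([4, 8, 6])
w = mapping([0.5, -1.0, 0.2, 0.0])  # a 6-dimensional point in W
```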

Then, in addition to the standard adversarial loss, which penalizes the generator if it fails to fool the discriminator, we compute a set of additional losses, one for each property. These are based on off-the-shelf models that compute the image properties: age, expression, lighting direction, and so on. The losses pull images that share properties closer together in the latent space and push apart images that don’t.
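One standard way to express "pull together, push apart" is the classic margin-based contrastive loss. The sketch below uses that form as an assumption, applied to the property embeddings an off-the-shelf estimator would produce, and combines it with the adversarial loss as a weighted sum. The margin and per-property weights are hypothetical hyperparameters.

```python
import math


def contrastive_loss(emb_a: list[float], emb_b: list[float], same: bool,
                     margin: float = 1.0) -> float:
    """Margin-based contrastive loss on a pair of property embeddings.

    Pairs that share the property are pulled together (squared distance);
    pairs that don't are pushed until they are at least `margin` apart.
    """
    d = math.dist(emb_a, emb_b)
    if same:
        return d * d
    return max(0.0, margin - d) ** 2


def total_loss(adversarial: float, property_losses: dict[str, float],
               weights: dict[str, float]) -> float:
    """Adversarial loss plus one weighted contrastive term per property."""
    return adversarial + sum(weights[name] * loss for name, loss in property_losses.items())
```

Non-matching pairs that are already farther apart than the margin contribute nothing, so the loss spends its effort only on pairs that are still confusable.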

Once we’ve trained the generator, we randomly select points in the latent space, generate the corresponding images, and measure their properties. Then we train a new set of controllers that take the measured properties as input and output the corresponding points in the latent space. When those controllers are trained, we have a way to map specific property measurements to points in the latent space.
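The controller-fitting step is, at heart, a regression from measured properties back to latent coordinates. In the sketch below, an ordinary least-squares line stands in for the trained controller networks, each property is assumed to own one latent coordinate, and `measure_age` is a hypothetical linear stand-in for running an age estimator on the generated image.

```python
import random


def fit_linear_controller(measured: list[float], latents: list[float]):
    """Ordinary least squares fit of: latent = slope * measured + intercept."""
    n = len(measured)
    mean_p = sum(measured) / n
    mean_w = sum(latents) / n
    cov = sum((p - mean_p) * (w - mean_w) for p, w in zip(measured, latents))
    var = sum((p - mean_p) ** 2 for p in measured)
    slope = cov / var
    intercept = mean_w - slope * mean_p
    return lambda target: slope * target + intercept


def measure_age(w: float) -> float:
    # Hypothetical: an age estimator applied to the image generated from w.
    return 3.0 * w + 40.0


rng = random.Random(0)
latents = [rng.gauss(0.0, 1.0) for _ in range(200)]  # random latent samples
measured = [measure_age(w) for w in latents]         # measure the generated images
controller = fit_linear_controller(measured, latents)

w_for_55 = controller(55.0)  # latent coordinate expected to yield age 55
```

Because the toy measurement is exactly linear, the fitted controller inverts it exactly; a real property estimator is noisy and nonlinear, which is why learned networks are used instead.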

Evaluation

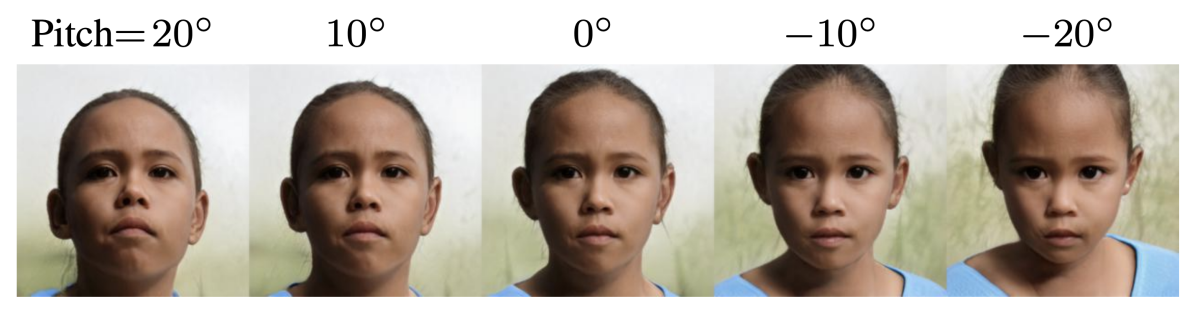

To evaluate our method, we compared it to two of the prior methods that used 3-D graphics models to train face generators. We found that faces generated using our method better matched the input parameters than faces generated with the earlier methods.
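One simple way to quantify how well generated faces match their input parameters is to re-measure each property on the generated image and compare it with the requested value. The sketch below uses mean absolute error as an illustrative metric; it is an assumption, not necessarily the exact measure behind the comparison described above.

```python
def control_error(requested: list[float], measured: list[float]) -> float:
    """Mean absolute error between requested property values and the values an
    attribute estimator measures on the generated images (lower is better)."""
    if len(requested) != len(measured) or not requested:
        raise ValueError("need equal-length, non-empty lists")
    return sum(abs(r - m) for r, m in zip(requested, measured)) / len(requested)
```

Computed per property (degrees of head rotation, years of age, and so on), a lower error means tighter control.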

We also asked human subjects to rate the realism of images produced by our method and the two baselines. In 67% of cases, subjects found our images more natural than those of either baseline; the better of the two baselines was preferred in 22% of cases.

Finally, we asked our human subjects whether they agreed or disagreed that our generated human faces exhibited the properties we’d controlled for. For five of those properties, agreement ranged from 87% to 98%. On the sixth property, elevated camera angle, agreement was only about 66%, possibly because at small elevation angles the effect was too subtle to discern.

In these evaluations, we necessarily restricted ourselves to generating human faces, since that’s the only domain for which strong baselines were available. But we also experimented with images of dogs’ faces and synthetic paintings, neither of which prior methods could handle. The results can be judged in the images below: