Content owners put a lot of effort into eliminating bad content that may adversely affect their customers. Bad content can take many forms, such as fake news, paid reviews, spam, and offensive language. We call such data items (documents) forbidden docs, or f-docs for short.

Any data-cleaning process, however, is susceptible to errors. No matter how much effort goes into the cleaning process, some bad content might remain. This week at the annual meeting of the ACM Special Interest Group on Information Retrieval (SIGIR), the Alexa Shopping research team presented a paper on information retrieval (IR) in the presence of f-docs. In particular, we’re trying to balance the twin demands of retrieving content relevant to customer requests and filtering out f-docs.

For example, consider a question posed on a community question-answering (CQA) site, where our goal is to rank answers according to their quality and relevance while filtering out bad ones. The next table presents some answers to the question “Is the Brand X sports watch waterproof?” While some of the answers are helpful, or at least fair, there are a few that should not be exposed to our users as they significantly hurt the search experience.

Filtering algorithms, however, are prone to two types of errors: (1) false positives (i.e., filtering non-f-docs) and (2) false negatives (i.e., including f-docs in the results).
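As a minimal sketch of these two error types, the following Python snippet counts them for a single result list. The function name and the example flags are hypothetical and are not taken from the paper.

```python
def filter_errors(is_fdoc, is_filtered):
    """Count filtering errors for one result list.

    is_fdoc[i]     -- True if document i is truly an f-doc
    is_filtered[i] -- True if the filter removed document i
    """
    pairs = list(zip(is_fdoc, is_filtered))
    # False positive: a non-f-doc that was filtered out
    false_positives = sum(1 for fdoc, removed in pairs if removed and not fdoc)
    # False negative: an f-doc that was left in the results
    false_negatives = sum(1 for fdoc, removed in pairs if fdoc and not removed)
    return false_positives, false_negatives


# Hypothetical ground truth and filter decisions for five answers
is_fdoc = [False, True, False, True, False]
is_filtered = [False, True, True, False, False]

fp, fn = filter_errors(is_fdoc, is_filtered)
print(fp, fn)
```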

Typically, ranking quality and filtering accuracy are measured independently. However, the number of f-docs left in the ranked list after filtering and their ranking positions heavily affect both the ranking score and the filtering score. Therefore, it is desirable to evaluate the system’s ranking quality as filtering decisions are being made.

The right metric

We look for an evaluation metric that reinforces a ranker according to three criteria: it (1) prunes as many f-docs from the retrieved list as possible; (2) does not prune non-f-docs from the list; and (3) ranks remaining docs according to their relevance to the query while pushing f-docs down the list.

In our paper, my colleagues Nachshon Cohen, Amir Ingber, Elad Kravi, and I analyze the types of metrics that can be used to measure the ranking and filtering quality of the search results. The natural choice is normalized discounted cumulative gain (nDCG), a metric that discounts the relevance of results that appear further down the list; that is, it evaluates a ranking algorithm according to both relevance and rank ordering.

With nDCG, relevant labels are associated with positive scores, non-relevant labels with a zero score, and the “forbidden labels” with negative scores. The underlying DCG score sums the position-discounted scores of the individual list items, so the score for a ranked list containing f-docs will reflect the number of f-docs in the list, their relative positions in the ranking, and their degree of forbiddenness.
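To make the effect of negative scores concrete, here is a small sketch of DCG with signed labels. It assumes the common formulation with linear gain and a log2 position discount; the label values (2, 1, 0, -1) are hypothetical, and the paper may use a different gain function.

```python
import math


def dcg(scores):
    """DCG of a ranked list of label scores (rank 1 first).

    Assumes linear gain and a log2(rank + 1) discount.
    """
    return sum(s / math.log2(rank + 1) for rank, s in enumerate(scores, start=1))


# Hypothetical label scores: 2 = helpful, 1 = fair, 0 = non-relevant, -1 = forbidden
fdoc_at_top = [-1, 2, 1, 0]     # the f-doc is ranked first
fdoc_at_bottom = [2, 1, 0, -1]  # the f-doc is pushed to the end
no_fdoc = [2, 1, 0]             # the f-doc has been filtered out

for name, ranking in [("top", fdoc_at_top), ("bottom", fdoc_at_bottom), ("filtered", no_fdoc)]:
    print(name, round(dcg(ranking), 3))
```

Under these assumptions, the list with the f-doc at the top scores lowest, pushing the f-doc down raises the score, and removing it raises the score further.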

NDCG differs from the ordinary DCG (discounted cumulative gain) score in that the results are normalized by the DCG score of the ideal ranked list, that is, the list ranked according to the ground truth labels. It can be interpreted as a distance between the given ranking and the ideal ranking.

When all label scores are non-negative (i.e., no f-docs are among the top k documents in the results), nDCG is bounded in the range [0, 1], where 0 means that all search results are non-relevant, while 1 means that the ranking is ideal.

However, in the presence of negatively scored labels, nDCG is unbounded and therefore unreliable. For instance, unboundedness may lead to extreme over- or undervaluation on some queries, with disproportionate effect on the average metric score.
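The following sketch illustrates how plain nDCG can leave the [0, 1] range once negative labels appear. It assumes linear gain and a log2 discount, and the label lists are hypothetical toy examples.

```python
import math


def dcg(scores):
    """DCG with linear gain and a log2(rank + 1) discount (assumed formulation)."""
    return sum(s / math.log2(rank + 1) for rank, s in enumerate(scores, start=1))


def ndcg(ranked):
    """DCG of the ranking divided by the DCG of the ideal (descending) ordering."""
    ideal = dcg(sorted(ranked, reverse=True))
    return dcg(ranked) / ideal  # undefined when the ideal DCG is exactly 0


# Non-negative labels only: the score stays within [0, 1]
print(ndcg([0, 1, 2]))

# One relevant doc ranked below three f-docs: the score drops below 0
print(ndcg([-1, -1, -1, 2]))

# Here even the ideal ordering has a negative DCG, so the worst ordering scores above 1
print(ndcg([-1, -1, 1]))
```

In the last case, the ideal DCG is a small negative number, so dividing by it both flips the sign and inflates the magnitude. This is the kind of extreme value that can dominate an average over queries.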

The nDCGmin metric, a modification of nDCG suggested by Gienapp et al. at CIKM’20, solves this unboundedness problem for the case of negatively scored labels. It measures the DCG scores of both the worst possible ranked list (the reverse of the ideal ranked list) and the ideal list and then performs min-max normalization with these two extreme scores.
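A minimal sketch of this min-max normalization follows, assuming linear gain and a log2 discount. The function name `ndcg_min` and the convention of returning 1.0 when all labels are identical are assumptions made for illustration, not details confirmed by the source.

```python
import math


def dcg(scores):
    """DCG with linear gain and a log2(rank + 1) discount (assumed formulation)."""
    return sum(s / math.log2(rank + 1) for rank, s in enumerate(scores, start=1))


def ndcg_min(ranked):
    """Min-max normalize DCG between the worst and the ideal full orderings."""
    best = dcg(sorted(ranked, reverse=True))  # ideal ordering
    worst = dcg(sorted(ranked))               # reverse of the ideal ordering
    if best == worst:                         # all labels identical (assumed convention)
        return 1.0
    return (dcg(ranked) - worst) / (best - worst)


# One relevant doc and three f-docs (hypothetical labels)
print(ndcg_min([2, -1, -1, -1]))  # ideal ordering
print(ndcg_min([-1, -1, -1, 2]))  # worst ordering
print(ndcg_min([-1, 2, -1, -1]))  # somewhere in between
```

As long as the ranker returns a full permutation of the labeled list, the ideal and worst orderings bracket every possible DCG value, so the normalized score stays in [0, 1].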

However, we show in our paper that when ranking and filtering are carried out together — i.e., when the ranker is allowed to retrieve (and to rank) a sublist of the search results — nDCGmin becomes unbounded. As an alternative, we propose nDCGf, a modification of nDCGmin that solves this second unboundedness problem by modifying the normalization scheme in order to handle sublist retrieval.

In particular, nDCGf measures the DCG score of the ideal and the worst sublists over all possible sublists of the results list and then uses the extreme scores of these sublists for min-max normalization.
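The sketch below contrasts the two normalization schemes on a toy example. It is an illustration under stated assumptions rather than the paper's implementation: linear gain, a log2 discount, the empty list counted as a valid sublist, and a brute-force search over ordered sublists that is only practical for very short lists. The function names are hypothetical.

```python
import math
from itertools import permutations


def dcg(scores):
    """DCG with linear gain and a log2(rank + 1) discount (assumed formulation)."""
    return sum(s / math.log2(rank + 1) for rank, s in enumerate(scores, start=1))


def sublist_extremes(labels):
    """Best and worst DCG over every ordered sublist, including the empty one."""
    values = [dcg(p) for k in range(len(labels) + 1) for p in permutations(labels, k)]
    return max(values), min(values)


def ndcg_f(retrieved, labels):
    """Min-max normalize with the extremes taken over all sublists."""
    best, worst = sublist_extremes(labels)
    if best == worst:  # only happens when every label is 0 (assumed convention)
        return 1.0
    return (dcg(retrieved) - worst) / (best - worst)


def ndcg_min(retrieved, labels):
    """Min-max normalize with the extremes taken over full orderings only."""
    best = dcg(sorted(labels, reverse=True))
    worst = dcg(sorted(labels))
    return (dcg(retrieved) - worst) / (best - worst)


labels = [2, 1, -1, -1]   # hypothetical labels for all candidate docs
keep_good = [2, 1]        # the ranker filters out both f-docs
keep_bad = [-1, -1]       # the ranker filters out both relevant docs

print(ndcg_min(keep_good, labels), ndcg_min(keep_bad, labels))
print(ndcg_f(keep_good, labels), ndcg_f(keep_bad, labels))
```

With full-list normalization, the perfectly filtered list scores above 1 and the list containing only f-docs scores below 0. With sublist normalization, the same two lists map to the ends of the [0, 1] range.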

We show both theoretically and empirically that while nDCGmin is not suitable for the evaluation task of simultaneous ranking and filtering, nDCGf is a reliable metric. Reliability is a standard measure of a metric’s ability to capture the actual difference in performance among rankers, by measuring deviation stability over a test set of queries.

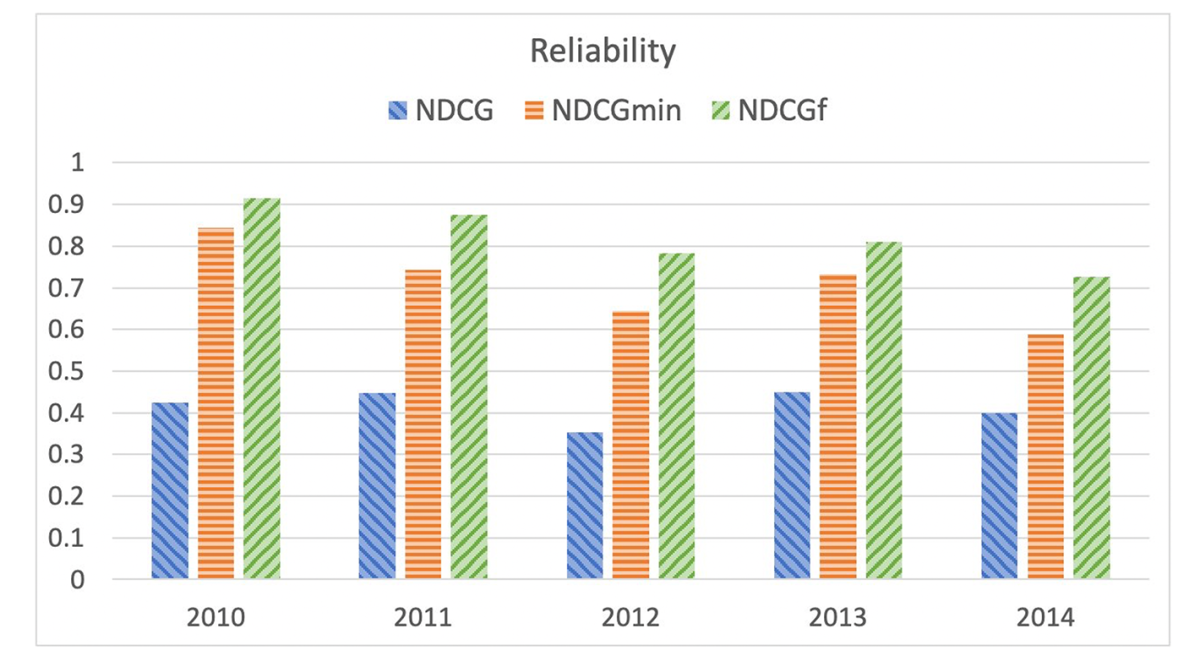

The next figure shows the reliability of nDCG, nDCGmin, and nDCGf over datasets released for the web-track information retrieval challenge at the Text Retrieval Conference (TREC) for the years 2010-2014. For all years, the reliability of nDCG and nDCGmin is significantly lower than that of nDCGf, due to their improper normalization when negative labels and partial retrieval are allowed.

Model building

After establishing the relevant metric, our paper then shifts focus to jointly learning to rank and filter (LTRF). We assume an LTRF model that optimizes the ranking of the search results while also tuning a filtering threshold such that any document whose score is below this threshold is filtered out.
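The thresholding step can be sketched as follows. The document IDs, scores, and threshold value are hypothetical, and in an actual LTRF model both the scores and the threshold would be learned rather than set by hand.

```python
def rank_and_filter(doc_scores, threshold):
    """Drop documents scoring below the threshold, then rank the rest.

    doc_scores -- mapping from document ID to model score
    threshold  -- documents with a score below this value are filtered out
    """
    kept = [(doc, score) for doc, score in doc_scores.items() if score >= threshold]
    kept.sort(key=lambda item: item[1], reverse=True)  # highest score first
    return [doc for doc, _ in kept]


# Hypothetical model scores for four candidate answers
scores = {"a1": 0.9, "a2": -0.4, "a3": 0.2, "a4": 0.05}

result = rank_and_filter(scores, threshold=0.1)
print(result)
```

Raising the threshold removes more f-docs at the risk of also dropping useful documents, which is the trade-off between the two filtering error types.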

We experiment with two tasks for which both ranking and filtering are required, using two datasets we compiled: PR (for product reviews) and CQA (for community question answering). We have publicly released the CQA dataset to support further research by the IR community on LTRF tasks.

In the PR dataset, our task is to rank product reviews according to their helpfulness while filtering those marked as spam. Similarly, in the CQA dataset our task is to rank lists of human answers to particular questions while filtering bad answers. We show that both ranking only and filtering only fail to provide high-quality ranked-and-filtered lists, measured by nDCGf score.

A key component for model training in any learning-to-rank framework is the loss function to be optimized, which determines the “loss” of the current model with respect to an optimal model. We experiment with several loss functions for model training for the two tasks, demonstrating their success in producing effective LTRF models for the simultaneous ranking-and-filtering task.

LTRF is a new research direction that poses many challenges that deserve further investigation. While our LTRF models succeed at ranking and filtering, the volume of f-docs in the retrieved lists is still too high. Improving the LTRF models is an open challenge, and we hope that our work will encourage other researchers to tackle it.