To be as useful as possible to customers, Alexa should be able to make educated guesses about the meanings of ambiguous utterances. If, for instance, a customer says, “Alexa, play the song ‘Hello’”, Alexa should be able to infer from the customer’s listening history whether the song requested is the one by Adele or the one by Lionel Richie.

One natural way to resolve such ambiguities is through collaborative filtering, the technique that Amazon.com uses to recommend products: Alexa would simply choose the song that the customer is likely to enjoy more. But voice-service customers tend not to explicitly rate individual instances of the content they receive, in the way that Amazon.com customers rate individual products. So a collaborative-filtering algorithm would have little data from which to deduce customer preferences. Moreover, customers on Amazon.com click to view items proactively, whereas voice-service customers often receive resolutions of requests passively. So a playback record does not necessarily indicate a customer preference for the played item.

In a paper titled “Play Duration based User-Entity Affinity Modeling in Spoken Dialog System”, which we’re presenting at Interspeech 2018, my colleagues and I demonstrate how to use song-play duration as an implicit rating system, on the assumption that customers will frequently cancel the playback of songs they don’t want while permitting the playback of songs they do.

We use machine learning to analyze playback duration data to infer song preference, and we use collaborative-filtering techniques to estimate how a particular customer might rate a song that he or she has never requested. Although we tested our approach on music-streaming records, it generalizes easily to any other streaming-data service, such as video or audiobooks.

Amazon has long been a leader in the field of collaborative filtering. Indeed, last year, as part of its 20th-anniversary celebration, the journal IEEE Internet Computing chose a 2003 paper on collaborative filtering by three Amazon scientists as the one paper in its publication history that had best withstood the “test of time”.

In our work, we frame the problem in the same way that the earlier paper does, but we use more contemporary techniques for solving it.

Customers’ ratings for all the songs in a music service’s catalogue could be represented as an enormous grid, or matrix. Each row represents a customer, each column represents a song, and the value entered at the intersection of any row and any column indicates the customer’s “affinity” for a particular song.
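Almost every cell of this grid is empty, because each customer has streamed only a tiny fraction of the catalogue. The following is a minimal sketch, assuming plain Python dictionaries stand in for the matrix (not the production data structure). It stores only the observed cells and treats a missing cell as "unknown" rather than zero. The customer and song identifiers are hypothetical.

```python
from collections import defaultdict

# Sparse affinity "matrix": row key = customer, column key = song.
# Only observed cells are stored; a missing cell means "unknown", not zero.
affinity: dict[str, dict[str, float]] = defaultdict(dict)

def record(customer: str, song: str, value: float) -> None:
    """Store one observed affinity value (hypothetical helper)."""
    affinity[customer][song] = value

# Hypothetical observations for the two songs titled "Hello".
record("cust_a", "hello_adele", 1.0)
record("cust_a", "hello_richie", -1.0)
record("cust_b", "hello_richie", 1.0)

def density(num_customers: int, num_songs: int) -> float:
    """Fraction of the full grid that is actually observed."""
    observed = sum(len(row) for row in affinity.values())
    return observed / (num_customers * num_songs)

print(density(num_customers=2, num_songs=2))
```

Filling in the unknown cells, such as cust_b's affinity for the Adele song, is exactly the job of collaborative filtering.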

With tens of millions of customers and tens of millions of songs, however, that matrix swells to unmanageable size. So our system learns to factorize the matrix into two much smaller matrices: one with a row for each customer but only about 50 columns, and the other with a column for each song but only about 50 rows. Multiplying the two smaller matrices yields a good approximation of the values in the full matrix.
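To make the savings concrete, the sketch below does the arithmetic with illustrative round numbers (10 million customers and 10 million songs standing in for "tens of millions", and a rank of 50). It also shows how a single entry of the full matrix is recovered from the factors: as the dot product of one customer row and one song column. The factor values here are random and untrained, so the predicted value itself is arbitrary.

```python
import random

NUM_CUSTOMERS = 10_000_000   # illustrative stand-in for "tens of millions"
NUM_SONGS = 10_000_000       # illustrative stand-in for "tens of millions"
RANK = 50                    # "about 50" latent factors, per the text

full_cells = NUM_CUSTOMERS * NUM_SONGS                  # 10^14 cells
factor_cells = NUM_CUSTOMERS * RANK + RANK * NUM_SONGS  # 10^9 cells
print(full_cells // factor_cells)  # 100000

def predict(customer_vec: list[float], song_vec: list[float]) -> float:
    """Approximate one affinity entry as the dot product of a row of the
    customer matrix and the matching column of the song matrix."""
    return sum(u * v for u, v in zip(customer_vec, song_vec))

# Toy factors drawn at random (untrained), one vector per customer/song.
rng = random.Random(0)
customer = [rng.gauss(0.0, 0.1) for _ in range(RANK)]
song = [rng.gauss(0.0, 0.1) for _ in range(RANK)]
print(predict(customer, song))
```

The two factor matrices together are about 100,000 times smaller than the grid they approximate, which is what makes the approach tractable at this scale.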

As we train our machine-learning model, we divide playbacks into two categories: those that lasted less than 30 seconds and those that lasted more. The short playbacks are assigned a score of -1, the long playbacks a score of 1.
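The labeling rule amounts to a one-line threshold. A minimal sketch follows. The text does not say which side a playback of exactly 30 seconds falls on, so treating it as "long" is an assumption.

```python
THRESHOLD_SECONDS = 30.0  # cut-off from the text

def implicit_score(duration_seconds: float) -> int:
    """Binary implicit rating: -1 for a playback shorter than 30 s, +1 otherwise.
    (Assumption: exactly 30 s counts as a long playback.)"""
    return -1 if duration_seconds < THRESHOLD_SECONDS else 1

print([implicit_score(d) for d in (3.0, 29.9, 30.0, 215.0)])  # [-1, -1, 1, 1]
```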

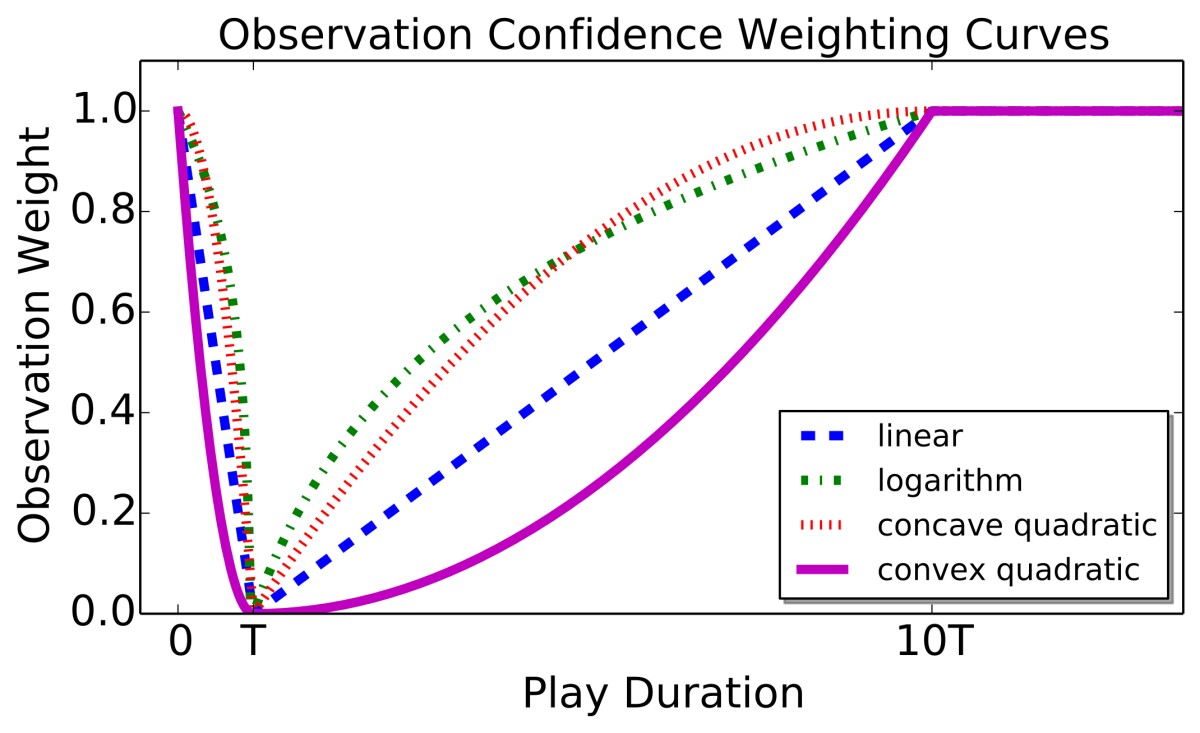

Of course, playback duration is not a perfect proxy for affinity: a knock at the door might force a customer to stop a beloved song just as it’s beginning, while a child’s shouts might pull a customer out of the room, leaving an unwanted song to play to the end. So we also add a weighting function, which gives the binary scores more or less weight depending on playback duration. For instance, during training, a score of -1 will receive a greater weight if it’s based on a one-second playback than if it’s based on a 25-second playback, and a score of 1 will receive a greater weight if it’s based on a three-minute playback than if it’s based on a 35-second playback.

We experimented with several different weighting functions and found that a convex quadratic function gave better results than a concave quadratic function, a linear function, or a logarithmic function. That is, a function that gives extra weight to particularly short durations below the 30-second threshold and particularly long durations above it works better than those that spread weights out more evenly.
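The exact weighting functions aren't given here, so the following is only a sketch of the four shapes under stated assumptions. Each weight depends on a duration's normalized distance from the 30-second threshold. The scaling constants `SHORT_SCALE` and `LONG_SCALE` are hypothetical. Every shape maps distance 0 to weight 1.0 and distance 1 to weight 2.0, so the only thing that differs is how weight is distributed in between.

```python
import math

THRESHOLD = 30.0     # seconds, from the text
SHORT_SCALE = 30.0   # hypothetical: a 0 s playback is maximally "short"
LONG_SCALE = 180.0   # hypothetical: playbacks of 210 s or more are maximally "long"

def distance(d: float) -> float:
    """Normalized distance (0..1) of a playback duration from the threshold."""
    if d < THRESHOLD:
        return min((THRESHOLD - d) / SHORT_SCALE, 1.0)
    return min((d - THRESHOLD) / LONG_SCALE, 1.0)

# Hypothetical weighting shapes, each mapping distance 0 -> 1.0 and 1 -> 2.0.
WEIGHTS = {
    "linear": lambda x: 1.0 + x,
    "convex_quadratic": lambda x: 1.0 + x * x,
    "concave_quadratic": lambda x: 1.0 + 2.0 * x - x * x,
    "logarithmic": lambda x: 1.0 + math.log2(1.0 + x),
}

def weight(d: float, shape: str = "convex_quadratic") -> float:
    """Training weight for the binary score of a playback lasting d seconds."""
    return WEIGHTS[shape](distance(d))

# The two comparisons from the text: 1 s vs 25 s, and 180 s vs 35 s.
for d in (1.0, 25.0, 35.0, 180.0):
    print(d, {name: round(weight(d, name), 3) for name in WEIGHTS})
```

Under these assumptions, the convex curve keeps durations near the threshold close to the baseline weight of 1. It reserves most of the extra weight for the extremes, whereas the concave and logarithmic curves hand out extra weight quickly even for borderline playbacks.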

Another wrinkle in our system is that it doesn’t try to learn a matrix factorization that exactly reproduces the values in the giant affinity grid; instead, it learns the factorization that best preserves the relative values of any two entries in the grid. This approach is known to help the system generalize better and avoid overfitting to noisy observations.
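One common way to "preserve relative values" is a pairwise logistic loss in the style of Bayesian Personalized Ranking (BPR). Rather than fitting each entry, it pushes the model to score a customer's preferred song above a less-preferred one. The sketch below is an assumption about how such an objective can look, not the paper's exact formulation. `lr`, `reg`, and the per-pair `weight` (which could come from a duration-based weighting function) are hypothetical parameters.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def pairwise_step(u, v_pos, v_neg, weight=1.0, lr=0.05, reg=0.01) -> float:
    """One SGD step on the weighted pairwise loss -weight * log(sigmoid(diff)),
    where diff = score(preferred song) - score(less-preferred song).
    Updates u, v_pos, v_neg in place; returns the loss before the update."""
    diff = dot(u, v_pos) - dot(u, v_neg)
    loss = -weight * math.log(sigmoid(diff))
    g = weight * (1.0 - sigmoid(diff))  # equals -d(loss)/d(diff)
    for k in range(len(u)):
        uk, pk, nk = u[k], v_pos[k], v_neg[k]
        u[k] += lr * (g * (pk - nk) - reg * uk)   # d(diff)/du = v_pos - v_neg
        v_pos[k] += lr * (g * uk - reg * pk)      # d(diff)/dv_pos = u
        v_neg[k] += lr * (-g * uk - reg * nk)     # d(diff)/dv_neg = -u
    return loss

# Toy run: one customer, one preferred song, one less-preferred song.
rng = random.Random(0)
K = 8
u = [rng.gauss(0.0, 0.1) for _ in range(K)]
pos = [rng.gauss(0.0, 0.1) for _ in range(K)]
neg = [rng.gauss(0.0, 0.1) for _ in range(K)]
first = pairwise_step(u, pos, neg)
for _ in range(500):
    last = pairwise_step(u, pos, neg)
print(round(first, 3), round(last, 3))
```

The loss depends only on the difference between two predicted scores, so the model is free to shift or rescale a customer's scores as long as their order is right. That freedom is what keeps it from chasing the exact value of every noisy observation.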

Because we don’t have a ground truth against which to measure our system’s predictions, such as customers’ ratings of the audio content they’ve streamed, we evaluated its performance by correlating the inferred affinity scores with playback durations. For evaluation, we used data collected on dates other than those used to train the model. The correlation was strong enough to demonstrate the effectiveness of the modeling approach, given that only implicit observations were available. In the future, we plan to incorporate lexical information about customer requests into the model and to move the technique into production.
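The evaluation protocol can be sketched in a few lines: hold out records from days not used in training, then correlate predicted affinity with playback duration. Pearson correlation is an assumption here (a rank correlation would be an equally reasonable choice). The records below are made-up toy values for illustration only, not results from the paper, and days are represented as plain integers.

```python
import math

def pearson(xs, ys) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

TRAIN_DAYS = {1, 2}  # hypothetical: days whose data trained the model

# Toy records: (day, predicted affinity, playback seconds). Not real data.
records = [
    (1, 0.9, 200.0),   # training day: excluded from evaluation
    (5, 0.8, 240.0),
    (5, -0.7, 4.0),
    (6, 0.1, 45.0),
    (6, -0.2, 20.0),
]

held_out = [(s, d) for day, s, d in records if day not in TRAIN_DAYS]
scores, durations = zip(*held_out)
print(round(pearson(scores, durations), 3))
```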

Paper: “Play Duration based User-Entity Affinity Modeling in Spoken Dialog System”

Acknowledgements: Nicholas Monath, Shankar Ananthakrishnan, Abishek Ravi