Echo devices have already attracted tens of millions of customers, but in the Alexa AI group, we’re constantly working to make Alexa’s speech recognition systems even more accurate.

In the home, the sounds most likely to be misinterpreted as Alexa commands come from media devices, such as TVs, radios, or music players. So learning to recognize the distinguishing characteristics of those sounds is a good way to ensure a low false-positive rate for Alexa’s speech recognizers.

Next week, at Interspeech, together with colleagues at Amazon, Imperial College London, and Dartmouth College, we will present a new machine learning system that distinguishes sounds produced by media devices from those produced by people engaging in household activities.

Our system learns the frequency characteristics of different types of sounds, but it also analyzes sounds’ arrival times at multiple microphones within an Echo device. That lets it distinguish moving sound sources, as people tend to be, from stationary ones, as media devices tend to be. In tests, we found that adding location information improves the accuracy of media audio recognition by 8% to 37%, depending on the type of audio.

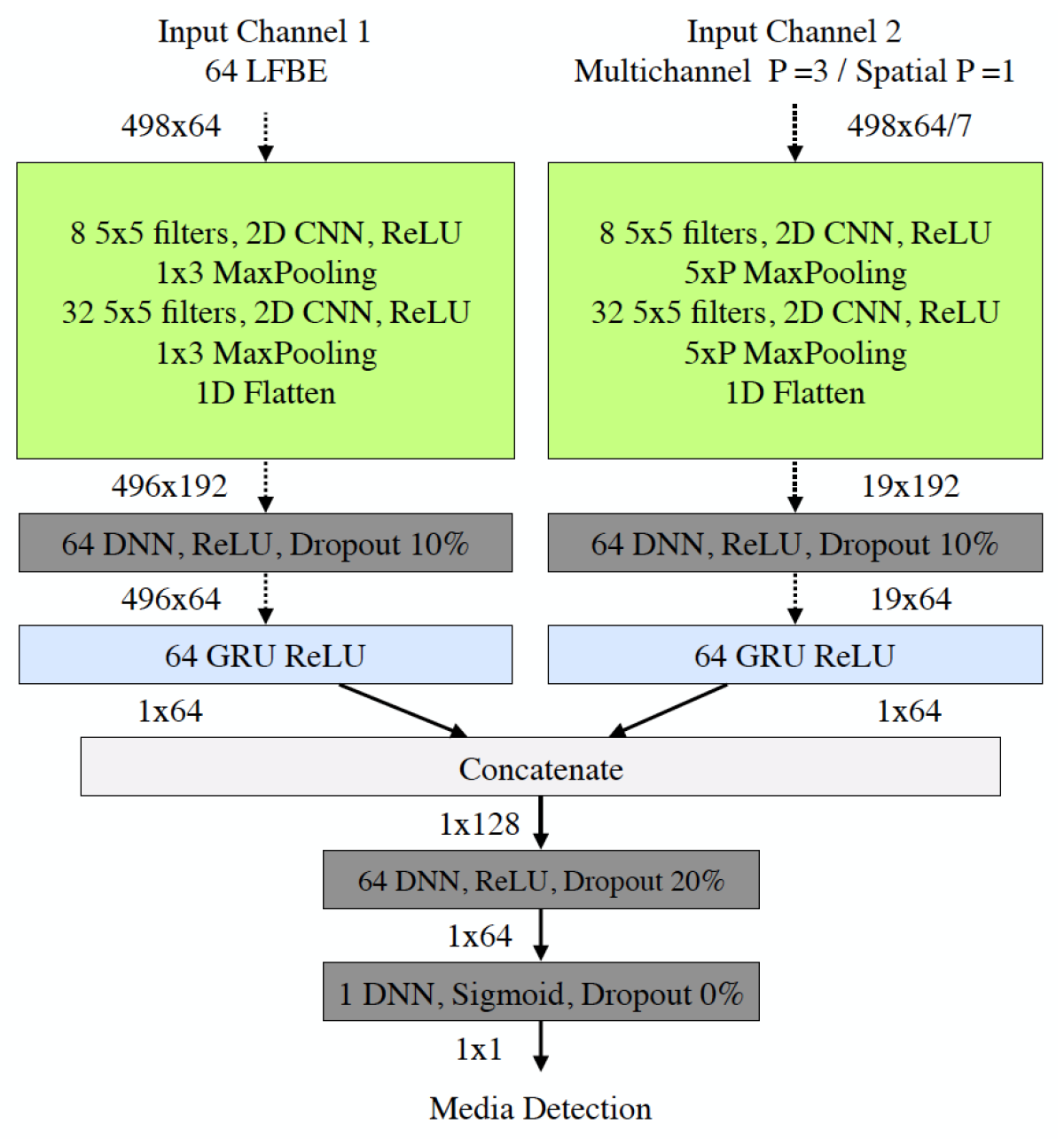

Our system has two inputs. One is the log filter-bank energies (LFBEs) of the sounds in the environment. A log filter-bank splits the sound spectrum into different frequency bands, but the bands aren’t of equal size: they get bigger as the frequencies increase. (The band sizes are defined by a logarithmic function.) This is an attempt to coarsely capture the human psychophysics of sound perception. The LFBE is simply the strength of the signal in each of these unevenly sized frequency bands. We used 64 frequency bands between 100 Hz and 7.2 kHz.
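To make the idea concrete, here is a minimal sketch of how log filter-bank energies could be computed for one audio frame. It is illustrative only and not the code used in our system. The mel scale as the logarithmic spacing, the triangular band shape, the 16 kHz sample rate, the 25-millisecond frame, and all function names are assumptions made for the example. Only the 64 bands and the 100 Hz to 7.2 kHz range come from the description above.

```python
import cmath
import math

def hz_to_mel(hz):
    # Mel scale: a common logarithmic frequency warping (an assumption here).
    return 2595.0 * math.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def band_points(n_bands=64, f_lo=100.0, f_hi=7200.0):
    """Return n_bands + 2 frequencies (Hz), evenly spaced on the mel scale."""
    lo, hi = hz_to_mel(f_lo), hz_to_mel(f_hi)
    step = (hi - lo) / (n_bands + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_bands + 2)]

def power_spectrum(frame, n_fft=512):
    """Naive DFT power spectrum for bins 0..n_fft/2 (frame is zero-padded)."""
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * n / n_fft)
                for n, x in enumerate(frame))) ** 2
        for k in range(n_fft // 2 + 1)
    ]

def lfbe(frame, sample_rate=16000, n_fft=512, n_bands=64):
    """Log energy in each triangular band; band b spans points[b]..points[b+2]."""
    points = band_points(n_bands)
    power = power_spectrum(frame, n_fft)
    bin_hz = sample_rate / n_fft
    energies = []
    for b in range(n_bands):
        left, center, right = points[b:b + 3]
        total = 0.0
        for k, p in enumerate(power):
            f = k * bin_hz
            if left < f <= center:
                total += p * (f - left) / (center - left)
            elif center < f < right:
                total += p * (right - f) / (right - center)
        energies.append(math.log(total + 1e-10))  # small floor avoids log(0)
    return energies

# Usage: a 25 ms frame containing a pure 1 kHz tone.
tone = [math.sin(2 * math.pi * 1000.0 * n / 16000) for n in range(400)]
features = lfbe(tone)
loudest = max(range(len(features)), key=features.__getitem__)
```

For the test tone, the band with the highest energy is the one whose frequency range covers 1 kHz. A practical implementation would use a fast Fourier transform rather than the naive transform shown here.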

The other input is information about the different times at which the same signal reaches the several microphones of an Echo device. In our experiments, we used data collected from an Echo Dot, which has seven microphones arranged in a ring. Sound reaches closer microphones sooner than more distant ones, so the differences in arrival time indicate the distance and direction of the sound source.
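One common way to estimate such an arrival-time difference between two microphones is to cross-correlate their signals and pick the lag with the strongest match. The sketch below shows that idea under simple assumptions: two channels, integer sample lags, a 16 kHz sample rate, and a hypothetical helper named `estimate_delay`. It is not the feature extraction used in our system.

```python
import random

def estimate_delay(reference, other, max_lag):
    """Return the lag (in samples) at which `other` best matches `reference`.

    A positive result means the sound reached `other` later than `reference`.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Cross-correlation at this lag, over the overlapping samples only.
        score = sum(
            reference[n] * other[n + lag]
            for n in range(len(reference))
            if 0 <= n + lag < len(other)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Usage: the second microphone hears the same noise burst 3 samples later.
rng = random.Random(0)
mic_a = [rng.gauss(0.0, 1.0) for _ in range(200)]
mic_b = [0.0] * 3 + mic_a[:-3]
lag = estimate_delay(mic_a, mic_b, max_lag=8)
delay_seconds = lag / 16000  # assumed 16 kHz sample rate
```

Repeating this for every microphone pair gives a set of delays that changes when the source moves and stays fixed when it does not.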

Each of these input streams passes to its own branch of a neural net, where it is separately processed and transformed, so that only the features of the audio signal most useful for differentiating media from household sounds are preserved. Then the two streams are combined, as input to yet another neural net, which learns to output a binary decision about whether or not the input signal includes audio from a media player.
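The two-branch layout can be sketched as a forward pass in plain Python. Everything specific here is an assumption made for illustration: the single dense layer per branch, the layer sizes, the six delay features, the random untrained weights, and the class name `TwoBranchClassifier`. The sketch shows only how the two streams are processed separately and then joined for a binary decision.

```python
import math
import random

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """Fully connected layer: one output per weight row."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def random_layer(rng, n_in, n_out):
    """Untrained placeholder weights, scaled by the input size."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

class TwoBranchClassifier:
    def __init__(self, n_spectral=64, n_spatial=6, hidden=16, seed=0):
        rng = random.Random(seed)
        self.spectral = random_layer(rng, n_spectral, hidden)  # LFBE branch
        self.spatial = random_layer(rng, n_spatial, hidden)    # arrival-time branch
        self.head = random_layer(rng, 2 * hidden, 1)           # joint decision

    def predict(self, lfbe_features, delay_features):
        a = dense(lfbe_features, *self.spectral, relu)
        b = dense(delay_features, *self.spatial, relu)
        # Concatenate the two branch outputs and map them to one probability.
        return dense(a + b, *self.head, sigmoid)[0]

# Usage: a probability that the frame contains media audio (weights untrained).
model = TwoBranchClassifier()
probability = model.predict([0.1] * 64, [0.0, 1.0, 2.0, 3.0, 2.0, 1.0])
is_media = probability > 0.5
```

In a trained system the weights of all three parts would be learned jointly, so each branch keeps only the features that help the final decision.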

We trained the system using 311 hours of data collected from volunteers participating in a data collection program. Only 12% of that data included media audio, so we augmented it with another 67 hours of media audio collected by single-microphone systems, which we algorithmically offset to simulate data collected by a seven-microphone system.
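A simple way to picture the offsetting step is to delay one recording by a different number of samples for each simulated microphone. The sketch below assumes a distant source (so the wavefront is flat), seven microphones evenly spaced on a ring, a hypothetical ring radius of 3.5 cm, a 16 kHz sample rate, and delays rounded to whole samples. The function names are illustrative, and the actual augmentation procedure may differ.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second, at room temperature

def ring_delays(angle_rad, n_mics=7, radius_m=0.035, sample_rate=16000):
    """Per-microphone delay in samples for a far-field source at `angle_rad`."""
    delays = []
    for i in range(n_mics):
        mic_angle = 2.0 * math.pi * i / n_mics
        # Microphones facing the source hear the wavefront earlier.
        seconds = -radius_m * math.cos(angle_rad - mic_angle) / SPEED_OF_SOUND
        delays.append(round(seconds * sample_rate))
    earliest = min(delays)
    return [d - earliest for d in delays]  # shift so every delay is >= 0

def simulate_array(signal, delays):
    """Copy `signal` once per microphone, delayed by that microphone's offset.

    Assumes every delay is no longer than the signal itself.
    """
    return [[0.0] * d + list(signal[:len(signal) - d]) for d in delays]

# Usage: turn a single-channel clip into seven offset channels.
clip = [float(n) for n in range(1, 11)]
delays = ring_delays(angle_rad=0.0)
channels = simulate_array(clip, delays)
```

Each simulated channel has the same length as the original clip, and the microphone nearest the source has a delay of zero.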

Media audio samples were labeled as speech, singing, or “other,” a category that covered background music, sound effects, and the like. The same sample could have multiple labels: someone speaking while music played in the background, for instance.

In tests, our system performed best on such multiply labeled data, recognizing media audio in any combination that included singing with perfect accuracy, and recognizing the combination of speech and “other” with 97% accuracy.

It struggled more with speech and “other” in isolation, where its accuracy was 76% and 75%, respectively. But those figures still represent an improvement over a system that used only LFBEs, without time-of-arrival data, whose accuracy on the same two categories was 68% and 62%. We believe our results show that cross-correlations between multiple microphones are well worth exploring as a way to reduce false wakes still further.

Acknowledgments: Constantinos Papayiannis, Justice Amoh, Chao Wang