Human speech conveys the speaker’s sentiment and emotion through both the words that are spoken and the manner in which they are spoken. In speech-based computing systems such as voice assistants and in human-human interactions such as call-center sessions, automatically understanding speech sentiment is important for improving customer experiences and outcomes.

To that end, the Amazon Chime software development kit (SDK) team recently released a voice tone analysis model that uses machine learning to estimate sentiment from a speech signal. We adopted a deep-neural-network (DNN) architecture that extracts and jointly analyzes both lexical/linguistic information and acoustic/tonal information. The inference runs in real time on short signal segments and returns a set of probabilities as to whether the segment expresses positive, neutral, or negative sentiment.

DNN architecture and two-stage training

Prior approaches to voice-based sentiment and emotion analysis typically consisted of two steps: estimating a predetermined set of signal features, such as pitch and spectral-energy fluctuations, and classifying sentiment based on those features. Such methods can be effective for classification of relatively short emotional utterances, but their performance degrades for natural conversational speech signals.

In a natural conversation, lexical features play an important role in conveying sentiment or emotion. As an alternative to acoustic-feature methods, some sentiment analysis approaches focus purely on lexical features by analyzing a transcript of the speech.

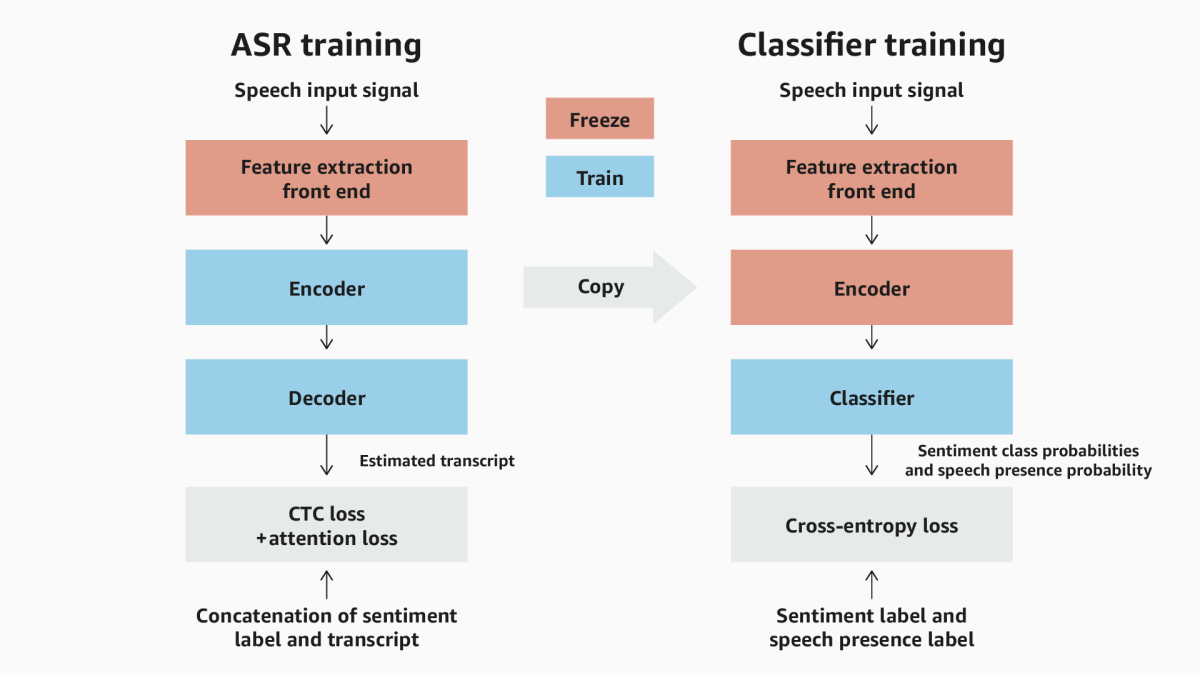

Amazon’s voice tone analysis takes a hybrid approach. To ensure that our model incorporates acoustic features as well as linguistic information, we begin with an automatic-speech-recognition (ASR) model with an encoder-decoder architecture. We train the model to recognize both sentiment and speech, and then we freeze its encoder for use as the front end of a sentiment classification model.

The ASR branch and the classification branch of our model are both fed by a deep-learning front end that is pretrained using self-supervision to extract meaningful speech signal features, rather than the predetermined acoustic features used in prior voice-based approaches.

The encoder of the ASR branch corresponds to what is often referred to as an acoustic model, which maps acoustic signal features to abstract representations of word chunks or phonemes. The decoder corresponds to a language model that assembles these chunks into meaningful words.

In the ASR training, the front end is held fixed, and the ASR encoder and decoder parameters are trained on transcripts with a prepended sentiment label, for instance, “Positive I am so happy.” The loss function scores the model on how well it maps input features to both the sentiment label and the transcript. The encoder thus learns both tonal information and lexical information.
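The target construction described above can be sketched in a few lines of Python. This is a minimal illustration, not the production pipeline. Several details are assumptions chosen to mirror the “Positive I am so happy.” example: the capitalized label words, the single-space separator, and the helper names `make_asr_target` and `split_target`.

```python
from typing import Tuple

# Assumed label vocabulary, mirroring the "Positive I am so happy." example.
SENTIMENT_LABELS = {"positive": "Positive", "neutral": "Neutral", "negative": "Negative"}


def make_asr_target(sentiment: str, transcript: str) -> str:
    """Prepend the sentiment label word to the transcript to form one ASR target."""
    label = SENTIMENT_LABELS[sentiment.lower()]
    return f"{label} {transcript.strip()}"


def split_target(target: str) -> Tuple[str, str]:
    """Recover (label, transcript) from a decoded target string."""
    label, _, transcript = target.partition(" ")
    return label, transcript


target = make_asr_target("positive", "I am so happy.")
print(target)                # Positive I am so happy.
print(split_target(target))  # ('Positive', 'I am so happy.')
```

Because the label is simply the first token of each target, a single sequence loss covers both tasks. The encoder representations therefore have to support predicting the sentiment label as well as the words.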

After the ASR training is complete, the classifier branch is constructed using the pretrained front end and the trained ASR encoder. The encoder output is connected to a lightweight classifier, and the parameters of the front end and the ASR encoder are both frozen. Then, using sentiment-labeled speech inputs, we train the classifier to output the probabilities of positive, neutral, and negative sentiment. The classifier is also trained to detect speech presence, that is, whether the input contains any speech at all.

The purpose of training a second branch for classification is to focus on those outputs of the ASR encoder that are important for estimating sentiment and speech presence and to deemphasize the outputs corresponding to the actual transcript of the input utterance.
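The classifier-training stage can be sketched in plain Python. The sketch assumes that the frozen front end and encoder have already produced a sequence of frame vectors for each input. It mean-pools those frames (the article does not say how encoder outputs are pooled) and trains only two heads: a linear softmax head for the three sentiments and a linear sigmoid head for speech presence. The class name `LightweightClassifier`, the pooling choice, the zero initialization, and the plain SGD update are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

SENTIMENTS = ("positive", "neutral", "negative")


def mean_pool(frames: Sequence[Sequence[float]]) -> List[float]:
    """Average frozen-encoder frame vectors over time into one utterance vector."""
    dim = len(frames[0])
    return [sum(f[d] for f in frames) / len(frames) for d in range(dim)]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class LightweightClassifier:
    """Linear heads on top of frozen encoder features; only these weights are trained."""

    def __init__(self, dim: int) -> None:
        self.sent_w = [[0.0] * dim for _ in SENTIMENTS]
        self.sent_b = [0.0] * len(SENTIMENTS)
        self.speech_w = [0.0] * dim
        self.speech_b = 0.0

    def _forward(self, x: Sequence[float]) -> Tuple[List[float], float]:
        logits = [sum(w * xi for w, xi in zip(row, x)) + b
                  for row, b in zip(self.sent_w, self.sent_b)]
        speech_logit = sum(w * xi for w, xi in zip(self.speech_w, x)) + self.speech_b
        return softmax(logits), sigmoid(speech_logit)

    def predict(self, encoder_frames: Sequence[Sequence[float]]) -> Tuple[Dict[str, float], float]:
        probs, speech_prob = self._forward(mean_pool(encoder_frames))
        return dict(zip(SENTIMENTS, probs)), speech_prob

    def train_step(self, encoder_frames: Sequence[Sequence[float]], sentiment: str,
                   has_speech: bool, lr: float = 0.1) -> float:
        """One SGD step on cross-entropy (sentiment) plus binary cross-entropy (speech).
        Encoder frames are constant inputs, so nothing upstream of the heads changes."""
        x = mean_pool(encoder_frames)
        probs, speech_prob = self._forward(x)
        target = SENTIMENTS.index(sentiment)
        for k, p in enumerate(probs):
            g = p - (1.0 if k == target else 0.0)  # d(cross-entropy)/d(logit_k)
            self.sent_w[k] = [w - lr * g * xi for w, xi in zip(self.sent_w[k], x)]
            self.sent_b[k] -= lr * g
        g = speech_prob - (1.0 if has_speech else 0.0)  # d(BCE)/d(speech logit)
        self.speech_w = [w - lr * g * xi for w, xi in zip(self.speech_w, x)]
        self.speech_b -= lr * g
        sentiment_loss = -math.log(probs[target])
        speech_loss = -math.log(speech_prob if has_speech else 1.0 - speech_prob)
        return sentiment_loss + speech_loss


clf = LightweightClassifier(dim=2)
frames = [[1.0, 0.2], [0.8, 0.0]]  # stand-in for frozen encoder output
for _ in range(50):
    clf.train_step(frames, "positive", has_speech=True)
probs, speech_prob = clf.predict(frames)
```

The encoder frames enter `train_step` only as constant inputs, so the gradient updates touch nothing but the head weights. That is all that freezing the front end and encoder amounts to in this sketch.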

Using heterogeneous datasets for training

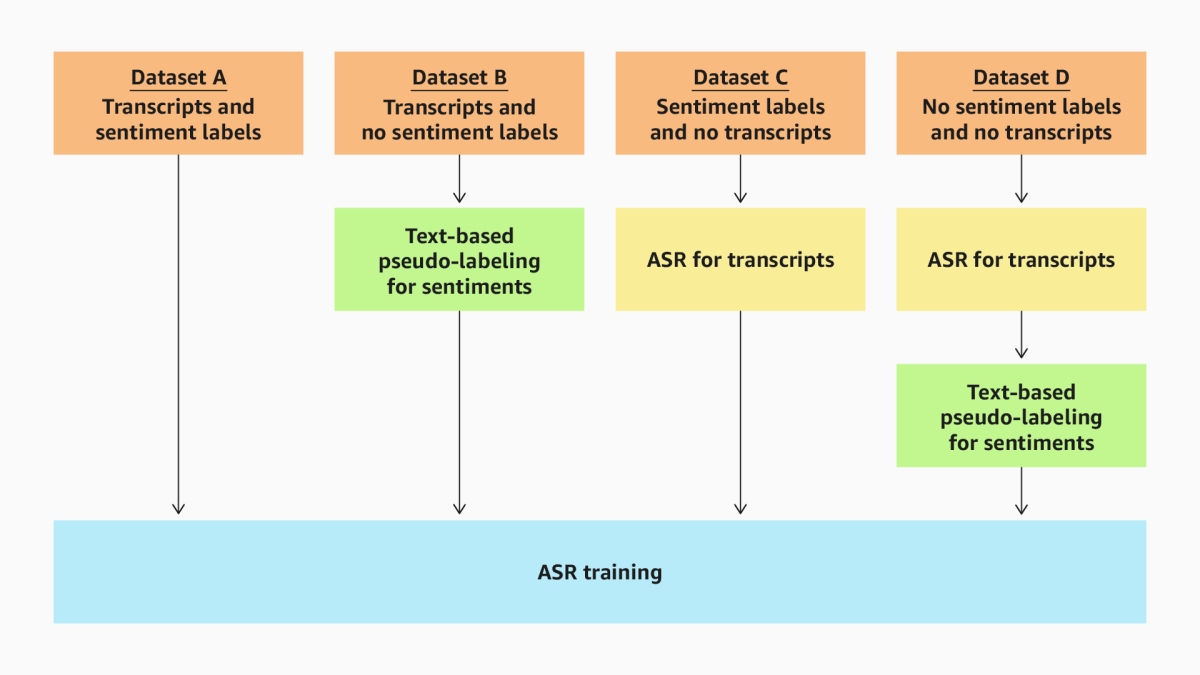

Prior models for voice-based sentiment and emotion analysis have generally been trained on small-scale datasets of relatively short utterances, which limits their effectiveness on natural conversations. In our work, we instead train on a combination of multiple datasets.

Our multitask-learning approach requires that all of the training datasets include both transcripts and sentiment labels, but available datasets do not always have both. For datasets that have transcripts but no sentiment labels, we use Amazon Comprehend to estimate text-based sentiment from the transcripts. For datasets that have sentiment labels but no transcripts, we use Amazon Transcribe to estimate transcripts. For speech datasets that have neither, we use Amazon Transcribe and Amazon Comprehend to estimate both.
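The label-completion rules above amount to a small decision procedure applied to each utterance. In the sketch below, `transcribe_fn` and `text_sentiment_fn` are hypothetical callables standing in for Amazon Transcribe and Amazon Comprehend. The real services are network APIs with their own request and response formats, which are not modeled here. Comprehend's sentiment detection can also return a MIXED label, and the article does not describe how such outputs were mapped to the three classes.

```python
from dataclasses import dataclass, replace
from typing import Callable, Optional


@dataclass(frozen=True)
class Utterance:
    audio_path: str
    transcript: Optional[str] = None
    sentiment: Optional[str] = None  # "positive" | "neutral" | "negative"


def complete_labels(
    utt: Utterance,
    transcribe_fn: Callable[[str], str],      # hypothetical stand-in for Amazon Transcribe
    text_sentiment_fn: Callable[[str], str],  # hypothetical stand-in for Amazon Comprehend
) -> Utterance:
    """Fill in whichever of transcript / sentiment label is missing."""
    transcript = utt.transcript
    if transcript is None:
        # Audio without a transcript: estimate one from the speech.
        transcript = transcribe_fn(utt.audio_path)
    sentiment = utt.sentiment
    if sentiment is None:
        # Text-based sentiment from the (possibly estimated) transcript.
        sentiment = text_sentiment_fn(transcript)
    return replace(utt, transcript=transcript, sentiment=sentiment)
```

For an utterance with neither field, the sketch estimates the transcript first and then derives the sentiment from that estimated transcript. Utterances that already carry both fields pass through without calling either service.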

With this approach, we can carry out the ASR training on multiple datasets. We also use data augmentation to make the model robust to varying input signal conditions. Our augmentation stack consists of spectral augmentation, speech rate changes (95%, 100%, and 105%), reverberation, and additive noise at signal-to-noise ratios (SNRs) from 0 dB to 15 dB. In addition to training on diverse datasets, we also test on diverse data and assess model performance across various data cross sections to ensure fairness across demographic groups.
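Two of the listed augmentations can be sketched with the standard library: speech-rate change and additive noise at a target SNR. This is an illustrative sketch, not the production augmentation stack. The linear-interpolation resampler, the uniform SNR draw, and the function names are assumptions. Like simple speed perturbation, this resampler shifts pitch along with tempo. Spectral augmentation and reverberation are omitted. The noise is scaled by $a = \sqrt{P_s / (P_n \cdot 10^{\mathrm{SNR}/10})}$ so that $10\log_{10}(P_s / (a^2 P_n))$ equals the target SNR.

```python
import math
import random
from typing import List, Sequence

SPEED_FACTORS = (0.95, 1.0, 1.05)  # speech-rate changes listed in the text
SNR_RANGE_DB = (0.0, 15.0)         # additive-noise range listed in the text


def mean_power(x: Sequence[float]) -> float:
    return sum(s * s for s in x) / len(x)


def add_noise_at_snr(speech: Sequence[float], noise: Sequence[float], snr_db: float) -> List[float]:
    """Mix noise into speech so that 10*log10(P_speech / P_scaled_noise) == snr_db."""
    noise = [noise[i % len(noise)] for i in range(len(speech))]  # loop or trim noise to length
    p_noise = mean_power(noise)
    if p_noise == 0.0:
        raise ValueError("noise signal has zero power")
    scale = math.sqrt(mean_power(speech) / (p_noise * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(speech, noise)]


def change_speed(signal: Sequence[float], factor: float) -> List[float]:
    """Resample by linear interpolation; factor > 1 yields a shorter, faster signal."""
    out_len = int(len(signal) / factor)
    out = []
    for i in range(out_len):
        pos = i * factor
        j = int(pos)
        frac = pos - j
        nxt = signal[j + 1] if j + 1 < len(signal) else signal[j]
        out.append((1.0 - frac) * signal[j] + frac * nxt)
    return out


def augment(speech: Sequence[float], noise: Sequence[float], rng: random.Random) -> List[float]:
    """Apply a random speech-rate change, then add noise at a random SNR."""
    sped = change_speed(speech, rng.choice(SPEED_FACTORS))
    return add_noise_at_snr(sped, noise, rng.uniform(*SNR_RANGE_DB))
```

Drawing the speed factor and SNR independently for each example means the same utterance can be seen under many conditions across epochs.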

In our tests, we found that our hybrid model outperformed prior methods that relied solely on either text or acoustic data.

Bias reduction for sentiment labels

Because sentiment labels are not uniformly distributed in the training datasets, we use several bias reduction techniques. In the ASR training stage, we sample the data so that the distribution of sentiment labels is uniform. In the classifier training stage, we weight each sentiment class’s term in the loss function in inverse proportion to the frequency of that sentiment label.

In general, neutral sentiment is more prevalent in datasets than positive or negative sentiment. Our sampling and loss-function-weighting techniques can reduce the resulting bias in detection rates across the neutral, positive, and negative classes, improving model accuracy.
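The two bias-reduction steps described in this section can be sketched with the standard library. The sketch assumes a flat list of per-utterance sentiment labels. It draws a class uniformly and then an example within that class, which is one way to achieve a uniform label distribution. It also scales the inverse-frequency weights so that they average to 1 per training example. That scaling is a convenience choice, not something the article specifies.

```python
import math
import random
from collections import Counter, defaultdict
from typing import Dict, List, Sequence


def class_balanced_indices(labels: Sequence[str], n: int, rng: random.Random) -> List[int]:
    """ASR stage: sample n example indices so every sentiment class is equally likely."""
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    classes = sorted(by_class)
    return [rng.choice(by_class[rng.choice(classes)]) for _ in range(n)]


def inverse_frequency_weights(labels: Sequence[str]) -> Dict[str, float]:
    """Classifier stage: w_c = N / (K * n_c), inversely proportional to class frequency.
    With this scaling, every class contributes the same total weight, N / K."""
    counts = Counter(labels)
    total, num_classes = len(labels), len(counts)
    return {c: total / (num_classes * n_c) for c, n_c in counts.items()}


def weighted_cross_entropy(probs: Dict[str, float], label: str, weights: Dict[str, float]) -> float:
    """Per-example loss term scaled by the weight of its sentiment class."""
    return -weights[label] * math.log(probs[label])


labels = ["neutral"] * 6 + ["positive"] * 3 + ["negative"] * 1
weights = inverse_frequency_weights(labels)
```

In this toy example, neutral labels are six times as frequent as negative ones. Each negative example therefore carries six times the loss weight of a neutral example, and the three classes contribute equally in aggregate.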

Real-time inference

The inference model for our voice tone analysis consists of the pretrained front end, the trained ASR encoder, and the trained classifier. Because the classifier is much less computationally costly than an ASR decoder, our voice tone analysis system can run inference at lower cost than a system that requires a complete text transcript.

Our voice tone analysis model has been deployed as a voice analytics capability in Amazon Chime SDK call analytics, and in its production configuration, it is run on five-second voice segments every 2.5 seconds to provide real-time probability estimates for speech presence and sentiment. The model is configured to use the short-term sentiment probabilities to compute sentiment estimates over the past 30 seconds of active speech as well as over the full duration of the speech signal.
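The production cadence described above can be sketched as a sliding window plus a running aggregator. The article does not say how the short-term probabilities are combined, so this illustration rests on several assumptions:

- Segments whose speech-presence probability falls below a hypothetical 0.5 threshold are skipped.
- The "past 30 seconds of active speech" is approximated as the last 12 active segments (30 s divided by the 2.5 s hop).
- Both estimates are simple averages of the per-segment (positive, neutral, negative) probabilities.

```python
from collections import deque
from typing import List, Optional, Sequence

SEGMENT_S = 5.0          # segment length from the text
HOP_S = 2.5              # analysis interval from the text
RECENT_ACTIVE_S = 30.0   # recent-window length from the text


def segment_starts(duration_s: float, segment_s: float = SEGMENT_S, hop_s: float = HOP_S) -> List[float]:
    """Start times of the overlapping 5 s segments analyzed every 2.5 s."""
    starts, t = [], 0.0
    while t + segment_s <= duration_s:
        starts.append(t)
        t += hop_s
    return starts


class SentimentAggregator:
    """Running (positive, neutral, negative) estimates from per-segment outputs."""

    def __init__(self, speech_threshold: float = 0.5) -> None:
        self.speech_threshold = speech_threshold  # hypothetical threshold
        self.recent = deque(maxlen=int(RECENT_ACTIVE_S / HOP_S))  # ~30 s of active speech
        self.totals = [0.0, 0.0, 0.0]
        self.active_segments = 0

    def update(self, sentiment_probs: Sequence[float], speech_prob: float) -> None:
        if speech_prob < self.speech_threshold:
            return  # skip non-speech segments so silence does not dilute the estimates
        self.recent.append(tuple(sentiment_probs))
        self.totals = [t + p for t, p in zip(self.totals, sentiment_probs)]
        self.active_segments += 1

    def recent_estimate(self) -> Optional[List[float]]:
        """Average over roughly the last 30 s of active speech."""
        if not self.recent:
            return None
        return [sum(p[k] for p in self.recent) / len(self.recent) for k in range(3)]

    def full_estimate(self) -> Optional[List[float]]:
        """Average over all active segments seen so far."""
        if self.active_segments == 0:
            return None
        return [t / self.active_segments for t in self.totals]
```

A bounded `deque` keeps the recent window in constant memory. The full-duration estimate needs only running sums, so neither estimate requires storing the whole call.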