At Prime Video, we’re delivering content to millions of customers on more than 8,000 device types, such as gaming consoles, TVs, set-top boxes, and USB-powered streaming sticks. When we want to do an update, every one of those devices requires a separate native release, posing a difficult trade-off between updatability and performance.

In the past year, we’ve been using WebAssembly (Wasm), a framework that allows code written in high-level languages to run efficiently on any device, to help resolve that trade-off. Because we are excited to contribute to the Wasm ecosystem, Amazon has joined the Bytecode Alliance, a consortium dedicated to developing secure, efficient, modular, and portable runtime environments built atop standards such as Wasm.

By using Wasm instead of JavaScript for certain elements of the Prime Video app, we’ve reduced the average frame times on a mid-range TV from 28 milliseconds to 18. The worst-case frame times also decreased from 40 milliseconds to 25. And in ongoing work we’re driving the frame time down still further.

Division of labor

To enable efficient updates on a wide variety of devices while still maintaining performance, the Prime Video app has two parts: a high-performance engine written in C++ that is stored on-device and an easy-to-update component that is downloaded every time the app launches.

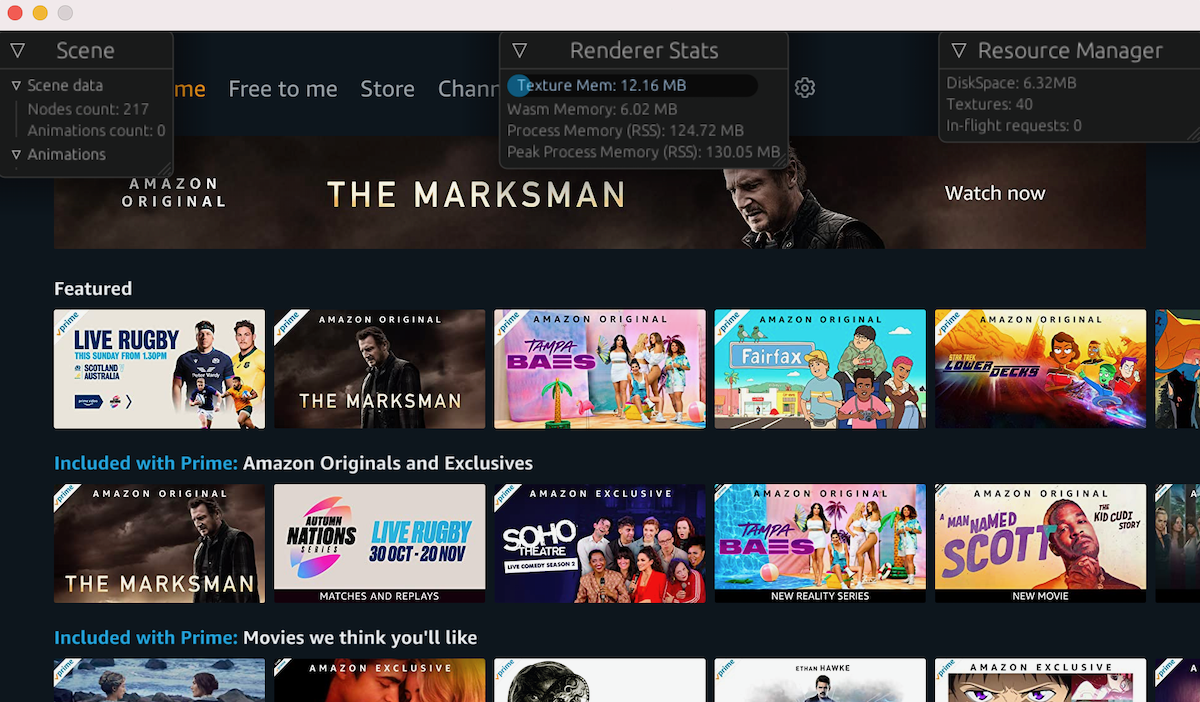

In the figure above, the stuff on device is a thin C++ layer that includes a JavaScript virtual machine (VM) and the components required to run the Prime Video application, which handle input, the media pipeline, and such processes as such as network access, image decoding, and window events handling.

The stuff we download (at run time) includes the application code, along with low-level components that handle scene management, the animation system, graphics rendering, layout, and resource management, among other things. Historically, these components all used JavaScript

This architecture split allows us to deliver new features and bug fixes without having to go through the very slow process of updating the C++ layer. The downloadable code is delivered through a fully automated continuous integration and delivery pipeline that can release updates as often as every few hours. However, we have a constant tension between writing code that’s performant (C++) and writing less-performant code that we can update with ease (JavaScript).

WebAssembly

Wasm provides a compilation target for programming languages that offer more expressivity than JavaScript does, such as C or Rust. Like JavaScript code, compiled Wasm binaries run on a VM that provides a uniform interface between code and hardware, regardless of device.

Wasm was initially intended for web browsers, but there are now standalone applications of Wasm VMs outside the browser, such as running Internet-of-things software, game mods, and server-side workloads.

Our Wasm investigations started in August 2020, when we built some prototypes to compare the performance of Wasm VMs and JavaScript VMs in simulations involving the type of work our low-level JavaScript components were doing. In those experiments, code written in Rust and compiled to Wasm was 10 to 25 times as fast as JavaScript.

We can’t just rewrite the Prime Video application in Rust and run it on a Wasm VM, however, as it still needs to run on legacy devices and browsers that don’t have Wasm support. We also don’t want to create a new app only for the new architecture, as we value deploying the same application across environments.

This is why we moved only the low-level systems from JavaScript to Wasm. In this way, we still bring performance benefits to the application, without the application teams’ having to know or care that we run certain systems on a Wasm VM.

This is what our new architecture looks like:

The Wasm binaries are deployed with the JavaScript code, through the same fully automated pipeline that can take a program from code commit to running on customers’ devices in a few hours.

The switch

The figure above shows the new architecture with a Wasm VM and a JavaScript VM running in separate threads. But how did we transition from the first architecture to the second one without rewriting the app?

The first step is updating the stuff on device to include the Wasm VM, so it can now run both versions of a given software component (JavaScript only or JavaScript and Wasm). This allows us to gradually release the Wasm components to a subset of customers.

We had to modify how the Prime Video application communicates with these components. At a high level, the application works by creating a scene — a representation of a visual scene — which consists of nodes whose implementations are device specific. A host node (e.g., view, image, text) is a data structure that has all the necessary information to update and render a component of the visual scene.

At startup, we check if we’re running on a device that has Wasm available. If it does, we create lightweight host nodes in JavaScript that don’t do anything other than send commands to the Wasm VM. The “real” host nodes are created in Wasm when these commands are handled.

We use messages to communicate between the two VMs because we don’t want the JavaScript VM work to interrupt the Wasm VM work. The job of the Wasm components is to update nodes and pump frames out to the screen as fast as possible without any interruptions.

The hard part was doing this switch in a way that preserves the behavior of the JavaScript systems. We sometimes had to duplicate the “incorrect” behavior of the JavaScript renderer in the new Wasm version, because the app relied on it for some edge cases. Making sure the JavaScript VM code never calls any dangerous function on the wrong thread has also added extra difficulties.

Results

As I mentioned, the switch to Rust and Wasm has improved the applications’ frame rate stability and speed. To reach our goal of reliable 60-frame-per-second frame generation and improve input latency, we will move more systems to Wasm, such as focus management and layout.

The total memory consumption for the Wasm VM, including the module instance, environment, and the module itself is at most 7.5 megabytes. By moving these systems to Wasm, we have saved a total of 30 megabytes of JavaScript heap memory. Memory is a scarce resource on most of the devices we deploy on, so this is a welcome reduction.

The binary size of our Wasm module is 150 kilobytes when compressed (750 kilobytes uncompressed, after symbol stripping). The module’s small size, coupled with the fast VM start time, means that the addition of Wasm doesn’t affect the app start-up time.

Using Rust has enabled programmers of all experience levels to contribute code without requiring reviewers to carefully scrutinize every line for safety pitfalls. We trust the compiler, and we can focus our code reviews on functionality, not language corner cases.

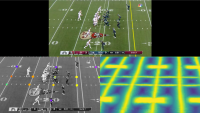

Another benefit of using Rust is having access to an ecosystem of high-quality libraries. For instance, we built an application that overlays debugger information on an application scene render using egui, a Rust GUI library. Integrating egui with our Wasm renderer took a couple of hours of work and offers us an easy way to gain insights into the engine’s internals.

Overall, we think that this investment in Rust and WebAssembly has paid off: after a year and 37,000 lines of Rust code, we have significantly improved performance, stability, and CPU consumption and reduced memory utilization.