The expanding use of generative-AI applications has increased the demand for accurate, cost-effective large language models (LLMs). The cost of an LLM varies significantly with its size, typically measured by the number of parameters: switching to the next smaller size often yields cost savings of 70% to 90%. However, simply using smaller, lighter-weight LLMs is not always a viable option due to their diminished capabilities compared to state-of-the-art "frontier LLMs."

While reduction in parameter size usually diminishes performance, evidence suggests that smaller LLMs, when specialized to perform tasks like question-answering or text summarization, can match the performance of larger, unmodified frontier LLMs on those same tasks. This opens the possibility of balancing cost and performance by breaking complex tasks into smaller, manageable subtasks. Such task decomposition enables the use of cost-effective, smaller, more-specialized task- or domain-adapted LLMs while providing control, increasing troubleshooting capability, and potentially reducing hallucinations.

However, this approach comes with trade-offs: while it can lead to significant cost savings, it also increases system complexity, potentially offsetting some of the initial benefits. This blog post explores the balance between cost, performance, and system complexity in task decomposition for LLMs.

As an example, we'll consider the case of using task decomposition to generate a personalized website, demonstrating potential cost savings and performance gains. However, we'll also highlight the potential pitfalls of overengineering, where excessive decomposition can lead to diminishing returns or even undermine the intended benefits.

I. Task decomposition

Ideally, a task would be decomposed into subtasks that are independent of each other. That allows for the creation of targeted prompts and contexts for each subtask, which makes troubleshooting easier by isolating failures to specific subtasks, rather than requiring analysis of a single, large, black-box process.

Sometimes, however, decomposition into independent subtasks isn't possible. In those cases, prompt engineering or information retrieval may be necessary to ensure coherence between subtasks. Overengineering should still be avoided, as it can unnecessarily complicate workflows. It also runs the risk of sacrificing the novelty and contextual richness that LLMs can provide by capturing hidden relationships within the complete context of the original task.

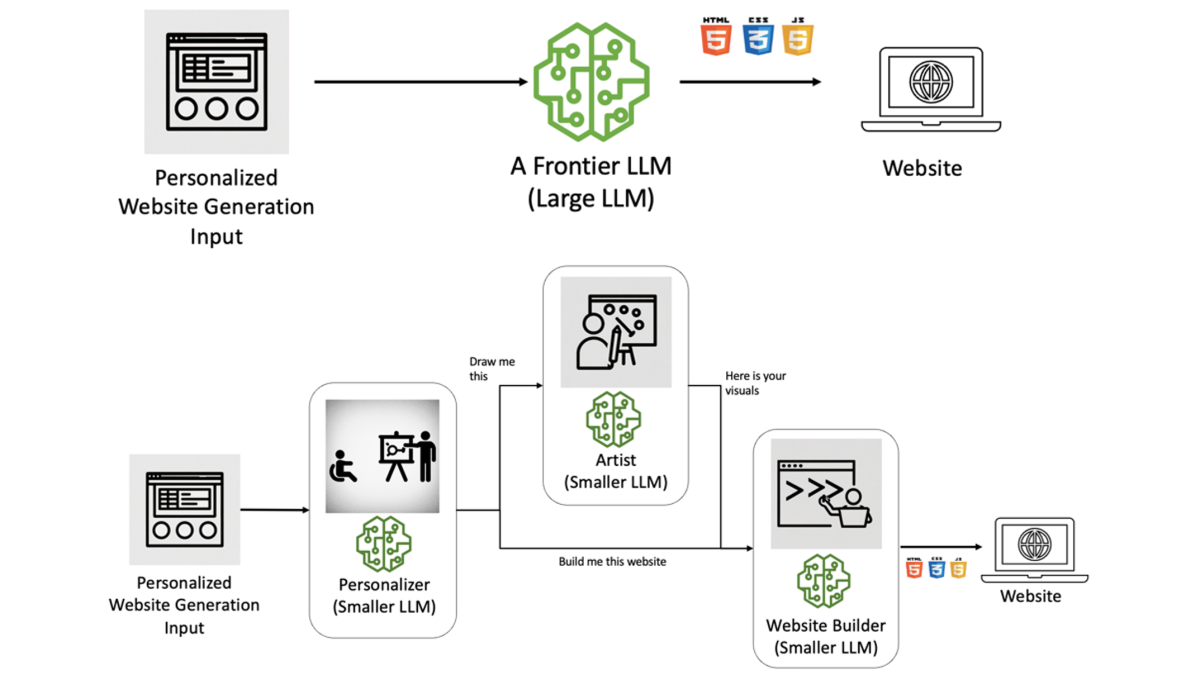

But we'll address these points later. First, let us provide an example in which the task of personalized website generation is decomposed into an agentic workflow. The agents in an agentic workflow might be functional agents, which perform specific tasks (e.g., database query), or persona-based agents, which mimic human roles in an organization (e.g., UX designer). In this post, we'll focus on the persona-based approach.

A simple example: Creating a personalized website

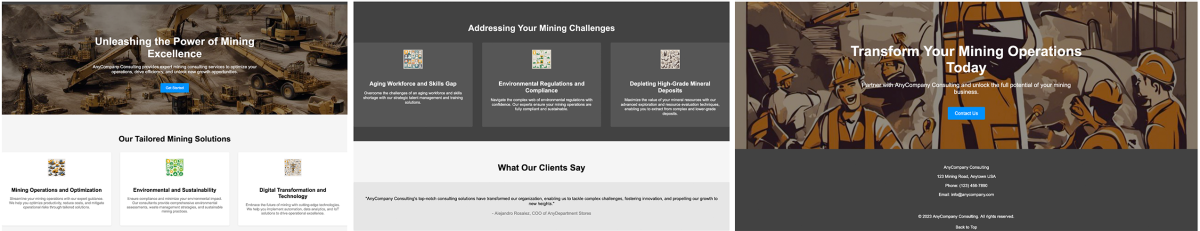

In our scenario, a business wants to create a website builder that generates tailored web experiences for individual visitors, without human supervision. Generative AI's creativity and ability to work under uncertainty make it suitable for this task. However, it is crucial to control the workflow, both to ensure adherence to company policies, best practices, and design guidelines and to manage cost and performance.

This example is based on an agentic-workflow solution we published on the Amazon Web Services (AWS) Machine Learning Blog. For that solution, we divided the overall process into subtasks of a type ordinarily assigned to human agents, such as the personalizer (UX/UI designer/product manager), artist (visual-art creator), and website builder (front-end developer).

The personalizer agent aims to provide tailored experiences for website visitors by considering both their profiles and the company's policies, offerings, and design approaches. The agent is a medium-sized text-to-text LLM with some reasoning skills. It also incorporates retrieval-augmented generation (RAG) to leverage vetted "company research."

Here’s a sample prompt for the personalizer:

You are an AI UI/UX designer tasked with creating a visually appealing website. Keep in mind the industry pain points [specify relevant pain points — RAG retrieved] to ensure a tailored experience for your customer [provide customer profile — JSON to natural language]. In your response, provide two sections: a website description for front-end developers and visual elements for the artists to follow. You should follow the design guidelines [include relevant design guidelines].
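As a minimal sketch of how the bracketed placeholders in this prompt might be filled, assume the customer profile arrives as a flat JSON object and that retrieval has already returned a list of pain points. The function names, profile fields, and sample values below are hypothetical illustrations and are not taken from the published solution.

```python
import json

# Template mirroring the sample prompt; the braces mark the bracketed slots.
PERSONALIZER_TEMPLATE = (
    "You are an AI UI/UX designer tasked with creating a visually appealing website. "
    "Keep in mind the industry pain points {pain_points} to ensure a tailored "
    "experience for your customer {customer_profile}. In your response, provide two "
    "sections: a website description for front-end developers and visual elements "
    "for the artists to follow. You should follow the design guidelines {guidelines}."
)


def profile_to_text(profile_json: str) -> str:
    """Turn a flat JSON profile into a short natural-language description."""
    profile = json.loads(profile_json)
    # Assumes a flat object; nested values would need their own handling.
    parts = [f"{key.replace('_', ' ')}: {value}" for key, value in profile.items()]
    return "; ".join(parts)


def build_personalizer_prompt(profile_json: str, pain_points: list, guidelines: str) -> str:
    """Fill the template; pain_points stands in for RAG-retrieved text."""
    return PERSONALIZER_TEMPLATE.format(
        pain_points="; ".join(pain_points),
        customer_profile=profile_to_text(profile_json),
        guidelines=guidelines,
    )


# Hypothetical inputs, for illustration only.
prompt = build_personalizer_prompt(
    '{"industry": "retail", "company_size": "small"}',
    ["slow checkout", "low mobile conversion"],
    "use the brand color palette",
)
print(prompt)
```

Keeping the template separate from the code that fills it makes it easier to review the prompt against company guidelines without reading the retrieval logic.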

The artist agent's role is to reflect the visual-elements description in a well-defined image, whether it's a background image or an icon. Text-to-image prompts are more straightforward, starting with "Create an [extracted from personalizer response]."

The final agent is the front-end developer, whose sole responsibility is to create the front-end website artifacts. Here, you can include your design systems, code snippets, or other relevant information. In our simple case, we used this prompt:

You are an experienced front-end web developer tasked with creating an accessible, [specify the website's purpose] website while adhering to the specified guidelines [include relevant guidelines]. Carefully read the 'Website Description' [response from personalizer] provided by the UI/UX designer AI and generate the required HTML, CSS, and JavaScript code to build the described website. Ensure that [include specific requirements].

From here, you can extend the workflow with a quality assurance (QA) agent or add a final pass that checks for discrepancies.
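The three agents described above could be wired together roughly as follows. This is a sketch under stated assumptions: each model is represented by a plain Python callable (no real model API is shown), and the personalizer is assumed to label its two sections with the headers `Website Description:` and `Visual Elements:`, a format chosen for this illustration rather than one stated in the post.

```python
from typing import Callable, Dict

# A model is any callable that maps a prompt string to a response string.
Model = Callable[[str], str]


def split_sections(response: str) -> Dict[str, str]:
    """Split the personalizer response into its two labeled sections.

    Assumes the description comes first and both headers are present;
    str.index raises ValueError if a header is missing.
    """
    desc_tag, visual_tag = "Website Description:", "Visual Elements:"
    desc_start = response.index(desc_tag) + len(desc_tag)
    visual_start = response.index(visual_tag)
    return {
        "description": response[desc_start:visual_start].strip(),
        "visuals": response[visual_start + len(visual_tag):].strip(),
    }


def run_workflow(personalizer_prompt: str, personalizer: Model,
                 artist: Model, developer: Model) -> Dict[str, str]:
    """Run personalizer first, then hand each section to its agent."""
    sections = split_sections(personalizer(personalizer_prompt))
    # In this sketch, the artist and developer depend only on the
    # personalizer output, so these two calls could run in parallel.
    image = artist(f"Create an {sections['visuals']}")
    code = developer(
        "You are an experienced front-end web developer. Carefully read the "
        f"'Website Description' {sections['description']} and generate the "
        "required HTML, CSS, and JavaScript code."
    )
    return {"image": image, "code": code}


# Stub models so the sketch runs without any external service.
def fake_personalizer(prompt: str) -> str:
    return ("Website Description: A one-page store.\n"
            "Visual Elements: abstract blue hero image")


result = run_workflow(
    "personalizer prompt goes here",
    fake_personalizer,
    lambda p: f"<image for: {p}>",
    lambda p: "<html></html>",
)
print(result["image"])
```

Because each agent is just a callable, a failure can be traced to one step by inspecting that step's prompt and response in isolation, which is the troubleshooting benefit described earlier.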

II. The big trade-off and the trap of overengineering

Task decomposition typically introduces additional components (new LLMs, orchestrators), increasing complexity and adding overhead. While smaller LLMs may offer faster performance, the increased complexity can lead to higher latency. Thus, task decomposition should be evaluated within the broader context.

Let's represent the task complexity as $O(n)$, where $n$ is the task size. With a single LLM, complexity grows linearly with task size. On the other hand, in parallel task decomposition with $k$ subtasks and $k$ smaller language models, the initial decomposition has a constant complexity, $O(1)$. Each of the $k$ language models processes its assigned subtask independently, with a complexity of $O(n/k)$, assuming an even distribution.

After processing, the results from the $k$ language models need coordination and integration. This step's complexity is $O(k^m)$, where fully pairwise coordination gives $m = 2$, but in reality, $1 < m \le 2$.

Therefore, the overall complexity of using multiple language models with task decomposition can be expressed as

$$O_{k\text{-LLMs}} = O(1) + k \cdot O(n/k) + O(k^m) \rightarrow O(n) + O(k^m)$$

While the single-language-model approach has a complexity of $O(n)$, the multiple-language-model approach introduces an additional term, $O(k^m)$, due to coordination and integration overhead, with $1 < m \le 2$.

For small $k$ values and pairwise connectivity, the $O(k^m)$ overhead is negligible compared to $O(n)$, indicating the potential benefit of the multiple-language-model approach. However, as $k$ and $m$ grow, the $O(k^m)$ overhead becomes significant, potentially diminishing the gains of task decomposition. The optimal approach depends on the task, the available resources, and the trade-off between performance gains and coordination overhead. Improving technologies will reduce $m$, lowering the complexity of using multiple LLMs.
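To get a feel for when the coordination term starts to matter, the toy calculation below reads that term as k raised to the power m and sets every hidden constant to 1. That is a simplifying assumption: asymptotic notation drops constants, so the numbers are illustrative only, and the `budget` parameter and sample values are hypothetical.

```python
def overhead_ratio(n: float, k: int, m: float) -> float:
    """Coordination term k**m relative to the processing term n."""
    return (k ** m) / n


def max_subtasks(n: float, m: float, budget: float = 0.05) -> int:
    """Largest k whose coordination term k**m stays within budget * n."""
    k = 1
    while (k + 1) ** m <= budget * n:
        k += 1
    return k


# Hypothetical task size of 1,000 units.
for k in (3, 10, 30):
    print(k, overhead_ratio(1000, k, 2))

print(max_subtasks(1000, 2))    # fully pairwise coordination
print(max_subtasks(1000, 1.5))  # sparser coordination
```

Under these assumptions, lowering m from 2 to 1.5 roughly doubles the number of subtasks that fit within the same overhead budget, which is why a smaller m makes finer decomposition more attractive.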

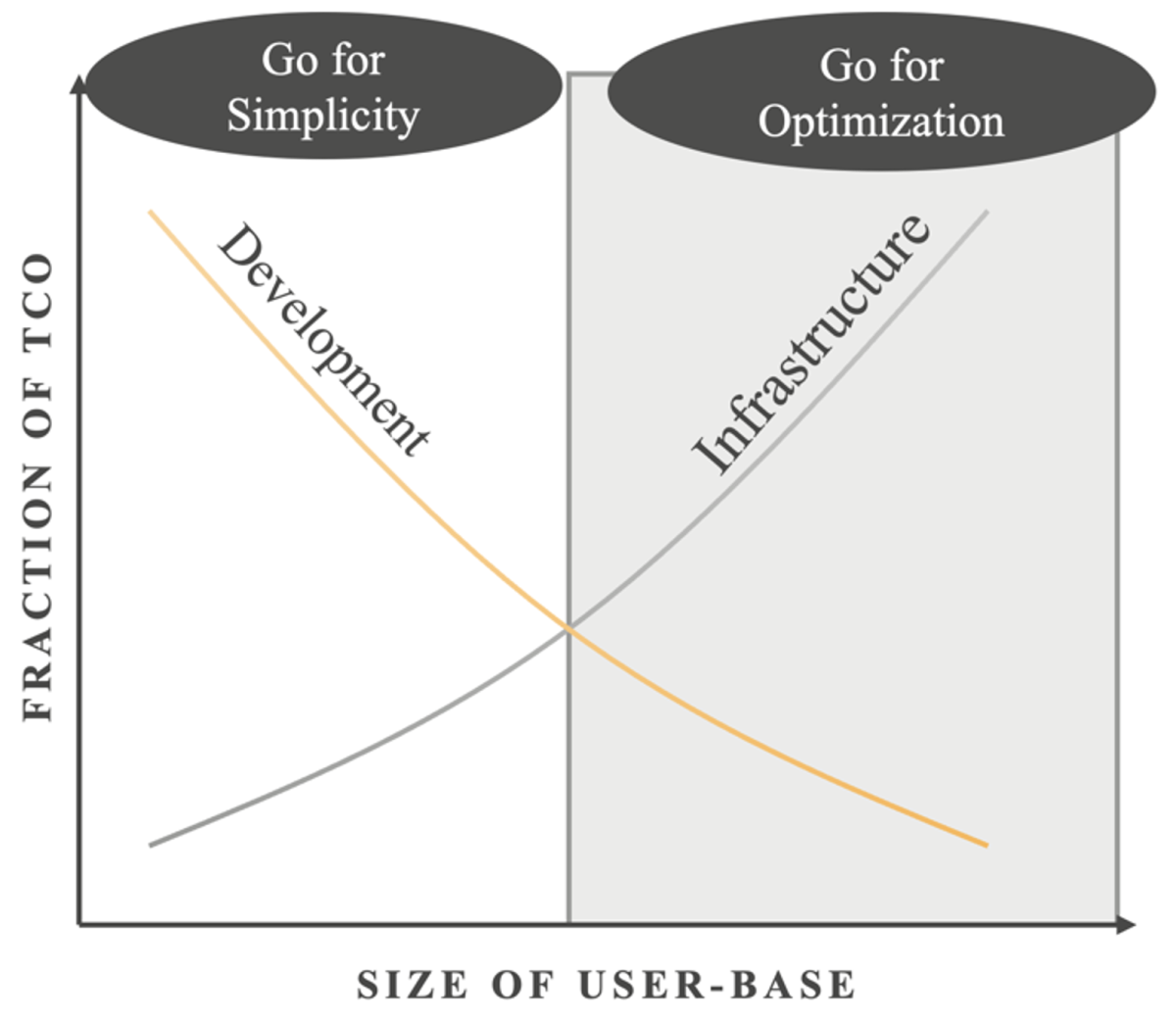

A mental model for cost and complexity

A helpful mental model for deciding whether to use task decomposition is to consider the estimated total cost of ownership (TCO) of your application. As your user base grows, infrastructure cost becomes dominant, and optimization methods like task decomposition can reduce TCO, despite the upfront engineering and science costs. For smaller applications, a simpler approach, such as selecting a large model, may be more appropriate and cost effective.
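One way to make this mental model concrete is a break-even calculation: how much traffic is needed before per-request savings repay the extra upfront engineering cost? The sketch below assumes a simple linear cost model, and all dollar figures are hypothetical placeholders rather than measured values.

```python
import math
from typing import Optional


def total_cost(upfront: float, cost_per_1k: float, thousands_of_requests: float) -> float:
    """Linear TCO model: fixed upfront cost plus usage-based cost."""
    return upfront + cost_per_1k * thousands_of_requests


def break_even_volume(extra_upfront: float, large_cost_per_1k: float,
                      small_cost_per_1k: float) -> Optional[int]:
    """Thousands of requests at which decomposition becomes cheaper.

    Returns None when the decomposed system is not cheaper per request,
    since it then never pays off on cost alone.
    """
    savings = large_cost_per_1k - small_cost_per_1k
    if savings <= 0:
        return None
    return math.ceil(extra_upfront / savings)


# Hypothetical figures: $50,000 of extra engineering, $10 per 1,000
# requests on a large model, $2 per 1,000 on the decomposed system.
volume = break_even_volume(50_000, 10, 2)
print(volume)  # thousands of requests needed to break even
```

Below the break-even volume, the single large model has the lower total cost in this model; above it, the decomposed system does.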

Overengineering versus novelty and simplicity

Task decomposition and the creation of agentic workflows with smaller LLMs can come at the cost of the novelty and creativity that larger, more powerful models often display. By “manually” breaking tasks into subtasks and relying on specialized models, the overall system may fail to capture the serendipitous connections and novel insights that can emerge from a more holistic approach. Additionally, the process of crafting intricate prompts to fit specific subtasks can result in overly complex and convoluted prompts, which may contribute to reduced accuracy and increased hallucinations.

Task decomposition using multiple, smaller, fine-tuned LLMs offers a promising approach to improving cost efficiency for complex AI applications, potentially providing substantial infrastructure cost savings compared to using a single, large, frontier model. However, care must be taken to avoid overengineering, as excessive decomposition can increase complexity and coordination overhead to the point of diminishing returns. Striking the right balance between cost, performance, simplicity, and retaining AI creativity will be key to unlocking the full potential of this promising approach.
