Most of the major advances in artificial intelligence in the past decade, in both industry and academia, have been the result of supervised deep learning, in which neural networks learn to perform tasks by analyzing thousands or even millions of training examples labeled by human annotators.

At Amazon, we’ve been exploring a range of ways to reduce human involvement in machine learning, such as semi-supervised learning. Another promising approach, from my Alexa AI team, is something we call self-learning.

An Alexa customer who’s unsatisfied with the response to a request may interrupt the response and rephrase the request. With our self-learning system, Alexa uses this type of implicit signal to deduce — without any human involvement — how to respond correctly to the initial request.

We deployed this system more than a year ago, and it’s currently correcting millions of misheard or misspoken requests every week. For instance, Alexa has learned to overwrite the request “play Sirius XM Chill” with “play Sirius Channel 53”.

Next month, at the annual meeting of the Association for the Advancement of Artificial Intelligence in New York, we’ll present a paper that explains how the system works.

Our fundamental insight: model sequences of rephrased requests as absorbing Markov chains. A Markov chain models a dynamic system as a sequence of states, each of which has a certain probability of transitioning to any of several other states. (Often, Markov chains aren’t really chains; the sequences of states can branch out to form more complex networks of transitions.)

The game Chutes and Ladders (a.k.a. Snakes and Ladders), for instance, can be modeled using a Markov chain. From any position on the board, there is a set of fixed probabilities (determined by die rolls) of moving to a finite number of other positions.
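As a minimal sketch of that idea, the Python below builds the transition matrix for a hypothetical toy board rather than the real 100-square game. The function name `chutes_and_ladders_matrix`, the board layout, and the rule that a roll overshooting the last square stops on it are all assumptions made for illustration.

```python
from fractions import Fraction

def chutes_and_ladders_matrix(n_squares, jumps, die_sides=6):
    """Transition matrix P for a toy board with squares 0..n_squares-1.

    P[s][t] is the probability of moving from square s to square t in one turn.
    `jumps` maps the bottom of a ladder (or the top of a chute) to where it leads.
    Assumption: a roll that overshoots the last square stops on the last square.
    """
    last = n_squares - 1
    P = [[Fraction(0)] * n_squares for _ in range(n_squares)]
    for s in range(n_squares):
        if s == last:
            P[s][s] = Fraction(1)  # game over: the final square never moves on
            continue
        for roll in range(1, die_sides + 1):
            t = min(s + roll, last)
            t = jumps.get(t, t)  # follow a chute or ladder if we land on one
            P[s][t] += Fraction(1, die_sides)
    return P

# Hypothetical 6-square board, 2-sided "die", ladder 1 -> 4, chute 3 -> 0.
P = chutes_and_ladders_matrix(6, {1: 4, 3: 0}, die_sides=2)
for row in P:
    print([str(p) for p in row])
```

Exact fractions keep every row summing to exactly 1, which makes the "fixed probabilities" of each position easy to check by eye.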

Absorbing states

An absorbing Markov chain has two distinctive properties: (1) it has at least one final, or absorbing, state, with zero probability of transitioning to any other state, and (2) some absorbing state is reachable from every other state. The Markov chain describing Chutes and Ladders is in fact an absorbing Markov chain, whose absorbing state is the last square on the board.
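Both properties can be checked mechanically on a transition matrix. This is a minimal sketch with hypothetical function names: it treats a state as absorbing when it returns to itself with probability 1, then walks the transition graph backward from the absorbing states to confirm that every state can reach one.

```python
from collections import deque

def absorbing_states(P):
    """Indices of states that transition to themselves with probability 1."""
    return [i for i, row in enumerate(P) if row[i] == 1]

def is_absorbing_chain(P):
    """True if P has at least one absorbing state and every state can reach one."""
    targets = absorbing_states(P)
    if not targets:
        return False
    n = len(P)
    # Breadth-first search over reversed edges, starting from the absorbing states.
    reached = set(targets)
    queue = deque(targets)
    while queue:
        j = queue.popleft()
        for i in range(n):
            if i not in reached and P[i][j] > 0:
                reached.add(i)
                queue.append(i)
    return len(reached) == n

print(is_absorbing_chain([[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]]))  # True
print(is_absorbing_chain([[0.5, 0.5, 0.0], [0.5, 0.5, 0.0], [0.0, 0.0, 1.0]]))  # False
```

In the second matrix, states 0 and 1 only ever pass control back and forth, so the absorbing state 2 is unreachable from them and the chain is not absorbing.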

We model sequences of rephrased requests as absorbing Markov chains. There are two absorbing states, success and failure, and our system infers which one a sequence ended in from a variety of clues. For instance, if the customer says “Alexa, stop” and issues no further requests, or if Alexa returns a generic failure response such as “Sorry, I don’t know that one,” the absorbing state is failure. If, on the other hand, the final request is to play a song, which is then allowed to play for a substantial amount of time, the absorbing state is success.
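The clues above can be read as a small labeling function. In this sketch the `FinalTurn` fields and the 30-second `MIN_PLAY_SECONDS` threshold are hypothetical stand-ins, since the article does not say how “a substantial amount of time” is measured; sequences with no clear signal are left unlabeled, which is also an assumption.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold for "allowed to play for a substantial amount of time".
MIN_PLAY_SECONDS = 30.0

@dataclass
class FinalTurn:
    """Hypothetical summary of the last turn in a sequence of rephrased requests."""
    said_stop: bool                        # customer said "Alexa, stop"
    more_requests: bool                    # customer issued further requests afterward
    generic_failure: bool                  # e.g. "Sorry, I don't know that one"
    play_seconds: Optional[float] = None   # playback duration, if something played

def absorbing_label(turn: FinalTurn) -> Optional[str]:
    """Map the final turn to the SUCCESS or FAILURE absorbing state, or None if unclear."""
    if turn.generic_failure or (turn.said_stop and not turn.more_requests):
        return "FAILURE"
    if turn.play_seconds is not None and turn.play_seconds >= MIN_PLAY_SECONDS:
        return "SUCCESS"
    return None

print(absorbing_label(FinalTurn(said_stop=False, more_requests=False,
                                generic_failure=False, play_seconds=240.0)))  # SUCCESS
```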

To standardize our representations of system states, we used the output of Alexa’s natural-language-understanding models, which describe utterances according to

- domains, such as music;

- intents, such as playing music; and

- slots, such as the names of particular albums or songs.

So the customer utterances “Play ‘Despicable Me’” and “Put on ‘Despicable Me’” produce the same system state: the music domain, the intent to play music, and the value “Despicable Me” assigned to the slot SongName.
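One way to make that standardization concrete is to turn each NLU interpretation into a hashable value, so that differently worded requests collapse into one key. This is a sketch under assumptions: the `State` class, the `make_state` helper, and the domain and intent labels `"Music"` and `"PlayMusic"` are hypothetical, and the NLU outputs are written by hand rather than produced by a real model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class State:
    """System state built from NLU output: a domain, an intent, and slot values."""
    domain: str
    intent: str
    slots: frozenset  # (slot_name, value) pairs; frozenset keeps the state hashable

def make_state(domain, intent, **slots):
    return State(domain, intent, frozenset(slots.items()))

# Hypothetical NLU outputs for two different wordings of the same request.
nlu_outputs = {
    "Play 'Despicable Me'": make_state("Music", "PlayMusic", SongName="Despicable Me"),
    "Put on 'Despicable Me'": make_state("Music", "PlayMusic", SongName="Despicable Me"),
}

print(len(set(nlu_outputs.values())))  # 1
```

Because frozen dataclasses compare and hash by value, both utterances map to a single state that can serve as a dictionary key when transitions are counted.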

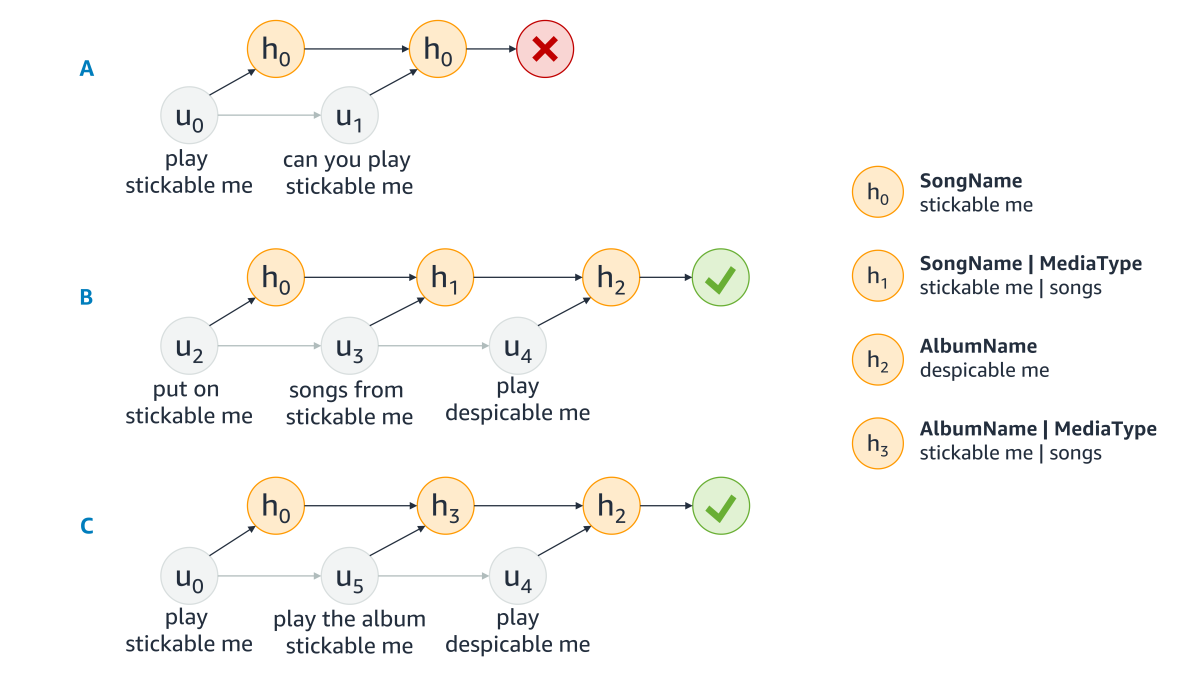

To test our approach, we first constructed separate absorbing Markov chains for millions of customer interactions with Alexa over a three-month period. Because we standardized the representation of system states, many of these chains involved the same relatively small pool of states, as in the figure above.

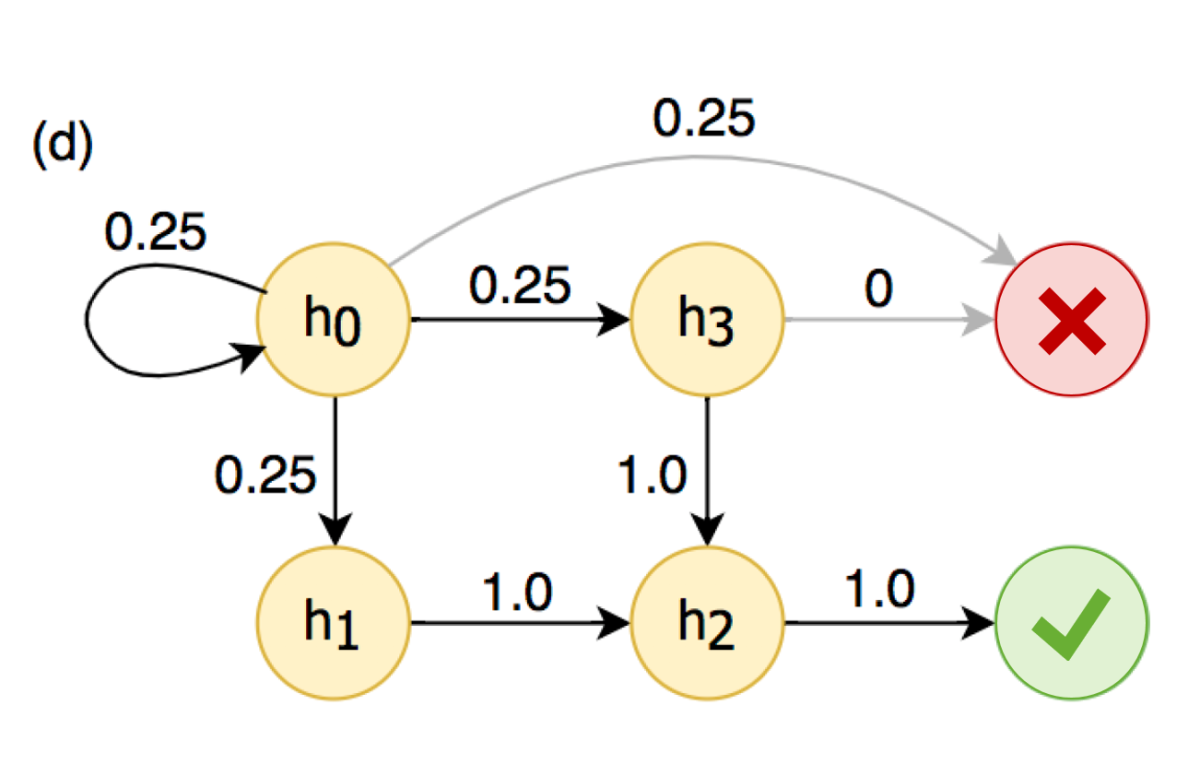

Next, we calculated the frequency with which any given state would follow any other, across all the absorbing Markov chains. In the example above, for instance, h0 has a 25% chance of being followed by each of h0, h1, h3, and the absorbing failure state.
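Counting “which state follows which” amounts to tallying consecutive pairs in every chain and normalizing per state. The three interaction sequences below are hypothetical, chosen only so that h0 reproduces the 25% split described above; `transition_frequencies` is an illustrative name, not part of any Alexa system.

```python
from collections import Counter, defaultdict

def transition_frequencies(chains):
    """Relative frequency with which each state is immediately followed by each other state."""
    counts = defaultdict(Counter)
    for chain in chains:
        for current, nxt in zip(chain, chain[1:]):
            counts[current][nxt] += 1
    freqs = {}
    for state, followers in counts.items():
        total = sum(followers.values())
        freqs[state] = {nxt: n / total for nxt, n in followers.items()}
    return freqs

# Hypothetical per-interaction chains, each ending in an absorbing state.
chains = [
    ["h0", "h0", "h1", "h2", "SUCCESS"],
    ["h0", "h3", "h2", "SUCCESS"],
    ["h0", "FAILURE"],
]
freqs = transition_frequencies(chains)
print(freqs["h0"])  # {'h0': 0.25, 'h1': 0.25, 'h3': 0.25, 'FAILURE': 0.25}
```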

Once we had collected these summary statistics for a given group of interrelated system states, we produced a new Markov chain that aggregated our findings. In the aggregated chain, the transition probabilities between states are defined by the frequency with which a given state follows another in our data.
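Written as a formula, with c(s → s′) denoting how many times state s′ immediately follows state s across all the per-interaction chains (notation introduced here for illustration), the aggregated chain’s transition probabilities are as follows, while the two absorbing states keep themselves with probability 1:

$$
P(s' \mid s) = \frac{c(s \to s')}{\sum_{s''} c(s \to s'')}, \qquad
P(\text{success} \mid \text{success}) = P(\text{failure} \mid \text{failure}) = 1.
$$

For h0 in the example, each of its four observed successors accounts for a quarter of its outgoing transitions, so, for instance, P(h1 | h0) = 1/4.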

From each state in the aggregated chain, we identify the path that leads to the success state with the highest probability. The penultimate state in that path (the one right before the success state) is the one the system should use to overwrite the first state in the path. In the example above, for instance, the system would learn to overwrite h0, h1, and h3, all of which misinterpret the customer as having said “stickable me,” with h2 (AlbumName = “Despicable Me”).
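A path’s probability is the product of its transition probabilities, so maximizing it is equivalent to minimizing the sum of −log p, which lets ordinary Dijkstra search find the best path. Using Dijkstra is our choice for this sketch, not necessarily how the production system does it. Only h0’s transitions come from the example; the probabilities for h1, h2, and h3 are hypothetical.

```python
import heapq
import math

SUCCESS = "SUCCESS"

def most_probable_path(chain, start, goal=SUCCESS):
    """Highest-probability path from start to goal, via Dijkstra on -log(p) weights."""
    best = {start: 0.0}
    previous = {}
    heap = [(0.0, start)]
    done = set()
    while heap:
        cost, state = heapq.heappop(heap)
        if state in done:
            continue
        done.add(state)
        if state == goal:
            path = [goal]
            while path[-1] != start:
                path.append(previous[path[-1]])
            return path[::-1], math.exp(-cost)
        for nxt, p in chain.get(state, {}).items():
            if p <= 0:
                continue
            new_cost = cost - math.log(p)  # all weights are >= 0 since p <= 1
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                previous[nxt] = state
                heapq.heappush(heap, (new_cost, nxt))
    return None, 0.0

def learn_rewrites(chain):
    """Map each state to the penultimate state on its most probable path to SUCCESS."""
    rewrites = {}
    for state in chain:
        if state == SUCCESS:
            continue
        path, _ = most_probable_path(chain, state)
        if path is None:
            continue  # SUCCESS is unreachable (e.g. the FAILURE state)
        penultimate = path[-2]
        if penultimate != state:  # a state never rewrites to itself
            rewrites[state] = penultimate
    return rewrites

# Aggregated chain; h1, h2, and h3 probabilities are hypothetical.
chain = {
    "h0": {"h0": 0.25, "h1": 0.25, "h3": 0.25, "FAILURE": 0.25},
    "h1": {"h2": 0.8, "FAILURE": 0.2},
    "h3": {"h2": 0.6, "FAILURE": 0.4},
    "h2": {SUCCESS: 0.9, "FAILURE": 0.1},
    "FAILURE": {"FAILURE": 1.0},
    SUCCESS: {SUCCESS: 1.0},
}
print(most_probable_path(chain, "h0")[0])  # ['h0', 'h1', 'h2', 'SUCCESS']
print(learn_rewrites(chain))  # {'h0': 'h2', 'h1': 'h2', 'h3': 'h2'}
```

State h2 produces no rule: its best path is h2 → success, so its penultimate state is h2 itself.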

Because this procedure is wholly automated, it may sometimes learn invalid substitutions. For instance, if customers who can’t coax Alexa into playing a desired song frequently bail out to the same chart-topping alternative, the system may learn to substitute that alternative for the original request. To guard against such errors, we also developed, based on user feedback, a blacklist of substitutions that the system should not perform. Our analyses show that for every invalid substitution the system learns, it learns 12 valid ones, a trade-off worth making.
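Applying the blacklist can be as simple as filtering learned rules before they are used to overwrite requests. This sketch assumes the blacklist is stored as (source, target) pairs and shows states as plain strings; the function names and the second rewrite rule are hypothetical, while the Sirius XM rule echoes the example given earlier.

```python
def apply_blacklist(rewrites, blacklist):
    """Keep only learned rewrites whose (source, target) pair is not blacklisted."""
    return {src: dst for src, dst in rewrites.items() if (src, dst) not in blacklist}

def rewrite_request(state, rewrites):
    """Overwrite a request's state with its learned rewrite, or leave it unchanged."""
    return rewrites.get(state, state)

# Hypothetical learned rewrites; states shown as plain strings for brevity.
learned = {
    "play sirius xm chill": "play sirius channel 53",
    "play some obscure b-side": "play this week's number one hit",
}
# Hypothetical blacklist entry derived from user feedback.
blacklist = {("play some obscure b-side", "play this week's number one hit")}

active = apply_blacklist(learned, blacklist)
print(rewrite_request("play sirius xm chill", active))      # play sirius channel 53
print(rewrite_request("play some obscure b-side", active))  # play some obscure b-side
```

At the reported ratio of 12 valid substitutions for every invalid one, about 92% (12 of every 13) of learned substitutions are valid.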