Dominik Janzing, a principal research scientist with Amazon Web Services, is a coauthor on four of Amazon’s 18 papers at this year’s International Conference on Machine Learning (ICML), and all four of those papers, like most of Janzing’s papers, have the word “causal” in the title.

Our 2012 paper ‘On causal and anticausal learning’ just received a Test of Time Honorable Mention at @icmlconf #ICML2022: https://t.co/gc1FZYSOyP. I am really grateful, and would like to use this occasion for some thoughts on causality and machine learning:

— Bernhard Schölkopf (@bschoelkopf) July 20, 2022

“It's still a small fraction of papers that refer to causality,” Janzing says, “but it is increasing. If you look at the long-term trend, it's clearly increasing, and I strongly believe that this trend will continue for a while. My prediction is causality will play an even bigger role than now.”

The burgeoning interest in causality among machine learning researchers grew out of related work in neighboring fields, Janzing explains.

“If one looks at the traditional questions of causality, these were about the causal effect of a certain intervention,” Janzing says. “For instance, there’s a patient; the patient gets a drug or not. What's the influence on the recovery, given that there are further influencing factors, called covariates?” That’s the sense of causality that has been central to experimental design and economics.

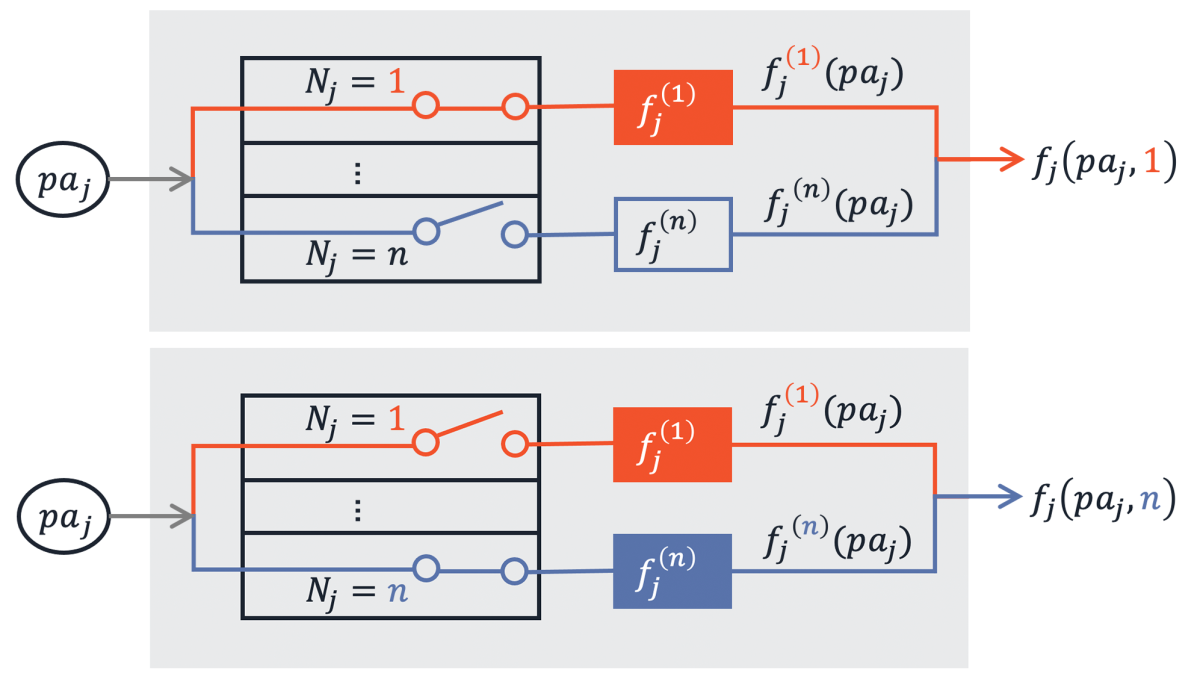

“Then there was a different community, the graphical-models community, that already modeled more complex systems,” Janzing continues. “The graphical model on a large number of variables can be used to compute the average effect of one specific variable on another one. But it also has the more general goal of decomposing complex systems into understandable mechanisms. I looked at, for instance, problems of causal discovery — how to infer the graphical model from passive observations. That’s still a very ambitious goal. I am optimistic that for this problem also, progress will come from stronger connections to machine learning.”

Enter machine learning

Sometime around 2010, Janzing says, “it became more apparent that causality matters for a lot of different machine learning problems, because it can make a difference whether one just wants to infer statistical relations or whether one wants to infer the generating process.”

There are several hot topics in machine learning whose relations to causality are currently being explored, Janzing says. These include explainable AI, fairness, and learning data representations that are robust to distribution shifts.

“Does explainable AI entail causal explanations by definition?” Janzing asks. “Are semantically meaningful representations necessarily causal representations? If yes, in what sense?

“While causal questions enter all these discussions, we may also better understand what causality means. Sometimes people speak about ‘the true causal graph’ as some absolute truth that is set in stone. I believe that causality is often something context-dependent, in particular in domains where variables come from strong aggregation, like macroeconomic quantities. I feel that the aphorism ‘all models are wrong, but some are useful’ is not yet properly appreciated when researchers talk about causal models, probably because the purpose of a graphical model is not necessarily to be useful for one specific task, but for a general understanding of what goes on in a complex system.”

Definitions

The problem of understanding what causality means is not just philosophical, Janzing explains. It also has immediate consequences for research.

“Whenever we work on applications, we clearly see there is a concept that needs to be defined,” he says. “My students are sometimes surprised that these concepts don't exist yet, because it sounds so obvious that they should exist. But they don't. Which shows that the field is young.”

For instance, one of Janzing’s papers at ICML, “Causal structure-based root cause analysis of outliers,” presents a method for quantifying the extent to which different root causes contribute to an outcome. But first it presents a formal definition of the root cause of an extreme event, “which we didn’t find anywhere,” Janzing says.

Just as many of the field’s fundamental concepts still await formal definitions (“mostly on top of the graphical-model framework,” Janzing says), it remains to be seen which mathematical tools will prove most useful for causal analysis. Work on causal machine learning so far has drawn on statistics, functional analysis (especially kernel methods), linear algebra, Shannon information theory, algorithmic information theory, Fourier analysis, group theory, and game theory.

“If I look at the mathematical methods applied in causal inference, then I would say, nobody knows which mathematical methods will mainly be used in causality in 10 years,” Janzing says. “I don't see any math to be irrelevant for that. So it seems to me that the field is still so open and far from settling already to some specific topics, type of questions, and methods.”