Although deep neural networks have enabled accurate large-vocabulary speech recognition, training them requires thousands of hours of transcribed data, which is time-consuming and expensive to collect. So Amazon scientists have been investigating techniques that will let Alexa learn with minimal human involvement, techniques that fall in the categories of unsupervised and semi-supervised learning.

At this year’s International Conference on Acoustics, Speech, and Signal Processing, my colleagues and I are presenting a semi-supervised-learning approach to improving speech recognition performance — especially in noisy environments, where existing systems can still struggle.

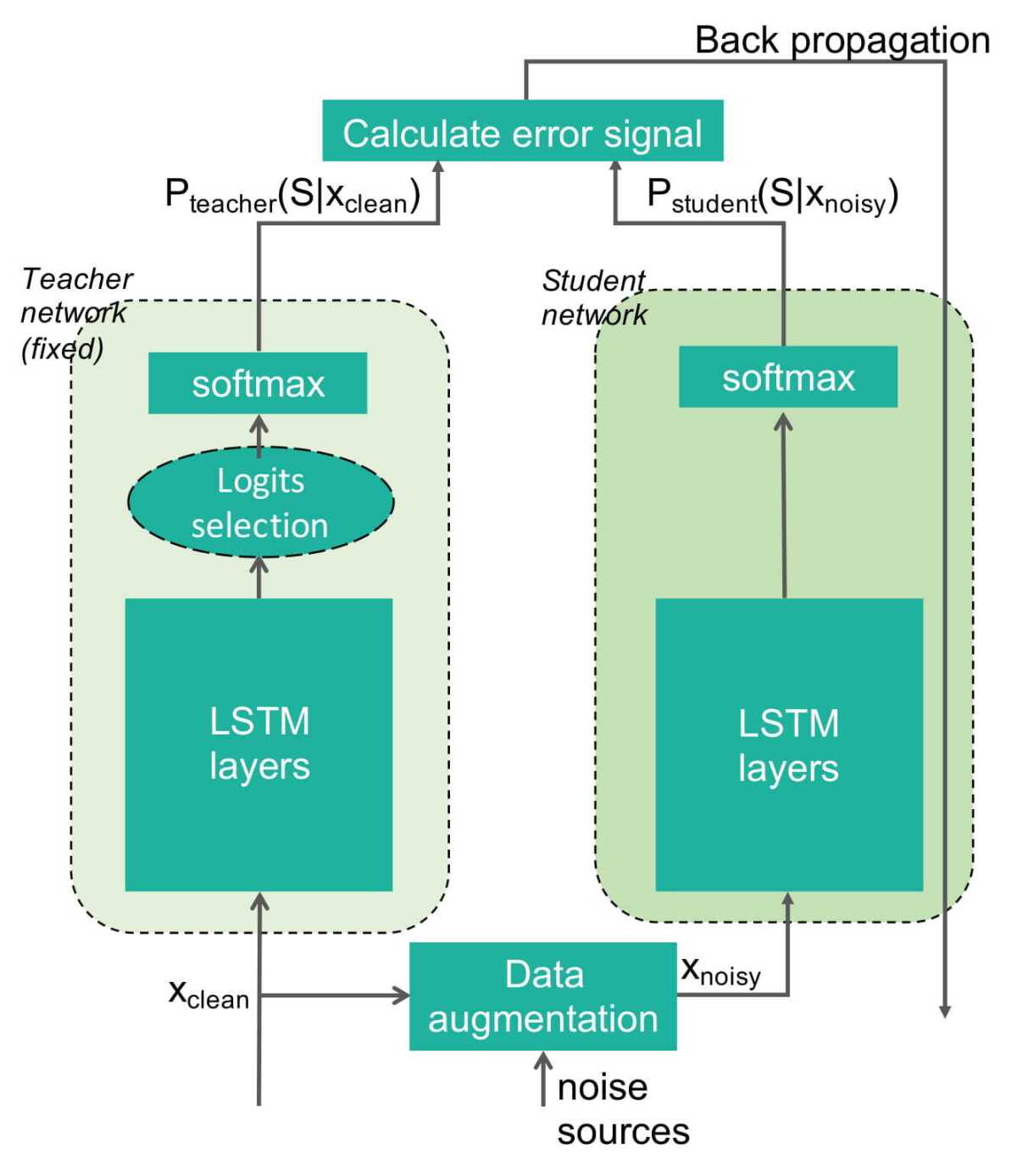

We first train a speech recognizer — the “teacher” model — on 800 hours of annotated data and use it to “softly” label another 7,200 hours of unannotated data. Then we artificially add noise to the same dataset and use that, together with the labels generated by the teacher model, to train a second speech recognizer — the “student” model. We hope to make the behavior of the student model in the noisy domain approach that of the teacher model in the clean domain, and thus improve the noise robustness of the speech recognition system.

On test data that we produced by simultaneously playing recorded speech and media sounds through loudspeakers and re-recording the combined acoustic signal, our system shows a 20% relative reduction in word error rate versus a system trained only on the clean, annotated data.
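To make "relative reduction" concrete, here is a minimal sketch of the arithmetic. The function name and the two error rates are hypothetical, chosen only to illustrate the calculation; they are not figures from our experiments.

```python
def relative_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction by which new_wer improves on baseline_wer."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - new_wer) / baseline_wer


# Hypothetical word error rates, used only to show the arithmetic:
# going from 10% to 8% is a 2-point absolute drop but a 20% relative one.
example = relative_reduction(0.10, 0.08)
print(f"{example:.0%}")
```

A relative figure depends on the baseline, so the same percentage can correspond to very different absolute changes in word error rate.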

An automatic speech recognition system has three main components: an acoustic model, a pronunciation model, and a language model. The inputs to the acoustic model are short snippets of audio called frames. For every input frame, the output is thousands of probabilities. Each probability indicates the likelihood that the frame belongs to a low-level phonetic representation called a senone.
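The per-frame output can be pictured with a small sketch. It assumes the acoustic model ends in a softmax layer that turns raw scores into probabilities, which is common but is an assumption here; the five scores are made up, whereas a real model emits thousands of values per frame.

```python
import math


def softmax(scores):
    """Turn raw per-senone scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# One frame with five hypothetical senone scores.
frame_scores = [2.0, 0.5, -1.0, 0.0, -3.0]
posteriors = softmax(frame_scores)

# Index of the senone the model considers most likely for this frame.
best_senone = max(range(len(posteriors)), key=lambda i: posteriors[i])
```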

In training the student model, we keep only the highest-confidence senones from the teacher, which turns out to be quite an effective approach.

The outputs of the acoustic model pass to the pronunciation model, which converts senone sequences into possible words, and those pass to the language model, which encodes the probabilities of word sequences. All three components of the system work together to find the most likely word sequence given the audio input.

Both our teacher and student models are acoustic models, and we experiment with two criteria for optimizing them. With the first, the models are optimized to maximize accuracy on a frame-by-frame basis, at the level of the acoustic model. The other training criterion is sequence-discriminative: both the teacher and student models are further optimized to minimize error across sequences of outputs, at the levels of not only the acoustic model but the pronunciation model and language model as well.
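As an illustration of the frame-level criterion, the sketch below scores a student against the teacher's soft labels using cross-entropy. Cross-entropy is a common choice for this kind of training, but it is an assumption here, as are the function names. The sequence-discriminative criterion is not shown.

```python
import math


def frame_cross_entropy(teacher_probs, student_probs, eps=1e-12):
    """Cross-entropy of one frame: low when the student matches the teacher."""
    return -sum(
        t * math.log(s + eps) for t, s in zip(teacher_probs, student_probs)
    )


def utterance_loss(teacher_frames, student_frames):
    """Average the per-frame loss over all frames of an utterance."""
    losses = [
        frame_cross_entropy(t, s)
        for t, s in zip(teacher_frames, student_frames)
    ]
    return sum(losses) / len(losses)


# Toy three-senone example: one student agrees with the teacher, one does not.
teacher = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
agreeing_student = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
disagreeing_student = [[0.1, 0.2, 0.7], [0.8, 0.1, 0.1]]

loss_agree = utterance_loss(teacher, agreeing_student)
loss_disagree = utterance_loss(teacher, disagreeing_student)
```

Each frame is scored independently, which is what distinguishes this criterion from one that evaluates whole sequences of outputs.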

We find that sequence training makes the teacher models more accurate, separately from its effect on the student models. It also slightly increases the relative improvement offered by the student models.

To add noise to the training data, we used a collection of noise samples, most of which involved media playback — such as music or television audio — in the background. For each speech example in the training set, we randomly selected one to three noise samples to add to it. Those samples were processed to simulate closed-room acoustics, with the properties of the simulated room varying randomly from one training example to the next.
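The corruption procedure can be sketched roughly as follows. This is a toy version under stated assumptions: the room is simulated by convolving each noise sample with a short random impulse response, and the noise is scaled to a fixed signal-to-noise ratio. The `snr_db` parameter, the impulse-response model, and all function names are hypothetical and stand in for details the post does not specify.

```python
import math
import random


def rms(signal):
    """Root-mean-square level of a signal."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def convolve(signal, impulse_response):
    """Direct-form convolution; fine for short toy signals."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out


def random_room_response(rng, length=8, decay=0.5):
    """Toy room: a direct path plus randomly signed, decaying reflections."""
    return [1.0] + [rng.uniform(-1, 1) * decay**k for k in range(1, length)]


def corrupt(speech, noise_pool, rng, snr_db=10.0):
    """Add one to three reverberant noise samples to a speech signal."""
    mixed = list(speech)
    for noise in rng.sample(noise_pool, rng.randint(1, 3)):
        # A fresh random room for every noise sample and every example.
        room = random_room_response(rng)
        reverberant = convolve(noise, room)[: len(speech)]
        level = rms(reverberant)
        if level == 0.0:
            continue  # a silent noise sample adds nothing
        gain = rms(speech) / (level * 10 ** (snr_db / 20))
        for i, x in enumerate(reverberant):
            mixed[i] += gain * x
    return mixed


rng = random.Random(0)
speech = [math.sin(0.1 * i) for i in range(200)]  # stand-in for speech
noise_pool = [[rng.uniform(-1, 1) for _ in range(200)] for _ in range(4)]
noisy_speech = corrupt(speech, noise_pool, rng)
```

Drawing a new room response for each example keeps the student from adapting to one particular acoustic setting.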

For every frame of audio data that passes to an acoustic model, most of the output probabilities are extremely low. So when we use the teacher’s output to train the student, we keep only the highest probabilities. We experimented with different numbers of target probabilities, from five to 40.
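Keeping only the highest probabilities can be sketched as below. Renormalizing the retained values so that they sum to 1 is an assumption of this sketch, as is the function name; the toy frame has five senones rather than thousands.

```python
def keep_top_k(posteriors, k):
    """Keep the k largest teacher probabilities for one frame.

    Returns a mapping from senone index to renormalized probability;
    every senone not in the mapping is treated as having zero target mass.
    """
    ranked = sorted(
        range(len(posteriors)), key=lambda i: posteriors[i], reverse=True
    )
    top = ranked[:k]
    total = sum(posteriors[i] for i in top)
    return {i: posteriors[i] / total for i in top}


# Toy teacher output for one frame.
teacher_frame = [0.5, 0.3, 0.1, 0.05, 0.05]
targets = keep_top_k(teacher_frame, k=2)
```

Storing only a few index-probability pairs per frame also makes the teacher's labels far more compact than the full output vector.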

Intriguingly, this modification by itself improved the performance of the student model relative to the teacher, even on clean test data. Training the student to ignore improbable hypotheses enabled it to devote more resources to distinguishing among probable ones.

In addition to limiting the number of target probabilities, we also applied a smoothing function to them, which evened them out somewhat, boosting the lows and trimming the highs. The degree of smoothing is defined by a quantity called temperature. We found that a temperature of 2, together with keeping the 20 top probabilities, yielded the best results.
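One common way to implement temperature smoothing is to raise each probability to the power 1/T and renormalize, which is equivalent to dividing the underlying scores by T before a softmax. The sketch below takes that form as an assumption; the exact function and the order of operations used in our system may differ.

```python
def smooth(probs, temperature=2.0):
    """Flatten a distribution: higher temperature means more even values."""
    powered = [p ** (1.0 / temperature) for p in probs]
    total = sum(powered)
    return [p / total for p in powered]


# Toy distribution over three retained senones.
retained = [0.8, 0.15, 0.05]
smoothed = smooth(retained, temperature=2.0)

# A temperature of 1 leaves the distribution unchanged.
unchanged = smooth(retained, temperature=1.0)
```

With a temperature above 1, the largest value shrinks and the smallest grows, while the ranking of the senones stays the same.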

Apart from the dataset produced by re-recording overlapping audio, we used two other datasets to test our system. One was a set of clean audio samples, and the other was a set of samples to which we’d added noise through the same procedure we used to create the training data.

Our best-performing student model was first optimized according to the per-frame output from the teacher model, using the entire 8,000 hours of data with noise added, then sequence-trained on the 800 hours of annotated data. Relative to a teacher model sequence-trained on 800 hours of hand-labeled clean data, it yielded a 10% decrease in error rate on the clean test data, a 29% decrease on the noisy test data, and a 20% decrease on the re-recorded noisy data.

Acknowledgments: Ladislav Mosner, Anirudh Raju, Sree Hari Krishnan Parthasarathi, Kenichi Kumatani, Shiva Sundaram, Roland Maas, Björn Hoffmeister