The Earth is a complex system. Variabilities ranging from regular events like temperature fluctuations to extreme events like drought, hailstorms, and the El Niño–Southern Oscillation (ENSO) phenomenon can influence crop yields, delay airline flights, and cause floods and forest fires. Precise and timely forecasting of these variabilities can help people take necessary precautions to avoid crises or better utilize natural resources such as wind and solar energy.

The success of transformer-based models in other AI domains has led researchers to attempt applying them to Earth system forecasting, too. But these efforts have encountered several major challenges. Foremost among these is the high dimensionality of Earth system data: naively applying the transformer’s quadratic-complexity attention mechanism is too computationally expensive.

Most existing machine-learning-based Earth system models also output single point forecasts, which are often averages across wide ranges of possible outcomes. Sometimes, however, it is more important to know that there’s a 10% chance of an extreme weather event than to know the average outcome. And finally, typical machine learning models don’t have guardrails imposed by physical laws or historical precedents and can produce outputs that are unlikely or even impossible.

In recent work, our team at Amazon Web Services has tackled all these challenges. Our paper “Earthformer: Exploring space-time transformers for Earth system forecasting”, published at NeurIPS 2022, proposes a novel attention mechanism we call cuboid attention, which enables transformers to process large-scale, multidimensional data much more efficiently.

And in “PreDiff: Precipitation nowcasting with latent diffusion models”, to appear at NeurIPS 2023, we show that diffusion models can both enable probabilistic forecasts and impose constraints on model outputs, making them much more consistent with both the historical record and the laws of physics.

Earthformer and cuboid attention

The heart of the transformer model is its “attention mechanism”, which enables it to weigh the importance of different parts of an input sequence when processing each element of the output sequence. This mechanism allows transformers to capture spatiotemporally long-range dependencies and relationships in the data, which have not been well modeled by conventional convolutional-neural-network- or recurrent-neural-network-based architectures.

Earth system data, however, is inherently high-dimensional and spatiotemporally complex. In the SEVIR dataset studied in our NeurIPS 2022 paper, for instance, each data sequence consists of 25 frames of data captured at five-minute intervals, each frame having a spatial resolution of 384 x 384 pixels. Using the conventional transformer attention mechanism to process such high-dimensional data would be extremely expensive.

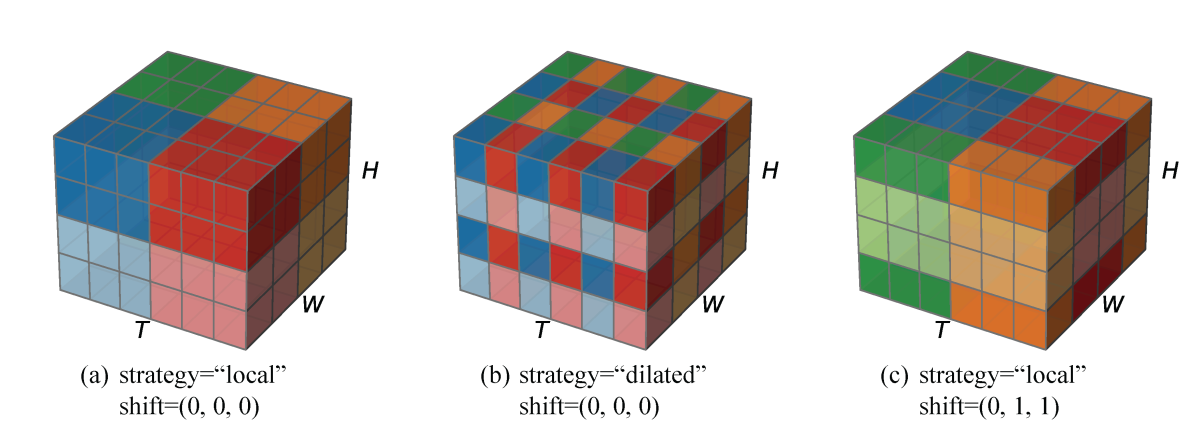

In our NeurIPS 2022 paper, we proposed a novel attention mechanism we call cuboid attention, which decomposes input tensors into cuboids, or higher-dimensional analogues of cubes, and applies attention at the level of each cuboid. Since the computational cost of attention scales quadratically with the number of elements attended over, applying attention locally in each cuboid is much more computationally tractable than trying to compute attention weights across the entire tensor at once. For instance, decomposing along the temporal axis can reduce the cost by a factor of 384² for the SEVIR dataset, since each frame has a spatial resolution of 384 x 384 pixels.
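The size of that saving can be checked with a little arithmetic. The sketch below is illustrative rather than the paper's implementation: it assumes that one attention call costs the square of the number of elements it attends over, and the helper names `attention_cost` and `cuboid_attention_cost` are hypothetical.

```python
# Illustrative cost model (an assumption): one attention call over n elements
# costs n**2 pairwise interactions. Constant factors and projections are ignored.
T, H, W = 25, 384, 384  # SEVIR sequence shape quoted in the text


def attention_cost(num_elements: int) -> int:
    """Pairwise-interaction count for one attention call."""
    return num_elements ** 2


def cuboid_attention_cost(shape, cuboid) -> int:
    """Total cost when `shape` is tiled by non-overlapping cuboids of size `cuboid`."""
    if any(s % c for s, c in zip(shape, cuboid)):
        raise ValueError("cuboid size must divide the tensor shape")
    num_cuboids = 1
    cuboid_elements = 1
    for s, c in zip(shape, cuboid):
        num_cuboids *= s // c
        cuboid_elements *= c
    return num_cuboids * attention_cost(cuboid_elements)


full_cost = attention_cost(T * H * W)
# Decomposing along the temporal axis: each cuboid spans all T frames at one pixel.
temporal_cost = cuboid_attention_cost((T, H, W), (T, 1, 1))
reduction = full_cost // temporal_cost
print(reduction)  # 147456, i.e. 384 * 384
```

Under this cost model the reduction factor equals the number of pixels per frame, which is where the 384² figure comes from.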

Of course, such decomposition introduces a limitation: attention functions independently within each cuboid, with no communication between cuboids. To address this issue, we also compute global vectors that summarize the cuboids’ attention weights. Other cuboids can factor the global vectors into their own attention weight computations.
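A toy sketch can make the role of the global vectors concrete. It is not Earthformer's code: it uses plain Python lists, omits learned projections and multiple heads, and simplifies the update order by refreshing the global vectors before the local step. The function names `attend` and `cuboid_attention` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, values))
                    for i in range(len(values[0]))])
    return out


def cuboid_attention(cuboids, global_vecs=None):
    """cuboids: list of cuboids, each a list of token vectors.

    Without global vectors, every cuboid is processed in isolation.
    With them, the global vectors first summarize all tokens, and each
    cuboid then attends to its own tokens plus those summaries.
    """
    if global_vecs is not None:
        all_tokens = [tok for cuboid in cuboids for tok in cuboid]
        global_vecs = attend(global_vecs, all_tokens, all_tokens)
    outputs = []
    for tokens in cuboids:
        context = tokens + (global_vecs or [])
        outputs.append(attend(tokens, context, context))
    return outputs
```

In this sketch, changing the contents of one cuboid leaves the outputs of the other cuboids untouched unless `global_vecs` is supplied, which is the communication gap the global vectors are meant to close.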

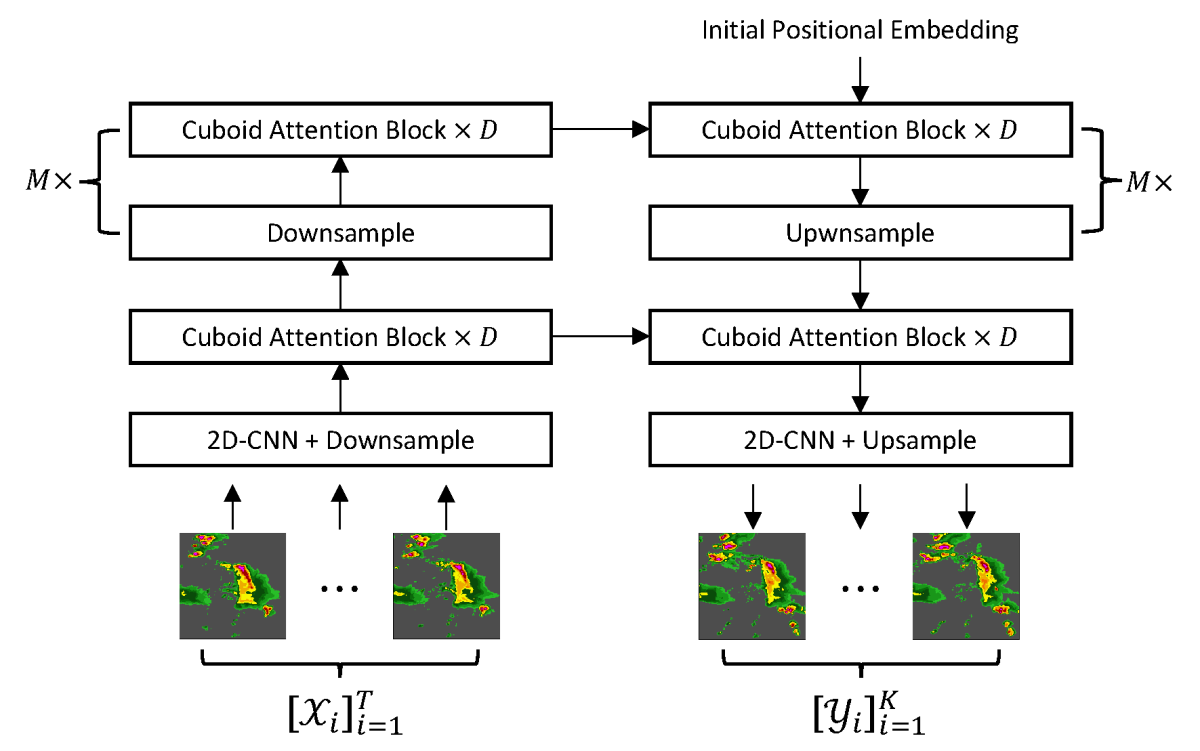

We call our transformer-based model with cuboid attention Earthformer. Earthformer adopts a hierarchical encoder-decoder architecture, which gradually encodes the input sequence to multiple levels of representations and generates the prediction via a coarse-to-fine procedure. Each hierarchy includes a stack of cuboid attention blocks. By stacking multiple cuboid attention layers with different configurations, we are able to efficiently explore effective space-time attention.

We experimented with multiple methods for decomposing an input tensor into cuboids. Our empirical studies show that the “axial” pattern, which stacks three unshifted local decompositions along the temporal, height, and width axes, is both effective and efficient. It achieves the best performance while avoiding the quadratic computational cost of vanilla attention.
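As a rough illustration of why the axial pattern is cheap, the sketch below lists the three cuboid sizes it implies for a SEVIR-shaped tensor and compares their combined cost with full attention. The cost model (elements squared per attention call) and the helper names `axial_cuboids` and `layer_cost` are assumptions made for this example.

```python
import math


def axial_cuboids(shape):
    """One cuboid size per layer, each spanning a single full axis."""
    return [tuple(s if i == axis else 1 for i, s in enumerate(shape))
            for axis in range(len(shape))]


def layer_cost(shape, cuboid):
    """Cost of one cuboid attention layer under an elements-squared model.

    There are N / c cuboids of c elements each, so the cost is (N / c) * c**2 = N * c.
    """
    return math.prod(shape) * math.prod(cuboid)


shape = (25, 384, 384)  # (T, H, W) for SEVIR, as quoted in the text
cuboids = axial_cuboids(shape)  # [(25, 1, 1), (1, 384, 1), (1, 1, 384)]
axial_cost = sum(layer_cost(shape, c) for c in cuboids)
full_cost = math.prod(shape) ** 2
print(f"full / axial cost ratio: {full_cost / axial_cost:.0f}")
```

Under these assumptions the three axial layers together cost N·(T + H + W) interactions for a tensor of N elements, compared with N² for full attention.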

Experimental results

To evaluate Earthformer, we compared it to six state-of-the-art spatiotemporal forecasting models on two real-world datasets: SEVIR, for the task of continuously predicting precipitation probability in the near future (“nowcasting”), and ICAR-ENSO, for forecasting sea surface temperature (SST) anomalies.

On SEVIR, the evaluation metrics we used were standard mean squared error (MSE) and critical success index (CSI), a standard metric in precipitation nowcasting evaluation. CSI is also known as intersection over union (IoU): at different thresholds, it's denoted as CSI-thresh; their mean is denoted as CSI-M.
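For readers unfamiliar with the metric, CSI at a given threshold is hits / (hits + misses + false alarms). The following is a minimal sketch over flat lists of pixel values. It assumes a pixel counts as an event when its value is at or above the threshold, and it uses only the two thresholds that appear in the results table (181 and 219), whereas CSI-M in the paper averages over the paper's own set of thresholds. The function names are hypothetical.

```python
def csi(pred, target, threshold):
    """Critical success index: hits / (hits + misses + false alarms)."""
    hits = misses = false_alarms = 0
    for p, t in zip(pred, target):
        pred_event = p >= threshold    # assumption: inclusive threshold
        true_event = t >= threshold
        if pred_event and true_event:
            hits += 1
        elif true_event:
            misses += 1
        elif pred_event:
            false_alarms += 1
    denom = hits + misses + false_alarms
    # With no events predicted or observed, the score is undefined.
    return hits / denom if denom else float("nan")


def mean_csi(pred, target, thresholds):
    """Average CSI over several thresholds (the idea behind CSI-M)."""
    return sum(csi(pred, target, th) for th in thresholds) / len(thresholds)


pred = [0, 200, 230, 190, 50]
target = [0, 185, 220, 100, 190]
print(csi(pred, target, 181))               # 0.5: 2 hits, 1 miss, 1 false alarm
print(csi(pred, target, 219))               # 1.0: 1 hit, nothing else
print(mean_csi(pred, target, [181, 219]))   # 0.75
```

Because correct non-events are excluded from the denominator, CSI is not inflated by the large rain-free areas typical of radar frames.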

On both MSE and CSI, Earthformer outperformed all six baseline models across the board. Earthformer with global vectors also uniformly outperformed the version without global vectors.

| Model | #Params. (M) | GFLOPS | CSI-M ↑ | CSI-219 ↑ | CSI-181 ↑ | MSE (10⁻³) ↓ |
|---|---|---|---|---|---|---|
| Persistence | - | - | 0.2613 | 0.0526 | 0.0969 | 11.5338 |
| UNet | 16.6 | 33 | 0.3593 | 0.0577 | 0.1580 | 4.1119 |
| ConvLSTM | 14.0 | 527 | 0.4185 | 0.1288 | 0.2482 | 3.7532 |
| PredRNN | 46.6 | 328 | 0.4080 | 0.1312 | 0.2324 | 3.9014 |
| PhyDNet | 13.7 | 701 | 0.3940 | 0.1288 | 0.2309 | 4.8165 |
| E3D-LSTM | 35.6 | 523 | 0.4038 | 0.1239 | 0.2270 | 4.1702 |
| Rainformer | 184.0 | 170 | 0.3661 | 0.0831 | 0.1670 | 4.0272 |
| Earthformer w/o global | 13.1 | 257 | 0.4356 | 0.1572 | 0.2716 | 3.7002 |
| Earthformer | 15.1 | 257 | 0.4419 | 0.1791 | 0.2848 | 3.6957 |

On ICAR-ENSO, we report the correlation skill of the three-month-moving-averaged Nino3.4 index, which evaluates the accuracy of SST anomaly prediction across a certain area (170°-120°W, 5°S-5°N) of the Pacific. Earthformer consistently outperformed the baselines on all reported evaluation metrics, and the version using global vectors further improved performance.

| Model | #Params. (M) | GFLOPS | C-Nino3.4-M ↑ | C-Nino3.4-WM ↑ | MSE (10⁻⁴) ↓ |
|---|---|---|---|---|---|
| Persistence | - | - | 0.3221 | 0.447 | 4.581 |
| UNet | 12.1 | 0.4 | 0.6926 | 2.102 | 2.868 |
| ConvLSTM | 14.0 | 11.1 | 0.6955 | 2.107 | 2.657 |
| PredRNN | 23.8 | 85.8 | 0.6492 | 1.910 | 3.044 |
| PhyDNet | 3.1 | 5.7 | 0.6646 | 1.965 | 2.708 |
| E3D-LSTM | 12.9 | 99.8 | 0.7040 | 2.125 | 3.095 |
| Rainformer | 19.2 | 1.3 | 0.7106 | 2.153 | 3.043 |
| Earthformer w/o global | 6.6 | 23.6 | 0.7239 | 2.214 | 2.550 |
| Earthformer | 7.6 | 23.9 | 0.7329 | 2.259 | 2.546 |
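The correlation skill behind the C-Nino3.4 columns rests on two simple operations: a three-month moving average of the index and a Pearson correlation between predicted and observed values. The sketch below shows those two steps for a single forecast lead time. How the paper aggregates across lead times into the M and WM variants is not reproduced here, and the function names and sample numbers are hypothetical.

```python
import math


def moving_average(series, window=3):
    """Simple moving average; returns len(series) - window + 1 values."""
    return [sum(series[i:i + window]) / window
            for i in range(len(series) - window + 1)]


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Made-up monthly index values, for illustration only.
observed = [0.2, 0.5, 0.9, 1.1, 0.7, 0.1, -0.4, -0.6]
predicted = [0.1, 0.6, 0.8, 1.0, 0.9, 0.3, -0.2, -0.7]
skill = pearson(moving_average(predicted), moving_average(observed))
print(f"correlation skill at this lead time: {skill:.3f}")
```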

PreDiff

Diffusion models have recently emerged as a leading approach to many AI tasks. Diffusion models are generative models that establish a forward process of iteratively adding Gaussian noise to training samples; the model then learns to incrementally remove the added noise in a reverse diffusion process, gradually reducing the noise level and ultimately resulting in clear and high-quality generation.
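The forward (noising) half of this process is simple enough to sketch directly. The example below assumes the linear noise schedule and 1,000 steps that are common in the diffusion literature; neither is stated in this article, and the function names are hypothetical. It uses the standard closed form in which a sample at step t is a scaled copy of the clean input plus Gaussian noise.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances (assumed linear schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Cumulative products of (1 - beta): how much signal survives up to each step."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def q_sample(x0, t, abar, rng):
    """Draw a noisy x_t from clean x0: sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    scale = math.sqrt(abar[t])
    sigma = math.sqrt(1.0 - abar[t])
    return [scale * x + sigma * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(0)
abar = alpha_bars(linear_betas(1000))
x0 = [1.0] * 8
slightly_noisy = q_sample(x0, 10, abar, rng)    # still close to x0
almost_noise = q_sample(x0, 999, abar, rng)     # nearly pure Gaussian noise
```

The reverse process is what the network learns: starting from something like `almost_noise`, it removes a little noise at each step until a clean sample remains.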

During training, the model learns the transition probabilities between successive denoising steps. It is therefore an intrinsically probabilistic model, which makes it well suited to probabilistic forecasting.

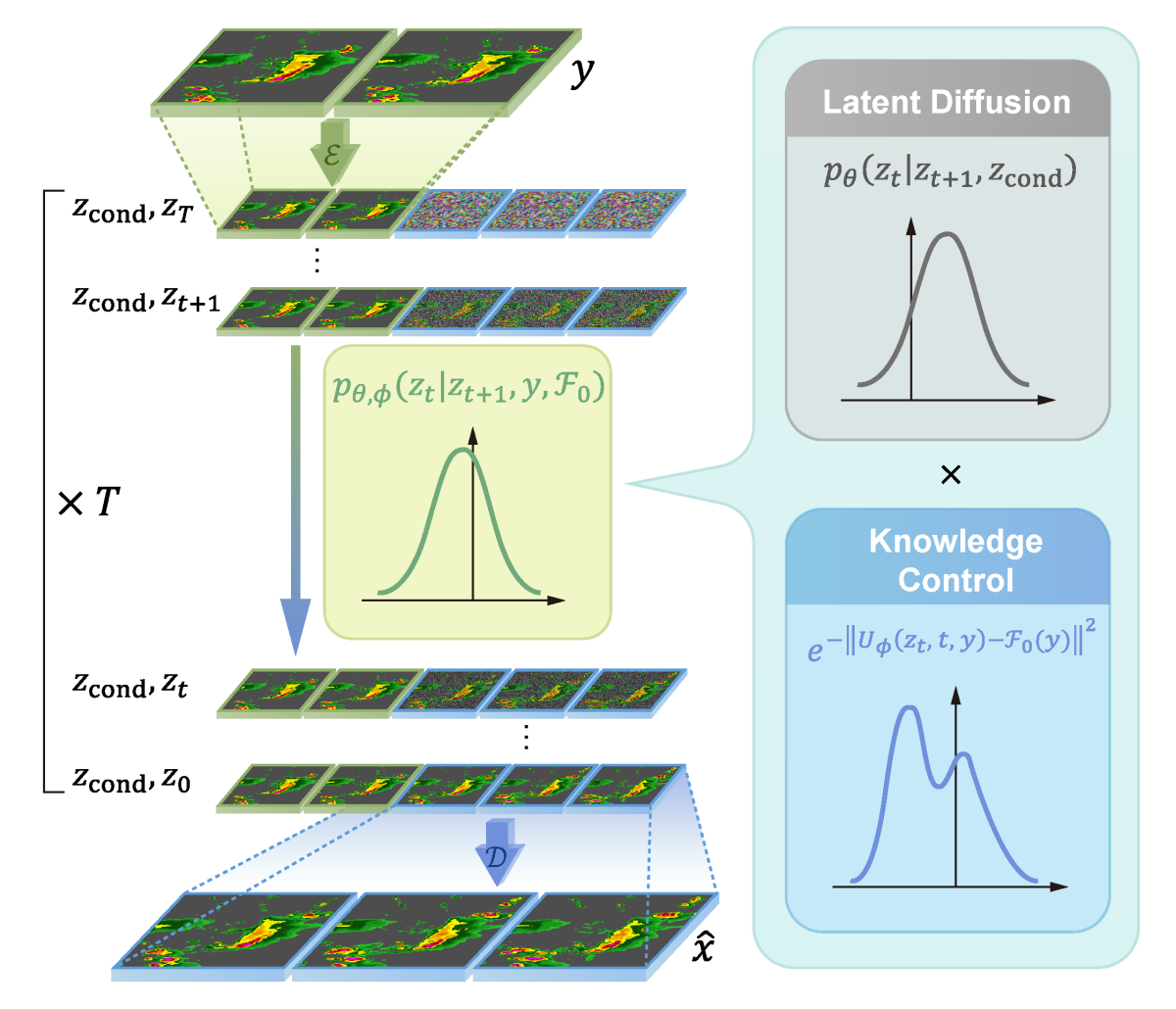

A recent variation on diffusion models is the latent diffusion model: before passing to the diffusion model, an input is first fed to an autoencoder, which has a bottleneck layer that produces a compressed embedding (data representation); the diffusion model is then applied in the compressed space.

In our forthcoming NeurIPS paper, “PreDiff: Precipitation nowcasting with latent diffusion models”, we present PreDiff, a latent diffusion model that uses Earthformer as its core neural-network architecture.

By modifying the transition probabilities of the trained model, we can impose constraints on the model output, making it more likely to conform to some prior knowledge. We achieve this by simply shifting the mean of the learned distribution, until it complies better with the constraint we wish to impose.
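The idea of shifting the mean can be illustrated with a toy example. The sketch below is an assumption-laden simplification, not PreDiff's procedure: the constraint is that the average of the sample should match a target value, its gradient is written out analytically, and the shift is scaled by the step's variance as is common in guided diffusion. In a real system the constraint violation would be estimated by some differentiable function, and the names `constraint_grad` and `shift_mean` are hypothetical.

```python
def constraint_grad(mu, target_mean):
    """Gradient of (mean(mu) - target_mean)**2 with respect to each element of mu."""
    n = len(mu)
    diff = sum(mu) / n - target_mean
    return [2.0 * diff / n] * n


def shift_mean(mu, variance, target_mean, strength):
    """Move the denoising step's mean downhill on the constraint violation.

    mu:        mean predicted by the trained model for this step
    variance:  (scalar) variance of the step's Gaussian transition
    strength:  how strongly to enforce the constraint (a tuning knob)
    """
    grad = constraint_grad(mu, target_mean)
    return [m - strength * variance * g for m, g in zip(mu, grad)]


mu = [0.0, 0.0, 0.0, 0.0]
shifted = shift_mean(mu, variance=1.0, target_mean=1.0, strength=1.0)
print(shifted)  # [0.5, 0.5, 0.5, 0.5]: the average moved from 0.0 toward 1.0
```

Sampling then proceeds from the shifted mean as usual, so the output remains a draw from a distribution, only one nudged toward the constraint.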

Results

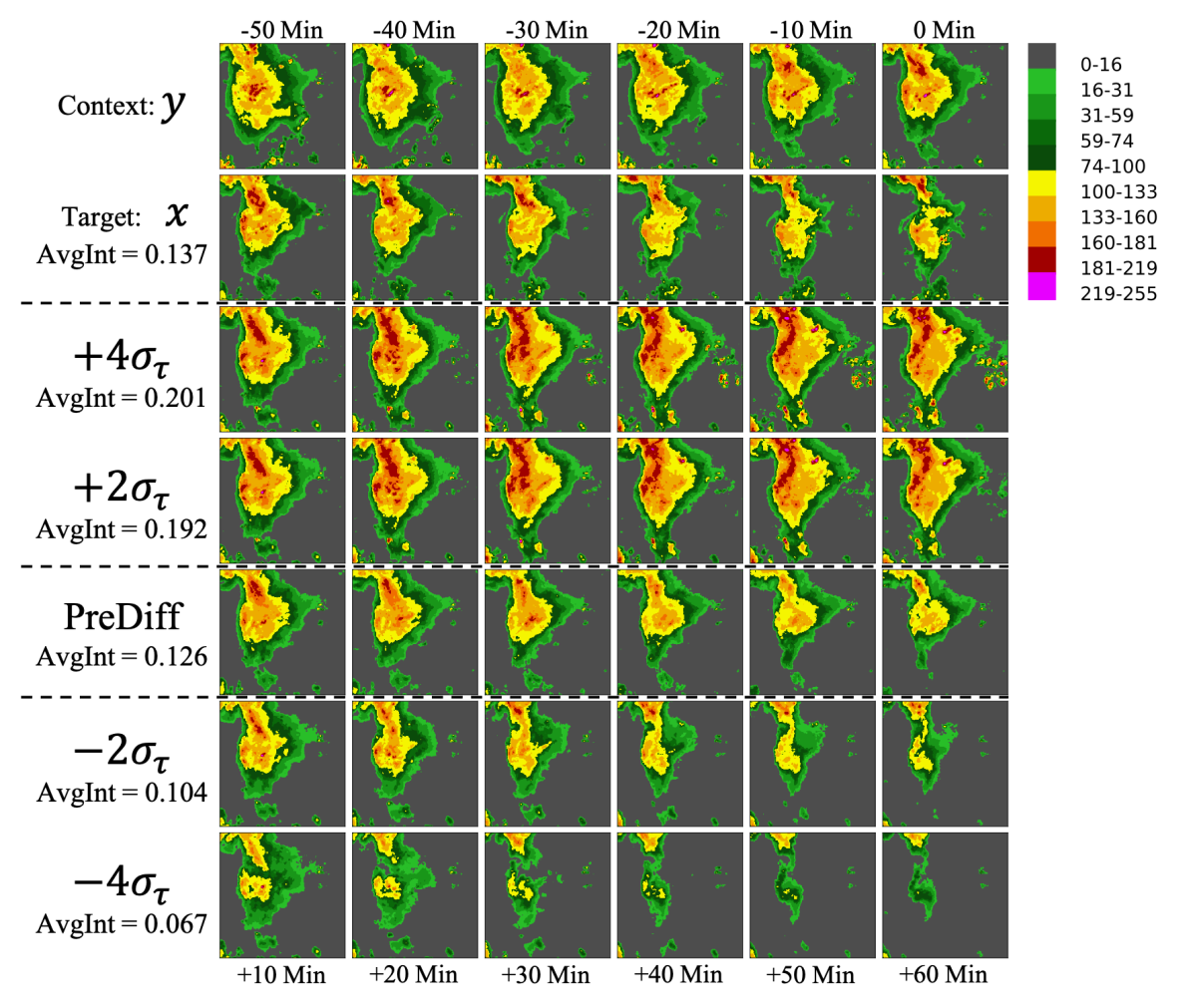

We evaluated PreDiff on the task of predicting precipitation intensity in the near future (“nowcasting”) on SEVIR. We use anticipated precipitation intensity as a knowledge control to simulate possible extreme weather events like rainstorms and droughts.

We found that knowledge control with anticipated future precipitation intensity effectively guides generation while maintaining fidelity and adherence to the true data distribution. For example, the third row of the following figure simulates how weather unfolds in an extreme case (with probability around 0.35%) where the future average intensity exceeds $\mu_\tau + 4\sigma_\tau$. Such simulation can be valuable for estimating potential damage in extreme-rainstorm cases.