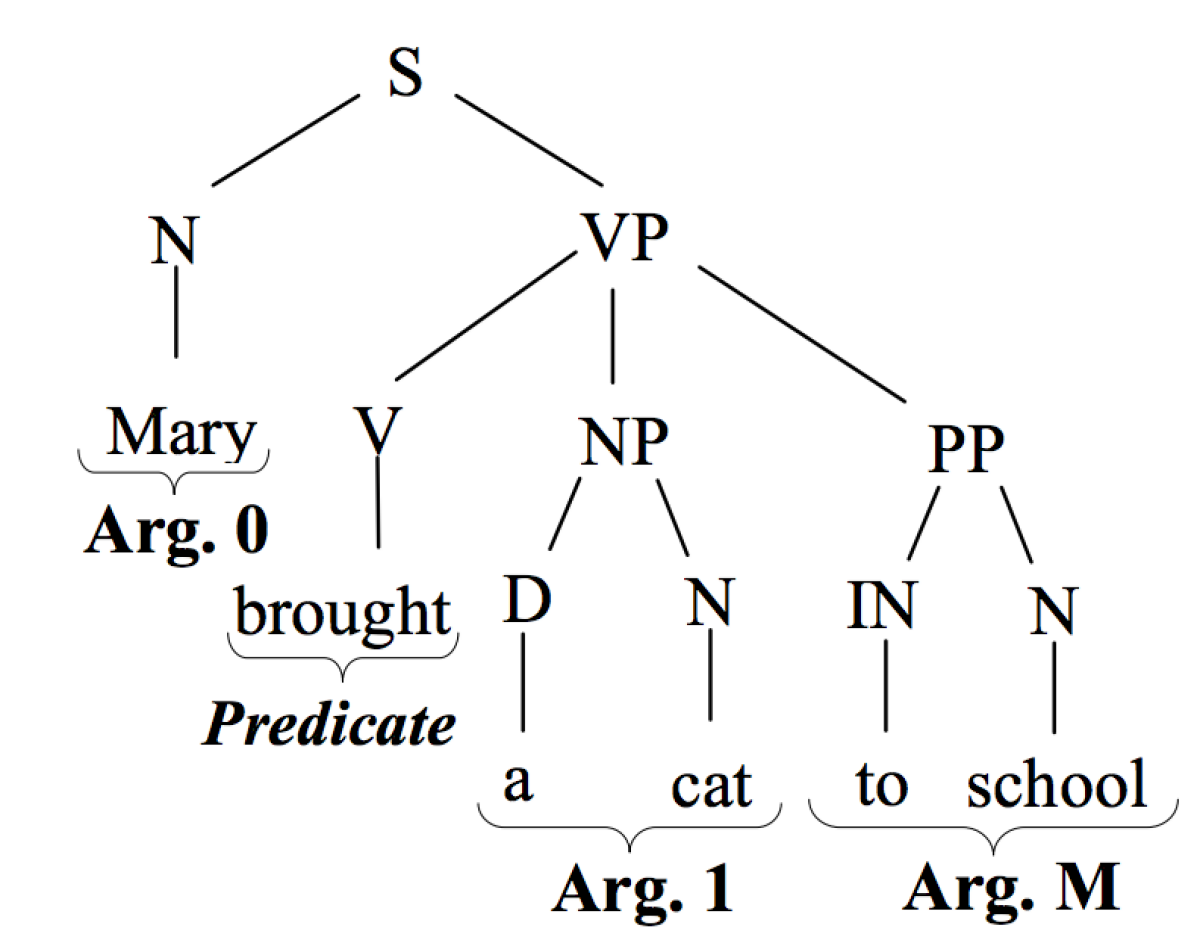

Machine learning systems often act on “features” extracted from input data. In a natural-language-understanding system, for instance, the features might include words’ parts of speech, as assessed by an automatic syntactic parser, or whether a sentence is in the active or passive voice.

Some machine learning systems could be improved if, rather than learning from extracted features, they could learn directly from the structure of the data they’re processing. To determine parts of speech, for instance, a syntactic parser produces a tree of syntactic relationships between parts of a sentence. That tree encodes more information than is contained in simple part-of-speech tags, information that could prove useful to a machine learning system.

The problem: comparing data structures is much more time consuming than comparing features, which means that the resulting machine learning systems are frequently too slow to be practical.

In a paper we’re presenting at the 33rd conference of the Association for the Advancement of Artificial Intelligence (AAAI), my colleagues at the University of Padova and the Qatar Computing Research Institute and I present a technique for making the direct comparison of data structures much more efficient.

In experiments involving a fundamental natural-language-understanding (NLU) task called semantic-role labeling, with syntactic trees as inputs, we compared our technique to the standard technique for doing machine learning on data structures. With slightly over four hours of training, a machine learning system using our technique achieved higher accuracy than a system trained for 7.5 days with the standard technique.

Our technique enables training using so-called mapping kernels. A kernel is a mathematical function for computing the similarity between two objects. The main idea behind mapping kernels is to decompose each object into substructures and then sum the contributions of kernel evaluations on a subset of the substructures. In our case, that means splitting syntactic trees into their constituent trees.

Typically, learning from structural examples requires that a machine learning system compare every training example it sees to all the others, in an attempt to identify structural features useful for the task at hand.

We show that, when training with tree structures, it’s possible to instead compress the complete set of constituent trees — the “forest” — into a single data structure called a direct acyclic graph (DAG).

Our analysis shows that many substructures repeat many times in the tree forest, and counts of those repetitions are encoded into the DAG. Then we can compute the similarity between the DAG and any other tree in one shot.

A tree is a data structure that starts with a root node, usually depicted as a circle. The root can have any number of children, also depicted as circles, to which it is connected by edges, usually depicted as line segments. The children can have children, which can have children, and so on.

What defines a tree is that every node has exactly one parent. (Put differently, between any two nodes, there is exactly one path.) In NLU, trees often indicate syntactic relationships between words of a sentence.

In our paper, we define several different kernels for comparing trees. One involves comparing partial trees, or any collections of a tree’s nodes and edges that are themselves trees. One involves comparing elastic trees, or trees that indicate the paths of descent between pairs of nodes (between, say, great-grandparent nodes and great-grandchildren nodes), without representing the intervening nodes. But the kernels we used in our experiments are called subset trees.

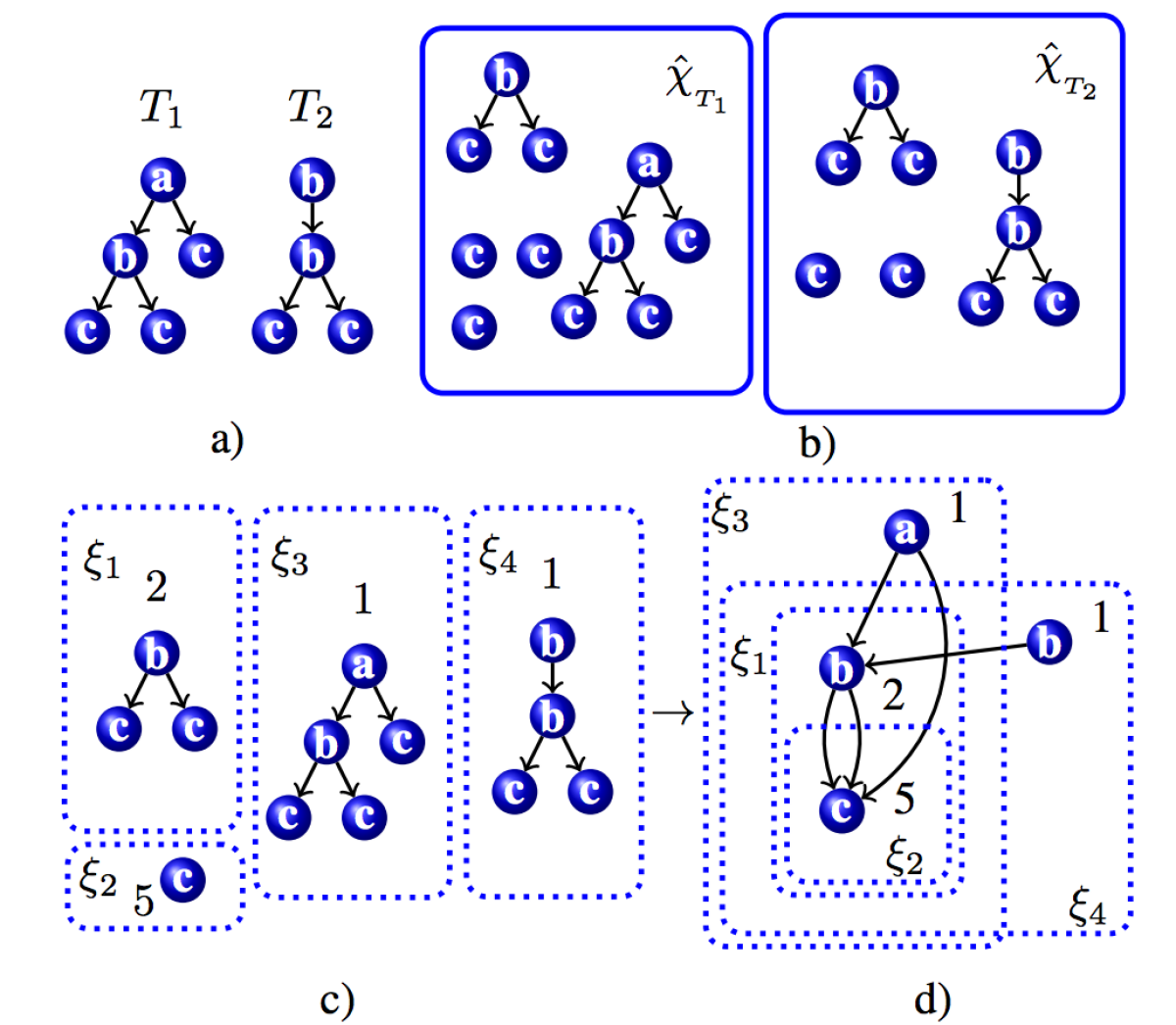

To create the set of subset trees for a given tree, you first lop off the root node and add the resulting tree or trees to your collection. Then you lop off the roots of each of those trees and add the results to your collection, and so on, until you’re left with the individual nodes of the tree’s last layer, which also join the collection (see section (b) of the first figure, above).

With our method, we pool the subset trees of all the trees we wish to compare, then select a single representative example of each subset tree in the pool. A given example may be unique to one tree, or it may be common to many. Along with each example, we also record the number of times it occurs in all of our sample trees (section (c), above).

Finally, we decompose the representative examples, too, into their constituent parts and record the frequency with which any node descends from any other (section (d), above). The result is a comparatively simple model that captures the statistical relationships between all the nodes in all the examples our machine learning system has seen.

With this approach, we can train a semantic-role-labeling system on two million examples in 4.3 hours. With the standard approach, using the same kernels, it takes more than a week to train a system on half as many examples.

Acknowledgments: Giovanni Da San Martino, Alessandro Sperduti, Fabio Aiolli