Reinforcement learning (RL), in which an agent learns to maximize some reward through trial-and-error exploration of its environment, is a hot topic in AI. In recent years, it’s led to breakthroughs in robotics, autonomous driving, and game playing, among other applications.

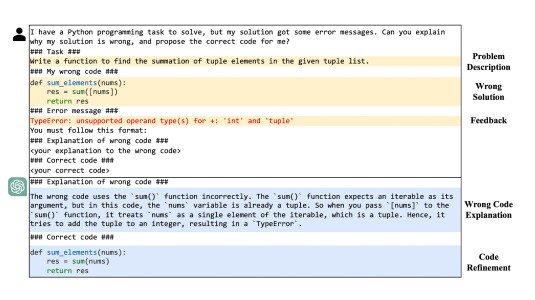

Often, RL agents are trained in simulations before being released into the real world. But simulations are rarely perfect, and an agent that doesn’t know how to explicitly model its uncertainty about the world will often flounder outside the training environment.

Such uncertainty has been handled well in single-agent RL. But it has been explored less thoroughly in multi-agent RL (MARL), where multiple agents try to optimize their own long-term rewards by interacting with the environment and with each other.

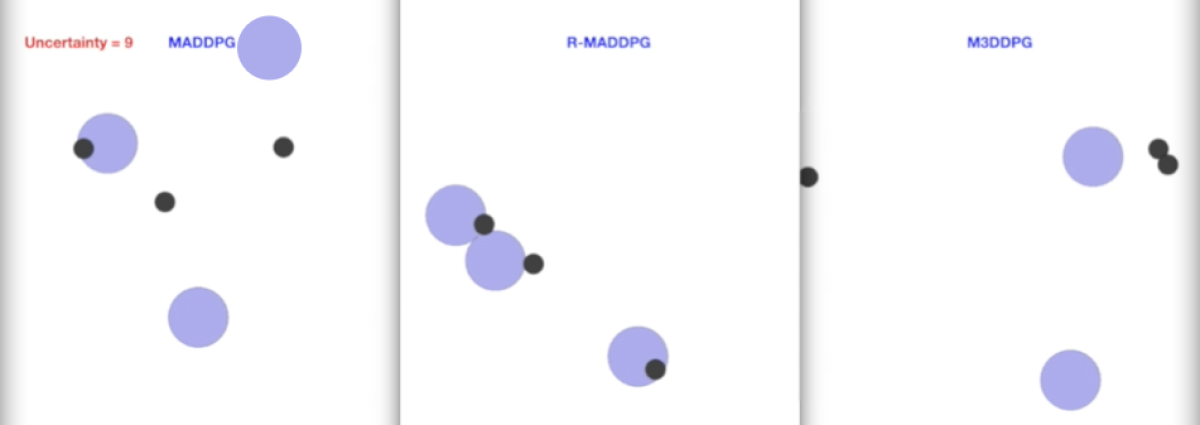

In a paper we are presenting at the 34th Conference on Neural Information Processing Systems, we propose a MARL framework that is robust to the possible uncertainty of the model. In experiments that used state-of-the-art systems as benchmarks, our approach accumulated higher rewards at higher uncertainty.

For example, in cooperative navigation, in which three agents locate and occupy three distinct landmarks, our robust MARL agents performed significantly better than state-of-the-art systems when uncertainty was high. In the predator-prey environment, in which predator agents attempt to “catch” (touch) prey agents, our robust MARL agents outperformed the baseline agents regardless of whether they were predator or prey.

Markov games

Reinforcement learning is typically modeled using a sequential decision process called a Markov decision process, which has several components: a state space, an action space, transition dynamics, and a reward function.

At each time step, the agent takes an action and transitions to a new state, according to a transition probability. Each action incurs a reward or penalty. By trying out sequences of actions, the agent develops a set of policies that optimize its cumulative reward.
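As a rough illustration of those four components, here is a minimal sketch of a toy Markov decision process. The two states, two actions, probabilities, and rewards are all invented for this example, and the helper names `step` and `rollout` are hypothetical.

```python
import random

# Hypothetical two-state MDP; every name and number here is illustrative.
STATES = ["low", "high"]
ACTIONS = ["wait", "work"]

# TRANSITIONS[state][action] -> {next_state: probability}
TRANSITIONS = {
    "low": {"wait": {"low": 1.0}, "work": {"low": 0.3, "high": 0.7}},
    "high": {"wait": {"low": 0.5, "high": 0.5}, "work": {"high": 1.0}},
}

# REWARDS[state][action] -> immediate reward (negative values are penalties)
REWARDS = {
    "low": {"wait": 0.0, "work": -1.0},
    "high": {"wait": 2.0, "work": 1.0},
}


def step(state, action, rng):
    """Sample the next state and return it with the immediate reward."""
    dist = TRANSITIONS[state][action]
    next_state = rng.choices(list(dist), weights=list(dist.values()))[0]
    return next_state, REWARDS[state][action]


def rollout(policy, start, horizon, rng):
    """Accumulate reward while following a state -> action policy."""
    state, total = start, 0.0
    for _ in range(horizon):
        state, reward = step(state, policy[state], rng)
        total += reward
    return total
```

Calling `rollout({"low": "work", "high": "work"}, "low", 10, random.Random(0))` simulates ten steps under one fixed policy. Comparing such totals across policies is the trial-and-error loop in miniature.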

Markov games generalize this model to the multi-agent setting. In a Markov game, state transitions are the result of multiple actions taken by multiple agents, and each agent has its own reward function.
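The sketch below shows what that generalization might look like in code, assuming a made-up two-agent game on a line of five cells. The collision rule, the per-agent targets, and the function names are assumptions for illustration only. They are not one of the environments from our experiments.

```python
# Hypothetical two-agent game on a line of positions 0..4.
# Each agent's action is a move: -1 (left), 0 (stay) or +1 (right).


def transition(state, joint_action):
    """The next state depends on the actions of all agents together."""
    positions = []
    for pos, move in zip(state, joint_action):
        positions.append(min(4, max(0, pos + move)))
    # Assumed rule: agents may not share a cell, so a collision
    # leaves every agent where it was.
    if len(set(positions)) < len(positions):
        return state
    return tuple(positions)


def rewards(state, targets):
    """Each agent has its own reward: negative distance to its own target."""
    return tuple(-abs(pos - tgt) for pos, tgt in zip(state, targets))
```

Note that the same action can lead to different outcomes for an agent depending on what the other agent does, which is why each agent has to reason about its fellow agents.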

To maximize its cumulative reward, a given agent must navigate not only the environment but also the actions of its fellow agents. So in addition to learning its own set of policies, it also tries to infer the policies of the other agents.

In many real-world applications, however, perfect information is impossible. If multiple self-driving cars are sharing the road, no one of them can know exactly what rewards the others are maximizing or what the joint transition model is. In such situations, the policy a given agent adopts should be robust to the possible uncertainty of the MARL model.

In the framework we present in our paper, each player considers a distribution-free Markov game — a game in which the probability distribution that describes the environment is unknown. Consequently, the player doesn’t seek to learn specific reward and state values but rather a range of possible values, known as the uncertainty set. Using uncertainty sets means that the player doesn’t need to explicitly model its uncertainty with another probability distribution.

Uncertainty as agency

We treat uncertainty as an adversarial agent — nature — whose policies are designed to produce the worst-case model data for the other agents at every state. Treating uncertainty as another player allows us to define a robust Markov perfect Nash equilibrium for the game: a set of policies such that — given the possible uncertainty of the model — no player has an incentive to change its policy unilaterally.
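To make the idea concrete, here is a deliberately simplified sketch: a one-shot, two-player game with pure strategies, in which each reward is known only to lie in an interval. This is a much smaller setting than the Markov perfect equilibrium described above, and the payoff numbers, action names, and function names are all invented for illustration.

```python
from itertools import product

# Hypothetical one-shot, two-player game. PAYOFFS[(a0, a1)] holds, for each
# player, an interval (low, high) of possible rewards: its uncertainty set.
PAYOFFS = {
    ("stay", "stay"): ((2.0, 3.0), (2.0, 3.0)),
    ("stay", "go"): ((0.0, 4.0), (1.0, 2.0)),
    ("go", "stay"): ((1.0, 2.0), (0.0, 4.0)),
    ("go", "go"): ((-1.0, 1.0), (-1.0, 1.0)),
}
ACTIONS = ("stay", "go")


def worst_case(joint_action, player):
    """Nature picks the least favorable reward in the player's interval."""
    low, _high = PAYOFFS[joint_action][player]
    return low


def is_robust_equilibrium(joint_action):
    """True if no player gains, in the worst case, by deviating alone."""
    for player in range(2):
        current = worst_case(joint_action, player)
        for alt in ACTIONS:
            deviated = list(joint_action)
            deviated[player] = alt
            if worst_case(tuple(deviated), player) > current:
                return False
    return True


robust_equilibria = [
    ja for ja in product(ACTIONS, repeat=2) if is_robust_equilibrium(ja)
]
```

In this toy game, judging every outcome by its worst case leaves `("stay", "stay")` as the only joint action from which neither player wants to deviate on its own.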

To prove the utility of this adversarial approach, we first propose using a Q-learning-based algorithm, which, under certain conditions, is guaranteed to converge to the Nash equilibrium. Q-learning is a model-free RL algorithm, meaning that it doesn’t need to learn explicit transition probabilities and reward functions. Instead, it attempts to learn the expected cumulative reward for each set of actions in each state.
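For readers unfamiliar with Q-learning, the following sketch shows the standard single-agent, tabular update. It is not the robust multi-agent variant from our paper. The function name `q_update` and the default learning rate and discount factor are assumptions chosen for the example.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """One tabular Q-learning step.

    Moves Q(state, action) toward reward + gamma * max_a Q(next_state, a).
    No transition probabilities or reward function are ever modeled.
    """
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


# Unseen (state, action) pairs start with an estimate of 0.0.
q = defaultdict(float)
```

Each call uses a single observed transition, which is what makes the method model-free. The table `q` needs one entry per state-action pair.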

If the space of possible states and actions becomes large enough, however, learning the cumulative rewards of all actions in all states becomes impractical. The alternative is to use function approximation to estimate state values and policies, but integrating function approximation into Q-learning is difficult.

So in our paper, we also develop a policy-gradient/actor-critic-based robust MARL algorithm. This algorithm doesn’t provide the same convergence guarantees that Q-learning does, but it makes it easier to use function approximation.
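The general shape of an actor-critic update can be sketched as follows. This is a generic one-step, single-agent version with tabular parameters standing in for the function approximators, not the robust multi-agent algorithm from our paper. The function names and step sizes are assumptions.

```python
import math


def softmax(prefs):
    """Turn a list of action preferences into probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic_step(prefs, values, state, action, reward, next_state,
                      actor_lr=0.1, critic_lr=0.1, gamma=0.9):
    """One-step actor-critic update, applied in place.

    prefs[state] is a list of action preferences (the actor);
    values[state] is the critic's estimate of the state's value.
    """
    # The critic's temporal-difference error scores the action just taken.
    td_error = reward + gamma * values[next_state] - values[state]
    # Policy probabilities are computed before any parameter changes.
    probs = softmax(prefs[state])
    values[state] += critic_lr * td_error
    for a in range(len(probs)):
        # Gradient of log softmax: 1 - p for the chosen action, -p otherwise.
        grad = (1.0 if a == action else 0.0) - probs[a]
        prefs[state][a] += actor_lr * td_error * grad
    return td_error
```

In a function-approximation setting, the `prefs` and `values` tables would be replaced by parameterized models, with the same error signal driving both updates.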

This actor-critic algorithm is the one we used in our experiments. We tested our approach against two state-of-the-art systems, one that was designed for the adversarial setting and one that wasn’t, on a range of standard MARL tasks: cooperative navigation, keep-away, physical deception, and predator-prey. In settings with realistic degrees of uncertainty, our approach outperformed both systems on every task.