The annual meeting of the North American chapter of the Association for Computational Linguistics (NAACL) introduced an industry track in 2018, and at this year’s conference, which begins next week, one of the industry track chairs is Amazon principal research scientist Rashmi Gangadharaiah.

“The NAACL industry track inspired industry tracks at other conferences such as COLING and EMNLP,” Gangadharaiah says. “The industry track provides a forum for researchers in the industry to exchange ideas and discuss successful deployments of ML [machine learning] and NLP [natural-language processing] technologies, as well as share challenges that arise in deploying such systems in real-world settings.”

For instance, Gangadharaiah explains, “academic research is often done in very controlled settings. It's not a negative thing: people have to do research, and it's useful to start in a controlled setting. But when we put such systems in real-world situations, we typically have to worry about latency, memory, and space. It's not always accuracy that we go for. It's a balance of latency, memory, space, and accuracy — and a question of how we measure accuracy. So I think it makes it more interesting that way.”

Similarly, Gangadharaiah explains, industry track papers often report negative results. “There are lots of papers that get published in academia, but when we try to put it in real-world settings, we notice that many of these methods don't work well,” she says. “So we do have papers on negative results. And it's crucial, because we do want to show that these are the methods that we tried, and they didn't work.”

The case for simplicity

At Amazon, Gangadharaiah’s own research is on dialogue systems, and in her field, she says, a common reason that methods reported in academic papers prove impractical in real-world settings is that they require excessive hyperparameter optimization.

Hyperparameters are features of neural networks — such as the number of network layers, the number of nodes per layer, and the learning rate during training — whose variation can make a large difference in model performance. If the range of possible hyperparameter values is too great, and the range of values for which performance is good too narrow, hyperparameter optimization can prove prohibitively time consuming.

“In real-world applications, conversations can go really, really wild,” Gangadharaiah explains. “When you're trying to mimic the hyperparameters that are provided in academic settings, they usually don't work that well. So the best is to always go for a much simpler model. This is something that I have noticed: in industry, simple models perform way better, especially when you don't have to do so much tweaking of the models themselves.”

Hierarchical thinking

Of course, not all industry papers report negative results, and in some cases, Gangadharaiah says, industry research has pointed in directions where academic research has followed.

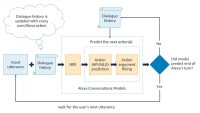

Again, her own research provides an example. The dialogue systems that Gangadharaiah works on are goal directed, meaning that the purpose of each dialogue is that an AI agent should identify and fulfill the goal of a human speaker. Such systems rely on natural-language-understanding models to make sense of customer utterances, but they also include state trackers that assess progress toward the speaker’s goal.

There is some need of semantic parsing in dialogue systems. ... I think the industry kind of motivated all that work.

“If you consider restaurant booking, you might say that you want to book a restaurant for six people, and then you might change your mind and say, ‘Hey no, now I want it for eight people,’” Gangadharaiah explains. “The system will have to make appropriate changes.

“We can introduce more complexity. So, for example, if you're ordering a pizza, maybe you would start with toppings of olives, and then you might go to pepperoni. In this case, you're not asking the system to replace olives with pepperoni; multiple values are being provided for the toppings itself.”

In natural-language understanding, categories such as “pizza topping” are often referred to as slots, and specific instances of the categories, such as “pepperoni” and “olives”, are referred to as slot values.

“We did have a few papers on why it makes sense to have some form of hierarchical structure to represent what the user really wants,” Gangadharaiah says. “So you have this high-level slot, which is called ‘toppings’, and under toppings, you have olives, pepperoni, and many other things. Above ‘toppings’, there might be a high-order intent like ‘pizza ordering’, and under ‘pizza ordering’, you would need ‘toppings’, but you also want to know the type of pizza, the size of the pizza, and so on.

“What this is saying is that there is some hierarchical representation, and there is some need of semantic parsing in dialogue systems. Some of these things have been pointed out in the industry, and now people are moving in that direction. So I think the industry kind of motivated all that work.”

Large language models

Recently, the big story in natural-language processing (NLP) has been the power and adaptability of large language models, such as BERT and GPT-3, that encode the probabilities of long sequences of words and can be fine-tuned on particular NLP tasks. They have applications in dialogue management, too, Gangadharaiah says.

“We’ve successfully deployed such models in Amazon,” she says, “and we’ve been actively exploring how to improve these models in order to make our chatbots — such as AWS Chatbot, LEX, and Alexa — more powerful. For example, I can take these large language models and then fine-tune them on, let's say, a restaurant domain, where I want to book certain seats in a certain restaurant for a certain number of people, and so on.

“I think the crucial part is the dialogue history. These models are still not perfect at handling dialogue history, and we still do not know the best strategy to handle dialogue history. Should I just send the model everything that was said in the previous turns? Or do I come up with a better representation — a state representation — to feed that as input? This is where it becomes really critical to explore more and see what works best for dialogue systems.”

Dialogue management systems have been in the headlines recently, with the commotion about an engineer who believed a chatbot had become sentient. But, Gangadharaiah says, “I consider goal-oriented dialogue as more complex because it has to be more than non-goal-oriented. Not only does the system have to be fluent and coherent, like non-goal-oriented systems, but it also has to interact with multiple databases in order to achieve the end goal, which could be making a reservation at a restaurant or booking a flight. And these could be skill commands, too. I guess people can argue both ways, but I think in general goal-oriented dialogue systems are more complex.”