In the past few years, we’ve published a number of papers about extreme multilabel classification (XMC), or classifying input data when the number of candidate labels is huge.

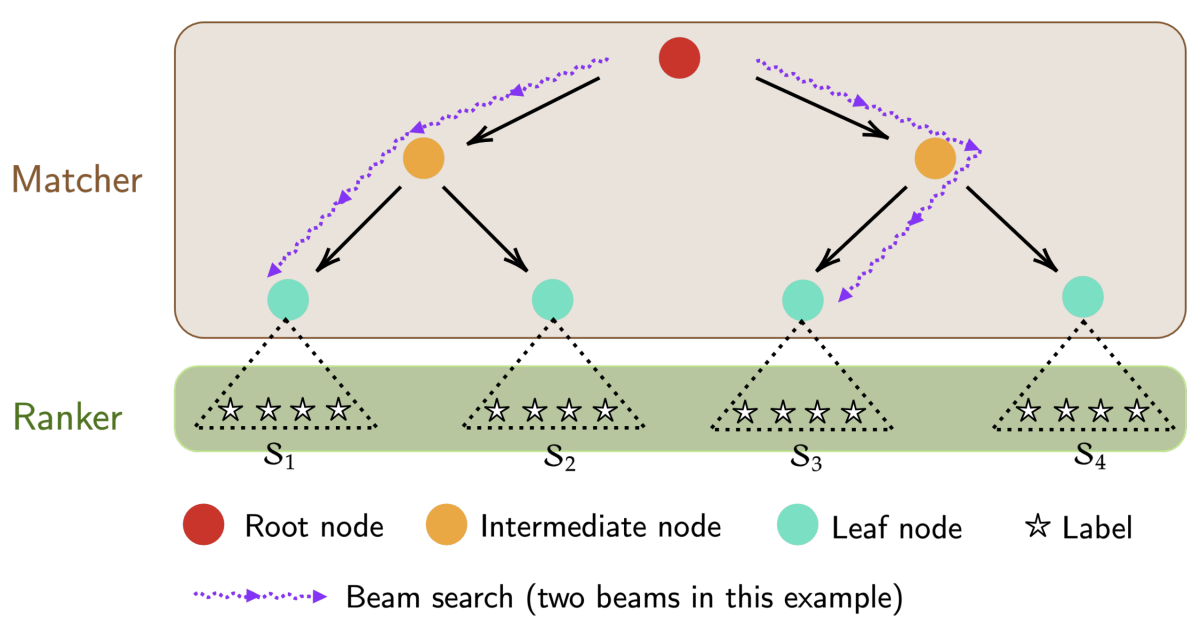

Earlier this year, we publicly released the code for our own XMC framework, PECOS, which makes XMC more efficient through label partitioning. With label partitioning, labels are first grouped into clusters, and a matcher model is trained to assign inputs to clusters. Then, a ranker is trained to select a single label from the designated group for a given input.
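The match-then-rank flow described above can be illustrated with a toy sketch. It is not the PECOS API: the `predict` function, the linear scoring, the `beam` parameter, and all the weights below are hypothetical and chosen only to show how a matcher narrows the label set before a ranker scores it.

```python
from typing import Dict, List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain dot product, standing in for a learned scoring model."""
    return sum(x * y for x, y in zip(a, b))


def predict(
    x: Sequence[float],
    cluster_weights: Dict[str, List[float]],
    label_weights: Dict[str, List[float]],
    clusters: Dict[str, List[str]],
    beam: int = 1,
    top_k: int = 1,
) -> List[str]:
    # Matcher step: score every cluster and keep the `beam` best ones.
    ranked_clusters = sorted(
        cluster_weights, key=lambda c: (-dot(x, cluster_weights[c]), c)
    )
    chosen = ranked_clusters[:beam]
    # Ranker step: score only the labels inside the chosen clusters.
    candidates = sorted({label for c in chosen for label in clusters[c]})
    ranked_labels = sorted(
        candidates, key=lambda l: (-dot(x, label_weights[l]), l)
    )
    return ranked_labels[:top_k]


# Hypothetical toy data: two clusters with two labels each.
clusters = {"devices": ["laptop", "phone"], "fruits": ["banana", "pear"]}
cluster_weights = {"devices": [1.0, 0.0], "fruits": [0.0, 1.0]}
label_weights = {
    "laptop": [1.0, 0.2],
    "phone": [0.8, 0.0],
    "banana": [0.0, 1.0],
    "pear": [0.1, 0.7],
}

print(predict([0.9, 0.1], cluster_weights, label_weights, clusters))
```

With four labels the saving is invisible, but the ranker never scores labels outside the chosen clusters, and that is where the efficiency comes from when the label space runs to millions.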

At this year’s Conference on Neural Information Processing Systems (NeurIPS), we’re presenting two papers that extend the range of label-partitioning frameworks — including but not limited to PECOS — and improve their classification accuracy.

In “Label disentanglement in partition-based extreme multilabel classification”, we consider the case in which the same label belongs to multiple clusters: for instance, the label “apple” might properly belong to one cluster designating computing devices and another designating fruits. We demonstrate a method for assigning labels to multiple clusters that improves classification accuracy with a negligible effect on efficiency.

In “Fast multi-resolution transformer fine-tuning for extreme multi-label text classification”, we propose a new method for training Transformer-based matching models that reduces training time by 95% while actually increasing accuracy.

Label disentanglement

PECOS is a flexible framework that allows variation in the implementation of XMC models. One common approach to label clustering is to use a hierarchical tree, in which the labels are first divided into a few coarse-grained groups and then successively subdivided into finer- and finer-grained groups. The matcher is then trained to traverse the tree to find the lowest-level label cluster.
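To make the coarse-to-fine idea concrete, here is a minimal sketch of building such a tree by recursive two-way splitting. It is a simplification, not the clustering routine PECOS uses: the seed selection, the `build_tree` helper, the `max_leaf` parameter, and the label vectors are all assumptions made for illustration.

```python
from typing import Dict, List, Sequence


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two label vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_tree(labels: List[str], vectors: Dict[str, List[float]], max_leaf: int = 3) -> dict:
    """Split `labels` in two, recursively, until each leaf holds at most `max_leaf` labels."""
    if len(labels) <= max_leaf:
        return {"labels": labels}  # leaf cluster
    # Pick two seeds: the first label and the label farthest from it.
    seed_a = labels[0]
    seed_b = max(labels, key=lambda l: sq_dist(vectors[l], vectors[seed_a]))
    # Send each label to the nearer seed (ties go to the first seed).
    left = [
        l for l in labels
        if sq_dist(vectors[l], vectors[seed_a]) <= sq_dist(vectors[l], vectors[seed_b])
    ]
    right = [l for l in labels if l not in left]
    if not right:  # all vectors identical: fall back to splitting in half
        mid = len(labels) // 2
        left, right = labels[:mid], labels[mid:]
    return {"children": [build_tree(left, vectors, max_leaf),
                         build_tree(right, vectors, max_leaf)]}


# Hypothetical two-dimensional label vectors.
vectors = {
    "laptop": [1.0, 0.0], "phone": [0.9, 0.1], "tablet": [0.8, 0.2],
    "banana": [0.0, 1.0], "pear": [0.1, 0.9], "plum": [0.2, 0.8],
}
tree = build_tree(list(vectors), vectors, max_leaf=3)
print(tree)
```

Each label ends up in exactly one leaf here, which is the limitation the label-disentanglement work sets out to relax.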

Typically, the tree is constructed using some fixed measure of label similarity, such as term frequency–inverse document frequency (TF-IDF), which identifies terms that predominate in a given text relative to a larger corpus of texts. In most existing label-partitioning approaches, each label ends up in exactly one low-level cluster.
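For readers unfamiliar with TF-IDF, the following sketch computes one common variant: term frequency times the log of inverse document frequency. Other weightings and smoothing schemes exist, and the `tf_idf` function and toy documents are illustrative assumptions rather than the exact features used in our experiments.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Return one {term: weight} dict per tokenized document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical tokenized documents.
docs = [
    ["apple", "laptop", "laptop"],
    ["apple", "fruit"],
    ["apple", "pie", "fruit"],
]
weights = tf_idf(docs)
print(weights[0])
```

A term such as "apple", which appears in every document, gets a weight of zero, while "laptop", which is concentrated in a single document, gets a high weight.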

In addition to the assignment of individual labels to multiple clusters, one of the novelties of our work is that the hierarchical tree itself is learned from data in a supervised manner.

To ensure that every label is assigned to all the clusters to which it properly belongs, we could simply assign every label to every cluster. But of course, that would waste the advantage of doing label partitioning in the first place.

Instead, we limit the number of clusters that a given label can be assigned to — in our experiments, we varied the limit from 1 to 6 — and then treat the cluster assignment as an optimization problem. That is, we learn which assignment of labels to which clusters maximizes the performance of the XMC model.

At the beginning of the training procedure, we create a provisional hierarchical tree using TF-IDF. Then we train a matcher on that tree. On the basis of that matcher, we then reassign labels to multiple clusters in a way that maximizes classification accuracy.
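The reassignment step can be sketched as follows. This is a deliberately simplified heuristic, not the optimization procedure from the paper: it sums the trained matcher's cluster scores over each label's positive training examples and keeps the strongest clusters, up to a cap. The function name, the `min_share` threshold, and the toy scores are all hypothetical.

```python
from typing import Dict, List


def reassign_labels(
    matcher_scores: List[Dict[str, float]],
    positives: Dict[str, List[int]],
    max_clusters: int = 2,
    min_share: float = 0.3,
) -> Dict[str, List[str]]:
    """matcher_scores[i][c] is the (non-negative) matcher score of example i
    for cluster c; positives[label] lists the examples tagged with that label."""
    assignment = {}
    for label, examples in positives.items():
        totals: Dict[str, float] = {}
        for i in examples:
            for cluster, score in matcher_scores[i].items():
                totals[cluster] = totals.get(cluster, 0.0) + score
        grand_total = sum(totals.values())
        if grand_total <= 0:
            assignment[label] = []
            continue
        # Keep clusters that carry enough of the label's total score,
        # strongest first, and never more than `max_clusters` of them.
        ranked = sorted(totals, key=lambda c: (-totals[c], c))
        kept = [c for c in ranked if totals[c] / grand_total >= min_share]
        assignment[label] = kept[:max_clusters]
    return assignment


# Hypothetical matcher outputs for three training examples.
matcher_scores = [
    {"devices": 0.9, "fruits": 0.1},
    {"devices": 0.2, "fruits": 0.8},
    {"devices": 0.7, "fruits": 0.3},
]
positives = {"apple": [0, 1], "laptop": [0, 2]}
assignment = reassign_labels(matcher_scores, positives)
print(assignment)
```

In this toy run, "apple" lands in both clusters because its examples split between them, while "laptop" stays in the devices cluster alone. Setting `max_clusters=1` recovers the conventional one-cluster-per-label partition.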

This process could be repeated as many times as necessary, but in our experiments, we found that one repetition was enough to secure most of the approach’s performance gains.

In our experiments, we compared our approach to nine earlier approaches, using six metrics across four datasets. Of the resulting 24 measurements, our approach achieved the highest score on 21 and the second-highest on two.

Multiresolution Transformer fine-tuning

PECOS comes with a number of built-in tools for performing all three steps in our XMC pipeline: label partitioning, matching, and ranking. At KDD 2020, we described our Transformer-based approach to matching, X-Transformer, which can be used with PECOS or with other XMC approaches.

The initial PECOS release also came with a recursive linear matching model, XR-linear, which learns to match inputs to clusters using the same iterative strategy that we use to build hierarchical trees: first XR-linear performs a coarse-grained matching, then a finer-grained matching, and so on. The “R” in XR-linear stands for “recursive”.

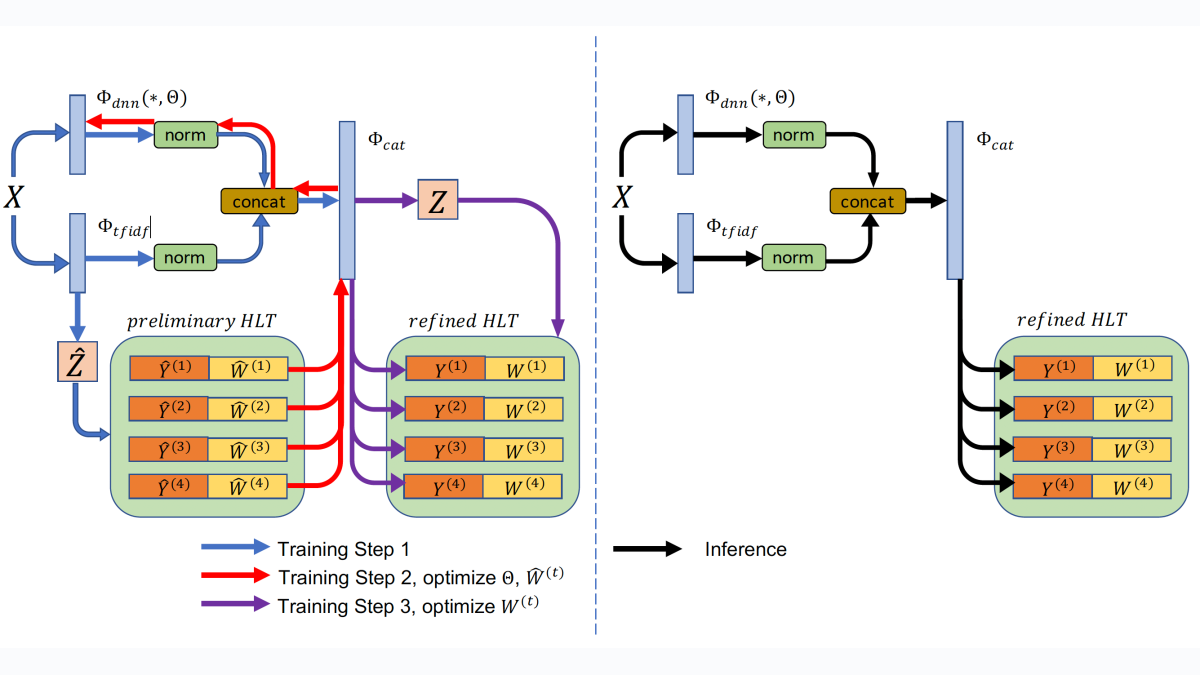

In our second NeurIPS paper, we combine these two approaches to create XR-Transformer, a recursive, Transformer-based matcher. On the Amazon-3M dataset, a standard benchmark in the XMC field that consists of products sorted into three million product categories (the label space), training an X-Transformer matcher takes 23 days on eight GPUs; training an XR-Transformer matcher takes only 29 hours — with a significant improvement in accuracy.
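As a quick check, these training times line up with the roughly 95% reduction cited earlier. The arithmetic below assumes that both figures are wall-clock times on comparable hardware.

```python
# Training times on Amazon-3M, as quoted above.
x_transformer_hours = 23 * 24   # 23 days expressed in hours
xr_transformer_hours = 29

reduction = 1 - xr_transformer_hours / x_transformer_hours
speedup = x_transformer_hours / xr_transformer_hours

print(f"Training-time reduction: {reduction:.1%}")
print(f"Speedup: {speedup:.1f}x")
```

That works out to a reduction of a little under 95%, or a speedup of about 19 times.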

To train an XR-Transformer matcher, we begin as we did in the label disentanglement work, with a hierarchical label tree based on TF-IDF features. For each layer of the tree, we jointly train a Transformer-based encoder and a linear ranker, which uses both the Transformer model embedding and the TF-IDF features as the basis for assigning an input to a particular cluster in the next layer down the tree.

Once the Transformer-based encoder has been trained at every level of the tree, we concatenate its final label embeddings with the TF-IDF features and, on that basis, produce a new label tree. Then, for each level of the tree, we train new linear rankers with the concatenated features as inputs.
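The feature concatenation can be pictured with a short sketch. Normalizing each part to unit length before joining, so that neither the dense embedding nor the TF-IDF vector dominates, is an assumption made here for illustration; the helper names and toy vectors are hypothetical.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length; leave an all-zero vector unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def concat_features(embedding: Sequence[float], tfidf: Sequence[float]) -> List[float]:
    """Join a dense Transformer embedding with (densified) TF-IDF features."""
    return l2_normalize(embedding) + l2_normalize(tfidf)


# Hypothetical two-dimensional embedding and two-term TF-IDF vector.
features = concat_features([3.0, 4.0], [0.0, 2.0])
print(features)
```

In practice the TF-IDF part would be a large sparse vector, so a real implementation would keep it in a sparse format instead of a Python list.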

We tested our approach on six public datasets, whose output spaces ranged from about 4,000 labels to the three million of Amazon-3M. We compared our approach to 11 predecessors on three metrics (precision at 1, 3, and 5, or the fraction of relevant results among the top one, three, and five labels returned).
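Precision at k is simple to compute: take the top k predicted labels and measure what fraction of them are relevant. The sketch below uses a hypothetical ranked list and relevant set.

```python
from typing import List, Set


def precision_at_k(ranked: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k predicted labels that are truly relevant."""
    top = ranked[:k]
    return sum(1 for label in top if label in relevant) / k


# Hypothetical model output (best first) and ground-truth labels.
ranked = ["a", "b", "c", "d", "e"]
relevant = {"a", "c", "f"}

for k in (1, 3, 5):
    print(f"P@{k} = {precision_at_k(ranked, relevant, k):.3f}")
```

Because the denominator is always k, a model is penalized for filling its top slots with irrelevant labels even when few relevant labels exist.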

On the three datasets with 4,000 to 31,000 labels, XR-Transformer achieved the highest score on five of nine measures. But on the three datasets with 500,000 or more labels, it achieved the highest scores across the board, by a significant margin.