Question answering is a popular task in natural-language processing, where models are given questions such as “What city is the Mona Lisa in?” and trained to predict correct answers, such as “Paris”.

One way to train question-answering models is to use a knowledge graph, which stores facts about the world in a structured format. Historically, knowledge-graph-based question-answering systems required separate semantic-parsing and entity resolution models, which were costly to train and maintain. But since 2020, the field has been moving toward differentiable knowledge graphs, which allow a single end-to-end model to take questions as inputs and directly output answers.

At this year’s Conference on Empirical Methods in Natural Language Processing (EMNLP), we presented two extensions to end-to-end question answering using differentiable knowledge graphs.

In “End-to-end entity resolution and question answering using differentiable knowledge graphs”, we explain how to integrate entity resolution into a knowledge-graph-based question-answering model, so that it will be performed automatically. This is a potentially labor-saving innovation, as some existing approaches require hand annotation of entities.

And in “Expanding end-to-end question answering on differentiable knowledge graphs with intersection”, we explain how to handle queries that involve multiple entities. In experiments involving two different datasets, our approach improved performance on multi-entity queries by 14% and 19%.

Traditional approaches

In a typical knowledge graph, the nodes represent entities, and the edges between nodes represent relationships between entities. For example, a knowledge graph could contain the fact “Mona Lisa | exhibited at | Louvre Museum”, which links the entities “Mona Lisa” and “Louvre Museum” with the relationship “exhibited at”. Similarly, the graph could link the entities “Louvre Museum” and “Paris” with the relationship “located in”. A model can learn to use the facts in the knowledge graph to answer questions.
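As a rough illustration (not code from either paper), the two facts above can be stored as subject, relation, object triples and chained to answer the Mona Lisa question. The `follow` helper and the variable names are hypothetical, chosen only for this sketch.

```python
# Toy knowledge graph stored as (subject, relation, object) triples.
# The two facts are the ones used as examples in the text.
TRIPLES = [
    ("Mona Lisa", "exhibited at", "Louvre Museum"),
    ("Louvre Museum", "located in", "Paris"),
]


def follow(entities, relation, triples=TRIPLES):
    """Return every object reachable from `entities` via `relation`.

    Hypothetical helper: a hard (non-differentiable) graph lookup.
    """
    return {o for s, r, o in triples if s in entities and r == relation}


# "What city is the Mona Lisa in?" becomes a two-hop path.
museums = follow({"Mona Lisa"}, "exhibited at")
cities = follow(museums, "located in")
print(cities)
```

Here the path of relations is written by hand; the models discussed in the rest of this post have to work out which relations to follow from the question itself.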

Traditional approaches to knowledge-graph-based question answering use a pipeline of models. First, the question goes into a semantic-parsing model, which is trained to predict queries. Queries can be thought of as instructions for what to do in the knowledge graph: for example, “find out where the ‘Mona Lisa’ is exhibited, then look up what city it’s in.”

Next, the question goes into an entity resolution model, which links parts of the sentence, like “Mona Lisa”, to IDs in the knowledge graph, like Q12418.

While this pipeline approach works, it does have some flaws. Each model is trained independently, so it has to be evaluated and updated separately, and each model requires annotations for training, which are time-consuming and expensive to collect.

End-to-end question answering

End-to-end question answering is a way to rectify these flaws. During training, an end-to-end question-answering model is given the question and the answer but no instructions about what to do in the knowledge graph. Instead, the model learns the instructions based on the correct answer.

In 2020, Cohen et al. proposed a way to perform end-to-end question answering using differentiable knowledge graphs, which represent knowledge graphs as tensors and queries as differentiable mathematical operations. This allows for fully differentiable training, so that the answer alone provides a training signal to update all parts of the model.
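To make the idea of a query as a differentiable operation more concrete, here is a minimal sketch in plain Python. It assumes one common formulation of relation following, in which a set of entities is a weight vector and each output weight is a sum of products of entity and relation weights. The toy graph, the `follow` function, and the weights are assumptions for illustration, not the implementation used by Cohen et al. or in our papers.

```python
ENTITIES = ["Mona Lisa", "Louvre Museum", "Paris"]
RELATIONS = ["exhibited at", "located in"]

# Each fact is (subject index, relation index, object index).
TRIPLES = [(0, 0, 1), (1, 1, 2)]


def follow(entity_weights, relation_weights):
    """Soft relation following (illustrative assumption).

    Weight flows from each subject to its object, scaled by how strongly
    the model predicts the connecting relation. The output is a sum of
    products, so it is differentiable in both inputs.
    """
    out = [0.0] * len(ENTITIES)
    for s, r, o in TRIPLES:
        out[o] += entity_weights[s] * relation_weights[r]
    return out


start = [1.0, 0.0, 0.0]           # all weight on "Mona Lisa"
hop1 = follow(start, [0.9, 0.1])  # model mostly predicts "exhibited at"
hop2 = follow(hop1, [0.2, 0.8])   # then mostly predicts "located in"
answer = ENTITIES[hop2.index(max(hop2))]
print(answer, hop2)
```

Because the final scores depend smoothly on the relation weights, an error measured on the answer alone can be propagated back to whatever component produced those weights. In a real system the vectors would be tensors in an automatic-differentiation framework rather than Python lists.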

“End-to-end entity resolution and question answering using differentiable knowledge graphs”

In our first paper, we extend end-to-end models to include the training of an entity resolution component. Previous work left the task of entity resolution (linking “Mona Lisa” to Q12418) out of the scope of the model, relying instead on a separate entity resolution model or hand annotation of entities.

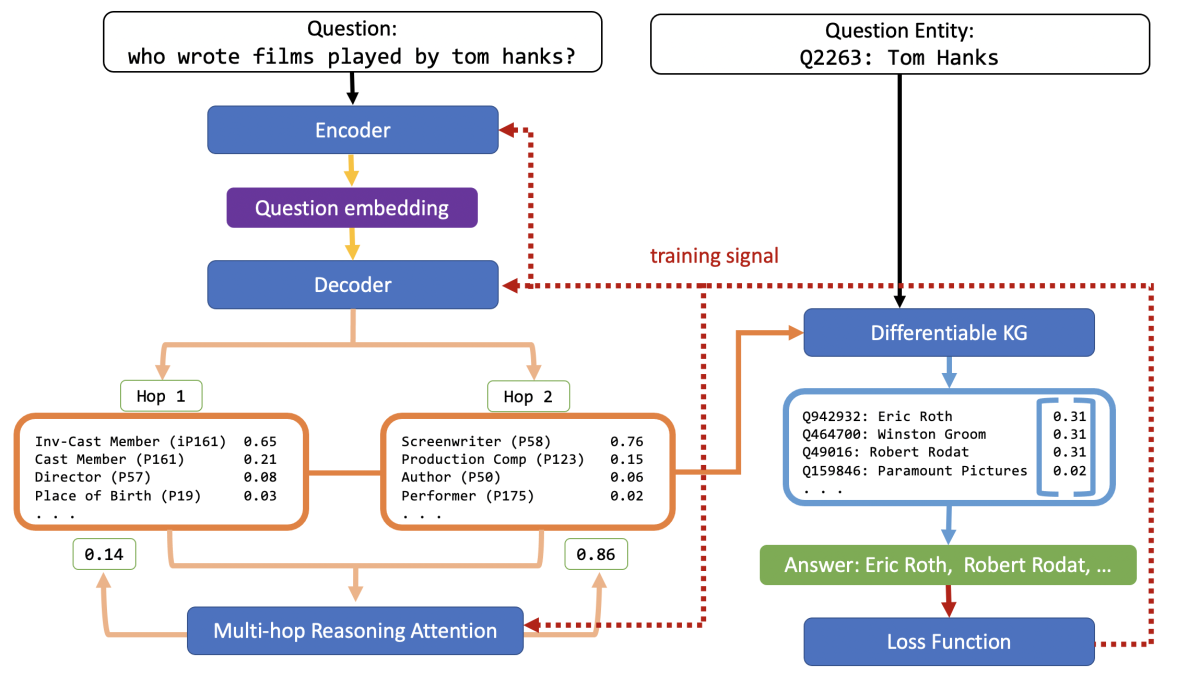

We propose a way to train entity resolution as part of the question-answering model. To do this, we start with a baseline model similar to the implementation by Cohen et al., which has an encoder-decoder structure and an attention mechanism that takes in a question and returns predicted answers with probabilities.

We add entity resolution to this baseline model by first introducing a span detection component. This identifies all the possible parts of the sentence (spans) that could refer to an entity. For example, in the question “Who wrote films starring Tom Hanks?”, there are multiple spans, such as “films” or “Tom”, and we want the model to learn to give a higher score to the correct span, “Tom Hanks”.

Then for each of the identified spans, our model ranks all the possible entities in the knowledge graph that the span could refer to. For example, “Tom Hanks” is also the name of a seismologist and a theologian, but we want the model to learn to give the actor a higher score.
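The two steps can be sketched as follows. This is only an illustration of the candidate-generation side: the `candidate_spans` function, the `max_len` limit, and the `ALIASES` table are hypothetical, and the scoring of spans and entities, which the model learns, is deliberately left out.

```python
def candidate_spans(question, max_len=3):
    """List every contiguous run of up to `max_len` tokens in the question."""
    tokens = question.rstrip("?").split()
    return [
        " ".join(tokens[i:j])
        for i in range(len(tokens))
        for j in range(i + 1, min(i + max_len, len(tokens)) + 1)
    ]


# Hypothetical alias table mapping a surface form to candidate entities.
ALIASES = {
    "Tom Hanks": [
        "Tom Hanks (actor)",
        "Tom Hanks (seismologist)",
        "Tom Hanks (theologian)",
    ],
}

spans = candidate_spans("Who wrote films starring Tom Hanks?")
# Keep only spans that match at least one entity in the toy alias table.
candidates = {span: ALIASES[span] for span in spans if span in ALIASES}
print(spans)
print(candidates)
```

In this toy version, “films”, “Tom”, and “Tom Hanks” all appear among the spans; a trained model would then need to score “Tom Hanks” above the others and rank the actor above the other two entities with the same name.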

Span detection and entity resolution happen jointly in a new entity resolution component, which returns possible entities with scores. Finding the entities in the knowledge graph and following the paths from the inference component yields the predicted answers. We believe that this joint modeling is responsible for our method’s increase in efficiency.

In our experiments, we use two English question-answering datasets. We find that although our entity resolution (ER) and end-to-end (E2E) models perform slightly worse than the baseline, they do come very close, with differences of about 7% and 5% on the two datasets.

This is an impressive result, since the baseline model uses hand-annotated entities from the datasets, while our model is learning entity resolution jointly with question answering. With these findings, we demonstrate the feasibility of learning to perform entity resolution and multihop inference in a single end-to-end model.

“Expanding end-to-end question answering on differentiable knowledge graphs with intersection”

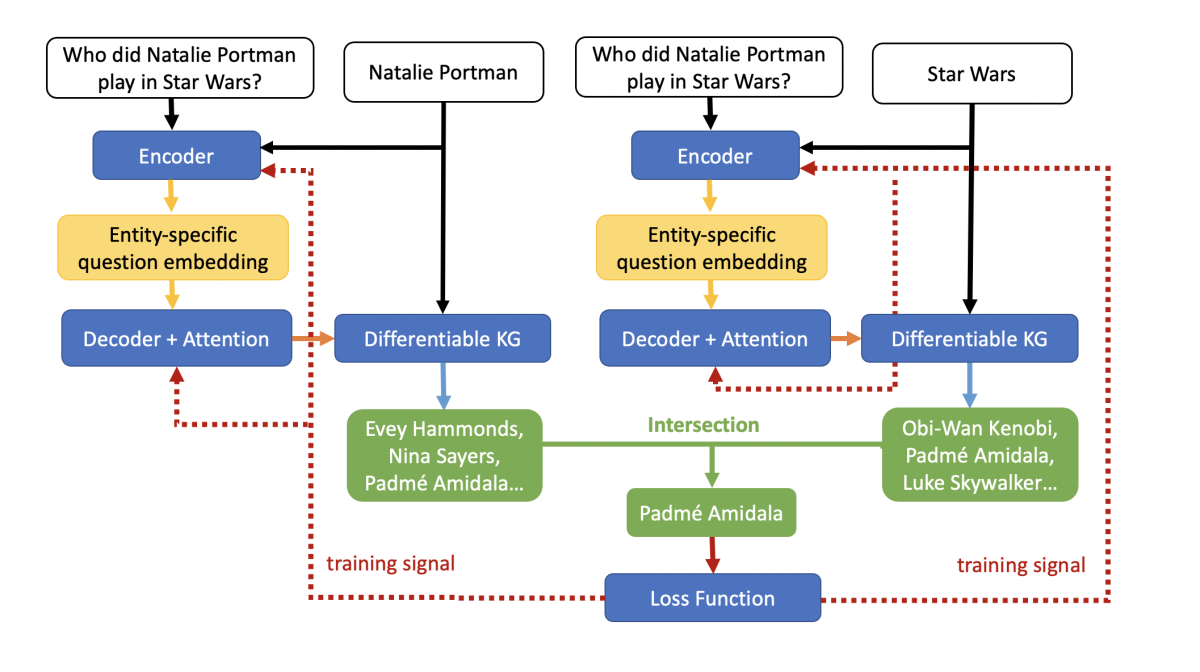

In our second paper, we extend end-to-end models to handle more complex questions with multiple entities. Take for example the question “Who did Natalie Portman play in Star Wars?”. This question has two entities, Natalie Portman and Star Wars.

Previous end-to-end models were trained to follow paths originating with one entity in a knowledge graph. However, this is not enough to answer questions with multiple entities. If we started at “Natalie Portman” and found all the roles she played, we would get roles that were not in Star Wars. If we started at Star Wars and found all the characters, we would get characters that Natalie Portman didn’t play. What we need is an intersection of the characters played by Natalie Portman and the characters in Star Wars.

To handle questions with multiple entities, we expand an end-to-end model with a new operation: intersection. For each entity in the question, the model follows paths from the entity independently and arrives at an intermediate answer. Then the model performs intersection, which we implemented as the element-wise minimum of two vectors, to identify which entities the intermediate answers have in common. Only entities that appear in all intermediate answers are returned in the final answer.
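The element-wise minimum is simple enough to show directly. In the sketch below, the entity list and the intermediate scores are made-up values for the Natalie Portman example, and `intersect` is a hypothetical helper name; only the use of the element-wise minimum comes from the paper.

```python
ENTITIES = ["Padmé Amidala", "Nina Sayers", "Luke Skywalker"]

# Hypothetical intermediate answers: one score per entity in ENTITIES.
from_portman = [0.9, 0.8, 0.0]    # characters played by Natalie Portman
from_star_wars = [0.7, 0.0, 0.9]  # characters in Star Wars


def intersect(a, b):
    """Element-wise minimum of two score vectors of equal length."""
    return [min(x, y) for x, y in zip(a, b)]


scores = intersect(from_portman, from_star_wars)
best = ENTITIES[scores.index(max(scores))]
print(scores, best)
```

An entity keeps a high score only if both intermediate answers score it highly, which is why “Nina Sayers” (played by Portman, but not in Star Wars) and “Luke Skywalker” (in Star Wars, but not played by Portman) both drop to zero in this toy example.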

In our experiments, we use two English question-answering datasets. Our results show that introducing intersection improves performance over the baseline by 3.7% on one and 8.9% on the other.

More importantly, we see that improved performance comes from better handling of questions with multiple entities, where the intersection model surpasses the baseline by over 14% on one dataset and by 19% on the other.

In future work, we plan to continue developing end-to-end models by improving entity resolution, so it’s competitive with hand annotation of entities; integrating entity resolution with intersection; and learning to handle more-complex operations, such as maximums/minimums and counts.