Sound detection is a popular application of today’s smart speakers. Alexa customers who activate Alexa Guard when they leave the house, for instance, receive notifications if their Alexa-enabled devices detect sounds such as glass breaking or smoke detectors going off while they’re away.

Sound detection — or, technically, acoustic-event detection (AED) — needs to run on-device: a home security application, for example, can’t miss a smoke alarm because of a momentary loss of Internet connectivity.

A popular way to fit AED models on-device is to use knowledge distillation, in which a machine learning model with a small memory footprint is trained to reproduce the outputs of a more powerful but also much larger model.
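
As a rough illustration of the idea, here is a minimal sketch of a distillation loss in NumPy. It assumes the classic formulation in which the student is trained to match the teacher's temperature-softened softmax outputs; the article does not say which output loss our system uses, and the names `distillation_loss` and `temperature` are hypothetical.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    # Temperature > 1 softens the distribution (assumed, classic distillation setup).
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    # KL divergence from the teacher's softened outputs to the student's,
    # averaged over the examples in the batch.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    eps = 1e-12  # avoids log(0)
    kl = np.sum(p_teacher * (np.log(p_teacher + eps) - np.log(p_student + eps)), axis=-1)
    return float(kl.mean())
```

The loss is zero when the student reproduces the teacher's outputs exactly and grows as the two distributions diverge.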

At this year’s Interspeech, we presented a new approach to knowledge distillation for AED systems. In tests, we compared our model to both a baseline model with no knowledge distillation and a model using a state-of-the-art knowledge distillation technique. On a standard metric called area under the precision-recall curve (AUPRC), our model improved on the earlier knowledge distillation model by 27% to 122%, relative to the baseline.

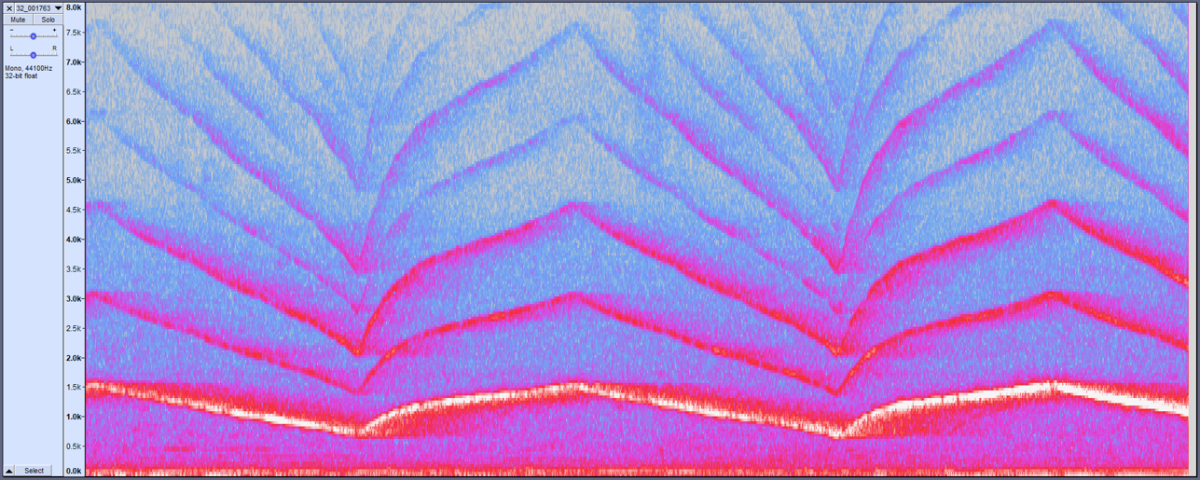

Our technique works by exploiting repetitions in the acoustic signal, which are common in the types of sounds that AED systems are typically trained to detect: the sounds of smoke detector alarms or barking dogs, for instance, have more-or-less recurrent acoustic patterns.

While our system did deliver its greatest improvement over baseline on such repetitive signals, it also improved performance on loud, singular sounds such as engine and machinery impacts.

Deep neural networks, like the ones used in most AED models, are arranged into layers: input data is fed to the bottom layer, which processes it and passes the results to the next layer, and so on up the network.

Past work has improved knowledge distillation by using a technique called similarity-preserving knowledge distillation, which relies on similarities between the outputs of different network layers on training examples that share a label.

For instance, sounds of breaking glass have certain acoustic characteristics not shared by sounds of barking dogs, and the layers’ outputs should reflect that. With similarity-preserving knowledge distillation, similarities inferred by the teacher model help guide the training of the student model.

We vary this approach to enforce similarities between the outputs of network layers for the same training example. That is, the outputs of the network layers should reflect the repetitions in the input signal. We thus call our approach intra-utterance similarity-preserving (IUSP) knowledge distillation.

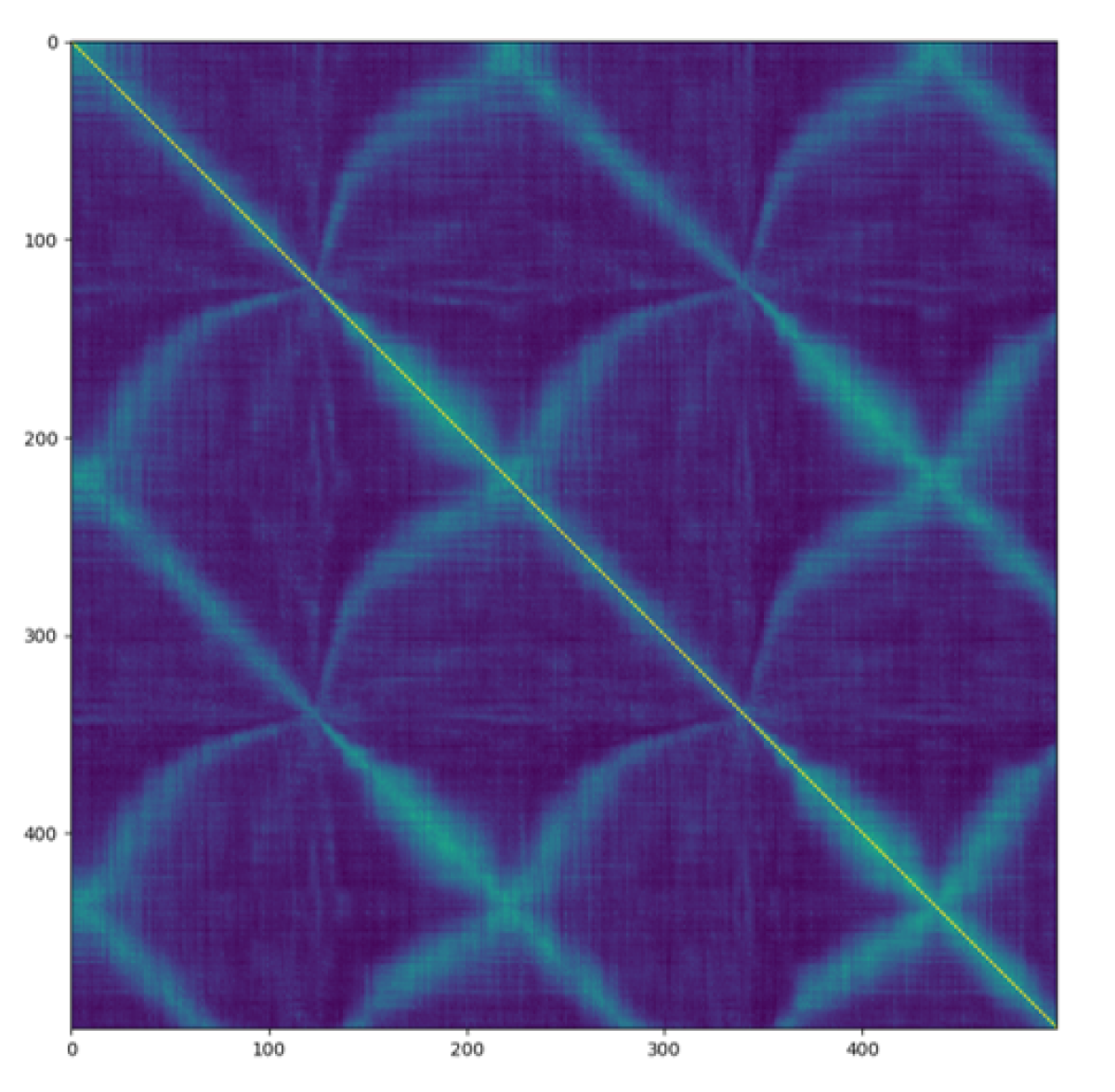

We can enforce similarity between any layers we choose in the teacher network (the larger network) and the student network (the smaller network). For a given layer of the teacher model, we produce a matrix that maps its outputs for successive time steps of the input signal against themselves. The values in the matrix cells indicate the correlation between the layer’s outputs at different time steps.
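
A minimal sketch of such a matrix, assuming the layer's output is an array with one row per time step and that "correlation" is measured as cosine similarity between rows. Both the layout and the similarity measure are assumptions made for illustration, and `self_similarity` is a hypothetical name.

```python
import numpy as np

def self_similarity(features):
    # features: array of shape (time_steps, feature_dim), one row per time step.
    features = np.asarray(features, dtype=float)
    # Normalize each time step's output to unit length (assumed normalization).
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-12)
    # Entry (i, j) is the cosine similarity between time steps i and j.
    return unit @ unit.T

# A toy "repetitive" signal whose layer outputs alternate with period 2.
toy = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]])
matrix = self_similarity(toy)
```

In the toy example, time steps 0 and 2 have identical outputs, so their matrix entry is 1, while steps 0 and 1 are orthogonal and get 0. Repetition in the input shows up as off-diagonal stripes of high similarity.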

During training, we evaluate the student model not only according to how well its final output matches that of the teacher model, but also according to how well the self-correlation matrices of its normalized outputs match the teacher’s.
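
The two-part training objective can be sketched as follows. This is an illustration under stated assumptions, not our implementation: both terms are taken to be mean squared errors, the self-correlation matrices use cosine similarity, the two layers are assumed to cover the same number of time steps, and `iusp_style_loss` and `weight` are hypothetical names.

```python
import numpy as np

def self_similarity(features):
    # Cosine similarity between every pair of time steps (rows).
    features = np.asarray(features, dtype=float)
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-12)
    return unit @ unit.T

def iusp_style_loss(student_out, teacher_out, student_feats, teacher_feats, weight=1.0):
    # Term 1: how well the student's final output matches the teacher's.
    output_term = np.mean((np.asarray(student_out) - np.asarray(teacher_out)) ** 2)
    # Term 2: how well the student's self-similarity matrix matches the teacher's.
    g_student = self_similarity(student_feats)
    g_teacher = self_similarity(teacher_feats)
    similarity_term = np.mean((g_student - g_teacher) ** 2)
    # `weight` balances the two terms (hypothetical hyperparameter).
    return float(output_term + weight * similarity_term)
```

Because each matrix has one row and one column per time step, this sketch works even when the student and teacher layers have different feature dimensions, as long as their time axes line up.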

Since the goal of knowledge distillation is to shrink the size of the machine learning model, the layers and intermediate features of the student model are often smaller — they have fewer processing nodes — than those of the teacher model.

In that case, we use bilinear interpolation to make the student model’s self-correlation matrices the same size as the teacher’s. That is, we insert additional rows and columns into the matrix, and the value of each added cell is an interpolation between the values of the adjacent cells in the horizontal and vertical directions.
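
Here is a small NumPy sketch of bilinear resizing for a 2-D matrix. It assumes a corner-aligned sampling grid, in which the first and last rows and columns of the input map exactly onto those of the output; the article does not specify the convention, and `bilinear_resize` is a hypothetical helper.

```python
import numpy as np

def bilinear_resize(matrix, out_rows, out_cols):
    matrix = np.asarray(matrix, dtype=float)
    in_rows, in_cols = matrix.shape
    # Fractional positions in the input grid for each output row and column.
    row_pos = np.linspace(0, in_rows - 1, out_rows)
    col_pos = np.linspace(0, in_cols - 1, out_cols)
    # Indices of the neighboring input cells on either side of each position.
    r0 = np.floor(row_pos).astype(int)
    r1 = np.minimum(r0 + 1, in_rows - 1)
    c0 = np.floor(col_pos).astype(int)
    c1 = np.minimum(c0 + 1, in_cols - 1)
    # Interpolation weights along each direction.
    wr = (row_pos - r0)[:, None]
    wc = (col_pos - c0)[None, :]
    # Interpolate horizontally on the upper and lower rows, then vertically.
    top = matrix[r0][:, c0] * (1 - wc) + matrix[r0][:, c1] * wc
    bottom = matrix[r1][:, c0] * (1 - wc) + matrix[r1][:, c1] * wc
    return top * (1 - wr) + bottom * wr

small = np.array([[0.0, 2.0], [4.0, 6.0]])
resized = bilinear_resize(small, 3, 3)
# resized is [[0, 1, 2], [2, 3, 4], [4, 5, 6]]
```

Each added cell is a weighted average of its nearest original neighbors, so the original corner values are preserved and the new values vary smoothly between them.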

In our experiments, we used a standard benchmark data set that features eight classes of sound, including alarm sounds, dogs barking, impact sounds, and human speech.

As a baseline model, we used a standard AED network with no knowledge distillation. To assess our model, we also compared it to a model trained using similarity-preserving knowledge distillation.

We measured the models’ performance using area under the precision-recall curve, which summarizes the trade-off between precision and recall, that is, between false positives and false negatives. We experimented with student models of four different sizes and assessed the knowledge distillation models according to their degree of improvement over the baseline model.
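
For readers unfamiliar with the metric, the sketch below computes one common estimate of the area under the precision-recall curve, usually called average precision. It assumes binary labels for a single sound class, at least one positive example, and no tied scores; whether our experiments used this exact estimator is not stated here, and `average_precision` is a hypothetical name.

```python
import numpy as np

def average_precision(labels, scores):
    labels = np.asarray(labels, dtype=float)
    scores = np.asarray(scores, dtype=float)
    # Rank the examples from the highest detection score to the lowest.
    order = np.argsort(-scores, kind="stable")
    hits = labels[order]
    # Precision and recall after accepting the top-k examples, for every k.
    true_positives = np.cumsum(hits)
    precision = true_positives / np.arange(1, len(hits) + 1)
    recall = true_positives / hits.sum()
    # Sum precision weighted by each increase in recall (step-wise area).
    recall_steps = np.diff(np.concatenate(([0.0], recall)))
    return float(np.sum(recall_steps * precision))

# Four clips: 1 = event present, 0 = event absent.
ap = average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])
```

A detector that ranks every positive clip above every negative one scores 1.0; ranking a negative clip above a positive one, as in the toy example, lowers the score.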

Compared to the other knowledge distillation model, our model’s biggest improvement, a 122% increase in relative AUPRC, came with the smallest student model. The smallest improvement, 27% relative, came with the largest student model. Since the purpose of knowledge distillation is to produce a small model, the fact that our approach helps most at the smallest size suggests that it could be of use in real-world settings.