Time series forecasting is often hierarchical: a utility company might, for instance, want to forecast energy consumption or supply at regional, state, and national levels; a retailer may want to forecast sales according to increasingly general product features, such as color, model, brand, product category, and so on.

Previously, the state-of-the-art approach in hierarchical time series forecasting was to learn a separate, local model for each time series in the hierarchy and then apply some postprocessing to reconcile the different levels — to ensure that the sales figure for a certain brand of camera is the sum of the sales figures for the different camera models within that brand, and so on.

This approach has two main drawbacks. It doesn’t allow different levels of the hierarchy to benefit from each other’s forecasts: at lower levels, historical data often has characteristics such as sparsity or burstiness that can be “aggregated away” at higher levels. And the reconciliation procedure, which is geared to the average case, can flatten out nonlinearities that are highly predictive in particular cases.

In a paper we’re presenting at the International Conference on Machine Learning (ICML), we describe a new approach to hierarchical time series forecasting that uses a single machine learning model, trained end to end, to simultaneously predict outputs at every level of the hierarchy and to reconcile them. Unlike all but one approach in the literature, our approach also produces probabilistic forecasts rather than single-value (point) forecasts, and probabilistic forecasts are crucial for intelligent downstream decision making.

In tests, we compared our approach to nine previous models on five different datasets. On four of the datasets, our model outperformed all nine baselines, with reductions in error rate ranging from 6% to 19% relative to the second-best performer (which varied from case to case).

One baseline model had an 8% lower error rate than ours on one dataset, but that same baseline’s methodology means that it didn’t work on another dataset at all. And on the other three datasets, our model’s advantage over that baseline ranged from 13% to 44%.

Ensuring trainability

Our model has two main components. The first is a neural network that takes a hierarchical time series as input and outputs a probabilistic forecast for each level of the hierarchy. Probabilistic forecasts enable more intelligent decision making because they allow us to minimize a notion of expected future costs.

The second component of our model selects a sample from that distribution and ensures its coherence — that is, it ensures that the values at each level of the hierarchy are sums of the values of the levels below.

One technical challenge in designing an end-to-end hierarchical forecasting model is ensuring trainability via standard methods. Stochastic gradient descent — the learning algorithm for most neural networks — requires differentiable functions; however, in our model, the reconciliation step requires sampling from a probability distribution. This is not ordinarily a differentiable function, but we make it one by using the reparameterization trick.

The distribution output by our model’s first component is characterized by a set of parameters; in a normal (Gaussian) distribution, those parameters are mean and variance. Instead of sampling directly from that distribution, we sample from the standard distribution: in the Gaussian case, that’s the distribution with a mean of 0 and a variance of 1.

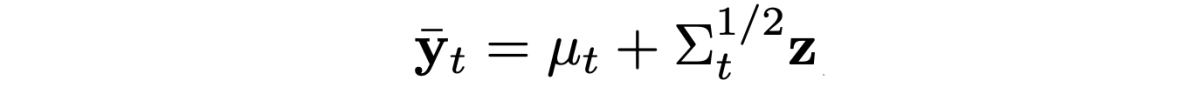

We can convert a sample from the standard distribution into a sample from the learned distribution with a function whose coefficients are the parameters of the learned distribution. Here’s the equation for the Gaussian case, where m and S are the mean and variance, respectively, of the learned distribution, z is the sample from the standard distribution, and x is the resulting sample from the learned distribution:

$$x = m + S^{1/2} z$$

With this trick, we move the randomness (the sampling procedure) outside the neural network; given z, the above function is deterministic and differentiable. This allows us to incorporate the sampling step into our end-to-end network. While we’ve used the Gaussian distribution as an example, the reparameterization trick works for a wider class of distributions.
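To make the Gaussian case concrete, here is a minimal NumPy sketch. It is an illustration only, not the code from the paper: it assumes a scalar mean and variance, and the function name `reparameterized_sample` and the parameter values are hypothetical.

```python
import numpy as np

def reparameterized_sample(mean, variance, z):
    """Map standard-normal draws z to draws from N(mean, variance).

    For fixed z this is a deterministic, differentiable function
    of mean and variance.
    """
    return mean + np.sqrt(variance) * z

# The randomness lives here, outside the function being differentiated.
rng = np.random.default_rng(seed=0)
z = rng.standard_normal(100_000)

# Hypothetical learned parameters (illustrative values only).
m, S = 3.0, 4.0
samples = reparameterized_sample(m, S, z)

# For fixed z the derivatives with respect to the parameters are explicit:
# d(sample)/d(mean) = 1 and d(sample)/d(variance) = z / (2 * sqrt(variance)).
grad_mean = np.ones_like(z)
grad_variance = z / (2.0 * np.sqrt(S))
```

Because the gradients are ordinary functions of z, an automatic-differentiation framework can backpropagate through the sampling step just as it does through any other layer.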

We incorporate the reconciliation step into our network by recasting it as an optimization problem, which we solve as a subroutine of our model’s overall parameter learning. In our model, we represent the hierarchical relationship between time series as a matrix.
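As a rough illustration of what such a matrix can look like, the sketch below encodes a toy hierarchy in which one brand total is the sum of two camera models. The layout (one row per series, one column per bottom-level series, bottom-level series listed last) is an assumption chosen for this example, and the names `hierarchy` and `is_coherent` are hypothetical.

```python
import numpy as np

# Toy hierarchy: brand_total = model_a + model_b.
# Rows: every series (brand_total, model_a, model_b).
# Columns: bottom-level series (model_a, model_b).
hierarchy = np.array([
    [1.0, 1.0],   # brand_total sums both models
    [1.0, 0.0],   # model_a
    [0.0, 1.0],   # model_b
])

def is_coherent(y, hierarchy, tol=1e-9):
    """Return True if every aggregate in y equals the sum of its children.

    Assumes the bottom-level values are the last entries of y, so that
    a coherent y can be rebuilt as hierarchy @ bottom.
    """
    n_bottom = hierarchy.shape[1]
    bottom = y[-n_bottom:]
    return bool(np.allclose(hierarchy @ bottom, y, atol=tol))

coherent = np.array([50.0, 30.0, 20.0])     # 50 = 30 + 20
incoherent = np.array([55.0, 30.0, 20.0])   # 55 != 30 + 20

print(is_coherent(coherent, hierarchy))     # True
print(is_coherent(incoherent, hierarchy))   # False
```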

In the space of possible samples from the learned distribution, the hierarchy matrix defines a subspace of samples that meet the hierarchical constraint. After transforming our standard-distribution sample into a sample from our learned distribution, we project it back down to the subspace defined by the hierarchy matrix (see animation, above).

Enforcing the coherence constraint thus becomes a matter of minimizing the distance between the transformed sample and its projection, an optimization problem that we can readily solve as part of the overall parameter learning.
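One way to picture the projection, assuming plain Euclidean distance and a small toy hierarchy, is as multiplication by a fixed matrix. The sketch below is illustrative rather than the paper's implementation; `hierarchy`, `projection_matrix`, and the sample values are hypothetical.

```python
import numpy as np

# Toy hierarchy: total = a + b (rows: total, a, b; columns: a, b).
hierarchy = np.array([
    [1.0, 1.0],
    [1.0, 0.0],
    [0.0, 1.0],
])

def projection_matrix(hierarchy):
    """Orthogonal projector onto the column space of the hierarchy matrix.

    For a full-column-rank matrix H, pinv(H) = (H^T H)^{-1} H^T, so
    H @ pinv(H) maps any vector to the closest coherent vector in
    Euclidean distance.
    """
    return hierarchy @ np.linalg.pinv(hierarchy)

P = projection_matrix(hierarchy)

# A transformed sample that violates the constraint: 55 != 30 + 20.
raw_sample = np.array([55.0, 30.0, 20.0])
coherent_sample = P @ raw_sample

print(np.round(coherent_sample, 2))   # [53.33 31.67 21.67]
```

Since `P` depends only on the hierarchy, it can be computed once, and the projection itself is a linear (hence differentiable) operation on the sample.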

In principle, enforcing coherence could lower the accuracy of the model’s predictions. But in practice, the coherence constraint appears to improve the model’s accuracy: it enforces the sharing of information across the hierarchy, and forecasting at higher levels of the hierarchy is often easier. Because of this sharing, we see consistent improvement in accuracy at the lowest level of the hierarchy.

In our experiments, we used a DeepVAR network for time series prediction and solved the reconciliation problem in closed form. But our approach is more general and can be used with many state-of-the-art neural forecasting networks, prediction distributions, projection methods, or loss functions, making it adaptable to a wide range of use cases.