Developing a new Alexa skill typically means training a machine-learning system with annotated data, and the skill’s ability to “understand” natural-language requests is limited by the expressivity of the semantic representation used to do the annotation. Until now, the representations used for this annotation have been fairly simple, which has limited Alexa to relatively simple requests.

At the Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT) that begins this weekend, we will present a new, more sophisticated semantic-representation language that we call the Alexa Meaning Representation Language. Data annotated in the language should enable Alexa skills to handle much more complex conversational interactions and to process simple interactions more accurately. This new representation now powers the library of built-in “intents” — actions that skills can perform — available to skill developers to help them bootstrap their natural-language-understanding (NLU) systems.

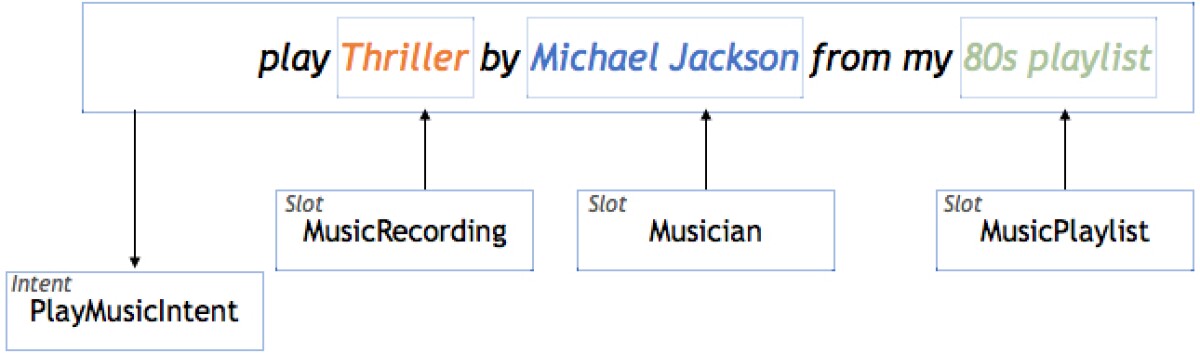

Traditionally, data used to train Alexa skills has been annotated using the flat semantic representation of “domain,” “intent,” and “slot.” “Domain” describes the class of skill that the utterance is meant to invoke, such as MusicApp or HomeAutomation. (Each domain may have multiple associated skills. For instance, the skills ClassicMusic and PopMusic might both fall under the MusicApp domain.) “Intent” describes the action that the skill is being asked to perform, such as PlayTune or ActivateAppliance. And “slot” describes the entities and classes of entities on which the action is to operate, such as the entity class “song” and the entities “Thriller” and “Michael Jackson” in the command “Play ‘Thriller’ by Michael Jackson.”
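To make the flat scheme concrete, here is a minimal sketch of how one utterance might be stored under it. The `FlatAnnotation` class and the slot names `SongName` and `ArtistName` are hypothetical, chosen for illustration; they are not taken from any Alexa API. The point to notice is structural: every slot attaches directly to the single intent, so the annotation cannot express how one entity relates to another.

```python
from dataclasses import dataclass, field


@dataclass
class FlatAnnotation:
    """Hypothetical flat domain/intent/slot annotation of one utterance."""
    utterance: str
    domain: str   # class of skill, e.g. "MusicApp"
    intent: str   # action to perform, e.g. "PlayTune"
    slots: dict[str, str] = field(default_factory=dict)  # slot name -> text span


example = FlatAnnotation(
    utterance="Play 'Thriller' by Michael Jackson",
    domain="MusicApp",
    intent="PlayTune",
    # Slot names are illustrative assumptions, not a real slot inventory.
    slots={"SongName": "Thriller", "ArtistName": "Michael Jackson"},
)

# Each slot hangs directly off the one intent; there is no way to say
# that "Michael Jackson" is the artist *of* "Thriller" specifically.
for name, value in example.slots.items():
    print(f"{example.intent}.{name} = {value!r}")
```

Running it prints `PlayTune.SongName = 'Thriller'` and `PlayTune.ArtistName = 'Michael Jackson'`: two independent key/value pairs rather than a relation between them.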

Alexa’s popularity attests to the success of this relatively simple annotation scheme. But to realize the goal of seamless conversational interaction, Alexa skills must be able to both interpret more complex linguistic structures and distinguish between competing interpretations of simple ones.

For instance, Alexa should, ideally, be able to handle utterances like “Alexa, find me a restaurant near the Mariners game,” which spans two domains, local businesses and sporting events. Conversely, Alexa should be able to resolve utterances with similar structures into different domains, as occasion warrants — for instance, “Alexa, order me a cab,” versus “Alexa, order me an Echo Dot.” With the existing, flat annotation scheme, training a machine-learning system to better handle one of these instances will weaken its ability to handle the other. Distinguishing the two use cases in the training data would require such overspecification of intents and slots that the system would lose the ability to exploit the general form of the sentence.

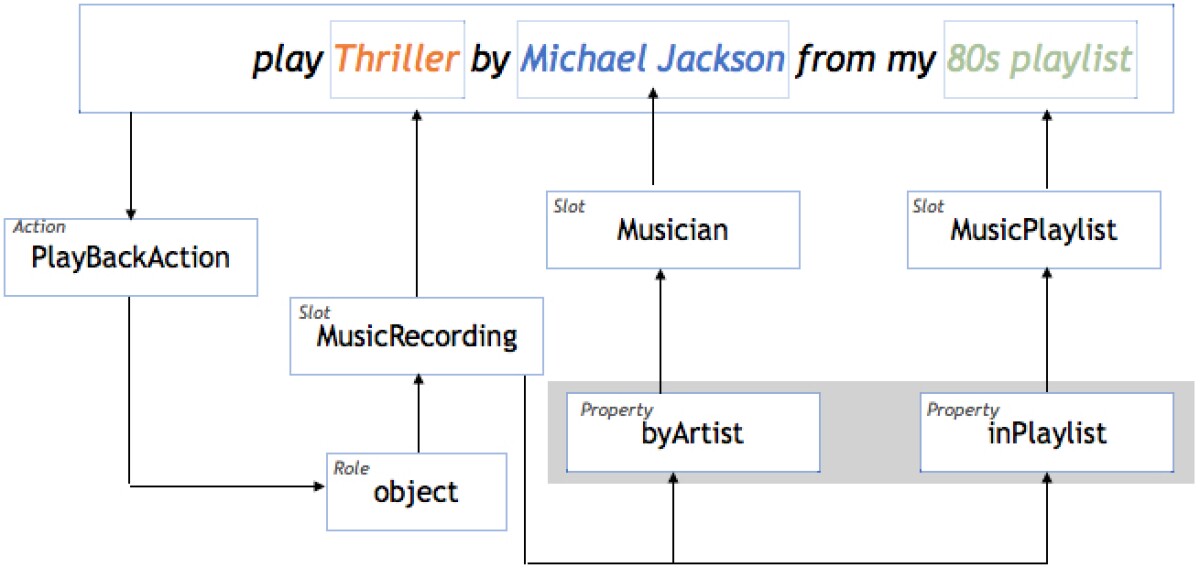

The Alexa Meaning Representation Language (AMRL) addresses these problems. We build on previous work on graph-based semantic representations and adapt them to conversational systems. Our solution consists of two key components:

- A large hierarchical ontology of types, properties, roles, actions, and operators: actions are predicates that determine what the agent should do; roles express the arguments to an action; types categorize textual mentions; and properties are relations between type mentions

- A set of conventions that map natural language to a graph-based, domain- and language-agnostic representation suited for conversational agents such as Alexa

AMRL leverages the type hierarchy by allowing fine-grained type and action annotations. When more than one fine-grained type is possible — as in the “order me a cab/Dot” example — the annotation backs off to a coarser-grained type or action in the hierarchy. For example, in the utterance “play ‘Thriller’”, both “MusicRecording” and “MusicVideo” are possible as types, so the annotation guideline prefers the closest common ancestor “MusicCreativeWork”.
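The back-off rule can be sketched as a closest-common-ancestor lookup in a type tree. In this sketch the `PARENT` table is a hypothetical fragment: only `MusicRecording`, `MusicVideo`, and their common ancestor `MusicCreativeWork` come from the example above, while `CreativeWork` and `Thing` are assumed higher levels added so the tree has a root. The function name is likewise illustrative, not part of AMRL.

```python
# Hypothetical fragment of a type hierarchy: child type -> parent type.
PARENT = {
    "MusicRecording": "MusicCreativeWork",
    "MusicVideo": "MusicCreativeWork",
    "MusicCreativeWork": "CreativeWork",  # assumed intermediate level
    "CreativeWork": "Thing",              # assumed root
}


def ancestors(t: str) -> list[str]:
    """Return t followed by its ancestors, nearest first, up to the root."""
    chain = [t]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def closest_common_ancestor(candidates: list[str]) -> str:
    """Back off to the most specific type that subsumes every candidate.

    With a single candidate, that candidate itself is returned, so
    unambiguous mentions keep their fine-grained type.
    """
    first, *rest = candidates
    common = set(ancestors(first))
    for t in rest:
        common &= set(ancestors(t))
    # ancestors(first) is ordered nearest-first, so the first hit is the closest.
    for t in ancestors(first):
        if t in common:
            return t
    raise ValueError("candidates share no ancestor")


print(closest_common_ancestor(["MusicRecording", "MusicVideo"]))  # MusicCreativeWork
```

Walking up from the first candidate and stopping at the first shared type is what makes the result the *closest* common ancestor rather than just any shared one such as the root.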

A graph is a mathematical structure consisting of nodes — typically depicted as circles — and edges — typically depicted as lines connecting the nodes. In AMRL, nodes are types and edges are properties. So, for instance, the node “MusicRecording” would be connected to the node “Musician” by the edge “byArtist”.
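As a rough illustration, the graph for “Play ‘Thriller’ by Michael Jackson” can be held as typed nodes plus property-labelled edges. The `Node` and `Edge` classes, the node ids `n1`/`n2`, and the `neighbors` helper are hypothetical; the sketch also leaves out the action and role annotations that a full AMRL graph would carry, and shows only the type nodes and the `byArtist` property described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """A typed mention, e.g. the text "Thriller" typed as MusicRecording."""
    id: str
    type: str
    text: str


@dataclass(frozen=True)
class Edge:
    """A property linking a source node to a target node, e.g. byArtist."""
    source: str
    prop: str
    target: str


nodes = {
    "n1": Node("n1", "MusicRecording", "Thriller"),
    "n2": Node("n2", "Musician", "Michael Jackson"),
}
edges = [Edge("n1", "byArtist", "n2")]


def neighbors(node_id: str, prop: str) -> list[Node]:
    """Follow every outgoing edge labelled `prop` from `node_id`."""
    return [nodes[e.target] for e in edges if e.source == node_id and e.prop == prop]


for e in edges:
    s, t = nodes[e.source], nodes[e.target]
    print(f"{s.type}({s.text!r}) --{e.prop}--> {t.type}({t.text!r})")
```

Unlike the flat slot dictionary, the edge states explicitly that the musician is the artist of that particular recording, and the same node and edge vocabulary can be reused across domains.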

Our paper presents detailed explanations and use cases for handling more complex cross-domain linguistic phenomena: conjunctions, disjunctions, and negations; anaphora (words such as “that” or “here” that refer back to something mentioned earlier); multiple intents; and conditional statements. We hope these design decisions about how to annotate short texts in conversational systems will spur further research into semantic meaning representation for action-oriented systems.

Paper: "The Alexa Meaning Representation Language"

Acknowledgements: Thomas Kollar, Danielle Berry, Lauren Stuart, Karolina Owczarzak, Tagyoung Chung, Michael Kayser, Bradford Snow, Spyros Matsoukas