This blog post is also available in Spanish.

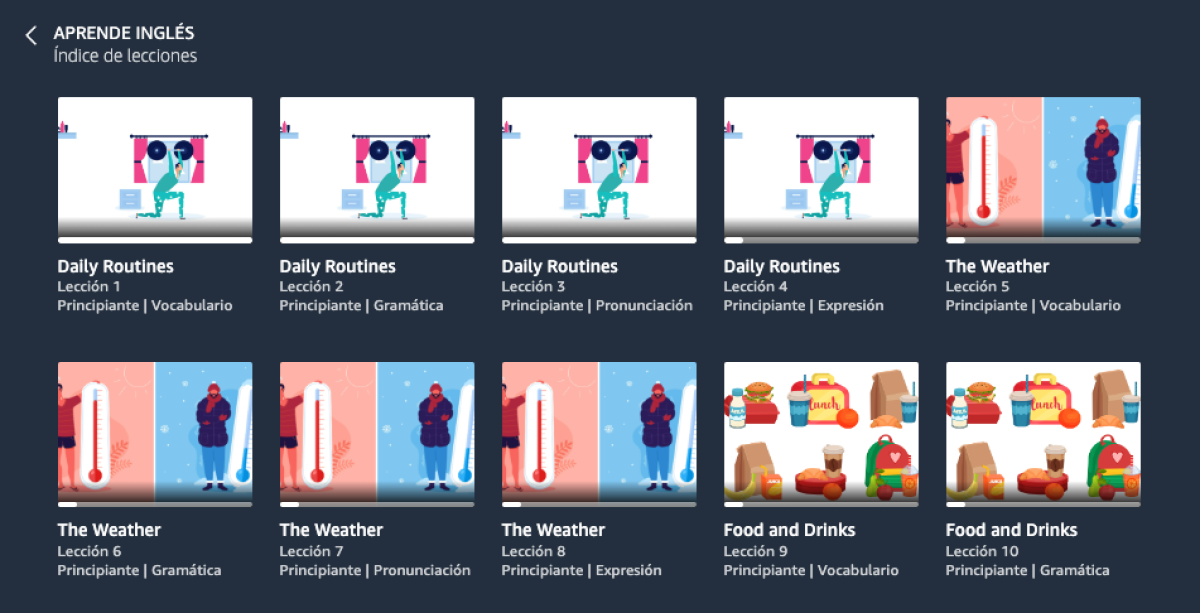

In January 2023, Alexa launched a language-learning experience in Spain that helps Spanish speakers learn beginner-level English. The experience was developed in collaboration with Vaughan, the leading English-language-learning provider in Spain, and it aimed to provide an immersive English-learning program, with particular focus on pronunciation evaluation.

We are now expanding this offering to Mexico and the Spanish-speaking population in the US and will be adding more languages in the future. The language-learning experience includes structured lessons on vocabulary, grammar, expression, and pronunciation, with practice exercises and quizzes. To try it, set your device language to Spanish and tell Alexa “Quiero aprender inglés” (“I want to learn English”).

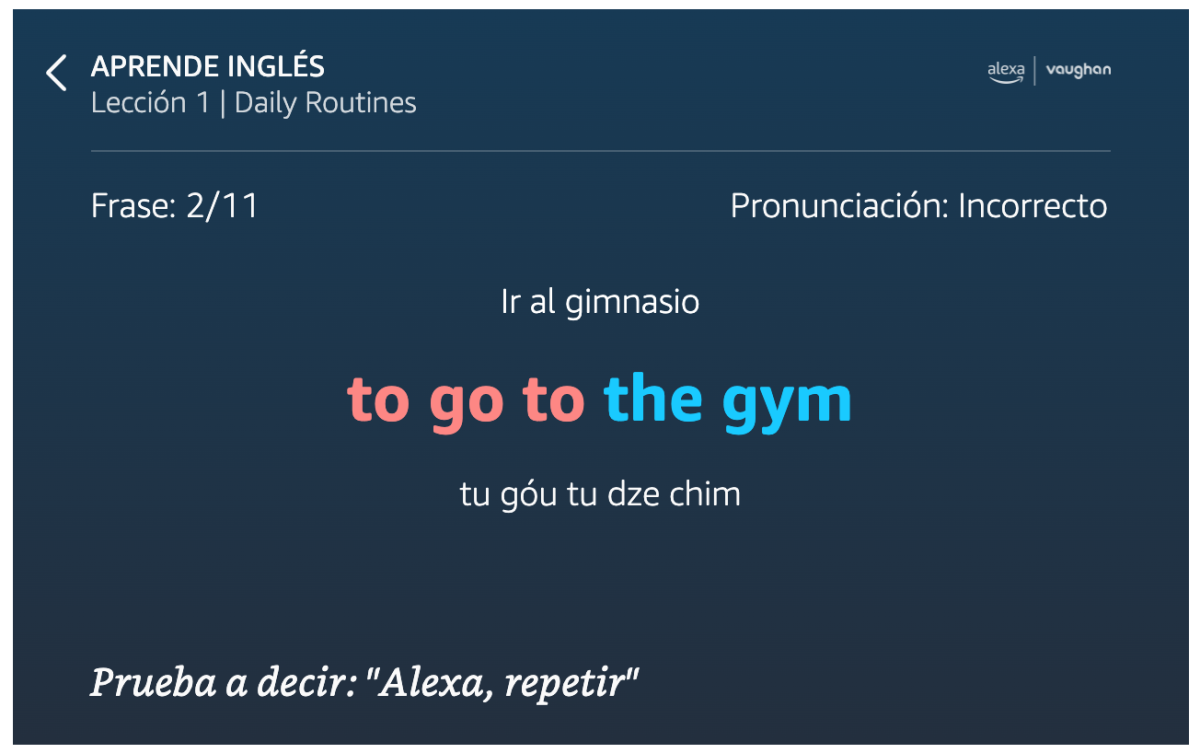

The highlight of this Alexa skill is its pronunciation feature, which provides accurate feedback whenever a customer mispronounces a word or sentence. At this year’s International Conference on Acoustics, Speech, and Signal Processing (ICASSP), we presented a paper describing our state-of-the-art approach to mispronunciation detection.

Our method uses a novel phonetic recurrent-neural-network-transducer (RNN-T) model that predicts phonemes, the smallest units of sound that distinguish one word from another, from the learner’s pronunciation. The model can therefore provide fine-grained pronunciation evaluation at the word, syllable, or phoneme level. For example, if a learner mispronounces the word “rabbit” as “rabid”, the model outputs the five-phoneme sequence R AE B IH D. It can then detect the mispronounced phonemes (IH D) and syllable (-bid) by using Levenshtein alignment to compare that sequence with the reference sequence “R AE B AH T”.
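The alignment step can be sketched in a few lines of Python. This is a minimal illustration that assumes the model output and the reference are already lists of ARPAbet-style phoneme strings; the function names are hypothetical, not taken from the paper. It fills a standard Levenshtein table, backtraces it, and reports the positions where the learner's phonemes differ from the reference.

```python
from typing import List, Optional, Tuple

Phoneme = str
AlignedPair = Tuple[Optional[Phoneme], Optional[Phoneme]]


def levenshtein_align(reference: List[Phoneme], hypothesis: List[Phoneme]) -> List[AlignedPair]:
    """Align two phoneme sequences with minimum edit distance.

    Returns (reference_phoneme, spoken_phoneme) pairs; None marks a deletion
    or insertion.
    """
    n, m = len(reference), len(hypothesis)
    # cost[i][j] = edit distance between reference[:i] and hypothesis[:j]
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = i
    for j in range(1, m + 1):
        cost[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = 0 if reference[i - 1] == hypothesis[j - 1] else 1
            cost[i][j] = min(
                cost[i - 1][j - 1] + sub,  # match or substitution
                cost[i - 1][j] + 1,        # deletion: reference phoneme not spoken
                cost[i][j - 1] + 1,        # insertion: extra spoken phoneme
            )
    # Backtrace from the bottom-right corner to recover one optimal alignment.
    pairs: List[AlignedPair] = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and cost[i][j] == cost[i - 1][j - 1] + (reference[i - 1] != hypothesis[j - 1]):
            pairs.append((reference[i - 1], hypothesis[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            pairs.append((reference[i - 1], None))
            i -= 1
        else:
            pairs.append((None, hypothesis[j - 1]))
            j -= 1
    pairs.reverse()
    return pairs


def mispronounced(reference: List[Phoneme], hypothesis: List[Phoneme]) -> List[AlignedPair]:
    """Return only the aligned pairs where the learner deviated from the reference."""
    return [(r, h) for r, h in levenshtein_align(reference, hypothesis) if r != h]
```

On the “rabbit”/“rabid” example, `mispronounced("R AE B AH T".split(), "R AE B IH D".split())` returns `[("AH", "IH"), ("T", "D")]`, the two substitutions in the final syllable.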

The paper highlights two knowledge gaps that have not been addressed in previous pronunciation-modeling work. The first is the ability to disambiguate similar-sounding phonemes from different languages (e.g., the rolled “r” sounds in Spanish vs. the “r” sound in English). We tackled this challenge by designing a multilingual pronunciation lexicon and building a massive code-mixed phonetic dataset for training.

The second knowledge gap is the ability to learn the distinctive mispronunciation patterns of language learners. We address it by leveraging the autoregressive nature of the RNN-T model: each output depends on the inputs and on the outputs that preceded it. This context awareness lets the model capture frequent mispronunciation patterns from the training data. Our pronunciation model has achieved state-of-the-art performance in both phoneme prediction accuracy and mispronunciation detection accuracy.

L2 data augmentation

One of the key technical challenges in building a phonetic-recognition model for non-native (L2) speakers is that very few datasets exist for mispronunciation diagnosis. In our Interspeech 2022 paper “L2-GEN: A neural phoneme paraphrasing approach to L2 speech synthesis for mispronunciation diagnosis”, we proposed bridging this gap with data augmentation. Specifically, we built a phoneme paraphraser that can generate realistic L2 phonemes for speakers from a specific locale, e.g., phonemes representing a native Spanish speaker talking in English.

As is common with grammatical-error correction tasks, we use a sequence-to-sequence model but flip the task direction, training the model to mispronounce words rather than correct mispronunciations. Additionally, to further enrich and diversify the generated L2 phoneme sequences, we propose a diversified and preference-aware decoding component that combines a diversified beam search with a preference loss that is biased toward human-like mispronunciations.

For each input phone (an individual speech sound), the model produces several candidate output phonemes, so the possible phoneme sequences form a tree that branches further with each new phone. Typically, the top-ranked sequences are extracted from the tree through beam search, which pursues only the branches with the highest probabilities. In our paper, however, we propose a beam search method that prioritizes unusual phonemes, i.e., phoneme candidates that differ from most of the others at the same depth in the tree.
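The diversity idea can be illustrated with a simplified variant of diverse beam search. In this sketch, which is our own illustration rather than the paper's exact decoding rule, candidates at each depth are picked one at a time, and a candidate's ranking score is reduced by `diversity_penalty` for every already-chosen candidate at that depth that ends in the same phoneme. `toy_model` is a hypothetical stand-in for the paraphraser's per-step log-probabilities.

```python
import math
from typing import Callable, Dict, List, Tuple

Hypothesis = Tuple[Tuple[str, ...], float]  # (phoneme sequence, cumulative log-prob)


def diverse_beam_search(
    step_logprobs: Callable[[Tuple[str, ...]], Dict[str, float]],
    length: int,
    beam_size: int,
    diversity_penalty: float,
) -> List[Hypothesis]:
    beams: List[Hypothesis] = [((), 0.0)]
    for _ in range(length):
        # Expand every surviving beam with every candidate next phoneme.
        candidates = [
            (seq + (ph,), score + lp)
            for seq, score in beams
            for ph, lp in step_logprobs(seq).items()
        ]
        selected: List[Hypothesis] = []
        counts: Dict[str, int] = {}  # how often each phoneme was already chosen at this depth
        while candidates and len(selected) < beam_size:
            # Rank by log-prob minus a penalty for phonemes already common at this depth.
            best = max(
                candidates,
                key=lambda c: c[1] - diversity_penalty * counts.get(c[0][-1], 0),
            )
            candidates.remove(best)
            selected.append(best)  # the stored score stays the unpenalized log-prob
            counts[best[0][-1]] = counts.get(best[0][-1], 0) + 1
        beams = selected
    return beams


def toy_model(prefix: Tuple[str, ...]) -> Dict[str, float]:
    # Hypothetical model: the same next-phoneme distribution at every step.
    return {"AH": math.log(0.5), "IH": math.log(0.3), "EH": math.log(0.2)}
```

With `diversity_penalty=0.0` this reduces to ordinary beam search, and on the toy distribution the three surviving two-phoneme hypotheses end in only two distinct phonemes (`AH` and `IH`). A large penalty such as `10.0` pushes the third beam to end in the less likely `EH` instead.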

From established sources in the language-learning literature, we also construct lists of common phoneme-level mispronunciations, each represented as a pair of phonemes: the standard phoneme in the language and its nonstandard variant. We then construct a loss function that, during model training, prioritizes outputs that use the nonstandard variants on our list.
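One way to express such a preference term is sketched below, under stated assumptions. The pairs in `MISPRONUNCIATION_PAIRS` are illustrative examples of typical Spanish-speaker substitutions, not the paper's lists, and the loss (per-step negative log-likelihood plus a weighted bonus for probability mass on a listed nonstandard variant of the input phoneme) is a hypothetical formulation rather than the published one.

```python
import math
from typing import Dict, List, Set, Tuple

# Hypothetical (standard phoneme, nonstandard variant) pairs; illustrative only.
MISPRONUNCIATION_PAIRS: Set[Tuple[str, str]] = {("V", "B"), ("Z", "S"), ("IH", "IY")}


def preference_aware_loss(
    step_probs: List[Dict[str, float]],
    targets: List[str],
    canonical: List[str],
    weight: float = 0.5,
) -> float:
    """Mean negative log-likelihood plus a bonus for known nonstandard variants."""
    if not targets:
        return 0.0
    total = 0.0
    for probs, target, standard in zip(step_probs, targets, canonical):
        total -= math.log(probs[target])  # usual sequence-to-sequence training term
        variants = [v for s, v in MISPRONUNCIATION_PAIRS if s == standard]
        if variants:
            # Reward probability mass placed on any listed nonstandard variant.
            variant_mass = sum(probs.get(v, 0.0) for v in variants)
            total -= weight * math.log(max(variant_mass, 1e-12))
    return total / len(targets)
```

For an input `V`, a distribution that splits its mass evenly between `V` and `B` receives a lower loss than one that puts 90% on `V`, even though the latter assigns the target a higher probability. That is the kind of bias toward human-like mispronunciations the term is meant to add.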

In experiments, we saw accuracy improvements of up to 5% in mispronunciation detection over a baseline model trained without augmented data.

Balancing false rejection and false acceptance

A key consideration in designing a pronunciation model for a language-learning experience is balancing the false-rejection and false-acceptance rates. A false rejection occurs when the model flags a mispronunciation even though the customer's pronunciation was correct or used a consistent, lightly accented variant. A false acceptance occurs when a customer mispronounces a word and the model fails to detect it.
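Both error types can be measured as rates over a labeled evaluation set. The sketch below assumes per-word binary labels and a per-word mispronunciation score compared against a flagging threshold; both are hypothetical, since the post does not say how the system makes its final decision. It shows how moving the threshold trades one error type for the other.

```python
from typing import List, Sequence, Tuple


def false_rates(flagged: Sequence[bool], mispronounced: Sequence[bool]) -> Tuple[float, float]:
    """Return (false-rejection rate, false-acceptance rate)."""
    correct_total = sum(1 for m in mispronounced if not m)
    wrong_total = sum(1 for m in mispronounced if m)
    # False rejection: a correct word was flagged.
    false_rejections = sum(1 for f, m in zip(flagged, mispronounced) if f and not m)
    # False acceptance: a mispronounced word was not flagged.
    false_acceptances = sum(1 for f, m in zip(flagged, mispronounced) if not f and m)
    frr = false_rejections / correct_total if correct_total else 0.0
    far = false_acceptances / wrong_total if wrong_total else 0.0
    return frr, far


def sweep(
    scores: Sequence[float], mispronounced: Sequence[bool], thresholds: Sequence[float]
) -> List[Tuple[float, float, float]]:
    """For each threshold, flag words whose hypothetical score reaches it and report (t, FRR, FAR)."""
    return [(t, *false_rates([s >= t for s in scores], mispronounced)) for t in thresholds]
```

For scores `[0.9, 0.6, 0.4, 0.2]` with labels `[True, False, True, False]`, `sweep(..., [0.3, 0.5, 0.7])` returns `[(0.3, 0.5, 0.0), (0.5, 0.5, 0.5), (0.7, 0.0, 0.5)]`: a higher threshold rejects fewer correct words but lets more mispronunciations through.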

Our system has two design features intended to balance these two metrics. To reduce false acceptances, we first combine our standard pronunciation lexicons for English and Spanish into a single lexicon, in which a word can correspond to multiple phoneme sequences. Then we use that lexicon to automatically annotate unannotated speech samples that fall into three categories: native Spanish, native English, and code-switched Spanish and English. Training the model on this dataset enables it to distinguish very subtle differences between phonemes.
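A combined lexicon and lexicon-based annotation might look like the following. Two details are our own assumptions, not described in the post: each phoneme is tagged with its source language (so that a Spanish `r` and an English `R` stay distinct labels), and every transcript word carries a language tag, which for code-switched samples would have to come from some word-level language identification step. The tiny lexicons are illustrative, with simplified phoneme symbols.

```python
from typing import Dict, List, Set, Tuple

Pron = Tuple[str, ...]


def merge_lexicons(lexicons: Dict[str, Dict[str, List[Pron]]]) -> Dict[str, Set[Pron]]:
    """Combine per-language lexicons into one, tagging each phoneme with its language."""
    merged: Dict[str, Set[Pron]] = {}
    for lang, lexicon in lexicons.items():
        for word, prons in lexicon.items():
            for pron in prons:
                merged.setdefault(word, set()).add(tuple(f"{lang}:{p}" for p in pron))
    return merged


def annotate(transcript: List[Tuple[str, str]], merged: Dict[str, Set[Pron]]) -> List[str]:
    """Map (word, language) pairs to a language-tagged phoneme sequence."""
    phonemes: List[str] = []
    for word, lang in transcript:
        # Keep only the pronunciations that come from the word's tagged language.
        options = sorted(p for p in merged[word.lower()] if p and p[0].startswith(f"{lang}:"))
        phonemes.extend(options[0])  # deterministic choice if several remain
    return phonemes


# Illustrative entries only; "no" exists in both languages with different phonemes.
MERGED = merge_lexicons({
    "es": {"no": [("n", "o")], "quiero": [("k", "j", "e", "r", "o")]},
    "en": {"no": [("N", "OW")], "learn": [("L", "ER", "N")]},
})
```

Annotating the code-switched transcript `[("Quiero", "es"), ("learn", "en")]` yields Spanish-tagged phonemes for the first word and English-tagged ones for the second, so the training labels never conflate the two languages' similar-sounding units.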

To reduce false rejections, we use a multireference pronunciation lexicon where each word is associated with multiple reference pronunciations. For example, the word “data” can be pronounced as either “day-tah” or “dah-tah”, and the system will accept both variations as correct.
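Multireference scoring can be sketched by comparing the learner's phonemes against every accepted pronunciation of a word and scoring only against the closest one. The ARPAbet transcriptions of the two “data” variants and the `tolerance` parameter are illustrative assumptions, not details from the post.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical multireference lexicon: each word maps to every accepted pronunciation.
LEXICON: Dict[str, List[Tuple[str, ...]]] = {
    "data": [("D", "EY", "T", "AH"), ("D", "AA", "T", "AH")],  # "day-tah", "dah-tah"
}


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein distance between two phoneme sequences (two-row table)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def evaluate(word: str, spoken: Sequence[str], tolerance: int = 0) -> Tuple[bool, Tuple[str, ...]]:
    """Accept if the spoken phonemes are within `tolerance` edits of any reference.

    Also returns the closest reference, which a diagnosis step could align against.
    """
    closest = min(LEXICON[word], key=lambda ref: edit_distance(ref, spoken))
    return edit_distance(closest, spoken) <= tolerance, closest
```

`evaluate("data", ["D", "AA", "T", "AH"])` accepts the “dah-tah” variant just as it accepts “day-tah”, while `["D", "AE", "T", "AH"]` is one substitution away from both references and is rejected.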

In ongoing work, we’re exploring several approaches to further improving our pronunciation evaluation feature. One of these is building a multilingual model that can be used for pronunciation evaluation for many languages. We are also expanding the model to diagnose more characteristics of mispronunciation, such as tone and lexical stress.