Alexa now has more than 100,000 skills, and to make them easier to navigate, we've begun using a technique called dynamic arbitration. For thousands of those skills, it's no longer necessary to remember specific skill names or invocation patterns (“Alexa, tell X to do Y”). Instead, the customer just makes a request, and the dynamic-arbitration system finds the best skill to handle it.

Naturally, with that many skills, there may be several that could handle a given customer utterance. The request “make an elephant sound”, for instance, could be processed by the skills AnimalSounds, AnimalNoises, ZooKeeper, or others.

When Alexa is being trained to match utterances with skills, each training example is typically labeled with the name of only one skill. This doesn’t prevent Alexa from learning to associate multiple skills with each utterance, but it does make it harder. Training on utterances labeled with multiple relevant skills would help ensure that Alexa finds the best match for each utterance.

Accurate multilabel annotation is difficult to achieve, however, because it would require annotators familiar with the functionality of all 100,000-plus Alexa skills. Moreover, Alexa’s repertoire of skills changes over time, as does the functionality of individual skills, so labeled data can quickly become out of date.

At this year’s International Conference on Acoustics, Speech, and Signal Processing (ICASSP), we will (virtually) present an automated approach to adding multiple relevant labels to the training data for Alexa’s dynamic-arbitration system.

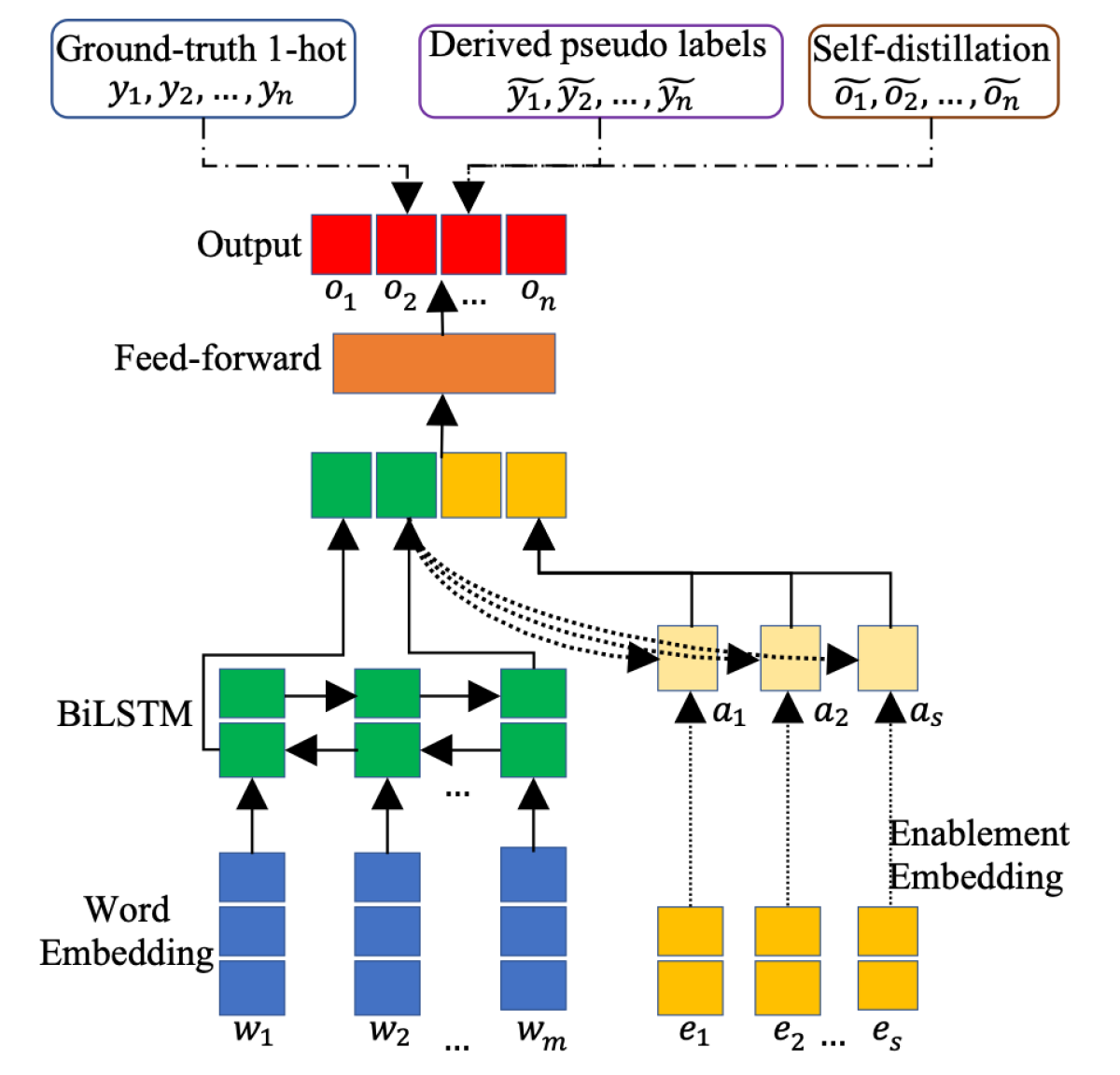

First, we use a trained dynamic-arbitration system to assign skills to utterances, and we treat highly confident skill assignments as additional labels, or pseudo-labels. Then we use instances of erroneous categorization (for example, when the assigned skill replies “I don’t know that one”) as negative training examples, so that the system learns to avoid plausible but inaccurate classifications.
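As a rough illustration of how negative examples might be harvested, the sketch below scans hypothetical interaction logs and keeps the (utterance, skill) pairs where the skill's reply signals failure. The `Interaction` record, the `FAILURE_REPLIES` phrases, and `mine_negative_examples` are all assumptions made for this example; the post does not describe how erroneous categorizations are detected in practice.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical failure phrases; a production system would need a far more robust signal.
FAILURE_REPLIES = ("i don't know that one", "sorry, i can't help with that")


@dataclass
class Interaction:
    utterance: str
    assigned_skill: str
    skill_reply: str


def mine_negative_examples(logs: List[Interaction]) -> List[Tuple[str, str]]:
    """Return (utterance, skill) pairs where the assigned skill failed to help."""
    negatives = []
    for item in logs:
        # Normalize case and curly apostrophes before matching failure phrases.
        reply = item.skill_reply.lower().replace("\u2019", "'")
        if any(phrase in reply for phrase in FAILURE_REPLIES):
            negatives.append((item.utterance, item.assigned_skill))
    return negatives


logs = [
    Interaction("make an elephant sound", "AnimalSounds", "Here is an elephant!"),
    Interaction("make a narwhal sound", "AnimalNoises", "I don\u2019t know that one."),
]
print(mine_negative_examples(logs))  # [('make a narwhal sound', 'AnimalNoises')]
```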

Finally, we use a self-distillation technique that we described in earlier work, in which we train the system not only on labels but also on aggregate statistics about its confidence in its previous classifications. This prevents the arbitration system from getting overenthusiastic about a few new examples with strong input-output correlations.

In experiments, we show that a combination of these techniques leads to a 1.25% increase in our dynamic-arbitration system’s F1 score. F1 is the harmonic mean of precision and recall, so it accounts for both false positives and false negatives.
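For readers who want the formula spelled out, here is a small sketch of F1 computed from counts of true positives, false positives, and false negatives. The counts in the usage line are made-up toy numbers, not results from the experiments.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)  # drops as false positives rise
    recall = tp / (tp + fn)  # drops as false negatives rise
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(tp=80, fp=20, fn=10), 4))  # 0.8421
```

Equivalently, F1 = 2·TP / (2·TP + FP + FN), which makes it clear that both error types pull the score down.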

As we have reported in previous blog posts, our dynamic-arbitration system consists of two components. The first is called Shortlister, and as its name suggests, it produces a short list of candidate skills to handle a given utterance. The second component, the hypothesis re-ranker (HypRank), sorts the candidates more precisely, using information about the customer’s account settings and the dialogue context.
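The two-stage design can be sketched as follows: a cheap first stage keeps the top k candidates, and a second stage reorders only those candidates using extra context. The scores, the `WeatherNow` skill, and the "enabled skills" boost below are hypothetical stand-ins; the real Shortlister and HypRank are trained neural models, not hand-written scoring rules.

```python
from typing import Callable, Dict, List


def shortlist(skill_scores: Dict[str, float], k: int) -> List[str]:
    """Stage 1 (Shortlister-like): keep the k highest-scoring skills."""
    return sorted(skill_scores, key=lambda s: skill_scores[s], reverse=True)[:k]


def rerank(candidates: List[str], context_score: Callable[[str], float]) -> List[str]:
    """Stage 2 (HypRank-like): reorder only the shortlisted skills using context."""
    return sorted(candidates, key=context_score, reverse=True)


# Hypothetical utterance-level scores for "make an elephant sound".
scores = {"AnimalSounds": 0.55, "AnimalNoises": 0.30, "ZooKeeper": 0.10, "WeatherNow": 0.05}
# Hypothetical context signal: the customer has already enabled AnimalNoises.
enabled_skills = {"AnimalNoises"}


def context_score(skill: str) -> float:
    return scores[skill] + (0.5 if skill in enabled_skills else 0.0)


candidates = shortlist(scores, k=3)
print(candidates)  # ['AnimalSounds', 'AnimalNoises', 'ZooKeeper']
print(rerank(candidates, context_score))  # ['AnimalNoises', 'AnimalSounds', 'ZooKeeper']
```

Because the second stage only sees the shortlist, any skill dropped in stage 1 can never be recovered, which is why what Shortlister outputs matters so much for HypRank.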

Interestingly, pseudo-labeling improves HypRank’s performance more than it does Shortlister’s. During training, Shortlister will sometimes output a list of skills that does not include the correct label for the input utterance. Such lists are not used to train HypRank, because there is no ground truth — no correct label — against which to compare HypRank’s output.

Our hypothesis is that training Shortlister on utterances with multiple labels increases the likelihood that its output will include at least one correct label. That means that HypRank has more training examples to work with, and those examples better reflect the full range of Shortlister’s output.
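The filtering rule described above can be illustrated with a short sketch, assuming a HypRank training example is kept whenever any of the utterance's labels appears in Shortlister's output. The function name and toy data are hypothetical. Note that this only shows the filter itself; it does not model how multilabel training changes what Shortlister outputs in the first place.

```python
from typing import List, Set, Tuple


def hyprank_training_set(
    shortlists: List[List[str]], labels: List[Set[str]]
) -> List[Tuple[List[str], Set[str]]]:
    """Keep (shortlist, labels) pairs only if the shortlist contains a correct label."""
    return [(cands, gold) for cands, gold in zip(shortlists, labels) if gold & set(cands)]


shortlists = [["AnimalNoises", "ZooKeeper"], ["ZooKeeper", "AnimalSounds"]]
single_labels = [{"AnimalSounds"}, {"AnimalSounds"}]
multi_labels = [{"AnimalSounds", "AnimalNoises"}, {"AnimalSounds"}]

print(len(hyprank_training_set(shortlists, single_labels)))  # 1
print(len(hyprank_training_set(shortlists, multi_labels)))  # 2
```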

Methodology

In our experiments, we first trained Shortlister using the standard, one-skill-per-utterance training data. As is typical in machine learning, we made multiple passes, or epochs, through the same training data until the system’s performance stopped improving. After each epoch, we also had Shortlister classify all the training data.

For each training example, we identified the labels that Shortlister scored higher than the ground-truth label for r consecutive epochs; the top p of those labels became our pseudo-labels. Empirically, we found that setting p to 2 and r to 4 delivered the greatest improvement.
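A minimal sketch of this selection rule, assuming Shortlister's per-epoch probabilities for one training example are available as a list of dictionaries. How the top p qualifying labels are ranked is not specified in the post; here we assume they are ranked by their probability in the final epoch. The function name and data are illustrative only.

```python
from typing import Dict, List


def select_pseudo_labels(
    epoch_probs: List[Dict[str, float]], gold: str, p: int = 2, r: int = 4
) -> List[str]:
    """Pick up to p skills that Shortlister scored above the gold label for r consecutive epochs.

    epoch_probs[t] maps every candidate skill (including gold) to its probability
    after epoch t; every epoch is assumed to cover the same set of skills.
    """
    if not epoch_probs:
        return []
    streak: Dict[str, int] = {}
    longest: Dict[str, int] = {}
    for probs in epoch_probs:
        for skill, prob in probs.items():
            if skill == gold:
                continue
            # Extend the run of epochs in which this skill beat the gold label, or reset it.
            streak[skill] = streak.get(skill, 0) + 1 if prob > probs[gold] else 0
            longest[skill] = max(longest.get(skill, 0), streak[skill])
    qualified = [skill for skill, run in longest.items() if run >= r]
    # Assumption: rank qualifying skills by their probability in the final epoch.
    final = epoch_probs[-1]
    qualified.sort(key=lambda skill: final[skill], reverse=True)
    return qualified[:p]


first = {"AnimalSounds": 0.50, "AnimalNoises": 0.30, "ZooKeeper": 0.15, "WeatherNow": 0.05}
later = {"AnimalSounds": 0.20, "AnimalNoises": 0.40, "ZooKeeper": 0.30, "WeatherNow": 0.10}
history = [first] + [later] * 4  # five epochs of toy predictions
print(select_pseudo_labels(history, gold="AnimalSounds"))  # ['AnimalNoises', 'ZooKeeper']
```

Requiring a streak of r epochs, rather than a single epoch, filters out labels that only outscore the ground truth briefly while training is still unstable.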

We then used the pseudo-labeled data, together with the negative examples, to fine-tune the network. Each negative example was labeled with a single skill that we had inferred to be erroneous for that utterance. During fine-tuning, we penalized the network whenever it assigned high confidence to a negative example’s label.
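The post does not give the exact loss function, so the following is only one plausible form: a per-skill binary cross-entropy that pushes ground-truth and pseudo-labels toward probability 1 and pushes negative-example labels toward 0, so that the penalty grows as the network becomes more confident in a wrong skill. The function names are hypothetical, and ignoring skills in neither set is a simplifying assumption.

```python
import math
from typing import Dict, Set


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def fine_tuning_loss(logits: Dict[str, float], positives: Set[str], negatives: Set[str]) -> float:
    """Per-skill binary cross-entropy on raw scores (logits).

    positives: ground-truth and pseudo-labels, pushed toward probability 1.
    negatives: skills inferred to be wrong for this utterance, pushed toward 0.
    Skills in neither set are ignored here (a simplifying assumption).
    """
    loss = 0.0
    for skill, z in logits.items():
        if skill in positives:
            loss += softplus(-z)  # equals -log(sigmoid(z))
        elif skill in negatives:
            loss += softplus(z)  # equals -log(1 - sigmoid(z)); large when z is confidently high
    return loss


confident_wrong = fine_tuning_loss({"AnimalSounds": 2.0, "AnimalNoises": 3.0}, {"AnimalSounds"}, {"AnimalNoises"})
cautious = fine_tuning_loss({"AnimalSounds": 2.0, "AnimalNoises": -3.0}, {"AnimalSounds"}, {"AnimalNoises"})
print(confident_wrong > cautious)  # True
```

Writing the loss in terms of `softplus` avoids taking the log of a probability that has underflowed to 0 or 1.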

For self-distillation, after each epoch we also collected statistics on the system’s classifications of all the examples in the training set. In the next epoch, we fed these statistics to the model together with every example in the training set.
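The post does not say exactly how the statistics are fed back to the model. One common way to realize self-distillation, assumed here, is to average the model's predicted distributions for an example over earlier epochs and blend that average with the hard labels to form soft training targets. The function name and the `alpha` weight are hypothetical.

```python
from typing import Dict, List


def self_distillation_targets(
    hard: Dict[str, float], past_preds: List[Dict[str, float]], alpha: float = 0.7
) -> Dict[str, float]:
    """Blend the labels with the model's average prediction from earlier epochs.

    alpha weights the hard labels; (1 - alpha) weights the averaged past predictions.
    """
    if not past_preds:
        return dict(hard)
    skills = set(hard).union(*past_preds)
    targets = {}
    for skill in sorted(skills):
        avg_pred = sum(preds.get(skill, 0.0) for preds in past_preds) / len(past_preds)
        targets[skill] = alpha * hard.get(skill, 0.0) + (1 - alpha) * avg_pred
    return targets


past = [{"AnimalSounds": 0.6, "AnimalNoises": 0.4}, {"AnimalSounds": 0.8, "AnimalNoises": 0.2}]
targets = self_distillation_targets({"AnimalSounds": 1.0}, past)
print({k: round(v, 2) for k, v in targets.items()})  # {'AnimalNoises': 0.09, 'AnimalSounds': 0.91}
```

Averaging over several epochs smooths out the effect of any single epoch, which is in the spirit of keeping the system from overreacting to a handful of new examples.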

Combining this self-distillation technique with pseudo-labeling and negative examples enabled us to squeeze out another fraction of a percent of F1 score improvement compared to the baseline system. In future work, we would like to further explore the relationship between self-distillation and pseudo-labeling.

We would also like to combine the three techniques we explore in this paper — pseudo-labeling, negative feedback learning, and self-distillation — with standard semi-supervised learning, in which a trained network itself labels a body of unlabeled examples, which are then used to re-train the network. It would be interesting to see if this could lead to further performance improvements.