Anomaly detection seeks to identify behaviors that lie outside statistical norms. Anomalies could indicate some kind of malicious activity, such as attempts to crack a website password, unauthorized credit card purchases, or side-channel attacks on a server. Anomaly detectors are usually models that score inputs according to the likelihood that they’re anomalous, and some threshold value is used to convert the scores into binary decisions. Often, those thresholds are determined by static analysis of historical data.
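
As a rough illustration of the static approach, the sketch below picks a threshold as a high quantile of historical anomaly scores and uses it to turn new scores into binary decisions. The function names, the 0.99 quantile, and the synthetic Gaussian scores are all assumptions made for the example, not details of any particular detector.

```python
import numpy as np

def static_threshold(historical_scores, quantile=0.99):
    """Return a cutoff so that roughly (1 - quantile) of past scores exceed it."""
    return float(np.quantile(historical_scores, quantile))

def is_anomalous(score, threshold):
    """Convert a real-valued anomaly score into a binary decision."""
    return score > threshold

# Hypothetical historical scores; a real detector would supply these.
rng = np.random.default_rng(0)
historical_scores = rng.normal(loc=0.0, scale=1.0, size=10_000)

threshold = static_threshold(historical_scores, quantile=0.99)
flags = [is_anomalous(score, threshold) for score in (0.1, 5.0)]
```

A threshold computed this way is fixed once the historical batch has been analyzed, so it cannot follow a distribution that changes afterward.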

In many practical settings, where the individual data items are large and arrive rapidly and from varied sources, static analysis is not an option. Moreover, the distribution of data can shift over time — for example, during a holiday shopping event, or when an online service suddenly becomes more popular. In such settings, the anomaly thresholds need to be adjusted automatically. Thus, practical anomaly detection often requires online statistical estimation, the continuous estimation of distributions over a steady stream of data.

At this year’s Conference on Neural Information Processing Systems (NeurIPS), we presented an analytic framework that allows us to characterize an online estimator that can simultaneously handle (1) anomalies, (2) distribution drift, (3) high-dimensional data, and (4) heavy-tailed data and that (5) makes no prior assumptions about the distribution of the data.

Using our analytic framework, we prove that clipped stochastic gradient descent (clipped SGD), which limits the extent to which any one data sample can influence the resultant statistical model, can be used to train such a real-time estimator. We also show how to calculate the per-sample influence cap (the clipping threshold), assuming only that the variance of the data is finite. Our algorithm does not require any a priori bounds on or estimates of the data variance; rather, it adapts to the variance.
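
To make the idea of a per-sample influence cap concrete, here is a minimal sketch of clipped SGD applied to online mean estimation with a squared-error loss. It is a simplification: the learning rate and clipping threshold are fixed, hand-picked values (assumptions for this example), whereas the algorithm in the paper derives the threshold and adapts to the variance. The synthetic heavy-tailed stream and the corruption pattern are also illustrative.

```python
import numpy as np

def clip_by_norm(gradient, max_norm):
    """Rescale the gradient so its Euclidean norm is at most max_norm."""
    norm = np.linalg.norm(gradient)
    if norm > max_norm:
        return gradient * (max_norm / norm)
    return gradient

def clipped_sgd_mean(stream, dim, learning_rate=0.05, clip_threshold=5.0):
    """Estimate a mean online; each sample moves the estimate by a bounded amount."""
    estimate = np.zeros(dim)
    for sample in stream:
        # Gradient of 0.5 * ||estimate - sample||^2 with respect to estimate.
        gradient = estimate - sample
        estimate = estimate - learning_rate * clip_by_norm(gradient, clip_threshold)
    return estimate

rng = np.random.default_rng(0)
true_mean = np.array([1.0, -2.0, 0.5])
# Student-t noise with 3 degrees of freedom: heavy-tailed, finite variance.
samples = true_mean + rng.standard_t(df=3, size=(5000, 3))
samples[::100] = 1e6  # every 100th sample is grossly corrupted

estimate = clipped_sgd_mean(samples, dim=3)
clipped_error = np.linalg.norm(estimate - true_mean)
naive_error = np.linalg.norm(samples.mean(axis=0) - true_mean)
```

With these settings, a single corrupted sample can shift the estimate by at most `learning_rate * clip_threshold`, while the plain sample average is dominated by the corrupted values.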

Finally, we also show how to compute the optimal learning rate for a model in this scenario, which falls between the high learning rate known to be optimal for distribution drift in the absence of noise and the slowly decaying learning rate known to be optimal in the absence of distribution shifts.

Our paper offers the first proof that there exists an estimation algorithm that can handle both anomalies and distribution drift; earlier analyses addressed one or the other, but never both at once. An estimator trained through our approach is used for anomaly detection in the Amazon GuardDuty threat detection service.

Theoretical framework

We model both anomalies and distribution drift as the work of an adversary, but an “oblivious” adversary that selects interventions and then walks away. Imagine that, before the beginning of our learning game, the adversary selects a sequence of probability distributions and a sequence of corruption functions, which corrupt random samples selected from the distributions. The change of distribution models drift, and the corrupted samples model anomalies.
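
The setup can be pictured with a small simulation, sketched here under simplifying assumptions: one-dimensional Gaussian distributions whose mean drifts linearly, and a corruption function that adds a large constant at fixed positions. The function name `make_stream` and all of its parameters are hypothetical. What matters is that the sequence of means and the corruption schedule are fixed before any sample is drawn, which is what makes the adversary oblivious.

```python
import numpy as np

def make_stream(num_steps, drift_per_step=0.001, corruption_every=50, seed=0):
    """Generate a drifting, partially corrupted stream chosen in advance."""
    rng = np.random.default_rng(seed)
    steps = np.arange(num_steps)

    # Sequence of distributions: the mean at each step models drift.
    means = drift_per_step * steps

    # Corruption schedule: which positions are corrupted, decided up front.
    corrupted = (steps % corruption_every) == corruption_every - 1

    # Draw clean samples, then apply the corruption function where scheduled.
    clean = means + rng.normal(size=num_steps)
    observed = np.where(corrupted, clean + 100.0, clean)
    return observed, means, corrupted

observed, means, corrupted = make_stream(num_steps=1000)
```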

Of course, if all of the samples are corrupt, or if the data stream fluctuates wildly, there’s no such thing as an anomaly: there’s not enough statistical regularity to deviate from. Real-world data, however, is seldom adversarial, and both the number of corruptions and the magnitude of distribution shift are typically moderate.

We establish a theoretical bound that shows that, under such moderate conditions, clipped SGD performs well. The algorithm requires no a priori information about or bounds on the number of corruptions or magnitude of drift; its performance degrades smoothly as the complexity of the data stream, measured by the number of corruptions and the magnitude of distribution shift, increases.

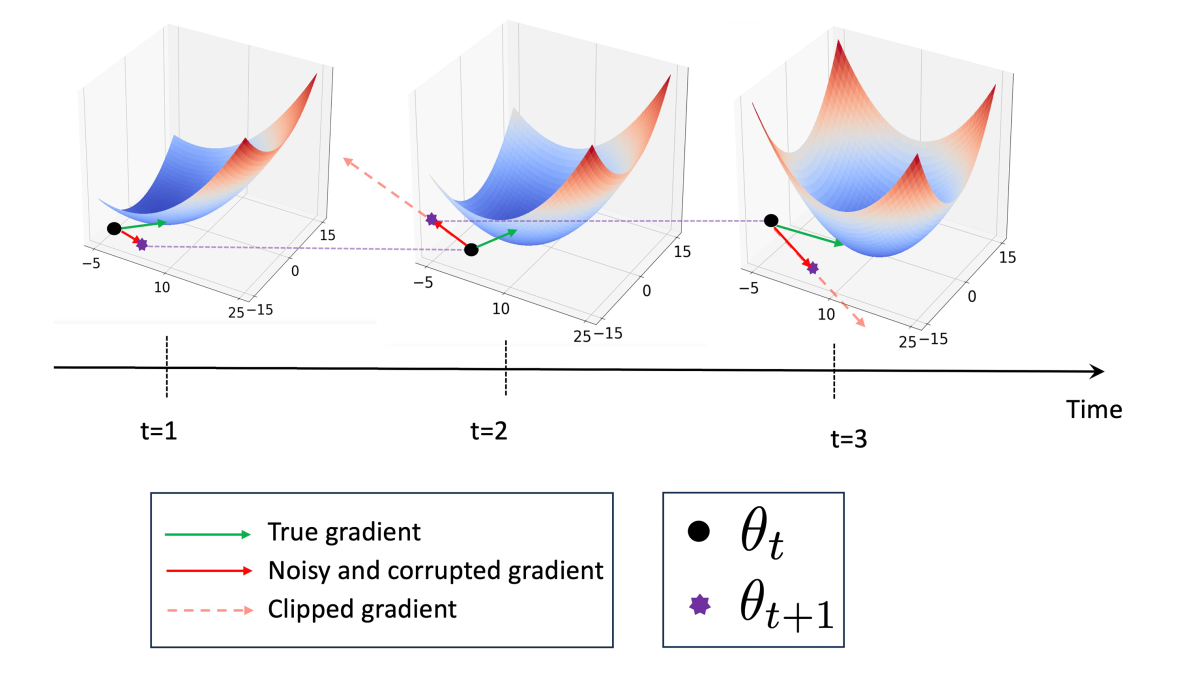

Clipped SGD

The meat of our paper is the proof that clipped SGD will converge on a reliable estimator in this scenario. The proof is inductive. First, we show that, given the error for a particular input, the increase in error for the succeeding input depends only on calculable properties of that input itself. Given that result, we show that if the error for a given input falls below a particular threshold, then if the next input is not corrupt, its error will, with high probability, fall below that threshold, too.

We next show that if the next input is corrupt, then clipping its gradient will ensure that the error will again, with high probability, fall back below the threshold.

We use two principal methods to prove this result. The first is to add a free parameter to the error function and to compute the error threshold accordingly so that we can convert any inequality into a quadratic equation. Proving the inequality is then just a matter of finding positive roots of the equation.

The other method is to use martingale concentration to prove that while the additional error term contributed by a new input may temporarily cause the error to exceed the threshold, it will, with high probability, fall back below the threshold over successive iterations.

This work continues a line of research presented in two previous papers: “FITNESS: (Fine Tune on New and Similar Samples) to detect anomalies in streams with drift and outliers”, which we presented at the International Conference on Machine Learning (ICML) in 2022, and “Online heavy-tailed change-point detection”, which we presented earlier this year at the Conference on Uncertainty in Artificial Intelligence (UAI).

Results

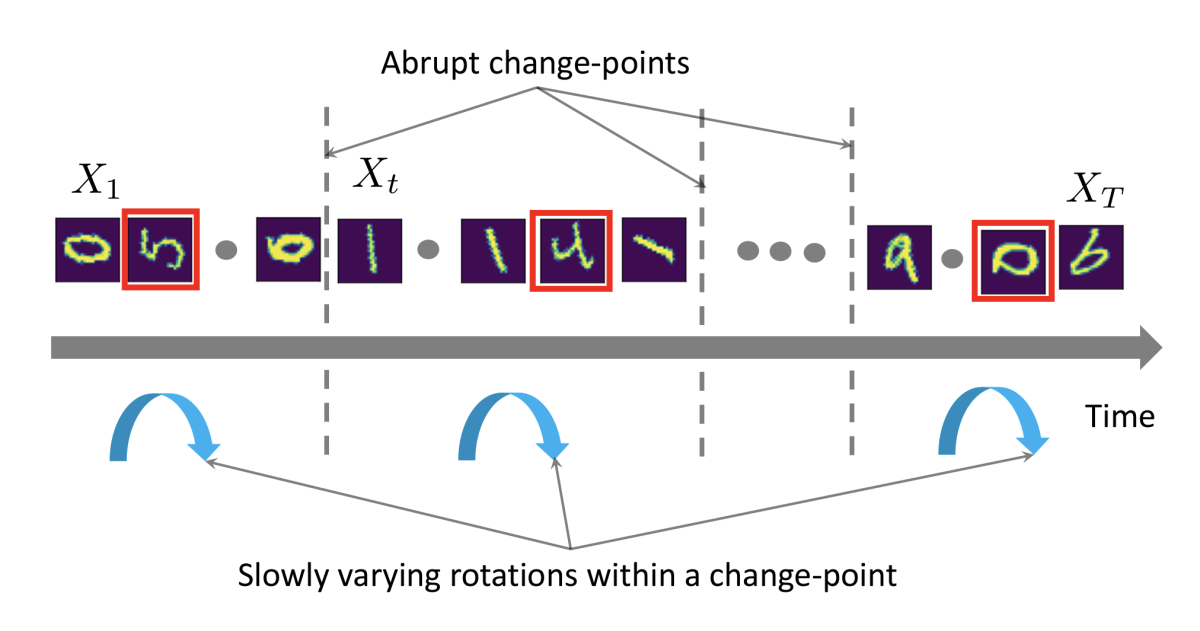

In addition to our theoretical analyses, we also tested our approach on the classic MNIST dataset of handwritten numerals. In our context, written versions of a given numeral — we started with zero — under different rotations constituted ordinary input, and other numerals constituted anomalies. Over time, however, the baseline input switched from the initial numeral (e.g., 0) to a different one (e.g., 1) to represent distribution drift.

Our model was a logistic regression model, a relatively simple model that can be updated after every input. Our experiments showed that, indeed, using clipped SGD to update the model enabled it to both accommodate distribution shifts and recognize anomalies.
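
For readers who want to see what a per-input update looks like, the following is a minimal sketch of one clipped SGD step for logistic regression, run on synthetic two-dimensional data rather than MNIST. It is not the experimental code from the paper: the learning rate, the clipping threshold, the data generator, and the function names are all assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def clipped_logistic_update(weights, features, label,
                            learning_rate=0.1, clip_threshold=1.0):
    """Apply one clipped SGD step of the logistic loss for a single example."""
    prediction = sigmoid(weights @ features)
    gradient = (prediction - label) * features
    norm = np.linalg.norm(gradient)
    if norm > clip_threshold:
        gradient = gradient * (clip_threshold / norm)
    return weights - learning_rate * gradient

rng = np.random.default_rng(0)
weights = np.zeros(3)  # two features plus a bias term
for _ in range(2000):
    label = int(rng.integers(0, 2))
    center = np.array([2.0, 2.0]) if label == 1 else np.array([-2.0, -2.0])
    # Append a constant 1.0 so the last weight acts as the bias.
    features = np.append(center + rng.normal(size=2), 1.0)
    weights = clipped_logistic_update(weights, features, label)

prob_positive = sigmoid(weights @ np.array([2.0, 2.0, 1.0]))
prob_negative = sigmoid(weights @ np.array([-2.0, -2.0, 1.0]))
```

Because each step touches only the current example, the model can be refreshed after every input, and the clipping bounds how far any single example can move the weights.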

One of the results of our theoretical analysis, however, is that, while clipped SGD will with high likelihood converge on a good estimator, its convergence rate is suboptimal. In ongoing work, we’re investigating how to improve the convergence rate, so that accurate anomaly detection requires fewer normal samples.