This year, we’ve started to explore ways to make it easier for customers to find and engage with Alexa skills.

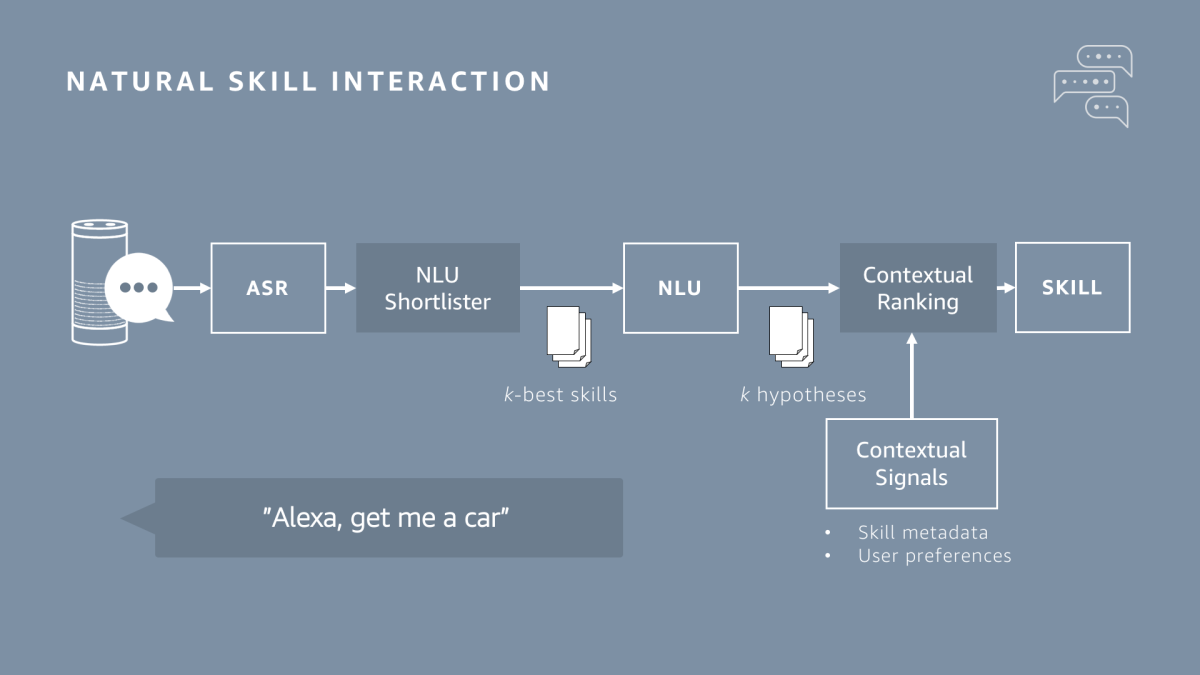

One way we’re doing this is with a machine learning system that lets customers use natural phrases and requests to discover, enable, and launch skills. To order a car, for instance, a customer can now just say “Alexa, get me a car”, instead of having to specify the name of a ride-sharing service.

This requires a system for automatically selecting the best skill to handle a particular request — a challenging task, given that Alexa customers now have access to more than 50,000 skills.

In a pair of papers earlier this year, my colleagues and I described the two-component system that performs name-free skill selection for Alexa.

This week, at the 2018 Conference on Empirical Methods in Natural Language Processing, we will describe some modifications we’ve made to the system, which increase its accuracy still further. Those modifications have been put into production and currently help arbitrate among thousands of skills.

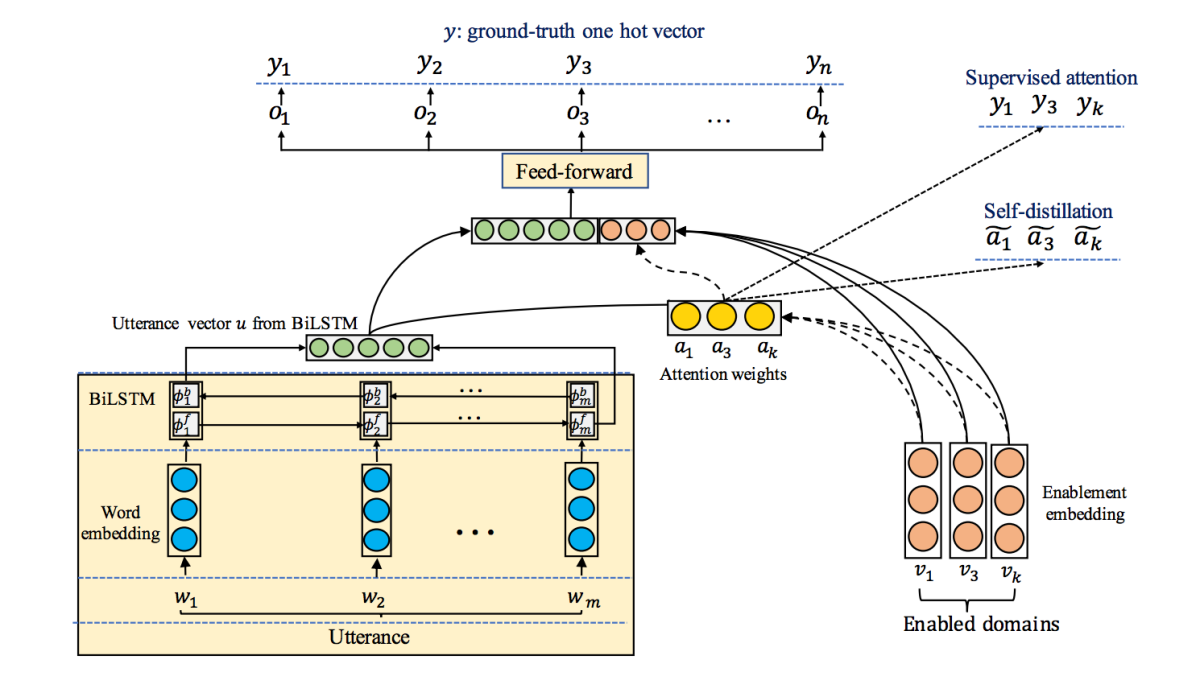

Both components of our system are neural networks, which learn to perform computational tasks by analyzing large sets of training data. The first network produces a shortlist of skills that are candidates to handle a given request. The second network uses more detailed information to choose among the skills on the shortlist. For instance, it considers whether the skills’ developers used the CanFulfillIntentRequest interface to indicate what actions their skills can perform on what types of data.
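The two-stage design can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Alexa's implementation: the skill names, the toy scores, and the `shortlist` and `rerank` helpers are all hypothetical. The second-stage signal merely stands in for richer features, such as a skill's CanFulfillIntentRequest response.

```python
from typing import Callable, Dict, List


def shortlist(scores: Dict[str, float], k: int) -> List[str]:
    """Stage 1 (hypothetical): keep the k skills with the highest scores."""
    return sorted(scores, key=lambda s: scores[s], reverse=True)[:k]


def rerank(candidates: List[str], feature_score: Callable[[str], float]) -> str:
    """Stage 2 (hypothetical): pick one skill from the shortlist using richer features."""
    return max(candidates, key=feature_score)


# Toy first-stage scores for a request such as "get me a car".
stage1 = {"RideShareA": 0.41, "RideShareB": 0.38, "CarFacts": 0.15, "Weather": 0.06}
# Toy second-stage signal, e.g. how confidently each skill says it can handle the request.
can_handle = {"RideShareA": 0.2, "RideShareB": 0.9, "CarFacts": 0.1}

top = shortlist(stage1, k=3)
best = rerank(top, lambda s: can_handle.get(s, 0.0))
print(top, best)  # ['RideShareA', 'RideShareB', 'CarFacts'] RideShareB
```

One reason for this split is cost. Detailed features only have to be gathered for the handful of skills on the shortlist, not for every available skill.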

Our new paper describes several modifications to the first component of the system, the shortlister. One of the shortlister’s primary duties is to decide how heavily to skew its results toward the skills that the customer has explicitly linked to his or her Alexa account. Linking to a skill is a good sign that the customer expects to use it frequently; on the other hand, the system shouldn’t try to steer every request it receives toward the same small group of skills.

A crucial component of the shortlister is an attention mechanism that, on the basis of a customer prompt, dynamically assigns a weight to each of the skills linked to the customer’s account. That weight modifies the probability that the corresponding skill will make it onto the shortlist.
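Here is one simple way the attention idea could be realized. Treat it as a hedged sketch, not the model described in the paper. Each linked skill is scored against a vector encoding of the utterance, and the scores are normalized into weights with a softmax. Each weight then raises the corresponding skill's logit before the final softmax over all skills. The embeddings, the `bonus` scale, and all names are illustrative assumptions.

```python
import math
from typing import Dict, List


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Normalize scores so they lie in (0, 1) and sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def attention_weights(utterance: List[float], linked: Dict[str, List[float]]) -> Dict[str, float]:
    """Score each linked skill by a dot product with the utterance vector, then normalize."""
    scores = {name: sum(u * e for u, e in zip(utterance, emb)) for name, emb in linked.items()}
    return softmax(scores)


def skill_probabilities(
    logits: Dict[str, float], weights: Dict[str, float], bonus: float = 2.0
) -> Dict[str, float]:
    """Hypothetical: raise each linked skill's logit in proportion to its attention weight."""
    adjusted = {s: z + bonus * weights.get(s, 0.0) for s, z in logits.items()}
    return softmax(adjusted)


utterance = [1.0, 0.0]                                      # toy encoding of "get me a car"
linked = {"RideShareA": [2.0, 0.0], "Recipes": [0.0, 2.0]}  # toy linked-skill embeddings
logits = {"RideShareA": 0.0, "RideShareB": 0.0, "Recipes": 0.0}  # toy base scores

weights = attention_weights(utterance, linked)
probs = skill_probabilities(logits, weights)
```

With these toy values, the linked ride-sharing skill receives most of the attention weight. As a result, it also gets the highest probability of landing on the shortlist.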

Our previous network was trained end to end, which means that during training, every component of the network was evaluated solely on how much it contributed to the accuracy of the final output — in this case, the list of candidate skills.

In our new paper, however, we add another term to the evaluation metric. Each example in the dataset used to train the network includes a customer utterance and the skill it’s intended to invoke. Sometimes that skill is a linked skill, and sometimes it’s not. We still evaluate the network on its overall accuracy, but we also evaluate the attention mechanism on how heavily it weights linked skills when they are in fact the intended skills. In other words, we explicitly supervise the training of the attention mechanism.
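Here is a minimal sketch of the combined objective, assuming the two terms are simply added with a weight. The first term is the usual cross-entropy on the predicted skill. The second is a penalty of −log w when the intended skill is linked but receives attention weight w. The weighting factor `lam` and the exact form of the attention term are assumptions for illustration; the paper may define them differently.

```python
import math
from typing import Dict


def cross_entropy(probs: Dict[str, float], target: str) -> float:
    """Standard classification loss on the final skill distribution."""
    return -math.log(probs[target])


def attention_loss(weights: Dict[str, float], target: str) -> float:
    """Assumed form: penalize a low attention weight on the intended skill if it is linked."""
    if target not in weights:
        return 0.0  # intended skill is not linked, so the attention term is skipped
    return -math.log(weights[target])


def total_loss(
    probs: Dict[str, float], weights: Dict[str, float], target: str, lam: float = 0.5
) -> float:
    """Overall accuracy term plus a weighted attention-supervision term (lam is hypothetical)."""
    return cross_entropy(probs, target) + lam * attention_loss(weights, target)


probs = {"RideShareA": 0.5, "Recipes": 0.3, "Weather": 0.2}  # toy final skill distribution
weights = {"RideShareA": 0.25, "Recipes": 0.75}              # toy attention over linked skills

print(total_loss(probs, weights, "RideShareA"))  # linked target: both terms apply
print(total_loss(probs, weights, "Weather"))     # unlinked target: only cross-entropy
```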

This should lead to a network that more reliably selects linked skills when the customer intends them. But it could also lead to a network that overcompensates, selecting linked skills when they’re not intended. So we adopted another training technique designed to mitigate the potential overweighting of linked skills.

Neural networks are typically trained on the same data repeatedly, until their performance stops improving. For example, if you have 1,000 training samples, you feed them sequentially to the network, which adjusts its internal settings with each new example in an attempt to improve its accuracy. When you’ve gone through all of them, you feed the same 1,000 samples to the network again and see whether its accuracy continues to improve.

Each of these rounds of training is called an “epoch”. In our new paper, after each epoch, we collect statistics on the system’s classifications of all the examples in the training set: the system chose skill A 6% of the time, skill B 2% of the time, and so on.
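The per-epoch bookkeeping might look like the following sketch. It records two things: each example's full output distribution, which self-distillation formulations typically reuse as soft targets, and the share of examples on which each skill was the top choice, which corresponds to the percentages quoted above. The function name and data layout are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def collect_epoch_outputs(
    predictions: List[Dict[str, float]],
) -> Tuple[List[Dict[str, float]], Dict[str, float]]:
    """Return per-example output distributions and each skill's share of top-1 choices."""
    snapshots = [dict(p) for p in predictions]  # copies, so later updates don't alter them
    top_choices = Counter(max(p, key=lambda s: p[s]) for p in predictions)
    summary = {skill: count / len(predictions) for skill, count in top_choices.items()}
    return snapshots, summary


# Toy model outputs for a four-example training set.
epoch_predictions = [
    {"A": 0.7, "B": 0.3},
    {"A": 0.6, "B": 0.4},
    {"A": 0.2, "B": 0.8},
    {"A": 0.9, "B": 0.1},
]
snapshots, summary = collect_epoch_outputs(epoch_predictions)
print(summary)  # {'A': 0.75, 'B': 0.25}
```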

Then, in the next epoch, these statistics are fed to the model together with every example in the training set. At the end of that epoch, we collect new statistics, which we feed to the model in the next epoch, and so on. This technique is called self-distillation. Distillation was originally used to reduce the size of neural networks without compromising their performance, by training a small network to match the outputs of a larger one; here, the model instead learns from its own outputs in the previous epoch.
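A common way to feed the previous epoch's outputs back into training mixes two losses. The first is the usual hard-label loss. The second is a cross-entropy against that example's output distribution from the previous epoch. That mix is assumed here, not taken from the paper. The mixing weight `alpha` is a hypothetical hyperparameter. In the first epoch there is no earlier output, so only the hard-label term applies.

```python
import math
from typing import Dict, Optional


def self_distillation_loss(
    current: Dict[str, float],
    target: str,
    previous: Optional[Dict[str, float]] = None,
    alpha: float = 0.5,
) -> float:
    """Mix the hard-label loss with a soft-target loss from the previous epoch's outputs."""
    hard = -math.log(current[target])
    if previous is None:  # first epoch: nothing to distill from yet
        return hard
    # Cross-entropy between last epoch's distribution (soft targets) and the current one.
    soft = -sum(q * math.log(current[s]) for s, q in previous.items() if q > 0)
    return (1 - alpha) * hard + alpha * soft


current = {"A": 0.8, "B": 0.2}   # this epoch's output for one example
previous = {"A": 0.5, "B": 0.5}  # the same example's output one epoch earlier

print(self_distillation_loss(current, "A"))            # hard-label loss only
print(self_distillation_loss(current, "A", previous))  # also pulls toward last epoch's spread
```

The soft targets spread probability over every skill the model previously considered plausible. So the second term penalizes the model for collapsing nearly all of its probability onto a single skill.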

In each epoch, the output statistics from the previous epoch indicate the range of skills that customers tend to invoke, which helps keep the system from concentrating too heavily on a few linked skills.

Our new paper reports one other technique to improve the performance of the attention mechanism. Previously, the attention mechanism used a softmax function to generate the weights it applied to linked skills. With a softmax function, all the weights are between 0 and 1, and they must sum to 1. In our new paper, we instead use sigmoid weights, which also range from 0 to 1 but have no restrictions on their sum. This gives the system the flexibility to indicate that none of the linked skills is a strong candidate, or that more than one is.
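The difference between the two normalizations shows up clearly on toy scores, which are made up for illustration. Suppose no linked skill matches the request. Softmax still hands out weights that sum to 1, about 0.33 each, while the sigmoid weights all stay near 0.05. Now suppose two linked skills match equally well. Softmax caps each of them just under 0.5, while sigmoid lets both reach about 0.95.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Weights in (0, 1) that must sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid_weights(scores: List[float]) -> List[float]:
    """Independent weights in (0, 1) with no constraint on their sum."""
    return [1.0 / (1.0 + math.exp(-s)) for s in scores]


weak = [-3.0, -3.0, -3.0]   # toy scores: no linked skill fits the request
strong = [3.0, 3.0, -3.0]   # toy scores: two linked skills fit equally well

for name, scores in [("weak", weak), ("strong", strong)]:
    soft = [round(w, 3) for w in softmax(scores)]
    sig = [round(w, 3) for w in sigmoid_weights(scores)]
    print(name, "softmax:", soft, "sigmoid:", sig)
```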

In experiments, we compared systems that used softmax weights, sigmoid weights, sigmoid weights combined with supervised weight learning, and sigmoid weights combined with supervised weight learning and self-distillation. We found that the system that used all three mechanisms consistently outperformed the other three. Relative to the system that used only softmax weights, the one that used all three mechanisms reduced the error rate by 12% when it was tasked with producing shortlists of three candidate skills.
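The error metric behind this comparison can be made concrete. The sketch below assumes that a shortlist counts as an error when the intended skill is missing from its top three entries. It also shows how a relative (rather than absolute) reduction in error rate is computed. The example shortlists and the error rates passed to `relative_reduction` are illustrative only, not results from the paper.

```python
from typing import Sequence


def top_k_error(shortlists: Sequence[Sequence[str]], targets: Sequence[str], k: int = 3) -> float:
    """Fraction of requests whose intended skill is missing from the top k of the shortlist."""
    misses = sum(1 for sl, t in zip(shortlists, targets) if t not in sl[:k])
    return misses / len(targets)


def relative_reduction(baseline_error: float, new_error: float) -> float:
    """Relative (not absolute) drop in error rate."""
    return (baseline_error - new_error) / baseline_error


shortlists = [["A", "B", "C"], ["B", "C", "D"], ["A", "D", "E"], ["C", "A", "B"]]
targets = ["A", "A", "E", "D"]
print(top_k_error(shortlists, targets))  # 2 of 4 intended skills missed

# Illustrative error rates only: 0.25 -> 0.22 is a 12% relative reduction.
print(round(relative_reduction(0.25, 0.22), 3))
```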