By tokenizing time series data and treating it like a language, Amazon researchers were able to adapt a large language model's architecture to the task of time series forecasting. In experiments, the model's zero-shot performance matched or exceeded that of purpose-built time-series-forecasting models.
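A common way to make a real-valued series look like text to a language model is to scale it, then quantize the scaled values into a fixed vocabulary of bins. Each observation then becomes an integer token. The sketch below assumes mean scaling and uniform bins over a clipped range. The function names, the 1,024-bin vocabulary, and the [-15, 15] range are illustrative assumptions, not the researchers' published settings.

```python
def mean_scale(series: list[float]) -> tuple[list[float], float]:
    """Divide by the mean absolute value so series of different magnitudes share one range."""
    scale = sum(abs(x) for x in series) / len(series)
    if scale == 0:
        scale = 1.0  # all-zero series: leave values unchanged
    return [x / scale for x in series], scale


def tokenize(series: list[float], n_bins: int = 1024,
             low: float = -15.0, high: float = 15.0) -> tuple[list[int], float]:
    """Map each scaled value to the index of a uniform bin in [low, high); out-of-range values are clipped."""
    scaled, scale = mean_scale(series)
    width = (high - low) / n_bins
    tokens = []
    for x in scaled:
        idx = int((x - low) // width)
        tokens.append(min(max(idx, 0), n_bins - 1))
    return tokens, scale


def detokenize(tokens: list[int], scale: float, n_bins: int = 1024,
               low: float = -15.0, high: float = 15.0) -> list[float]:
    """Approximately invert tokenize: take each bin's center, then undo the scaling."""
    width = (high - low) / n_bins
    return [(low + (t + 0.5) * width) * scale for t in tokens]


series = [10.0, 12.0, 11.0, 13.0, 9.0]
tokens, scale = tokenize(series)
print(tokens, detokenize(tokens, scale))
```

A forecasting model trained on such tokens predicts the next token ID, and `detokenize` maps that ID back to a value. For in-range values, the round trip loses at most half a bin width per point in scaled units.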

Addition on an elliptic curve.

Elliptic-curve cryptography is a method for doing public-key cryptography that has some advantages over methods that rely on large-number factorization, such as shorter keys at comparable security levels. "25519" elliptic-curve cryptography, named for the large prime 2^255 − 19, is one of the most useful elliptic-curve schemes. Amazon Web Services researchers optimized assembly-level implementations of 25519 cryptography to run on AWS hardware, and they used automated reasoning to prove the implementations' functional correctness. The implementations are included in the open-source cryptographic library AWS LibCrypto.
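One standard use of Curve25519 is the X25519 key-agreement function specified in RFC 7748. It computes scalar multiplication with a Montgomery ladder over the integers modulo 2^255 − 19. The Python sketch below follows the RFC's pseudocode for illustration only. Python big-integer arithmetic is not constant-time, and this code bears no resemblance to the optimized, formally verified assembly the researchers wrote, so it should never be used for real keys.

```python
P = 2**255 - 19   # the field prime that gives 25519 its name
A24 = 121665      # (486662 - 2) / 4, from the curve coefficient A = 486662


def decode_scalar(k: bytes) -> int:
    """Clamp a 32-byte secret as RFC 7748 specifies, then read it little-endian."""
    b = bytearray(k)
    b[0] &= 248
    b[31] &= 127
    b[31] |= 64
    return int.from_bytes(b, "little")


def decode_u(u: bytes) -> int:
    """Read a 32-byte u-coordinate little-endian, ignoring the top bit."""
    b = bytearray(u)
    b[31] &= 127
    return int.from_bytes(b, "little") % P


def x25519(k: bytes, u: bytes) -> bytes:
    """Montgomery-ladder scalar multiplication on the u-coordinate (not constant-time)."""
    k_int = decode_scalar(k)
    x1 = decode_u(u)
    x2, z2, x3, z3 = 1, 0, x1, 1
    swap = 0
    for t in reversed(range(255)):
        k_t = (k_int >> t) & 1
        swap ^= k_t
        if swap:  # conditional swap; a real implementation does this without branching
            x2, x3 = x3, x2
            z2, z3 = z3, z2
        swap = k_t
        a = (x2 + z2) % P
        aa = a * a % P
        b = (x2 - z2) % P
        bb = b * b % P
        e = (aa - bb) % P
        c = (x3 + z3) % P
        d = (x3 - z3) % P
        da = d * a % P
        cb = c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3 = x3, x2
        z2, z3 = z3, z2
    # Convert from projective (x2 : z2) to affine using Fermat inversion z2^(p-2).
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


base_point = bytes([9]) + bytes(31)   # u = 9, little-endian
alice_secret = bytes(range(1, 33))    # fixed demo secrets; real keys must be random
bob_secret = bytes(range(33, 65))
alice_public = x25519(alice_secret, base_point)
bob_public = x25519(bob_secret, base_point)
shared = x25519(alice_secret, bob_public)
print(shared == x25519(bob_secret, alice_public))
```

The final line checks the Diffie-Hellman property: each party combines its own secret with the other's public value and arrives at the same shared secret.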

Can language models trained simply to predict the next word in a sequence of words actually represent words' meanings? Amazon scientists Matthew Trager and Stefano Soatto argue that they not only can but do.

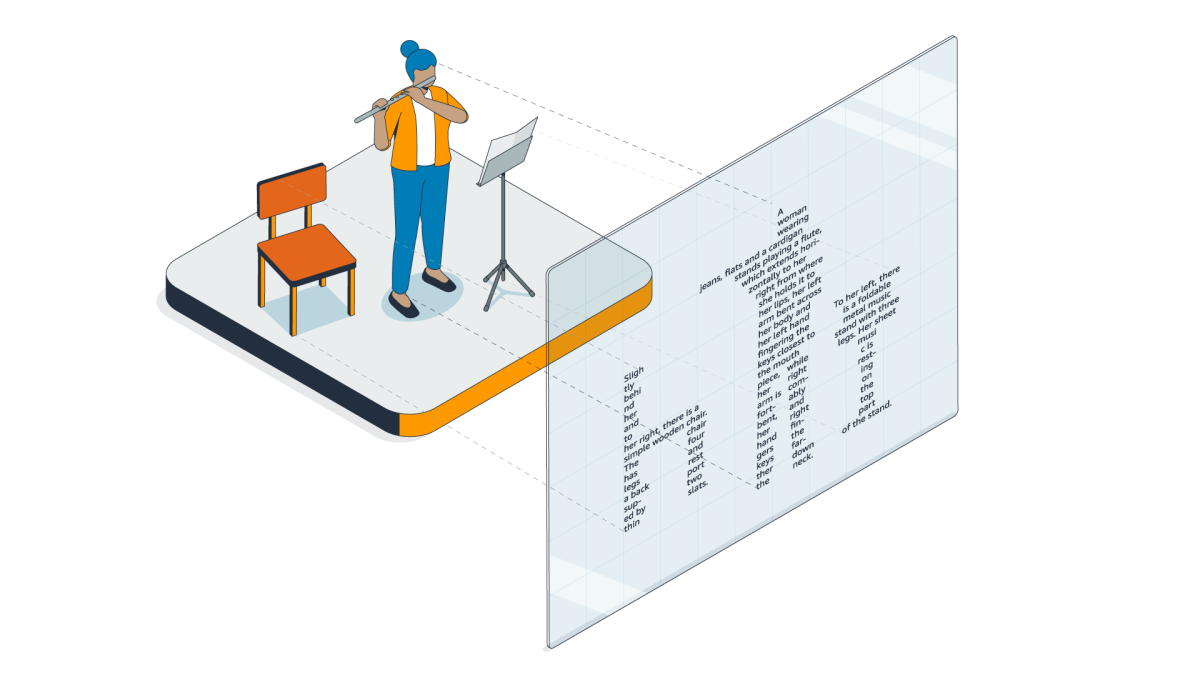

The passage from the multisensory realm to written language could be interpreted as a simple projection, similar to the projection from a three-dimensional scene down to a two-dimensional image.

RefChecker is an approach to hallucination detection in LLMs that decomposes LLM outputs into knowledge triplets with a <subject, predicate, object> structure. This enables a more fine-grained assessment of factual accuracy than earlier methods, which used extracted sentences or phrases as claim summaries. RefChecker also includes a benchmark dataset with 100 examples of text-generation tasks in each of three settings: zero context, noisy context, and accurate context.
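The sketch below shows why triplets allow finer-grained checking than whole sentences. It represents each claim as a triplet and marks the claim as supported only if an equivalent triplet appears in a reference set. The source does not say how RefChecker extracts or compares triplets. The normalized exact-match comparison here is an assumed, deliberately simple stand-in for RefChecker's actual checker, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triplet:
    """A <subject, predicate, object> claim; frozen so it is hashable and can live in a set."""
    subject: str
    predicate: str
    obj: str


def normalize(t: Triplet) -> Triplet:
    """Lowercase and collapse whitespace in each field so trivially different strings match."""
    return Triplet(*(" ".join(part.lower().split()) for part in (t.subject, t.predicate, t.obj)))


def check_claims(claims: list[Triplet], reference: list[Triplet]) -> list[tuple[Triplet, bool]]:
    """Pair each claim with True if an equivalent triplet appears in the reference."""
    ref = {normalize(t) for t in reference}
    return [(claim, normalize(claim) in ref) for claim in claims]


def support_rate(claims: list[Triplet], reference: list[Triplet]) -> float:
    """Fraction of claim triplets supported by the reference (0.0 when there are no claims)."""
    results = check_claims(claims, reference)
    return sum(ok for _, ok in results) / len(results) if results else 0.0


reference = [Triplet("Marie Curie", "won", "Nobel Prize in Physics")]
claims = [
    Triplet("marie curie", "won", "Nobel Prize in  Physics"),  # supported after normalization
    Triplet("Marie Curie", "born in", "Paris"),                # not in the reference
]
print(support_rate(claims, reference))
```

Because each sentence can yield several triplets, a single response can be partly supported and partly hallucinated. A sentence-level check would collapse that into one verdict.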

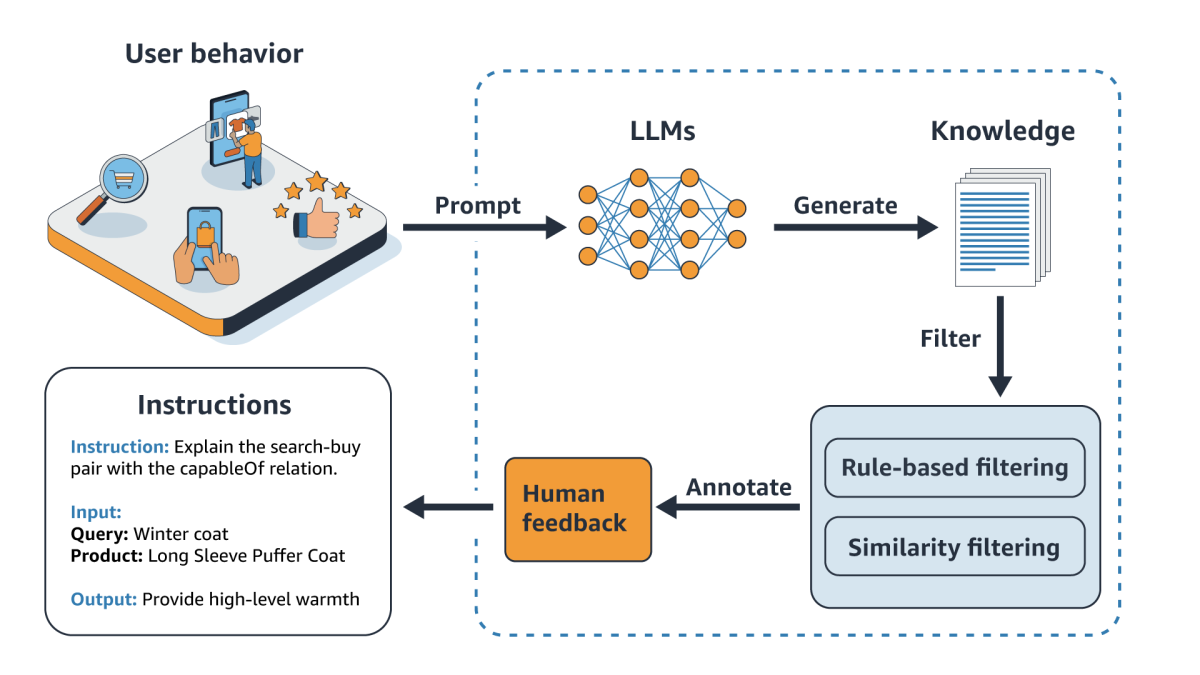

Sometimes, finding the product in Amazon Stores most relevant to a customer query can involve commonsense reasoning — inferring, say, that "slip-resistant shoes" might be relevant to the query "shoes for pregnant women". To help facilitate such reasoning, Amazon researchers used LLMs to extract commonsense relationships from customer interaction data and encoded those relationships in an enormous graph, which can be queried during product retrieval.
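A minimal sketch of how such a graph could be queried at retrieval time follows. Commonsense edges link a query to concepts, and products are ranked by how many of those concepts their attributes cover. The relation name, product names, exact-string query lookup, and overlap-count scoring are all hypothetical simplifications. They are not a description of COSMO's actual graph schema or ranking model.

```python
from collections import defaultdict


class CommonsenseGraph:
    """Tiny directed graph of (head, relation, tail) commonsense edges."""

    def __init__(self) -> None:
        self.edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, head: str, relation: str, tail: str) -> None:
        self.edges[head].append((relation, tail))

    def expand(self, query: str) -> set[str]:
        """Return the concepts directly linked to a query (exact-match lookup only)."""
        return {tail for _, tail in self.edges.get(query, [])}


def retrieve(query: str, graph: CommonsenseGraph, catalog: dict[str, set[str]]) -> list[str]:
    """Rank products by how many graph-inferred concepts their attributes cover; drop non-matches."""
    concepts = graph.expand(query)
    scored = [(len(concepts & attrs), name) for name, attrs in catalog.items()]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [name for _, name in ranked]


graph = CommonsenseGraph()
graph.add("shoes for pregnant women", "requires_property", "slip-resistant")
graph.add("shoes for pregnant women", "requires_property", "comfortable")

catalog = {  # hypothetical products and attributes
    "Clog Z": {"slip-resistant", "comfortable"},
    "Stiletto Y": {"high-heel"},
    "Trail runner X": {"slip-resistant", "lightweight"},
}
print(retrieve("shoes for pregnant women", graph, catalog))  # ['Clog Z', 'Trail runner X']
```

The query never mentions "slip-resistant", yet the graph edge lets retrieval surface products with that attribute, which is the kind of inference the paragraph describes.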

The COSMO framework.

The fight against hallucination in retrieval-augmented-generation (RAG) models starts with a method for accurately assessing it. To that end, Amazon researchers developed an approach that uses an LLM to produce multiple-choice tests for each document in the knowledge corpus relevant to a particular task. The RAG model is then scored according to its performance on the tests.
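Here is a minimal sketch of the scoring step. It assumes each document already has an LLM-generated exam and treats the RAG system as a black-box function that returns the index of the choice it picks. Everything named here (`MCQuestion`, `score_rag`, the answer-function interface) is hypothetical. The source does not say whether scores are aggregated per document or over the whole corpus, so the sketch reports both.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MCQuestion:
    """One multiple-choice question generated from a corpus document."""
    question: str
    choices: list[str]
    answer_index: int


# Hypothetical interface: the RAG system receives a question and its choices
# and returns the index of the choice it selects.
AnswerFn = Callable[[str, list[str]], int]


def score_rag(exams: dict[str, list[MCQuestion]],
              answer: AnswerFn) -> tuple[float, dict[str, float]]:
    """Return overall accuracy and per-document accuracy of `answer` on the generated exams."""
    per_doc: dict[str, float] = {}
    correct_total = question_total = 0
    for doc_id, questions in exams.items():
        correct = sum(answer(q.question, q.choices) == q.answer_index for q in questions)
        per_doc[doc_id] = correct / len(questions) if questions else 0.0
        correct_total += correct
        question_total += len(questions)
    overall = correct_total / question_total if question_total else 0.0
    return overall, per_doc


exams = {
    "doc-25519": [
        MCQuestion("Which prime gives 25519 cryptography its name?", ["2^255 - 19", "2^256 - 1"], 0),
        MCQuestion("How was functional correctness established?", ["Fuzz testing", "Automated reasoning"], 1),
    ],
}
always_first = lambda question, choices: 0  # trivial stand-in for a real RAG system
print(score_rag(exams, always_first))
```

Scoring per document makes it easy to see which parts of the knowledge corpus the RAG system handles poorly. The overall figure gives a single number for comparing systems.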

Trishul Chilimbi, a vice president and distinguished scientist with Amazon Stores’ Foundational AI organization, describes his team's work on Rufus, the new generative-AI-powered shopping assistant for Amazon Stores. Rufus helps Amazon customers make more-informed shopping decisions by answering a wide range of questions in the Amazon Shopping app, from product details and comparisons to recommendations.

The new "virtual try-all" method works with any product, in any personal setting, and enables precise control of the image regions to be modified.

In contrast to earlier virtual-try-on models, which generated images of a human figure wearing different clothes, Amazon's "virtual try-all" model allows users to seamlessly insert any product at any location in any scene. The user starts with a personal scene image and a product and draws a mask in the scene to tell the model where to insert the object. The model then integrates the item into the scene, with realistic angles, lighting, shadows, and so on. The key to the model's success is a secondary U-Net encoder that produces a “hint signal” based on a rough copy-paste collage in which the product image, resized to match the scale of the background scene, has been inserted into the mask.
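The copy-paste collage that feeds the hint-signal encoder is simple enough to sketch. Below, images are grayscale grids (lists of lists of ints). The product is stretched with nearest-neighbor sampling to the mask's bounding box, a stand-in for "resized to match the scale of the background scene," and only masked pixels are overwritten. These representation and resizing choices are assumptions, and the U-Net encoder itself is not shown.

```python
Image = list[list[int]]   # grayscale pixels, row-major
Mask = list[list[bool]]   # True where the user wants the product inserted


def mask_bbox(mask: Mask) -> tuple[int, int, int, int]:
    """Return (top, bottom, left, right) of the mask's bounding box; bottom and right are exclusive."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("mask selects no pixels")
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], rows[-1] + 1, cols[0], cols[-1] + 1


def resize_nearest(img: Image, height: int, width: int) -> Image:
    """Nearest-neighbor resize; ignores aspect ratio, which a real pipeline would likely preserve."""
    src_h, src_w = len(img), len(img[0])
    return [[img[r * src_h // height][c * src_w // width] for c in range(width)]
            for r in range(height)]


def copy_paste_collage(scene: Image, product: Image, mask: Mask) -> Image:
    """Paste the product, stretched to the mask's bounding box, into the masked pixels of a copy of the scene."""
    top, bottom, left, right = mask_bbox(mask)
    patch = resize_nearest(product, bottom - top, right - left)
    collage = [row[:] for row in scene]  # leave the caller's scene untouched
    for r in range(top, bottom):
        for c in range(left, right):
            if mask[r][c]:
                collage[r][c] = patch[r - top][c - left]
    return collage


scene = [[0] * 4 for _ in range(4)]
product = [[5, 6], [7, 8]]
mask = [[r in (1, 2) and c in (1, 2) for c in range(4)] for r in range(4)]
for row in copy_paste_collage(scene, product, mask):
    print(row)
```

The collage is deliberately crude: it gets the product's location and rough scale right but ignores lighting and perspective. Those are the details the model itself is described as supplying.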

From LLMs that transcribe raw prescription data into standardized formats, to decision-tree models that generate accurate price estimates, to the Amazon Pharmacy assistant, which helps customers navigate the complexities of the pharmacy industry, AI is improving every aspect of customers' interactions with Amazon Pharmacy.

Almost 100 years ago, the Hungarian-Australian mathematician Esther Szekeres posed a problem that came to be known as the “happy-ending problem”: what is the minimum number of points in a plane, no three of which are collinear, required to guarantee that n of the points constitute a convex polygon that does not contain any of the other points? The problem had been solved for n > 6 and n < 6, but not for n = 6. Using the tools of automated reasoning, Amazon Scholar Marijn Heule and colleagues finally solved the remaining case.
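To make the n = 6 question concrete, the sketch below uses brute force to check whether a given point set in general position contains six points that form an empty convex hexagon. It only checks a single instance. The automated-reasoning result had to cover every possible point set, which enumerating examples cannot do. The function names are illustrative.

```python
from itertools import combinations

Point = tuple[int, int]


def cross(o: Point, a: Point, b: Point) -> int:
    """Twice the signed area of triangle o-a-b; positive when o->a->b turns counterclockwise."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_general_position(points: list[Point]) -> bool:
    """True if no three points are collinear, the problem's standing assumption."""
    return all(cross(a, b, c) != 0 for a, b, c in combinations(points, 3))


def convex_hull(points: list[Point]) -> list[Point]:
    """Andrew's monotone chain; returns hull vertices in counterclockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def half(seq) -> list[Point]:
        chain: list[Point] = []
        for p in seq:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain

    lower, upper = half(pts), half(reversed(pts))
    return lower[:-1] + upper[:-1]


def strictly_inside(hull: list[Point], q: Point) -> bool:
    """True if q lies strictly inside the counterclockwise convex polygon `hull`."""
    n = len(hull)
    return all(cross(hull[i], hull[(i + 1) % n], q) > 0 for i in range(n))


def has_empty_convex_ngon(points: list[Point], n: int = 6) -> bool:
    """Brute force: does some n-subset form a convex n-gon containing none of the other points?"""
    for subset in combinations(points, n):
        hull = convex_hull(list(subset))
        if len(hull) != n:  # some chosen point lies inside the others' hull: not convex position
            continue
        others = [q for q in points if q not in subset]
        if not any(strictly_inside(hull, q) for q in others):
            return True
    return False


hexagon = [(2, 0), (1, 2), (-1, 2), (-2, 0), (-1, -2), (1, -2)]
print(has_empty_convex_ngon(hexagon), has_empty_convex_ngon(hexagon + [(0, 1)]))
```

Adding the interior point (0, 1) destroys the only empty hexagon. Every other choice of six points includes (0, 1), which then lies inside the other five points' hull, so those six points are not in convex position.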

In a hexagon constructed from points in a prespecified set, if any of the "outer triangles" enclose points in the set, it's possible to draw a new hexagon — still constructed from the same set — that does not enclose them.
