Most modern machine learning systems are classifiers. They take input data and sort it into categories: pictures of particular animals, vocalizations of particular words, words acting as particular parts of speech, and the like.

Ideally, when a system is being trained to distinguish among multiple classes (say, pictures of cats, dogs, and horses), it will see roughly the same number of training examples from each class. Otherwise, it will probably end up biased toward the class that it sees most frequently.

Sometimes, though, imbalances in training data are unavoidable, and somehow, the training process has to correct for them. This spring, at the International Conference on Acoustics, Speech, and Signal Processing, my colleagues and I will present a new technique for correcting such imbalances.

In experiments, we applied our technique to the problem of recognizing particular sounds, such as glass breaking or babies crying. We found that, for a type of neural network commonly used in audio detection, our technique reduced error rates by 15% to 30% over the standard technique for correcting data imbalances.

To get a sense of the problem of data imbalance, suppose that a classifier were trained on examples of three classes, and one of the classes had 20 times as much training data as each of the other two. By simply assigning every input to the overrepresented class, the classifier could achieve about 91% accuracy on the training data (20 of every 22 examples); it would have little incentive to resolve borderline cases in favor of either of the other two classes.
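The arithmetic behind that figure is easy to check. The snippet below is a minimal sketch with hypothetical class counts (they are not taken from our experiments); it computes the training accuracy of a classifier that always predicts the largest class.

```python
def majority_baseline_accuracy(class_counts):
    """Accuracy of a classifier that always predicts the largest class."""
    return max(class_counts) / sum(class_counts)


# Hypothetical counts: one class has 20 times the data of each of the other two.
counts = [2000, 100, 100]
accuracy = majority_baseline_accuracy(counts)  # 2000 / 2200
print(f"{accuracy:.3f}")
```

Any classifier has to beat this do-nothing baseline before its accuracy says anything about the smaller classes.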

The standard way to address data imbalances is simply to overweight the examples in the underrepresented classes: if a particular class has one-fifth as much training data as another, each of its examples should count five times as much.
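As a rough illustration of this overweighting, the sketch below assigns each class a weight equal to the size of the largest class divided by its own size, then uses those weights in an averaged loss. The function names and the toy labels are assumptions made for the example, not part of any particular training library.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by (largest class size) / (its own size)."""
    counts = Counter(labels)
    largest = max(counts.values())
    return {cls: largest / n for cls, n in counts.items()}


def weighted_mean_loss(losses, labels, weights):
    """Average per-example losses, counting each example by its class weight."""
    total = sum(weights[y] * loss for loss, y in zip(losses, labels))
    norm = sum(weights[y] for y in labels)
    return total / norm


# Hypothetical data: "baby" has one-fifth as many examples as "dog".
labels = ["dog"] * 500 + ["baby"] * 100
weights = inverse_frequency_weights(labels)
print(weights)  # each "baby" example counts five times as much as a "dog" example
```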

A more sophisticated approach is to train a neural network to produce “embeddings” that capture differences between data in different classes. An embedding is a representation of a data item as a point in a fixed-dimensional space (a vector). A neural network can be trained to produce embeddings that maximize the distances between points in different classes. The embedding vectors, not the data themselves, then serve as inputs to a classifier.

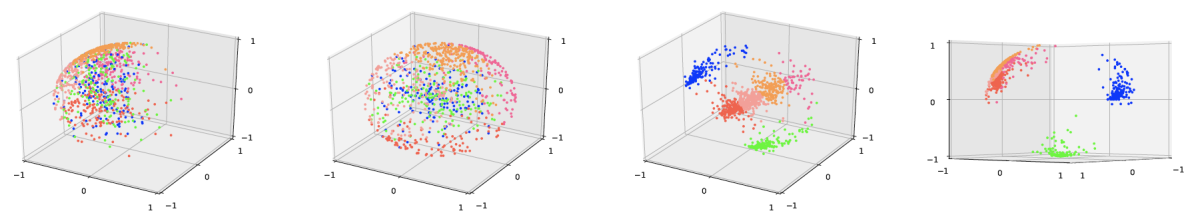

Data imbalance is a problem when learning embeddings, too, so during training, if any data class is larger than any others, it’s split into clusters that are approximately the size of the smallest class. (In the figure above, for instance, the dots on the red end of the color spectrum are all from the same class. As it was four times the size of the other two classes, it was split into four clusters.)
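The bookkeeping for this split can be sketched as follows. The number of clusters per class follows directly from the size ratio described above; how the examples are actually grouped is not specified here, so the equal-size chunking in `split_into_chunks` is only a placeholder assumption standing in for a real clustering step (for example, k-means in the embedding space).

```python
def clusters_per_class(class_sizes):
    """Number of clusters for each class, relative to the smallest class."""
    smallest = min(class_sizes.values())
    return {cls: max(1, round(n / smallest)) for cls, n in class_sizes.items()}


def split_into_chunks(items, k):
    """Split items into k nearly equal contiguous chunks.

    Placeholder only: a real system would group similar items together.
    """
    base, extra = divmod(len(items), k)
    chunks, start = [], 0
    for i in range(k):
        end = start + base + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


# Hypothetical sizes mirroring the figure: one class four times the others.
sizes = {"red": 400, "green": 100, "blue": 100}
k = clusters_per_class(sizes)
red_clusters = split_into_chunks(list(range(sizes["red"])), k["red"])
print(k, [len(c) for c in red_clusters])
```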

Historically, the problem with this approach has been that, during training, every time the neural network embeds a new data item, it has to measure the distance between that item and every other item it has seen. For large data sets, this is impractically time-consuming.

To solve this problem, our algorithm instead keeps a running measurement of the centroid of each data cluster in the embedding space. The centroid is the mean of the cluster's points, which is the point that minimizes their average squared distance (like a center of gravity). With each new embedding, our algorithm measures its distance from the centroids of the clusters, a much more efficient computation than exhaustively measuring pairwise distances.
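To make the efficiency argument concrete, here is a minimal sketch of a running centroid, assuming a plain incremental mean and Euclidean distance. The class and function names are hypothetical, and the sketch ignores the fact that embeddings shift as the network's weights change during training; it is not the exact update rule or loss from the paper.

```python
import math


class RunningCentroid:
    """Incrementally updated mean of the points assigned to one cluster."""

    def __init__(self, dim):
        self.mean = [0.0] * dim
        self.count = 0

    def update(self, point):
        # Incremental mean: no need to store or revisit earlier points.
        self.count += 1
        for i, x in enumerate(point):
            self.mean[i] += (x - self.mean[i]) / self.count


def distances_to_centroids(point, centroids):
    """Distance from one embedding to every cluster centroid."""
    return {name: math.dist(point, c.mean) for name, c in centroids.items()}


# Toy two-dimensional embeddings for two hypothetical clusters.
centroids = {"a": RunningCentroid(2), "b": RunningCentroid(2)}
for p in [(0.0, 0.0), (2.0, 0.0)]:
    centroids["a"].update(p)
centroids["b"].update((10.0, 0.0))

d = distances_to_centroids((1.0, 0.0), centroids)
print(d)
```

Each new embedding costs one distance computation per cluster, regardless of how many items have been seen, whereas the pairwise approach grows with the size of the data set.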

Once the embedding network is trained, we use its outputs as training data for a new classifier, which, unlike the embedding network, applies labels to input data. We found that our system works best if we allow the process of training the classifier to adjust the internal settings of the embedding network, too.

We tested our system on four types of sounds from an industry-standard data set: dogs’ barks, babies’ cries, gunshots, and background sounds. We also experimented with two different network architectures for doing the embeddings: a small, efficient long short-term memory (LSTM) network and a larger, slower, but more accurate convolutional neural net (CNN). As a baseline, we used the same architectures but adjusted for data imbalances by overweighting underrepresented samples, rather than maximizing distances in the embedding space.

Across the board, our embeddings improved the performance of the LSTM model, reducing the error rate by 15% to 30% on particular sounds and by 22% overall. That is an encouraging result, because for many applications, smaller networks will be preferable because of their greater efficiency.

With the CNN model, the results were more varied. When the ratio of the sizes of the data classes was 6:1:2:16 (dog : baby : gunshot : background), the baseline CNN model slightly outperformed our system on the most underrepresented class (babies’ cries), with a relative error reduction of 6%. But our system’s advantage on the other two target classes (dogs’ barks and gunshots) was great enough that it still performed better overall.

When the ratio of negative (background) to positive data was larger, however (2:2:1:26), our system outperformed the baseline CNN model across the board, with an average relative error reduction of 19%. This suggests that the greater the data imbalance, the greater the advantage our system enjoys.
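For readers unfamiliar with the metric, relative error reduction is the drop in error rate expressed as a fraction of the baseline's error rate. The sketch below shows the calculation with made-up error rates chosen only to illustrate the formula; they are not the error rates measured in our experiments.

```python
def relative_error_reduction(baseline_error, new_error):
    """Fraction by which new_error is lower than baseline_error."""
    return (baseline_error - new_error) / baseline_error


# Hypothetical error rates: 10% for a baseline, 8.1% for an improved system.
reduction = relative_error_reduction(0.10, 0.081)
print(f"{reduction:.0%}")
```

Note that a 19% relative reduction in this example corresponds to an absolute drop of only 1.9 percentage points.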

Acknowledgments: Vipul Arora, Chao Wang